This one Reddit post sums up a pattern almost every project manager has seen: the drop-off between how carefully we plan and how casually we measure what actually happens.

We build airtight plans, forecast every milestone, and call the kickoff a success. Then execution begins, and tracking slips into the background, buried in spreadsheets or forgotten in updates. But those gaps between expectation and reality are where the most useful insights live.

In this blog post, we’ll show you exactly how to track planned vs actual effort and spot where estimates go off. You’ll also see how ClickUp helps you capture real effort and turn insights into smarter planning.

Let’s get started! 💪

- What Is Planned vs. Actual Effort?

- Why Tracking Planned vs. Actual Effort Improves Project Delivery

- What Causes Differences Between Planned and Actual Effort

- Metrics to Track When Comparing Planned vs. Actual Effort

- How to Track Planned vs. Actual Effort: Step-by-Step

- Real-World Examples of Tracking Planned vs. Actual Effort

- Best Practices for Accurate Effort Tracking and Variance Reduction

- Common Mistakes That Lead to Inaccurate Planned vs. Actual Effort Tracking

- Frequently Asked Questions

What Is Planned vs. Actual Effort?

Planned vs. actual effort (also known as variance analysis) is a performance indicator that measures the alignment between what was expected to be done and what happened during execution.

It looks at two key components:

1. Planned effort

Also known as ‘baseline schedule,’ planned effort represents the estimated amount of time and resources a task, sprint, or project is expected to take.

It’s established during the planning phase based on factors like complexity, scope, past project data, and resource availability. This planned value acts as the reference point for evaluating performance and predicting workloads.

2. Actual effort

Actual effort reflects the true amount of time and effort spent completing a task or project. It captures everything from start to finish, including unplanned or additional work that emerged along the way.

This reflects how the work played out in practice, beyond the assumptions made during planning.

Understanding effort variance

The difference between planned and actual effort is known as effort variance. You calculate it as a percentage or absolute difference to see how far off your estimates were.

A small variance indicates accurate estimation and stable processes, while a large one may point to underestimation, scope creep, or inefficiencies.

Effort variance directly shapes:

- Capacity planning: When actual effort consistently overshoots plans, your team’s usable capacity shrinks, and they start over-committing, burning out, or never hitting forecasted delivery

- Timeline reliability: Missed estimates cascade into delayed milestones. If you’re always planning for 40 but delivering 50, you miss budget hours and delivery promises

- Team morale: Teams that constantly miss estimates feel pressure and second-guess their planning. Clear variance tracking gives honest feedback and helps teams improve estimation confidence

🧠 Fun Fact: Archaeologists found thousands of ostraca (pottery shards used like sticky notes) in the village of Deir el-Medina that recorded everything from daily attendance and sick leaves to material quotas and task assignments. Workers were organized into structured teams called gangs and phyles, each with its own leadership hierarchy.

The difference between planned estimates and actual logged effort

Here’s a direct comparison to make this crystal clear:

| Aspect | Planned effort | Actual effort |
| --- | --- | --- |
| Purpose | Guiding decisions, allocating capacity, forecasting delivery | Measuring what really happened |
| Source of truth | Project plans, estimation tools, and sprint planning sessions | Timesheets, task updates, and software tracking systems |
| Level of certainty | Predictive and subject to uncertainty | Definitive and evidence-based |
| Adjustability | Can be revised during planning or at key checkpoints | Fixed once the work is done (historical record) |
| Role in performance analysis | Serves as the benchmark for evaluating variance | Serves as the comparison point for validating estimates |

🔍 Did You Know? The human brain uses ‘optimism bias,’ which makes us believe we’re faster, smarter, or more efficient than we are.

Why Tracking Planned vs. Actual Effort Improves Project Delivery

Here’s how effort tracking elevates delivery performance:

- Enhances accuracy in future planning: Gives managers concrete data to refine estimation models. Over time, these lead to more reliable predictions, realistic delivery schedules, and balanced workloads

- Enables proactive issue detection: Acts as an early warning signal, highlighting schedule deviations or unrealistic estimates before they spiral into project overruns

- Optimizes resource utilization: Reveals whether team members are overburdened or underutilized

- Strengthens accountability and transparency: Reinforces ownership and fosters mutual trust within the team by giving them visibility into a project’s progress and challenges

- Enables data-driven decision making: Provides quantifiable metrics that support informed decisions. Whether requesting additional budget, resizing a sprint, or revising deadlines, leaders can justify changes using empirical evidence

- Builds stakeholder confidence: Demonstrates control over both planning and execution. Clients and executives gain trust when they see consistent measurement and rational explanations

🔍 Did You Know? Before mechanical clocks existed, medieval monks used candle clocks: candles with marks to estimate work durations.

What Causes Differences Between Planned and Actual Effort

Understanding why planned effort and actual effort diverge is crucial because, unless you diagnose the root causes, you’ll keep repeating the same estimation errors. ➿

1. Optimistic estimation (Planning fallacy)

Teams often underestimate complexity or risk because they plan from a best-case mindset rather than reality. Psychology research calls this the planning fallacy, which is a common cognitive bias where planners underweight past delays.

2. Scope changes

Even small tweaks to requirements during a phase silently add work, stretching effort beyond initial estimates. Scope creep includes all the additional coordination, rework, and testing that come with changes.

3. Resource availability and interruptions

Team members get pulled into unplanned tasks, meetings, support, or emergencies. And every disruption adds unplanned hours.

📖 Also Read: Time Tracking Projects With Time Doctor

4. Hidden complexity

Unknowns (integration issues, environment setup, dependency delays) often surface only once work begins, increasing actual effort. Plus, ambiguous, incomplete, or frequently changing requirements make it difficult to reliably forecast effort.

5. Unreliable estimation techniques

Effort is often estimated using rough guesses, incomplete analogies, or inconsistent methods, which introduces systematic error before work even starts.

Teams with poor project time management and no standardized estimation process tend to consistently under- or overestimate.

6. Limited estimator experience

There’s a clear gap if your estimates come from people with limited domain, technical, or project experience. Lack of familiarity with the team’s actual throughput, tooling, or dependencies also skews estimates away from reality.

🧠 Fun Fact: Research shows that teams improve estimation accuracy when more people are involved, but accuracy drops again if too many people participate. That was the basis for Planning Poker in Agile.

Metrics to Track When Comparing Planned vs. Actual Effort

The real value comes from tracking metrics that explain why effort drifted, how it affected delivery, and what it changed downstream, across schedules, costs, resources, and business outcomes.

Let’s break down the key metrics to track. ⚒️

Core effort metrics

These performance metrics compare the effort you planned to spend with the effort you actually spent. They form the baseline for all variance analysis.

1. Effort variance (%)

Effort variance is the percentage difference between actual effort and the original estimate. This makes it easy to compare accuracy across tasks, teams, or projects of different sizes.

🧮 Formula: Effort variance (%) = (Actual effort − Planned effort) ÷ Planned effort × 100

👀 How to interpret:

- Positive value: More effort than planned (under-estimation)

- Negative value: Less effort than planned (over-estimation)

- Near zero: Accurate estimation
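Here’s a minimal Python sketch of the formula above. The function names and the 5% tolerance used to decide what counts as ‘near zero’ are illustrative assumptions, so pick a threshold that fits your own team.

```python
def effort_variance_pct(planned: float, actual: float) -> float:
    """Return effort variance as a percentage of planned effort."""
    if planned <= 0:
        raise ValueError("planned effort must be positive")
    return (actual - planned) / planned * 100


def interpret(variance_pct: float, tolerance: float = 5.0) -> str:
    """Label a variance. The 5% tolerance band is an assumed example value."""
    if abs(variance_pct) <= tolerance:
        return "accurate"
    return "under-estimated" if variance_pct > 0 else "over-estimated"


# Planned 40 hours, logged 50 hours
variance = effort_variance_pct(40, 50)
print(variance)             # 25.0
print(interpret(variance))  # under-estimated
```

Because the result is a percentage, you can compare a 4-hour task and a 400-hour project on the same scale.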

📮 ClickUp Insight: While 40% of employees spend less than an hour weekly on invisible tasks at work, a shocking 15% are losing 5+ hours a week, equivalent to 2.5 days a month!

This seemingly insignificant but invisible time sink could be slowly eating away at your productivity. ⏱️

Put ClickUp’s Time Tracking and AI assistant to work and find out precisely where those unseen hours are disappearing. Pinpoint inefficiencies, let AI automate repetitive tasks, and win back critical time!

2. Planned hours vs. actual time spent

This metric compares estimated hours with logged time for tasks, sprints, or projects. It quickly exposes gaps caused by interruptions, rework, meetings, or unclear scope.

Here’s how to calculate it:

- Planned hours: Sum of estimated effort

- Actual time: Sum of time tracked or logged

Then, compare the two over time or per work type.

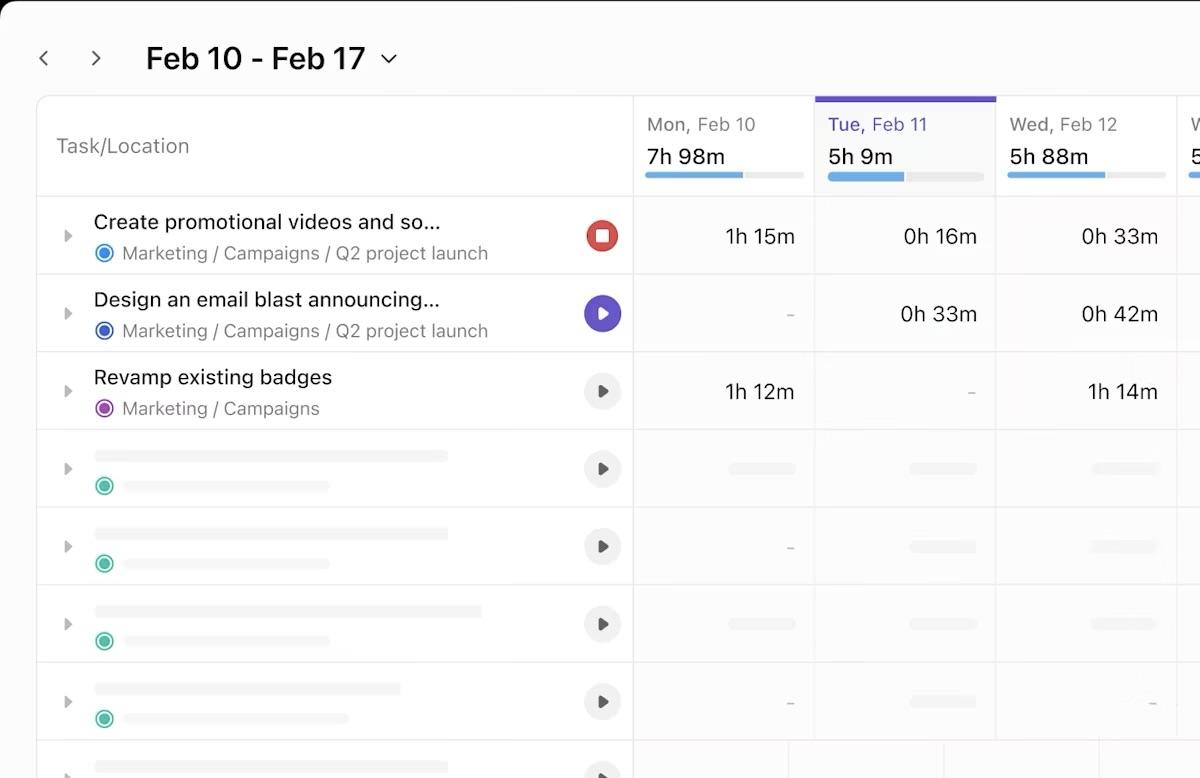

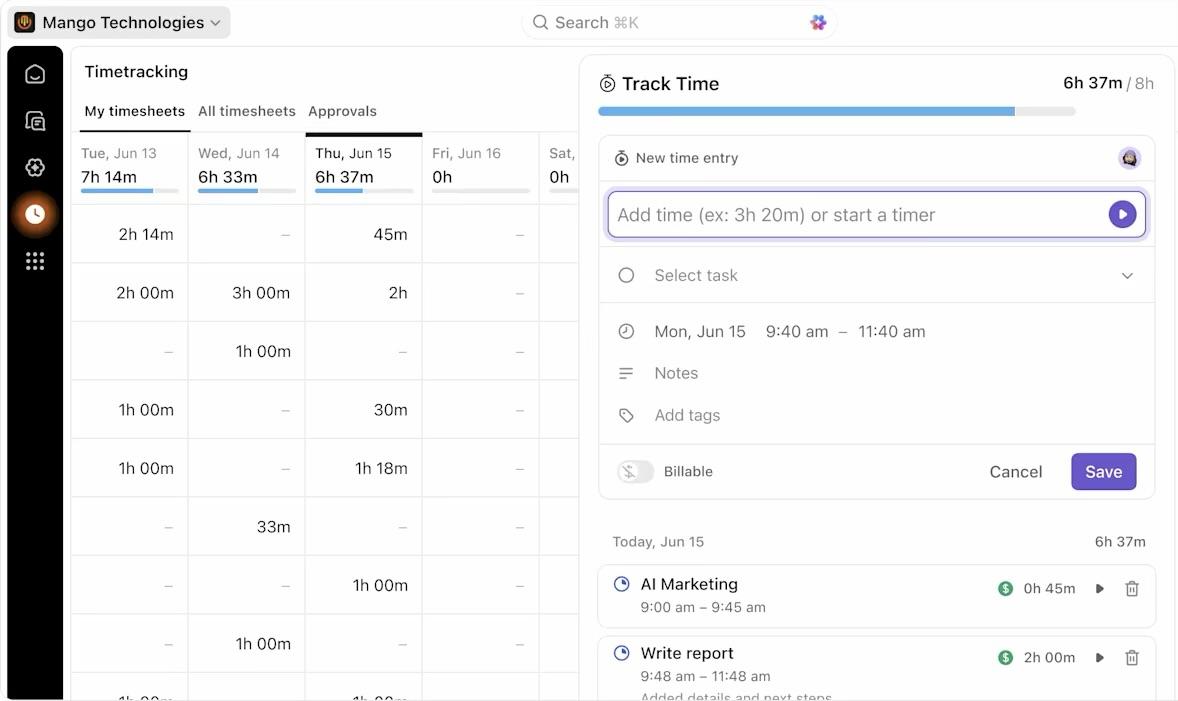

💡 Pro Tip: Use ClickUp Timesheets to review planned hours vs. actual time after hours are submitted and locked. When team members submit their timesheets, it seals those entries and routes them for approval, so your comparisons are based on finalized, reviewable data.

Schedule and time metrics

These metrics help you connect effort differences to project timeline performance, so you can see how much work was done and how it impacted scheduling and delivery.

3. Schedule variance (SV)

Schedule Variance tells you whether the work you’ve completed so far is ahead of or behind where you planned to be at a specific point in time. It’s part of Earned Value Management (EVM), a way to quantify progress against a baseline.

🧮 Formula: SV = Earned Value (EV) − Planned Value (PV)

- Planned Value (PV) is the amount of work you expected to have done by now, expressed in value terms (e.g., hours or budgeted cost)

- Earned Value (EV) is the amount of work completed up to this point, again in value terms, based on your original plan

👀 How to interpret:

- A positive SV means more work is done than planned (you’re ahead of schedule)

- A negative SV means less work was done than planned (you’re behind)

- Zero SV means you’re exactly on schedule

4. Schedule performance index (SPI)

SPI takes the idea of SV and turns it into a ratio. Instead of an absolute difference between earned and planned value, it shows how efficiently you’re progressing relative to the plan.

🧮 Formula: SPI = Earned Value (EV) ÷ Planned Value (PV)

👀 How to interpret:

- SPI > 1 means you’re completing work faster than planned (ahead of schedule)

- SPI = 1 means you’re on schedule

- SPI < 1 means you’re completing work slower than planned (behind schedule)
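To see SV and SPI side by side, here’s a small Python sketch. The checkpoint numbers are hypothetical, and both values are expressed in hours for simplicity.

```python
def schedule_variance(ev: float, pv: float) -> float:
    """SV = EV - PV. Negative means behind schedule."""
    return ev - pv


def schedule_performance_index(ev: float, pv: float) -> float:
    """SPI = EV / PV. Below 1 means slower than planned."""
    if pv <= 0:
        raise ValueError("planned value must be positive")
    return ev / pv


# Hypothetical checkpoint: 100 hours of work planned by now,
# 80 hours' worth of planned work actually completed.
pv, ev = 100.0, 80.0
print(schedule_variance(ev, pv))           # -20.0
print(schedule_performance_index(ev, pv))  # 0.8
```

Both numbers describe the same gap: SV tells you the size of it, while SPI tells you the rate at which you’re falling behind.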

5. Cycle time

Cycle time measures the actual elapsed time it takes for a work item to go from start to finish once work begins. It shows how fast work moves through your workflow. Shorter cycle times usually mean fewer bottlenecks and more predictable delivery.

Here’s how it works:

- Start the clock when a task moves into ‘in progress’ or ‘active’ status

- Stop when it reaches ‘done’ or ‘complete’

- The time between those points is the cycle time
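As a rough sketch, the three steps above come down to subtracting two timestamps. The dates below are made up, and this version measures calendar time, so nights and weekends count toward the total.

```python
from datetime import datetime, timedelta


def cycle_time(started: datetime, finished: datetime) -> timedelta:
    """Elapsed calendar time between 'in progress' and 'done'."""
    if finished < started:
        raise ValueError("finish time is earlier than start time")
    return finished - started


# Hypothetical status-change timestamps for one task
started = datetime(2024, 3, 4, 9, 0)     # moved to 'in progress'
finished = datetime(2024, 3, 6, 15, 30)  # moved to 'done'
print(cycle_time(started, finished))     # 2 days, 6:30:00
```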

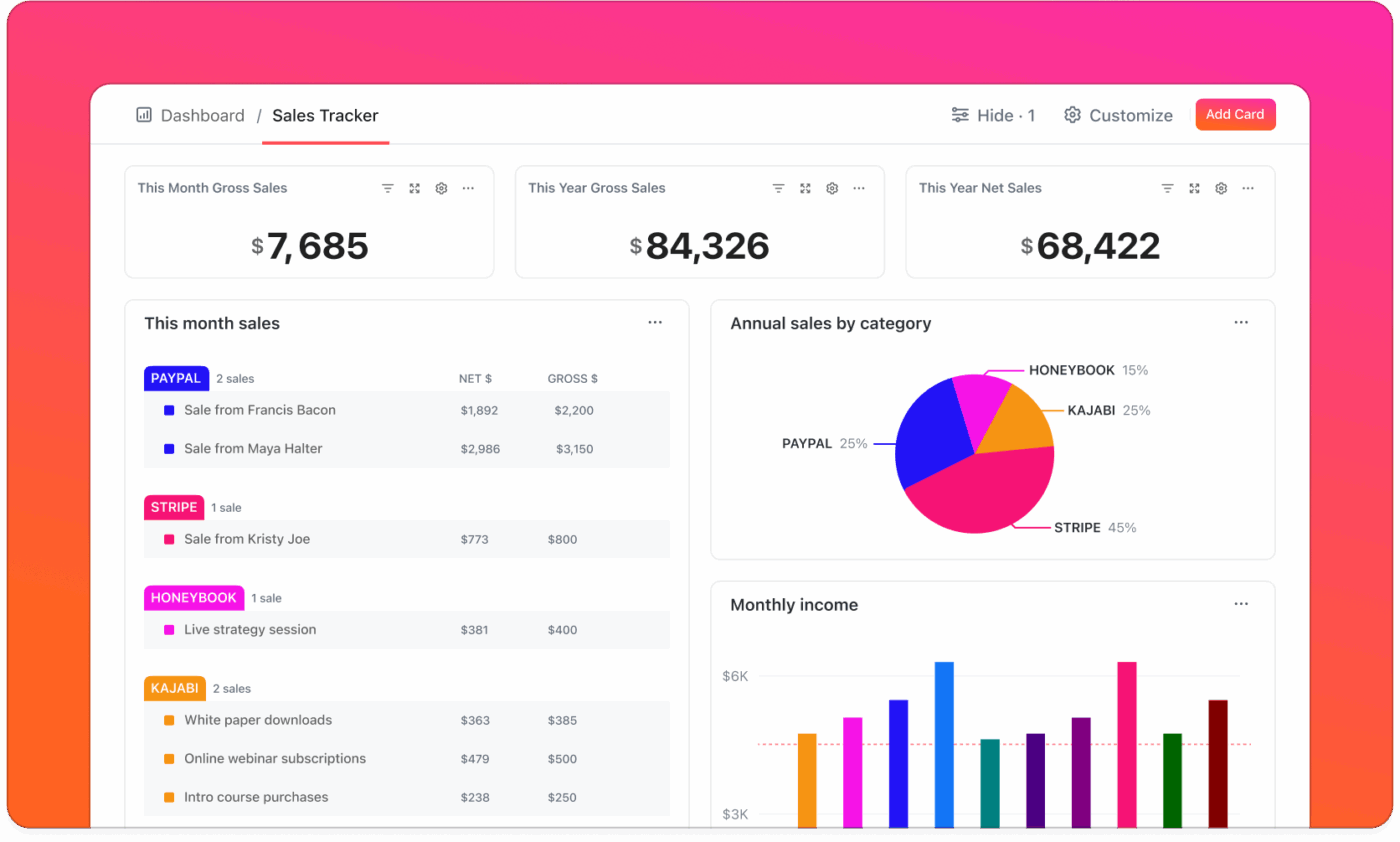

🚀 ClickUp Advantage: Track cycle time alongside effort, progress, and delivery metrics with ClickUp Dashboards.

You can combine multiple views into a single dashboard and see how effort variance impacts flow in real time. This helps leaders spot stalled work, emerging bottlenecks, or slowdowns before they turn into missed commitments.

6. Throughput or velocity

Throughput and velocity both measure output, but they come from slightly different perspectives:

- Throughput counts the number of work items completed in a given period (e.g., tasks per week), making it a simple measure of delivery rate

- Velocity is an Agile concept that sums up how much work (often in story points or defined units) a team completes in a sprint or iteration. It gives a sense of capacity over time
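The difference is easiest to see on the same data. In this sketch, the task IDs and story points are hypothetical.

```python
# Hypothetical items completed in one sprint: (task id, story points)
completed = [("T-1", 3), ("T-2", 5), ("T-3", 8), ("T-4", 2)]


def throughput(items) -> int:
    """Count of work items completed in the period."""
    return len(items)


def velocity(items) -> int:
    """Sum of story points completed in the period."""
    return sum(points for _, points in items)


print(throughput(completed))  # 4
print(velocity(completed))    # 18
```

Throughput treats every item as equal, while velocity weights each item by its estimated size.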


Cost and budget metrics

When effort exceeds expectations, costs usually follow. These metrics tie effort variance to financial impact.

1. Cost variance (CV)

Cost Variance measures whether the value of completed work justifies the money spent on it.

🧮 Formula: CV = Earned Value (EV) – Actual Cost (AC)

👀 How to interpret:

- Positive CV: Under budget

- Negative CV: Over budget

2. Cost performance index (CPI)

This index measures how efficiently money is being spent relative to the value delivered. CPI is useful for forecasting the final project cost if current trends continue.

🧮 Formula: CPI = Earned Value (EV) ÷ Actual Cost (AC)

👀 How to interpret:

- CPI > 1: Cost efficient

- CPI = 1: On budget

- CPI < 1: Overspending
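Here’s a short Python sketch of CV and CPI with hypothetical figures. The forecast at the end uses the common EVM formula, Estimate at Completion = Budget at Completion ÷ CPI. That formula isn’t covered above, so treat it as one standard way to project final cost if current trends continue.

```python
def cost_variance(ev: float, ac: float) -> float:
    """CV = EV - AC. Negative means over budget."""
    return ev - ac


def cost_performance_index(ev: float, ac: float) -> float:
    """CPI = EV / AC. Below 1 means overspending."""
    if ac <= 0:
        raise ValueError("actual cost must be positive")
    return ev / ac


def estimate_at_completion(bac: float, cpi: float) -> float:
    """Common EVM forecast: total budget divided by CPI."""
    if cpi <= 0:
        raise ValueError("CPI must be positive")
    return bac / cpi


# Hypothetical figures, all in the same currency
ev, ac, bac = 90.0, 120.0, 300.0
cpi = cost_performance_index(ev, ac)
print(cost_variance(ev, ac))             # -30.0
print(cpi)                               # 0.75
print(estimate_at_completion(bac, cpi))  # 400.0
```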

🧠 Fun Fact: The structured, repeatable process of conducting a ‘lessons learned’ session took shape in the 1980s, inspired heavily by the U.S. Army’s formal After Action Review (AAR) model.

Resource and efficiency metrics

These metrics explain whether effort variance stems from workload imbalance or capacity issues.

1. Resource utilization rate

This metric shows how much of the available capacity is actually being used.

🧮 Formula: Utilization (%) = Actual hours worked ÷ Available capacity × 100

👀 How to interpret:

- Very high: Burnout risk

- Very low: Underuse or planning inefficiency
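As a quick sketch, utilization can be computed per person like this. The names and hours are hypothetical, and no cut-offs for ‘very high’ or ‘very low’ are hard-coded because those depend on your team.

```python
def utilization_pct(actual_hours: float, available_hours: float) -> float:
    """Share of available capacity that was actually worked, as a percentage."""
    if available_hours <= 0:
        raise ValueError("available capacity must be positive")
    return actual_hours / available_hours * 100


# Hypothetical week: (person, hours worked, hours available)
week = [("Ana", 30, 40), ("Ben", 44, 40), ("Chen", 20, 40)]

for person, worked, available in week:
    print(f"{person}: {utilization_pct(worked, available):.0f}%")
```

A value above 100% means someone logged more hours than their stated capacity, which is worth a conversation before it becomes a pattern.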

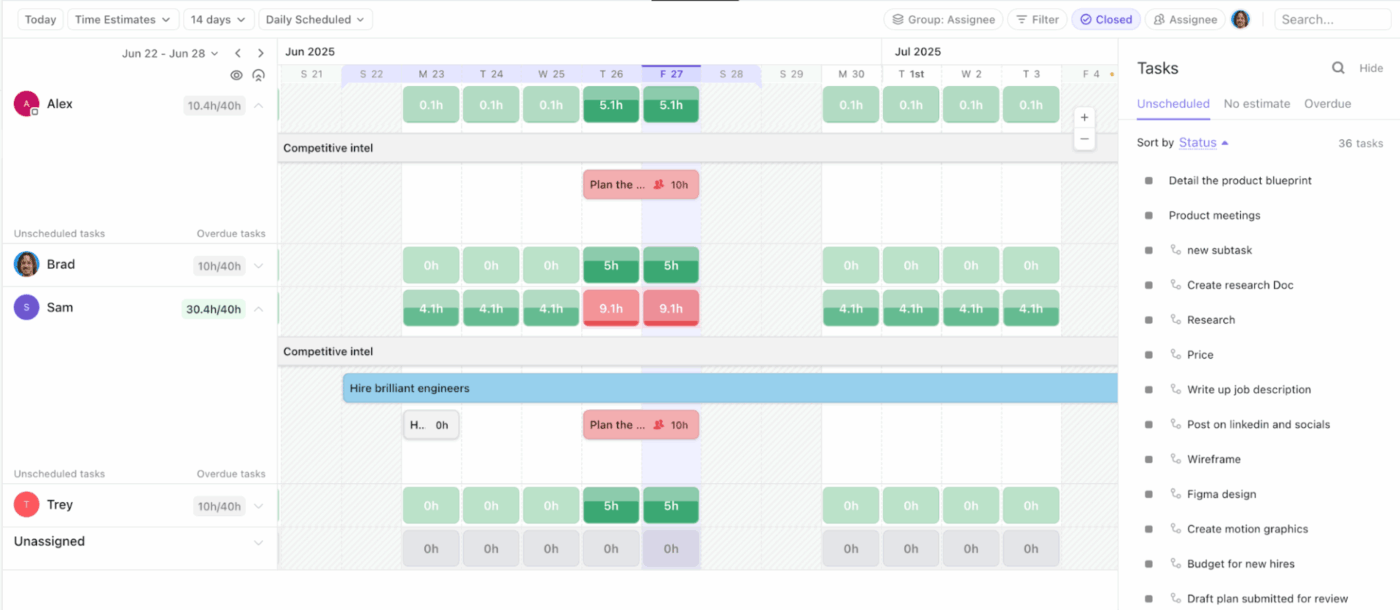

💡 Pro Tip: Add ClickUp Workload View to your workspace and visualize assigned effort against available capacity for individuals or teams. Instead of relying on utilization percentages alone, managers can visually see when people are overcommitted or unevenly loaded.

How to Track Planned vs. Actual Effort: Step-by-Step

Now that you understand why effort tracking matters, what causes gaps, and which metrics to monitor, it’s time to put it into action.

This section breaks down a practical, step-by-step approach to tracking planned versus actual effort in real workflows.

Along the way, you’ll also see how ClickUp supports you as the world’s first Converged AI Workspace. Its AI capabilities are built directly into your work so your tasks, documents, chats, and workflows stay connected, helping you avoid context switching. 🤩

Step #1: Define planned effort clearly

Before execution starts, you need an explicit answer to one question: How much effort do we believe this work will take?

Planned effort is a documented commitment, expressed in time, capacity, or relative effort, that can later be compared to reality. So without a defined estimate, overruns look like surprises instead of signals.

Planned effort should be:

- Set before work starts

- Defined at a task or work-item level

- Consistent across similar types of work

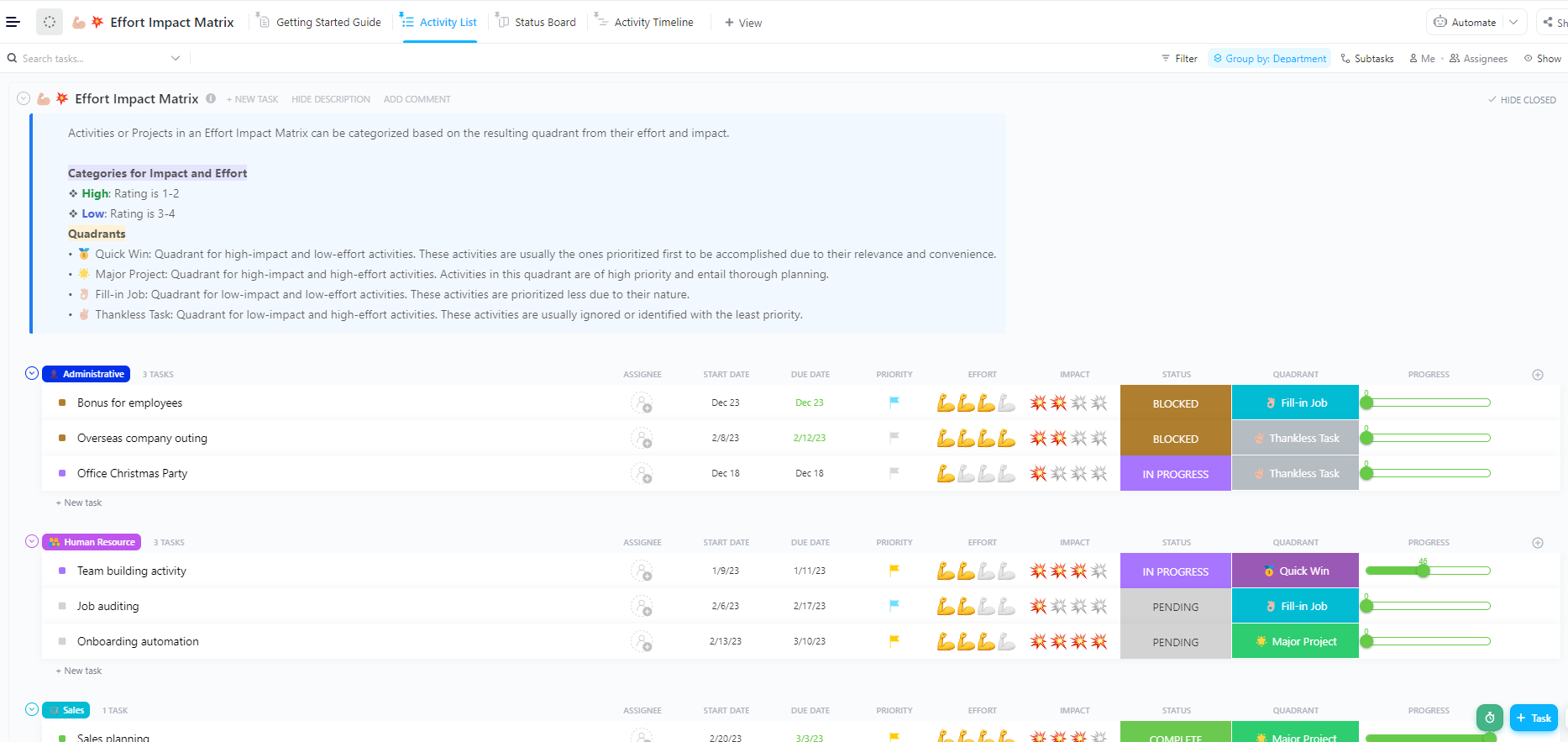

Before assigning time or points, teams often need clarity on which work deserves tighter estimation. The ClickUp Effort Impact Matrix Template helps you visually map upcoming ClickUp Tasks by expected effort and delivery impact.

It helps you identify high-impact, high-effort work that needs a deeper breakdown and tighter estimates, and avoid over-investing estimation time in low-impact or exploratory tasks.

Use it to:

- Categorize tasks for easy identification with ClickUp Custom Fields like Department, Effort, Impact, Quadrant, and Progress

- Switch between different ClickUp Views, such as the Activity List, Status Board, and Activity Timeline

- Mark ClickUp Task Statuses, such as Blocked, Complete, In Progress, and Pending, to keep track of task progress

- Surface delivery-critical work that should be planned earlier or buffered

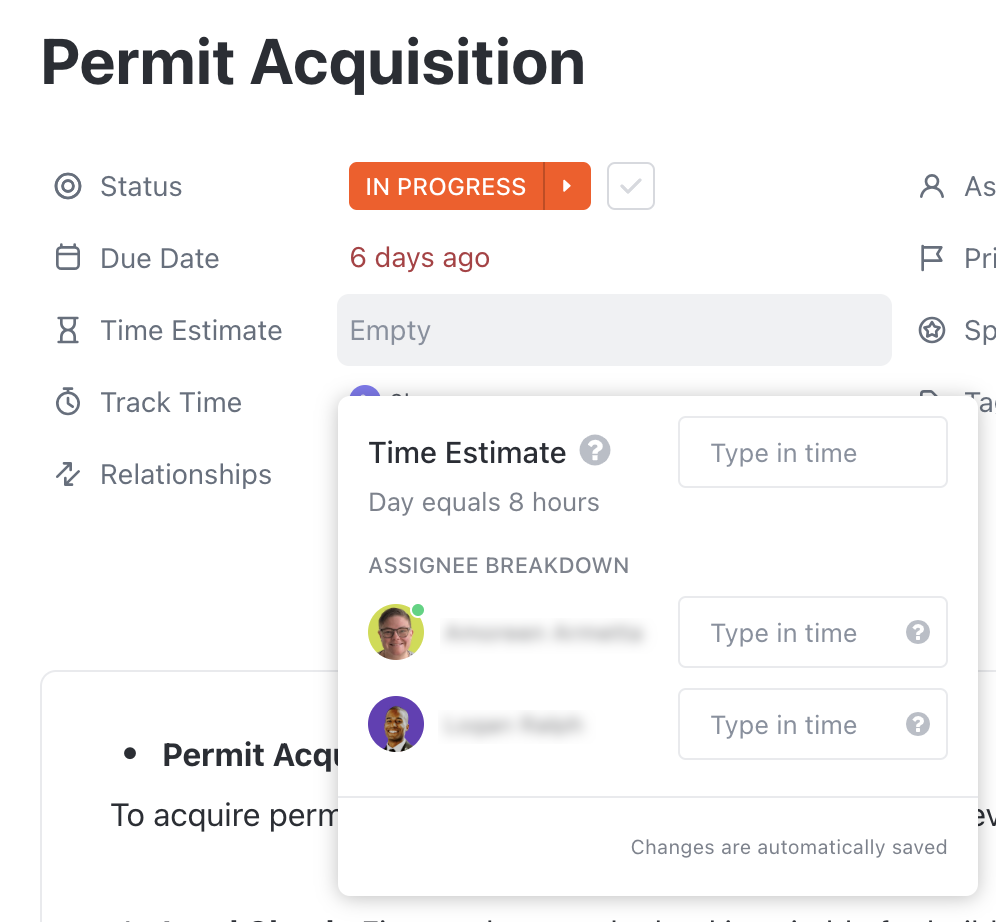

🚀 ClickUp Advantage: Document how long a task is expected to take before work begins with ClickUp Time Estimates. You can add a time estimate directly on each ClickUp Task, define how many working hours make up a day, and choose how estimates are displayed.

Step #2: Treat the initial plan as a baseline

Once work begins, the planned effort must remain intact. Changing estimates mid-execution hides variance and removes accountability from the planning process.

Of course, plans can change, but changes should be tracked separately, not overwritten.
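If you keep estimates in your own scripts or spreadsheets, the same principle can be sketched in Python: freeze the baseline and record every change next to it. The class and field names here are hypothetical and don’t reflect how any particular tool stores data.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Baseline:
    """Original estimate. Frozen so it can't be overwritten later."""
    task_id: str
    planned_hours: float


@dataclass
class TrackedTask:
    baseline: Baseline
    # Each change is stored as (reason, delta in hours)
    changes: list = field(default_factory=list)

    def add_change(self, reason: str, delta_hours: float) -> None:
        """Record a re-estimate without touching the baseline."""
        self.changes.append((reason, delta_hours))

    @property
    def current_estimate(self) -> float:
        """Baseline plus all recorded changes."""
        return self.baseline.planned_hours + sum(d for _, d in self.changes)


task = TrackedTask(Baseline("T-1", 10.0))
task.add_change("extra QA cycle", 4.0)
print(task.baseline.planned_hours)  # 10.0
print(task.current_estimate)        # 14.0
```

Keeping both numbers lets you report variance against the original plan and against the latest agreed plan, instead of losing one of them.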

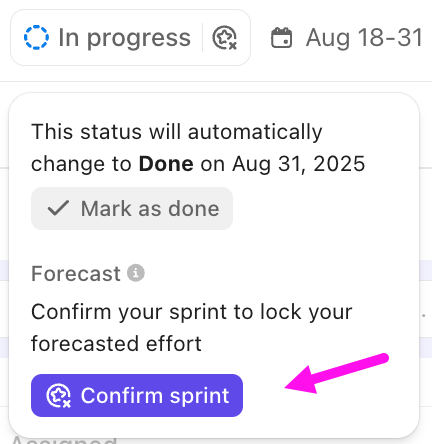

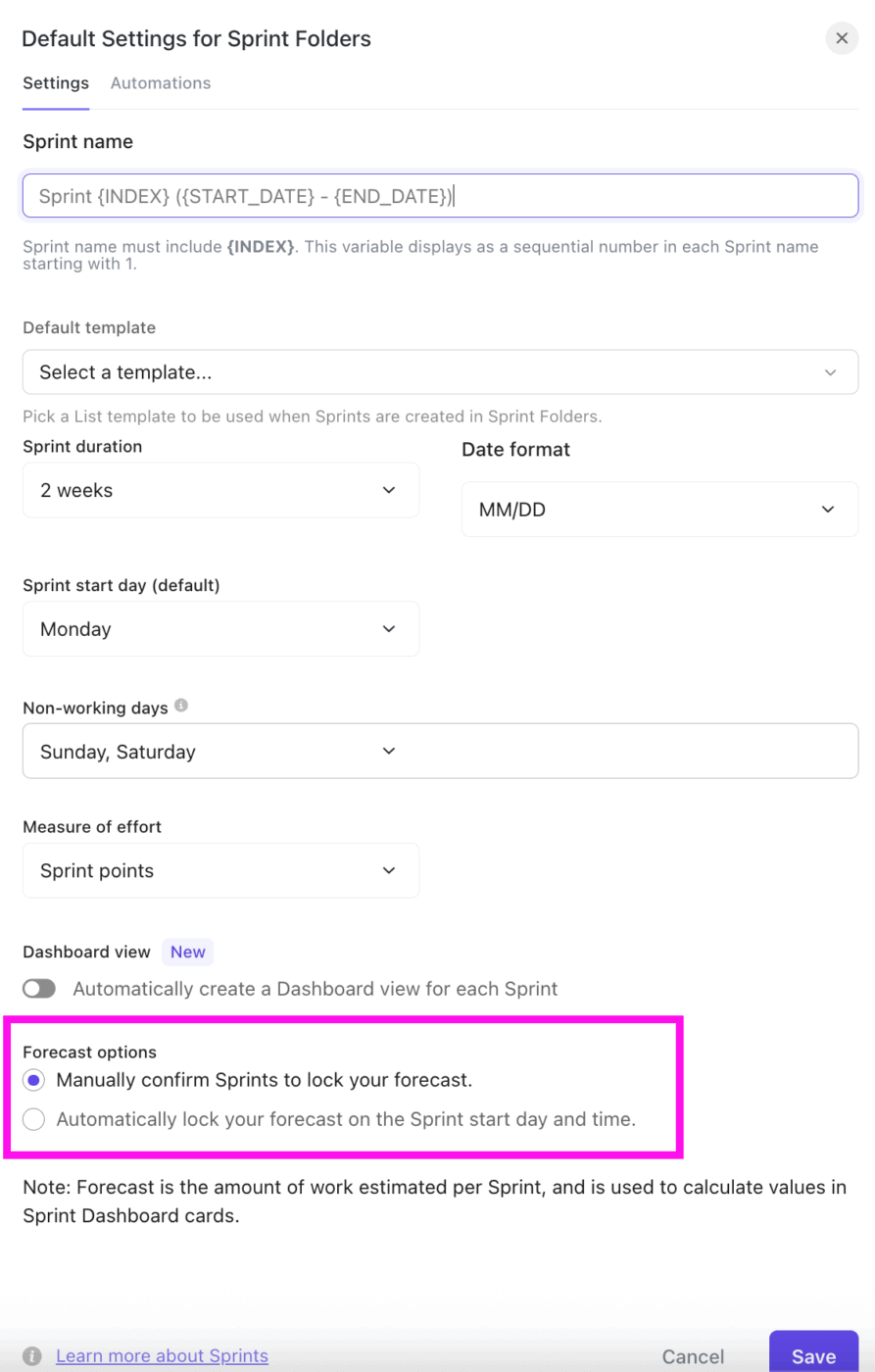

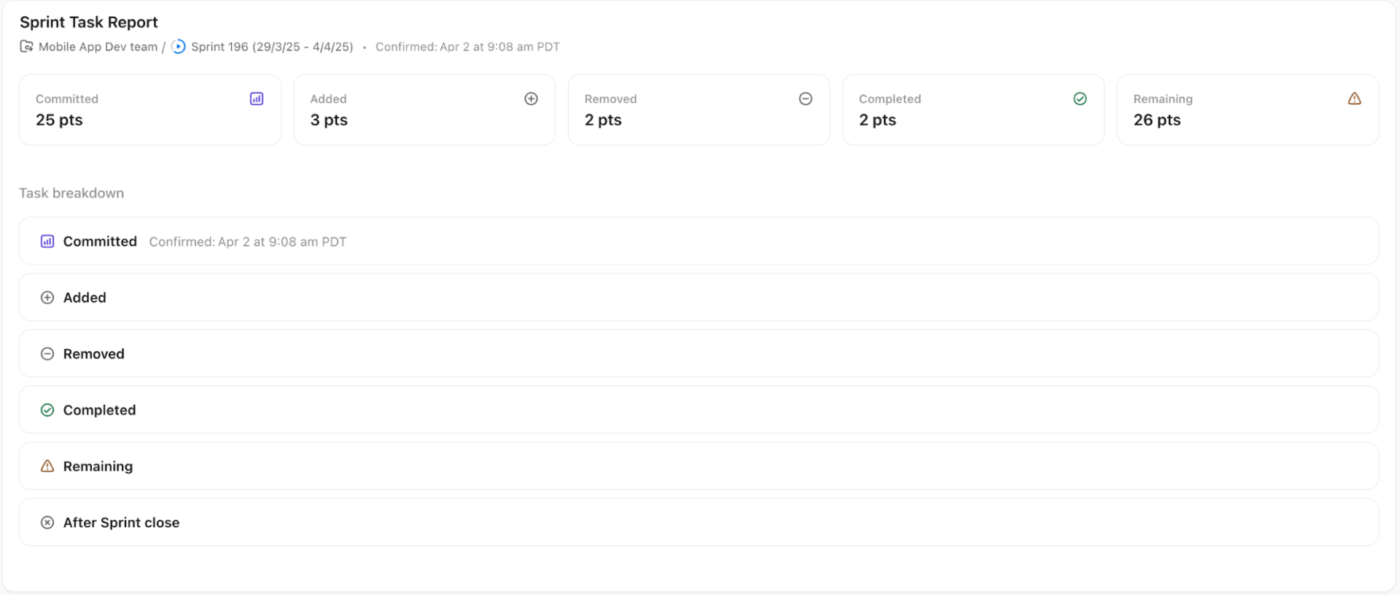

For teams running Agile or even semi-structured delivery cycles, ClickUp Sprints are designed to lock planned effort at the right moment. At the start of a sprint, effort is defined using Sprint Points or Time Estimates.

ClickUp then lets you lock this forecast in either of two ways:

- Manually confirm a sprint: After sprint planning is complete, you can confirm the sprint from the Sprint header and lock the total forecasted effort. Any new points or estimates added afterward do not change the original forecast, preserving your baseline

- Automatically lock a forecast: If your entire team consistently finishes planning before the sprint starts, you can configure ClickUp to lock the forecast at the sprint start date and time. The system captures the total Sprint Points or Time Estimates at that moment and uses them as the fixed baseline

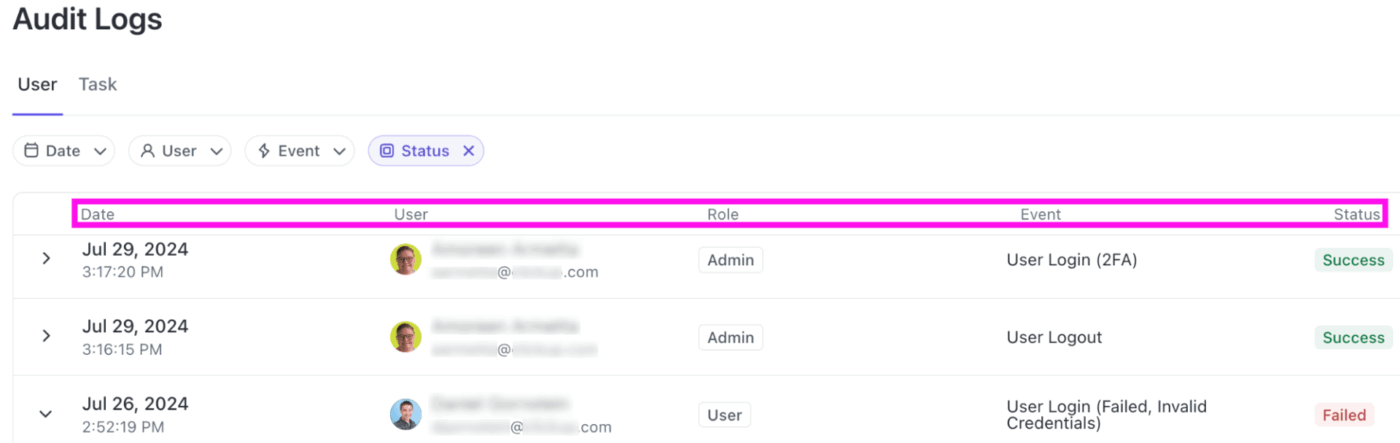

For non-agile teams, ClickUp Audit Logs provide a clear history of what changed and when. They record key changes, including:

- Task updates, such as assignee changes, Custom Status changes, and Custom Field value edits

- Creation, deletion, or movement of Lists, Folders, and Spaces

- User actions tied to specific dates, roles, and outcomes

Step #3: Capture actual effort as work happens

One of the fastest ways actual effort gets distorted is when teams rely on end-of-sprint memory instead of live data. So capture effort as close to real time as possible, with as little friction as possible.

Here’s how:

- Ask team members to log time as they change task status, rather than waiting until the end of the day/sprint

- Keep the logging action inside the same tool where work lives, so no one has to switch systems just to record effort

- Set a clear expectation like ‘update effort at least twice a day’ and reinforce it in standups and sprint reviews

ClickUp Time Tracking is designed specifically for this kind of real-time capture. Team members can start and stop timers from the ClickUp Task itself, use a global timer, or add time retroactively when needed.

Time can be tracked from desktop, mobile, web, or the Chrome extension, so you can calculate hours worked regardless of where you work.


💡 Pro Tip: Capture effort changes as they happen throughout the sprint using the ClickUp Sprint Task Report Card. Sometimes called a say-do report, it shows exactly how your original commitment changed during execution.

Step #4: Compare planned vs. actual effort at meaningful levels

Planned vs. actual comparisons only become useful when you analyze them at the right level of detail. Looking at a single project’s total usually hides the real story. Instead, break the effort down so you can identify where the estimates slipped, why, and what to change next time.

Start at the task level, and look for these patterns:

- Which types of tasks consistently exceed estimates?

- Do integrations, testing, reviews, or refactors run longer than expected?

- Are certain tasks underestimated regardless of who works on them?

Next, roll task data up to the phase or project level, for example, discovery, design, build, testing, and deployment.

Here, the goal is to uncover structural delivery issues:

- Does discovery keep expanding beyond its original scope?

- Does testing regularly consume more effort than planned?

- Are late-stage project phases absorbing earlier estimation errors?

When a phase consistently overruns, you can respond with targeted fixes, such as tighter scope, clearer exit criteria, and time-boxed phases, rather than open-ended work.

Finally, compare effort at the team or role level. Aggregate planned vs. actual effort by:

- Role (design, frontend, backend, QA, ops)

- Squad or function

- Specialist vs. generalist contributors
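If your effort data lives in an export, one way to run these comparisons is to group the same records by different keys. The sketch below assumes hypothetical records with `phase`, `role`, `planned`, and `actual` fields, so rename them to match your own data.

```python
from collections import defaultdict

# Hypothetical task records, hours in 'planned' and 'actual'
records = [
    {"task": "API integration", "phase": "build", "role": "backend", "planned": 16, "actual": 22},
    {"task": "Login screen", "phase": "build", "role": "frontend", "planned": 10, "actual": 10},
    {"task": "Regression pass", "phase": "testing", "role": "QA", "planned": 8, "actual": 12},
]


def variance_by(rows, key):
    """Effort variance (%) per group, where key is 'task', 'phase', or 'role'."""
    planned = defaultdict(float)
    actual = defaultdict(float)
    for row in rows:
        planned[row[key]] += row["planned"]
        actual[row[key]] += row["actual"]
    return {
        group: (actual[group] - planned[group]) / planned[group] * 100
        for group in planned
    }


for level in ("task", "phase", "role"):
    print(level, {k: round(v, 1) for k, v in variance_by(records, level).items()})
```

In this made-up data, the build phase looks moderately over plan as a whole, but the role view shows the overrun sits entirely with backend work.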

👀 Bonus: Try this template!

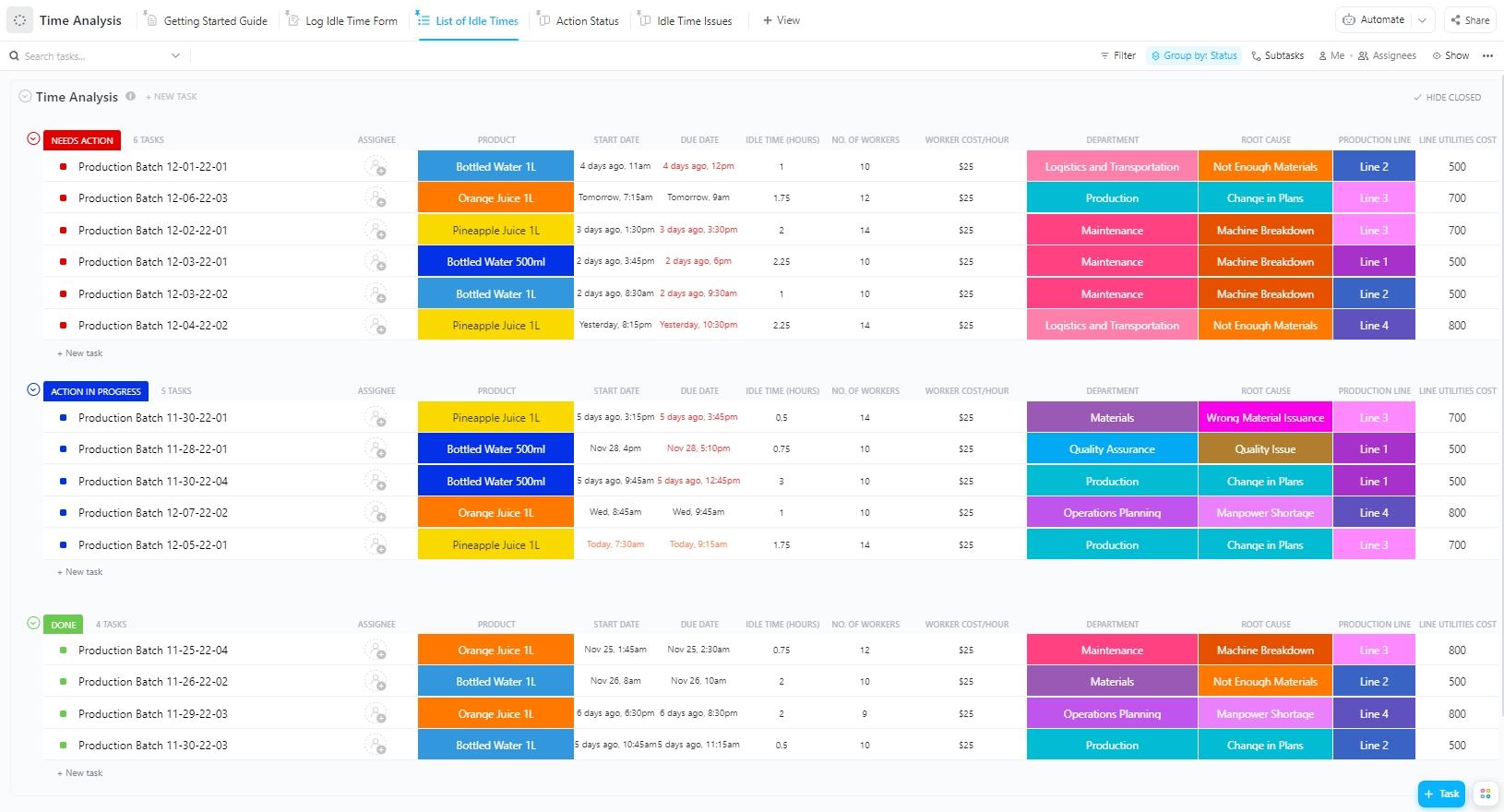

Once you start comparing planned vs. actual effort across tasks, phases, and roles, raw numbers alone can get overwhelming. The ClickUp Time Analysis Template helps you organize that data to surface patterns.

With this template, you can:

- Track effort progression with ClickUp Custom Statuses like Action In Progress, Done, and Needs Action to clearly see where work stalled, extended, or requires rework

- Capture data with Custom Fields like Idle Time Hours, Worker Cost per Hour, Department, and Number of Workers to understand why certain tasks or roles overran

- Analyze overruns with purpose-built Custom Views, such as Idle Time Issues View, Action Status View, and List of Idle Times View

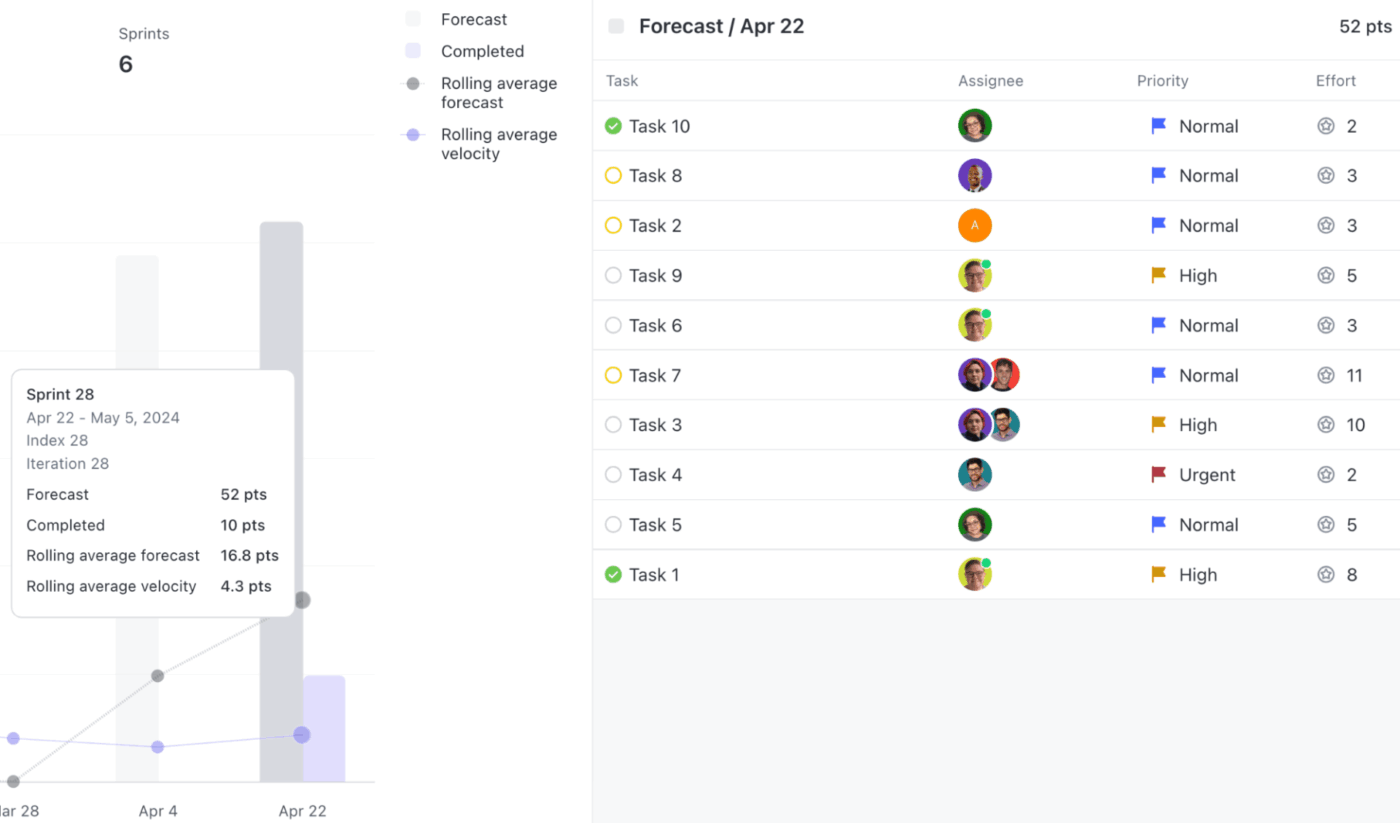

🚀 ClickUp Advantage: Once you know how to compare planned vs. actual effort, ClickUp Sprint Cards let you analyze effort at the task, phase, and team level using real-time data that stays connected to the work itself.

For instance, the ClickUp Sprint Burnup Card is ideal for understanding how reality diverges from the original plan every day.

Then, the ClickUp Sprint Burndown Card zooms in on execution discipline, showing how efficiently planned work is actually being completed within the sprint.

At the sprint start, the chart begins with 100% of planned effort remaining. As tasks are completed, the remaining effort line moves downward toward zero.

Step #5: Review variance during execution

Effort variance only helps if it changes decisions. When teams wait until the end of a sprint or project to review planned vs. actual effort, they’ve already lost the chance to influence the outcome.

The real advantage comes from reviewing variance while work is still unfolding, when there’s still room to adjust scope, sequencing, or expectations.

Early variance doesn’t always show up as a missed deadline. More often, it appears as subtle execution signals:

- Work starts but doesn’t finish at the expected pace

- Remaining effort stays flat for multiple days

- High-effort tasks dominate the second half of the sprint

- The team appears ‘busy,’ but the completed effort lags behind the plan

Reviewing these variances during mid-execution allows teams to:

- Separate planning issues from execution issues

- Adjust delivery expectations before trust is eroded

- Avoid compressing work into the final days of the sprint

💡 Pro Tip: Leverage the ClickUp Velocity Card to determine whether what you’re seeing is a one-off sprint issue or part of a larger delivery pattern. Instead of reacting to variance in isolation, compare the current sprint’s progress against your team’s rolling average velocity from the last 3-10 sprints.

Here’s how this adds clarity during mid-sprint reviews:

- If the current sprint’s completed effort falls well below the rolling average velocity, the issue is likely execution-related

- If the rolling average velocity itself is consistently lower than the forecasted effort, the problem points to systemic issues, such as over-commitment during planning

- If forecasted bars stay high while completed work remains flat across multiple sprints, it signals a persistent delivery bottleneck

Step #6: Use historical effort data to improve future estimates

This is where tracking planned vs. actual effort finally pays off.

Without a proper feedback loop, teams repeat the same optimistic assumptions and treat missed estimates as isolated incidents rather than signals. When you look across multiple projects or sprints, trends begin to emerge that aren’t visible in single deliveries:

- Certain work types consistently exceed their original estimates

- Some teams or roles finish close to plan, while others regularly overrun

- Small tasks tend to get finished predictably, while mid-sized tasks carry the most variance

- ‘Simple’ work with external dependencies takes longer than planned, more often than not

Use this data to recalibrate future performance estimates, set more realistic capacity limits, decide where work needs to be broken down further, and add buffers intentionally.

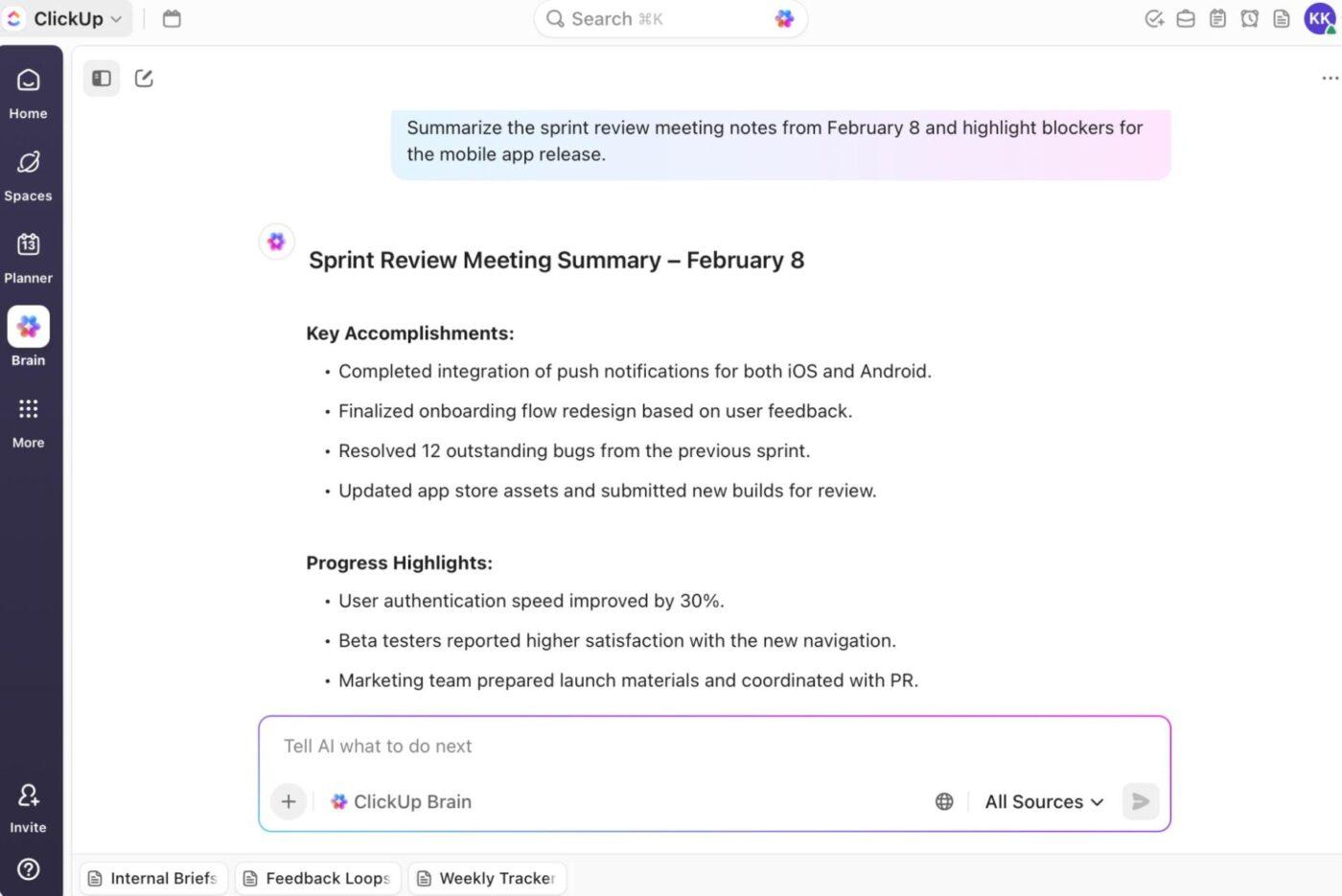

💡 Pro Tip: Making sense of the historical effort data across sprints, projects, and teams can be challenging. ClickUp Brain adds real value here.

ClickUp’s context-aware AI is built directly into your workspace. Unlike generic AI time management tools, it understands your tasks, time estimates, statuses, sprint data, and activity history.

It helps by:

- Producing clear summaries of entire sprints or projects, pulling together planned vs. actual effort, blockers, and delivery outcomes

- Reviewing your historical data collection to recalibrate future project estimates and decide where work should be broken down

- Deploying ClickUp Agents to continuously monitor and interpret effort patterns across sprints and work types based on your prompts

You can also use ClickUp Docs to document sprint retrospectives, reviews, summaries, and decisions about buffers, scope changes, or task breakdowns. Then, ClickUp Brain can extract key insights from the Doc, summarize historical data, and highlight recurring themes.


Real-World Examples of Tracking Planned vs. Actual Effort

Tracking planned vs. actual effort only becomes meaningful when you see how it plays out in real delivery scenarios.

The following examples show how different teams use effort comparisons to uncover delivery risks, explain missed plans, and improve estimation accuracy over time.

Comparing planned story points to actual velocity in Agile teams

In Agile delivery, story points represent the planned effort or complexity a team estimates for a set of user stories or backlog items. Velocity, on the other hand, measures how many of those story points the team completes within a sprint.

If a team’s planned story points consistently exceed its completed velocity, it indicates that estimates are overly optimistic, introducing schedule risk and unstable commitments.

📌 Example: At the start of an Agile initiative, a team plans 40 story points for a sprint. By the sprint end, they complete 28 points, yielding a velocity of 28. Over the next 3-4 sprints, the team consistently finishes between 26 and 30 points.

This pattern reveals that the initial plan was not grounded in delivery reality. The team can adjust future sprint commitments to a realistic velocity band (e.g., 26-30).
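Here’s a rough Python sketch of how you might derive that band. Only the first sprint (28 points) and the 40-point plan come from the example. The other sprint values are hypothetical numbers chosen inside the 26-30 range.

```python
def velocity_band(completed_points):
    """Lowest and highest completed points across recent sprints."""
    return min(completed_points), max(completed_points)


planned_points = 40
completed = [28, 26, 30, 27]  # first value from the example, rest assumed

low, high = velocity_band(completed)
average = sum(completed) / len(completed)

print(f"Velocity band: {low}-{high}")      # Velocity band: 26-30
print(f"Average velocity: {average}")      # Average velocity: 27.75
print(f"Plan exceeds band: {planned_points > high}")  # Plan exceeds band: True
```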

Monitoring planned hours vs. logged hours in project schedules

If an initiative is planned in hours, matching those estimates against actual logged time reveals how closely execution matched the plan.

📌 Example: A project plan estimates 120 hours for design, 240 hours for development, and 80 hours for testing.

When the work concludes:

- Design logged 140 hours (+17%)

- Development logged 260 hours (+8%)

- Testing logged 100 hours (+25%)

This variance breakdown shows testing as the biggest estimation gap. If actual hours < planned hours, you may be overestimating and reserving capacity unnecessarily. And if actual hours > planned hours, you likely need to revisit your planning process or examine hidden work categories.
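You can reproduce the per-phase percentages with a few lines of Python. The hours come straight from the example, and the project total is added to show how it smooths over the phase-level gaps.

```python
# (planned hours, logged hours) per phase, taken from the example
phases = {
    "design": (120, 140),
    "development": (240, 260),
    "testing": (80, 100),
}


def variance_pct(planned: float, actual: float) -> float:
    """Effort variance as a percentage of planned hours."""
    return (actual - planned) / planned * 100


for phase, (planned, actual) in phases.items():
    print(f"{phase}: {variance_pct(planned, actual):+.1f}%")

total_planned = sum(p for p, _ in phases.values())
total_actual = sum(a for _, a in phases.values())
print(f"total: {variance_pct(total_planned, total_actual):+.1f}%")
```

This prints +16.7% for design, +8.3% for development, +25.0% for testing, and +13.6% for the project as a whole.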

🚀 ClickUp Advantage: Planned vs. actual effort breaks down when real work never gets captured. ClickUp BrainGPT’s Talk to Text helps close that gap by turning spoken updates into structured, trackable inputs right where work happens.

During standups, sprint reviews, or mid-sprint check-ins, team members can simply speak blockers, progress, rework, or scope changes. Talk to Text converts those conversations into clean, contextual text inside ClickUp. It auto-formats notes, links relevant ClickUp Tasks, and adds @mentions or follow-ups as you talk.

For instance, while reviewing sprint progress, a delivery manager can use Talk to Text to narrate what changed mid-sprint like, ‘extra QA cycle’ and ‘vendor delay.’ BrainGPT instantly turns that into structured documentation tied to the sprint. Over time, these transcripts become a rich input for improving future estimates, velocity analysis, and delivery forecasts.

Identifying chronic underestimation patterns across teams

Across multiple teams and cycles, some types of work (for example: integrations, bug fixes, and documentation) can consistently overrun original estimates. When you aggregate effort data over time, these patterns emerge clearly and create opportunities for systemic improvement.

📌 Example: Over five cycles:

- Feature development consistently hits estimates within ±5%

- Integration work overruns by ~30-40% each time

- Documentation is underestimated by ~50%

Chronic patterns signal estimation bias, and addressing these biases improves future planning accuracy dramatically.

Using effort variance to improve future capacity planning

On its own, effort variance explains past performance. But when used consistently over time, it becomes one of the most reliable inputs for resource allocation. Two teams with the same availability can have very different effective capacity if one routinely overruns estimates or completes work late in the cycle due to various time constraints.

📌 Example: If a team consistently runs at +15% variance (uses 15% more effort than planned), planners will:

- Adjust future capacity forecasts downward by that factor

- Build buffers into commitments to protect delivery dates

- Redistribute workloads or add cross‑functional capability

If a team runs at –10% variance (takes less effort than planned), planners may adjust capacity forecasts upward or document why time was saved (e.g., efficiencies or task simplification).
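One way to turn a variance trend into a capacity adjustment is sketched below. It uses the sign convention from the effort variance formula (positive means more effort than planned) and assumes the historical variance will hold for the next cycle. Dividing by `1 + variance` is an illustrative choice, not a prescribed method.

```python
def committable_planned_hours(available_hours: float, variance_pct: float) -> float:
    """Planned hours a team can commit to so that expected actual hours
    fit within available hours, given its historical variance."""
    factor = 1 + variance_pct / 100
    if factor <= 0:
        raise ValueError("variance must be greater than -100%")
    return available_hours / factor


# Hypothetical team with 115 available hours and a +15% variance history
print(round(committable_planned_hours(115, 15), 1))   # 100.0

# A team that usually finishes 10% under plan can take on more planned work
print(round(committable_planned_hours(90, -10), 1))   # 100.0
```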

🔍 Did You Know? Francesco Cirillo invented the Pomodoro Technique in the 1980s, not to improve productivity, but to reduce anxiety about time. Timeboxing accidentally became one of the easiest ways to compare planned vs. actual effort.

Best Practices for Accurate Effort Tracking and Variance Reduction

Let’s explore practical habits and planning behaviors that help you produce more reliable estimates and reduce delivery surprises for continuous improvement.

- Ground estimates in evidence: Leverage historical data from past projects or sprints to inform estimates rather than relying on gut feel alone. Standardize how you capture past performance

- Try different techniques: Don’t default to a single method. Combine bottom-up estimating with project estimation techniques like three-point estimation (optimistic/likely/pessimistic) and expert judgment

- Make estimation a team sport: Involve developers, designers, QA, and operations in estimating work. Cross-functional input reduces blind spots that often cause actual effort to exceed planned effort

- Document assumptions and constraints explicitly: Record assumptions about scope, dependencies, skill levels, and risk. Tracking them upfront helps you understand why the variance occurred later

- Calibrate and adapt future forecasts based on patterns: Use effort variance trends to adjust velocity, capacity, or buffer levels for future sprints or phases
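To make three-point estimation concrete, here’s a sketch using the PERT-weighted form, which is one common variant: expected = (optimistic + 4 × likely + pessimistic) ÷ 6. The simple average of the three values is another option. The input hours are hypothetical.

```python
def three_point_estimate(optimistic: float, likely: float, pessimistic: float):
    """Return (expected effort, spread) using the PERT weighting."""
    if not optimistic <= likely <= pessimistic:
        raise ValueError("expected optimistic <= likely <= pessimistic")
    expected = (optimistic + 4 * likely + pessimistic) / 6
    spread = (pessimistic - optimistic) / 6  # rough standard deviation
    return expected, spread


expected, spread = three_point_estimate(3, 6, 15)
print(expected)  # 7.0
print(spread)    # 2.0
```

Notice that the expected value (7 hours) lands above the ‘likely’ guess (6 hours) because the pessimistic case pulls it upward, which is a simple counterweight to best-case planning.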

🔍 Did You Know? Your brain is better at recalling how long something previously took than guessing how long it will take. That’s why tracking actual effort is indispensable; it retrains your future estimates.

Common Mistakes That Lead to Inaccurate Planned vs. Actual Effort Tracking

Even well-planned projects can fail to track effort accurately.

Here, we’ll break down the mistakes that distort planned vs. actual effort and how to fix them to drive continuous improvement in estimation and delivery confidence.

| Mistake | Solution |
| --- | --- |
| Failing to capture all actual effort (meetings, reviews, admin, rework often go unlogged) | Include all relevant effort by defining categories (e.g., rework, support, research) in your tracking practice so actuals reflect true effort |
| Ignoring external dependencies and their impact on effort | Map dependencies explicitly in planning and add contingency time based on historical delays with those vendors or processes |
| Letting scope creep hide in effort totals | Baseline scope at the start and estimate added work separately, so planned vs. actual comparisons remain meaningful |
| Not updating estimates when the project context changes | Revisit and rebaseline estimates when requirements shift or unknowns are uncovered; tie estimation updates to milestone checkpoints |
| Ignoring cognitive biases in estimation (anchoring, wishful thinking) | Train teams on common biases and use structured estimation techniques like Planning Poker or data-driven forecasting to counteract subjective judgment |

📖 Also Read: Tips to Improve Time Management Skills at Work

Reduce The Actual Effort of Planning With ClickUp

Planned vs. actual effort tracking fails when the data lives in too many places, gets updated too late, or never connects back to the original plan.

ClickUp brings planning, execution, and analysis into a connected system. You get ClickUp Sprints to preserve your original commitments and ClickUp Time Tracking to capture effort as work happens.

Dashboards turn raw data into live visibility across tasks, teams, and timelines. And ClickUp Brain ties it all together by summarizing trends, surfacing variance patterns, and turning historical effort into guidance.

Sign up to ClickUp for free today! ✅

Frequently Asked Questions

Some of the most frequently asked questions we get about planned vs. actual effort.

Planned vs. actual effort is the comparison between the effort you estimated for work (in hours, story points, or other units) and the effort actually spent when the work was done. It highlights where estimates were accurate and where execution differed from the plan.

Effort accuracy is measured by comparing planned effort to actual effort, often as a percentage variance: ‘(Actual effort – Planned effort) ÷ Planned effort x 100.’ A lower variance indicates closer alignment between estimates and reality.

Project management and time tracking systems such as ClickUp let you record estimates, log actual time, and report on the differences in dashboards or reports.

Estimates often differ due to optimism bias, hidden complexity, interruptions, dependencies, or unknown risks. People tend to imagine ideal conditions and overlook real-world variability, a phenomenon described as the planning fallacy.

Teams improve estimation by leveraging historical delivery data, incorporating cross-functional perspectives, and refining estimation techniques. It’s also best to review past variances to inform future estimates. Continuous learning and retrospective analysis can help refine accuracy.