How to Automate Voice Generation with AI (Tools, Workflows & Use Cases)

You heave a sigh of relief. It’s finally done: you’ve edited the video, ensured the visuals are sharp, and the script is ready. Then you go over the script again and realize the voiceover is still left. That’s when the frustration seeps in again.

There’s no time for the standard ‘stumble over a word, restart, lose your pace’ routine.

Most projects stall here, getting bogged down in the time-consuming and unpredictable task of adding voiceovers. The good news is that you don’t have to keep doing it this way.

In this guide, we’ll explore how to automate voice generation with AI. As a bonus, you’ll also find out how ClickUp helps manage scripts, tasks, and publishing workflows all in one place. 🤩

AI voice generation converts written text into speech that mirrors natural human speaking patterns. It relies on machine learning models trained on vast speech samples to capture tone, rhythm, pauses, and emotion.

The result is expressive, realistic, and adaptable voices that fit different contexts. With AI voice tools, you can create lifelike narration or dialogue instantly.

🧠 Fun Fact: An AI tool was able to bring back the voice of legendary British broadcaster Sir Michael Parkinson for an entire eight-part podcast series. This just proves how far speech cloning has come (not to mention the debate it has sparked along the way).

AI text-to-speech (TTS) isn’t new, but the gap between older systems and today’s AI-driven speech generators is striking. Traditional TTS tools were built to ‘read text aloud,’ producing robotic voices that got the job done but lacked any sense of natural flow.

On the other hand, AI speech generators utilize deep learning to replicate tone, pacing, and emotion authentically (to the extent possible).

Here’s how they differ:

| Aspect | Traditional TTS | AI Speech Generator |
| --- | --- | --- |
| Voice Quality | Flat, robotic, and easily recognizable as synthetic | Natural, expressive, and often indistinguishable from human voices |
| Flexibility | Limited to fixed pronunciations and monotone delivery | Dynamic intonation, emotional tones, and adaptive pacing |
| Customization | Basic controls like speed and pitch adjustments | Fine-grained control over tone, style, accent, and cadence |
| Learning Ability | Rule-based, no adaptation to context | Learns from large speech datasets, mimics human patterns |
| Use Potential | Suitable for simple reading tasks | Versatile for narration, branding, apps, and interactive content |

🧠 Fun Fact: Sir David Attenborough was ‘profoundly disturbed’ after hearing his own voice cloned by AI, saying things he never actually said.

Bringing automation into voice work reshapes how audio is created, delivered, and scaled. Let’s look at some advantages:

🔍 Did You Know? Jonathan Harrington, a professor of phonetics and digital speech at the University of Munich, has spent decades studying how humans produce sounds and accents.

Here’s what he has to say about AI voices:

In the last 50 years, and especially recently, speech generation/synthesis systems have become so good that it is often very difficult to tell an AI-generated and a real voice apart.

Well, how do you make it work? Turning a script into lifelike audio sounds great, but the most critical step is setting up a workflow that saves time.

And so, we have ClickUp, the everything app for work, to make this setup easier. It combines project management, knowledge management, and chat—all powered by AI that helps you work faster and smarter.

Here’s a breakdown of exactly how to automate voice generation with AI step-by-step (with some help from ClickUp). 👀

First things first, decide where your AI voiceovers will come from. There are plenty of great AI voice generator platforms out there.

The right fit depends on what you need most:

🔍 Did You Know? The first computer to ‘sing’ was an IBM 7094 back in 1961. It produced ‘Daisy Bell’ in an early speech synthesis demo that inspired the HAL 9000 scene in 2001: A Space Odyssey.

Before you can generate a great voiceover, you need a polished script that’s ready to go.

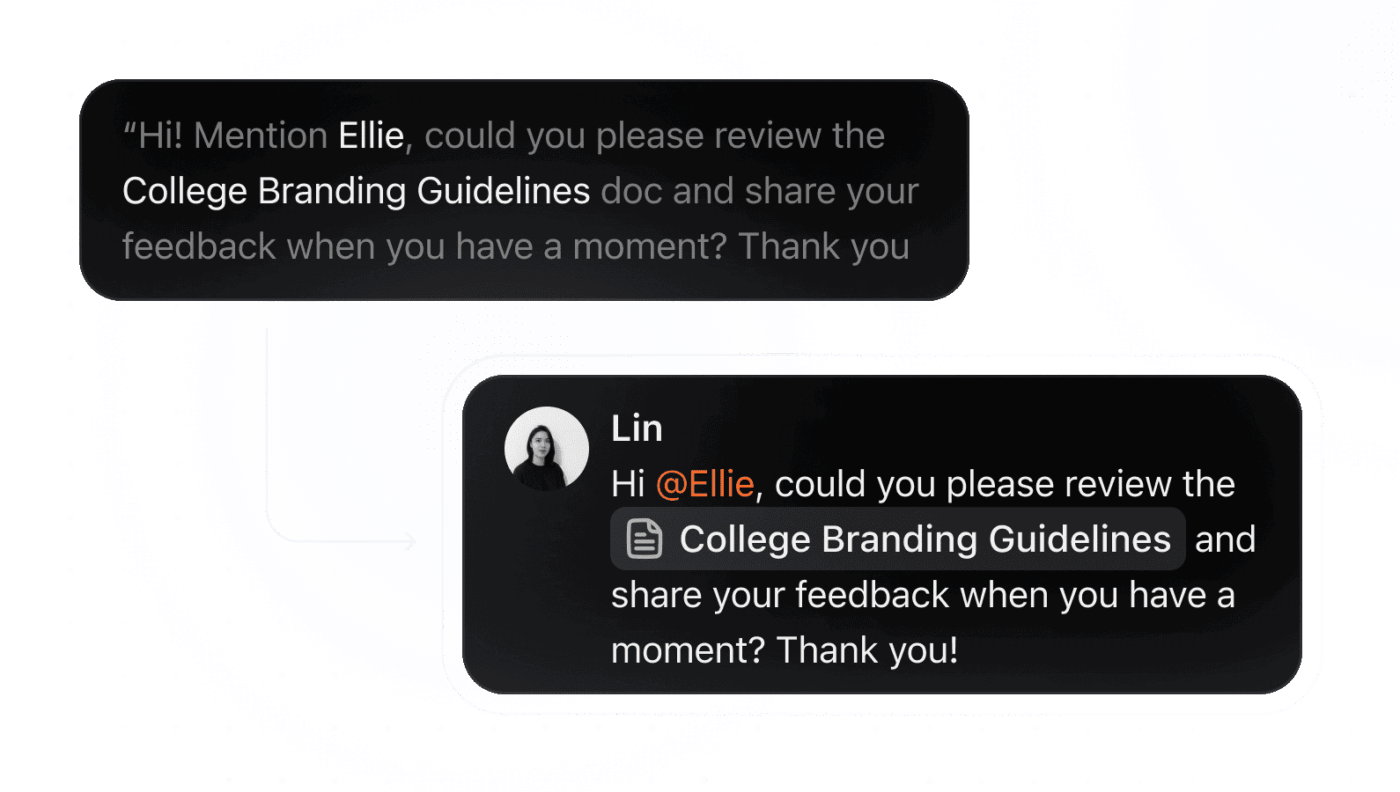

Use ClickUp Docs as your central hub for writing, reviewing, and refining. Work alongside your team in real time, so writers, editors, and stakeholders all stay aligned.

You can also add rich text formatting, tables, and links to ClickUp Tasks to keep everything structured and easy to follow. That way, your script is organized, accessible, and set up for seamless automation later.

📌 Example: If you’re building a video tutorial series, create a Doc with sections for the intro, main content, and closing, and share notes. Editors can drop comments on specific lines while writers adjust the text live, with every change syncing instantly for the whole team. You can also add tables to track pacing notes or voice styles, and bookmarks to jump between different parts.

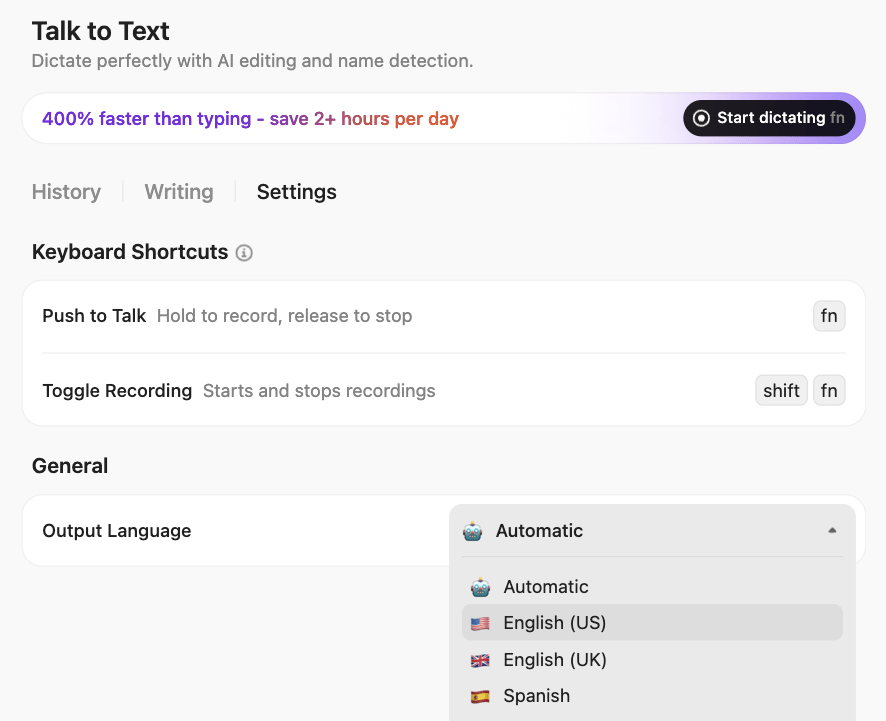

Voice-first workflow with ClickUp Brain MAX

ClickUp Brain MAX turns your workspace into a Talk to Text studio—so you can draft scripts, leave revisions, or log task updates just by speaking. No typing, no switching tools, no “I’ll format this later.”

Result? Faster script cycles, fewer rewrites, and less friction between idea → voice → execution.

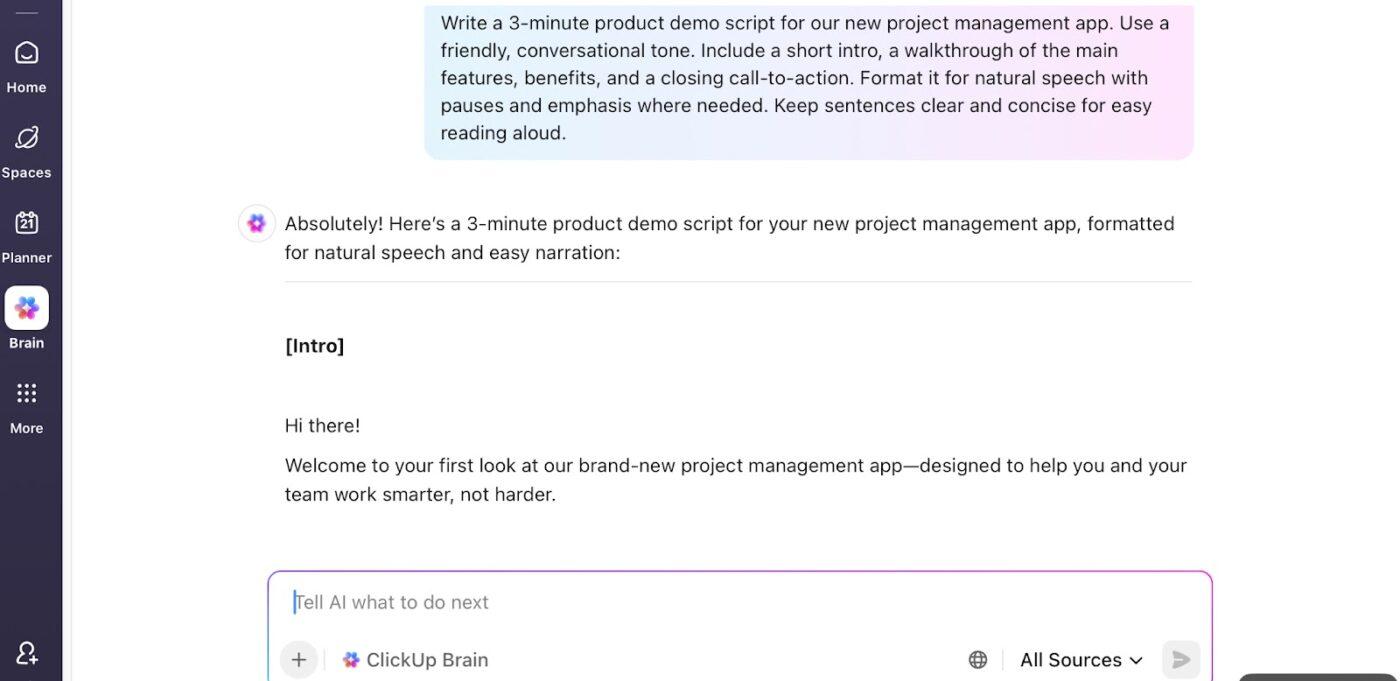

Worried about your tone? ClickUp Brain sharpens narration, cuts fluff, and formats your text for natural delivery right within your ClickUp Doc.

Think of it as a script editor. You can:

✅ Try This Prompt: Add pauses for emphasis so it’s easier to follow when read aloud and summarize the technical jargon into 2-3 short sentences.
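If your speech engine accepts SSML (the W3C Speech Synthesis Markup Language), pauses like the ones the prompt asks for can also be made explicit in markup. The sketch below is only an illustration and not a ClickUp Brain feature: `add_pauses` is a hypothetical helper, the 400 ms default is an arbitrary assumption, and SSML support varies by engine, so check your tool's documentation first.

```python
import re
from xml.sax.saxutils import escape


def add_pauses(script: str, pause_ms: int = 400) -> str:
    """Wrap a plain-text script in SSML with a break between sentences.

    Hypothetical helper: the pause length is an assumption to tune by ear.
    """
    # Split wherever ., ! or ? is followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    pause = f'<break time="{pause_ms}ms"/>'
    # Escape &, < and > so the script text cannot break the markup.
    body = pause.join(escape(s) for s in sentences)
    return f"<speak>{body}</speak>"
```

Calling `add_pauses("Welcome back. Let's begin.")` places one break between the two sentences. Longer pauses before key points can be handled by raising `pause_ms` for that section of the script.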

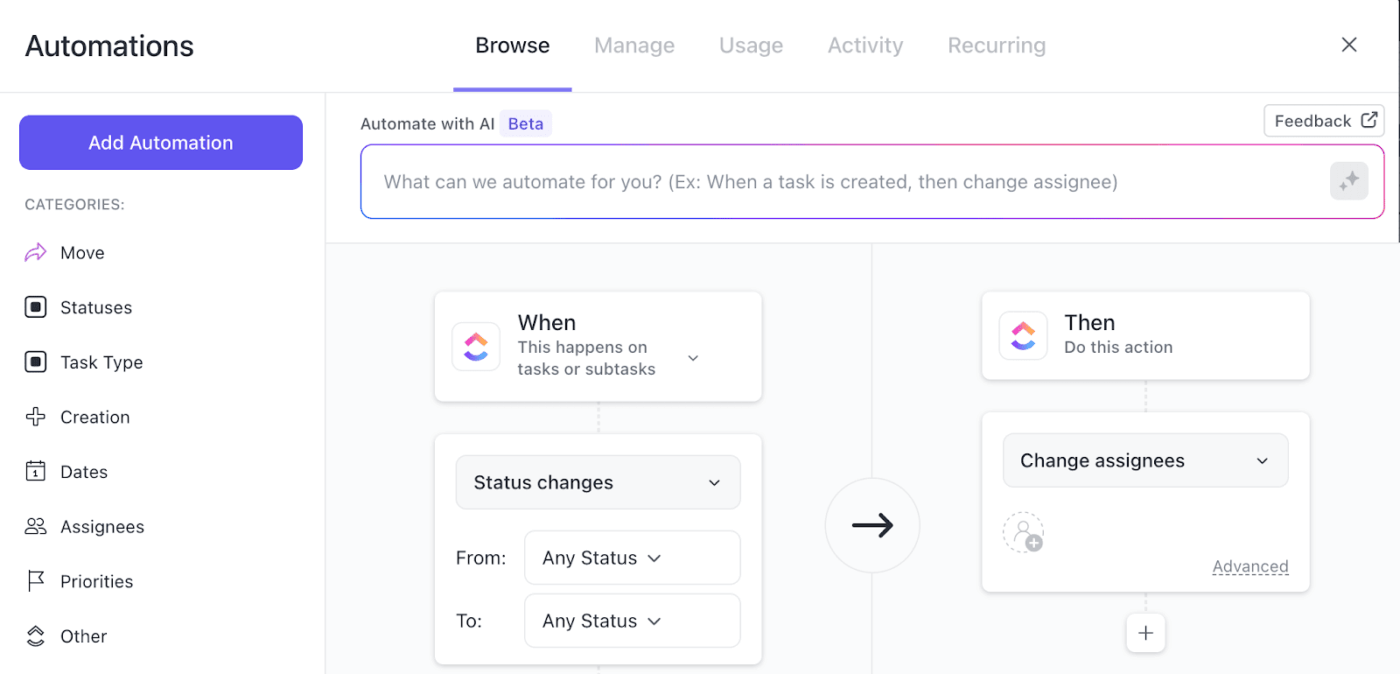

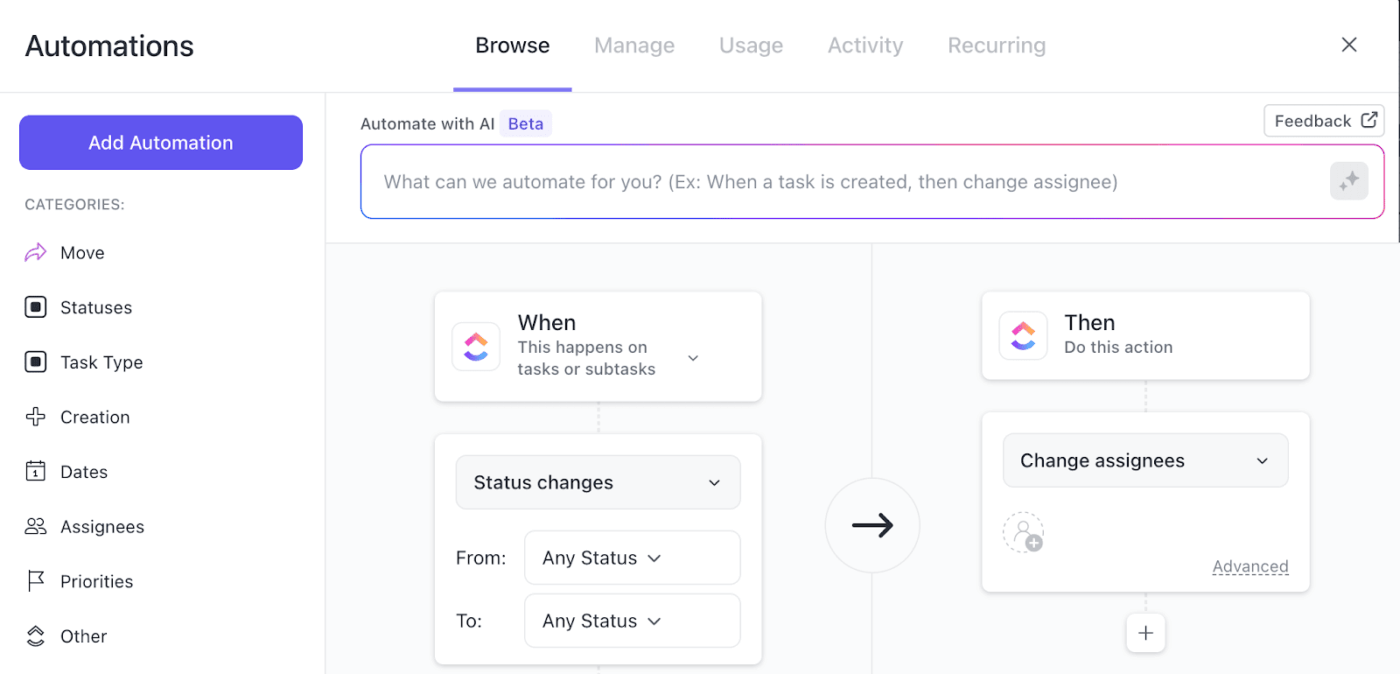

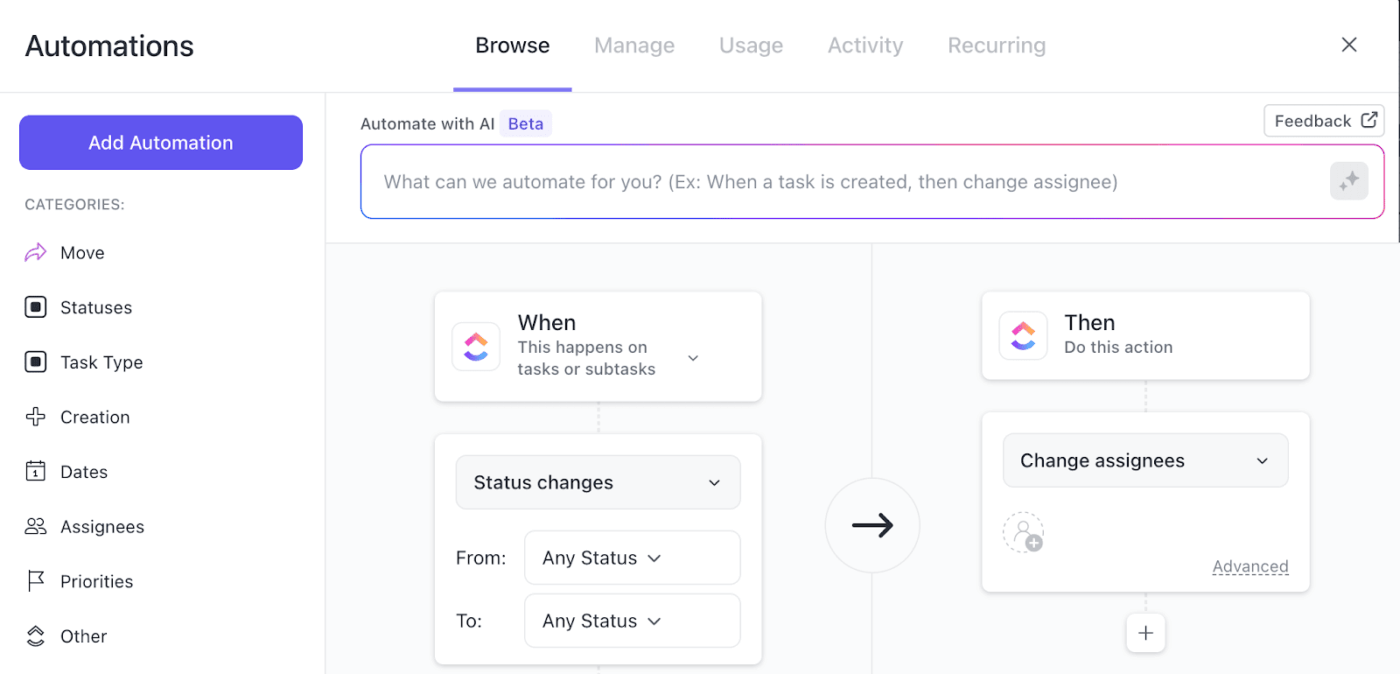

Once your script is ready and audio is generated, turn to ClickUp Automations.

You can build workflows on a simple principle: ‘If this, then that.’

For instance, you can set up an automation so that when a task status changes to ‘Audio Generated,’ ClickUp automatically assigns the task to the editor, notifies them in ClickUp Chat, and moves it to the ‘Editing’ list.
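To make the ‘if this, then that’ principle concrete, here is a minimal sketch of that rule as plain Python. It does not call ClickUp or reproduce how ClickUp Automations are implemented; the `Task` class, the `on_status_change` function, and the default list name are all hypothetical stand-ins for the trigger and its three actions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Task:
    """Hypothetical, simplified task record (not a ClickUp API object)."""
    name: str
    status: str
    list_name: str = "Voiceover"
    assignee: Optional[str] = None
    notifications: List[str] = field(default_factory=list)


def on_status_change(task: Task, new_status: str, editor: str = "editor") -> Task:
    """Apply the rule: if status becomes 'Audio Generated', then hand off."""
    task.status = new_status
    if new_status == "Audio Generated":   # trigger
        task.assignee = editor            # action 1: assign to the editor
        task.notifications.append(        # action 2: notify them
            f"@{editor}: '{task.name}' is ready for editing"
        )
        task.list_name = "Editing"        # action 3: move to the Editing list
    return task
```

Any other status change passes through without side effects, which is the point of a trigger: the hand-off happens only when the condition is met.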

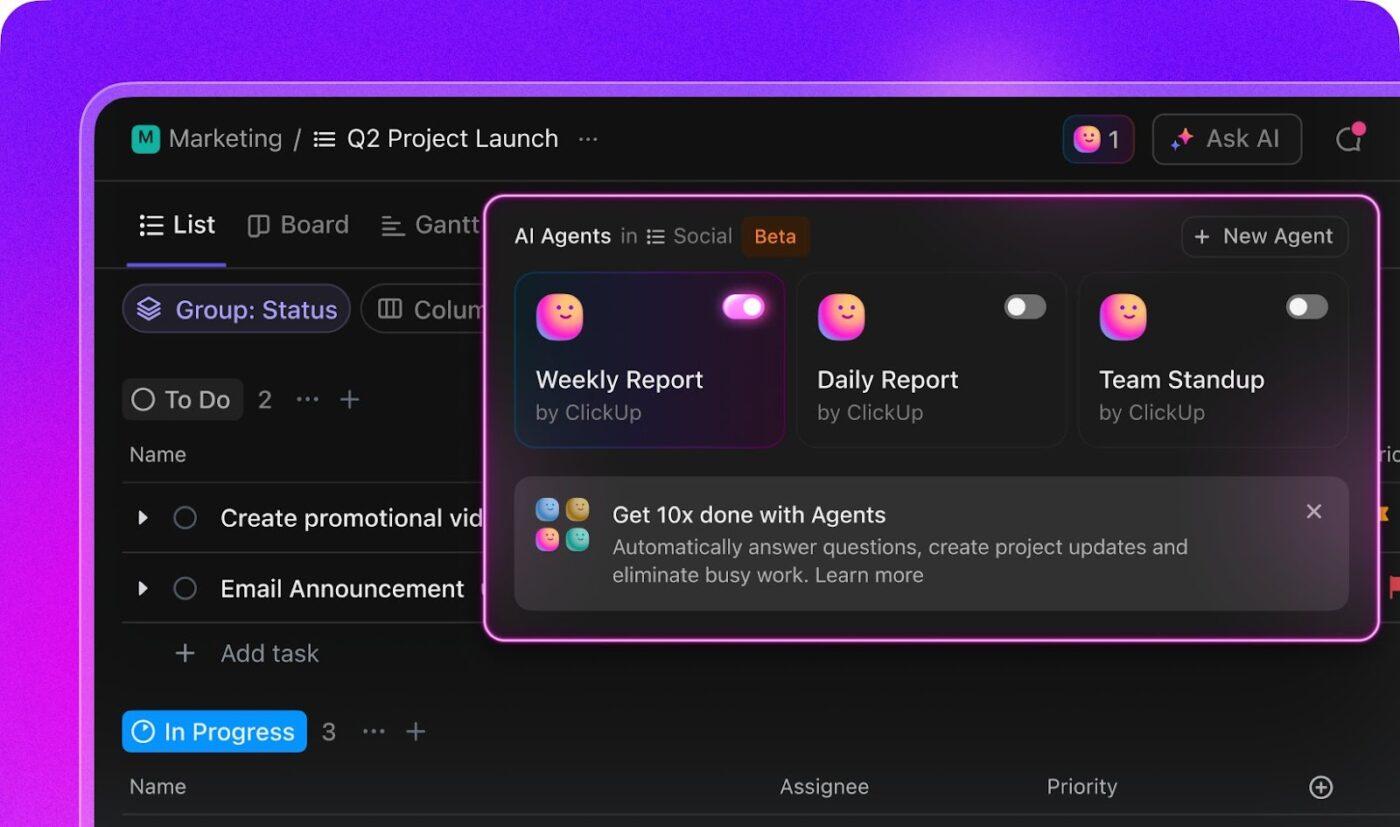

🚀 ClickUp Advantage: ClickUp AI Autopilot Agents keep projects moving without human intervention.

They watch for triggers, like a task marked complete, and then run the next set of actions automatically. This means files get generated, attached, and routed to the right people, updates are shared instantly with teams, and tasks progress to the next stage without delays.

Most commercial text-to-speech software comes with strings attached: limited voices, usage caps, licensing fees, and little room for true customization.

Open-source text-to-speech helps here.

These tools give you complete control over voice training, deployment, and scaling, breaking the cycle of vendor lock-in.

Here are our top picks for the best AI voice generators. 💁

ClickUp is already well-known as a flexible, all-in-one workspace platform that brings tasks, docs, chat, whiteboards, and automation into a single environment.

What makes it particularly compelling now is ClickUp Brain MAX, ClickUp’s contextual AI super-app that integrates deeply into your entire workflow. It doesn’t just “add AI” — it connects to your actual work (tasks, docs, chats, integrations) so you get one intelligent assistant rather than many disconnected tools.

Best Features:

Limitations:

Pricing:

Ratings & Reviews:

Coqui TTS is a community-driven project that offers high-quality, neural network-based TTS models. It supports multiple languages and provides pre-trained models for ease of use.

Best features

Limitations

Pricing

Ratings and reviews

📌 Ideal for: Developers looking to implement customizable TTS solutions in applications such as virtual assistants, e-learning platforms, and accessibility tools.
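As a rough illustration of what using Coqui TTS from Python can look like, the sketch below renders each section of a script to its own WAV file. It assumes the Coqui `TTS` package is installed and that `tts_models/en/ljspeech/tacotron2-DDC` is still among the published pre-trained models (you can check with `tts --list_models`). The helper names `plan_outputs` and `synthesize_sections`, and the section names, are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def plan_outputs(sections: Dict[str, str], out_dir: str) -> Dict[Path, str]:
    """Map each script section to the WAV path it will be written to."""
    return {Path(out_dir) / f"{name}.wav": text for name, text in sections.items()}


def synthesize_sections(
    sections: Dict[str, str],
    out_dir: str,
    model_name: str = "tts_models/en/ljspeech/tacotron2-DDC",  # assumed model
) -> List[Path]:
    """Render every section with Coqui TTS and return the written paths."""
    # Imported lazily so the planning helper works without the package.
    from TTS.api import TTS

    tts = TTS(model_name=model_name)  # downloads the model on first use
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    written = []
    for path, text in plan_outputs(sections, out_dir).items():
        tts.tts_to_file(text=text, file_path=str(path))
        written.append(path)
    return written
```

Keeping one file per section means a single rewritten paragraph only needs its own audio regenerated, not the whole voiceover.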

⚡ Template Archive: ClickUp’s Meeting Minutes Template helps you capture agendas, key points, and action items in one place. The meeting notes template keeps your discussions structured and decisions documented so nothing gets missed.

Piper TTS is a lightweight, fast, and efficient TTS system designed for real-time applications. It’s optimized for performance and can run on various devices, including mobile platforms.

Best features

Limitations

Pricing

Ratings and reviews

📌 Ideal for: Applications requiring real-time voice feedback, such as navigation systems, interactive kiosks, and assistive technologies.
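Piper is typically driven from the command line, reading text on standard input. The sketch below wraps that call from Python under a few assumptions: the `piper` binary is on your PATH, you have already downloaded a voice model file, and the function names are hypothetical helpers rather than part of Piper itself.

```python
import shutil
import subprocess
from typing import List


def piper_command(model_path: str, out_wav: str) -> List[str]:
    """Build the Piper CLI call; the text itself is passed on stdin."""
    return ["piper", "--model", model_path, "--output_file", out_wav]


def speak_with_piper(text: str, model_path: str, out_wav: str) -> bool:
    """Run Piper if it is installed; return False when the binary is missing."""
    if shutil.which("piper") is None:
        return False
    subprocess.run(
        piper_command(model_path, out_wav),
        input=text.encode("utf-8"),  # Piper reads the text from stdin
        check=True,                  # raise if Piper exits with an error
    )
    return True
```

Returning `False` instead of raising when Piper is absent lets a larger pipeline fall back to another engine without special error handling.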

Festival Speech Synthesis System is a comprehensive, general-purpose TTS system developed by the University of Edinburgh. It provides a full text-to-speech system with various APIs and supports multiple languages.

Best features

Limitations

Pricing

Ratings and reviews

📌 Ideal for: Researchers, developers, and educators who want a text-to-speech system for experimentation, academic projects, or building tailored voice solutions.

eSpeak NG (Next Generation) is a compact, open-source speech synthesizer that supports a wide range of languages. It’s primarily known for its small footprint and efficiency.

Limitations

Pricing

Ratings and reviews

📌 Ideal for: Developers, hobbyists, and embedded system projects where efficiency and multilingual support matter more than ultra-realistic voice quality.
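Because eSpeak NG is a small command-line program, producing the same line in several languages is mostly a matter of changing the voice flag. The sketch below only builds the commands. It assumes `espeak-ng` is installed when you run them and that the voice codes used exist on your system (list them with `espeak-ng --voices`). Both helper names are hypothetical.

```python
from typing import Dict, List


def espeak_command(text: str, out_wav: str, voice: str = "en-us",
                   words_per_minute: int = 175) -> List[str]:
    """Build an espeak-ng call: -v voice, -s speed in wpm, -w output WAV."""
    return ["espeak-ng", "-v", voice, "-s", str(words_per_minute),
            "-w", out_wav, text]


def localized_commands(lines: Dict[str, str], stem: str) -> List[List[str]]:
    """One command per voice code, writing files such as '<stem>_fr.wav'."""
    return [espeak_command(text, f"{stem}_{voice}.wav", voice=voice)
            for voice, text in lines.items()]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)`. Lowering `words_per_minute` is a simple way to make dense technical lines easier to follow.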

📖 Also Read: How to Use AI for Meeting Notes (Use Cases & Tools)

Automating AI voice generation brings both technical and ethical challenges, particularly when striving for realism and security.

Here are some persistent challenges:

AI voices can be cloned from just seconds of recorded audio, sometimes without the originator’s knowledge. That raises serious ethical and even legal questions.

Plus, voice actors have raised concerns about their work being used to train synthetic voices without full disclosure or compensation.

🔍 Did You Know? A Scottish actress objected when her voice was used without permission for public announcements, prompting the withdrawal of the AI voice.

Even high-fidelity AI voices can feel flat.

Researchers find that AI struggles with conveying subtle emotional cues like empathy or sarcasm. These are elements that human speakers naturally adjust based on context.

Without this nuance, even a perfectly enunciated line can feel hollow, especially in storytelling or patient communications.

A recent study found that synthetic speech systems perform worse with regional accents, reinforcing linguistic privilege and unintentionally excluding diverse speakers.

In multicultural settings, like global customer support or multilingual e-learning, this can undermine inclusivity and accuracy.

🧠 Fun Fact: Actor Val Kilmer, who lost his voice due to throat cancer, had it synthetically recreated using his past recordings. This enabled him to reprise his iconic role in Top Gun: Maverick.

Users often can’t tell whether a voice is human or AI-generated. In fact, about 80% of listeners matched an AI voice to its human counterpart, while only around 60% correctly identified a voice as synthesized.

This blurring of trust can be problematic, especially if malicious actors exploit synthetic voices for scams or misinformation.

📖 Also Read: How to Transcribe Voice Memos to Text

Audio deepfakes are no longer sci-fi. In numerous high-profile fraud cases, like CEOs being mimicked to authorize fraudulent transfers, realistic AI voices have been weaponized.

In fact, this risk shows up starkly in political misinformation, too. AI-cloned voices of public figures were used in harmful election disinformation campaigns.

🔍 Did You Know? The word ‘deepfake’ is a mix of ‘deep learning’ and ‘fake.’ These AI-powered creations can swap faces, tweak lip movements, and even generate new voices, making them almost indistinguishable. While they’re often used for entertainment, the same tech poses big challenges for authenticity in AI-generated voice automation.

Teams often manage multiple tools to track drafts, recordings, and final files, which slows everything down.

As we’ve explored, ClickUp brings all of that into one workspace. Let’s look at how you can leverage some of its other tools to manage your voice generation workflow. 🔁

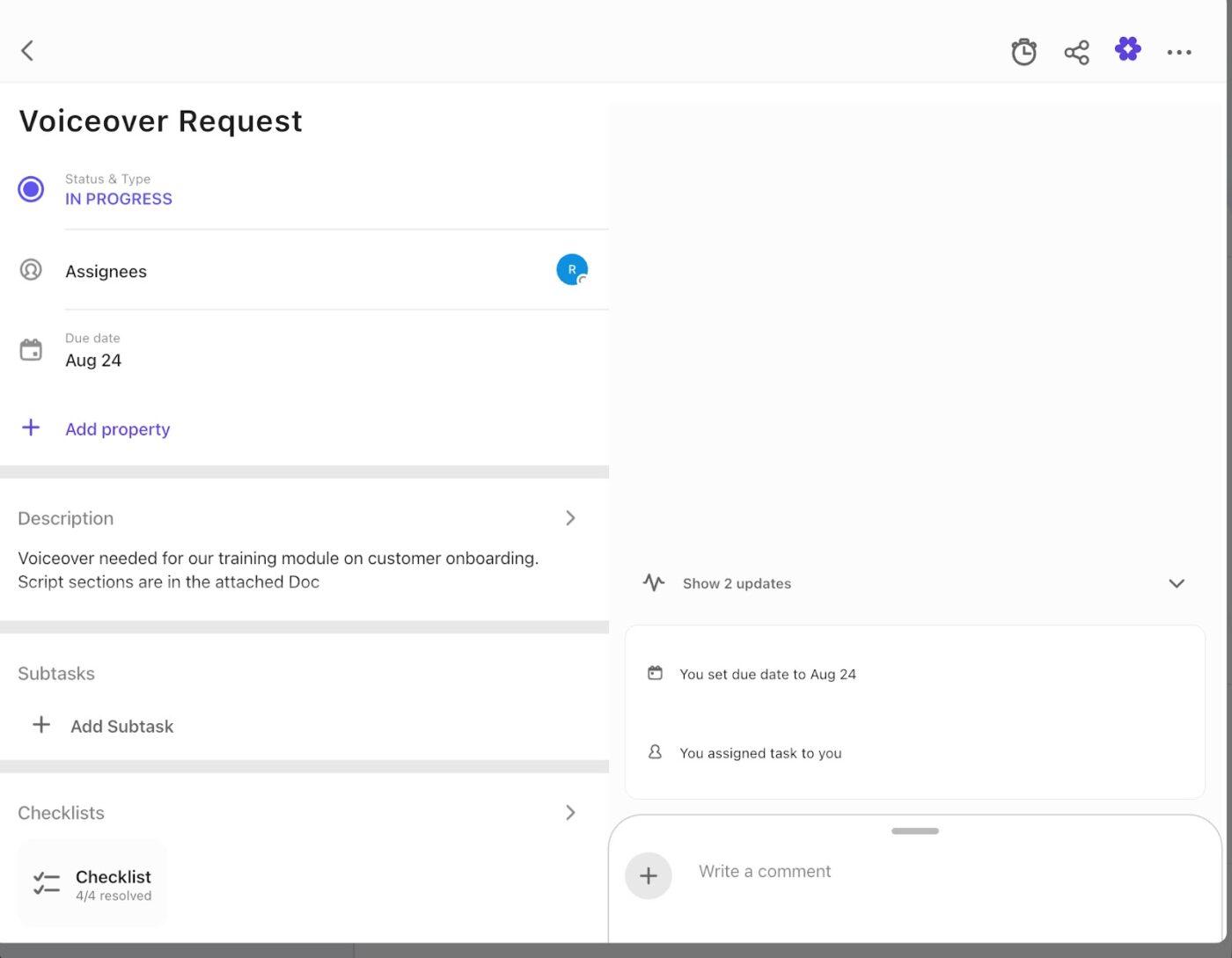

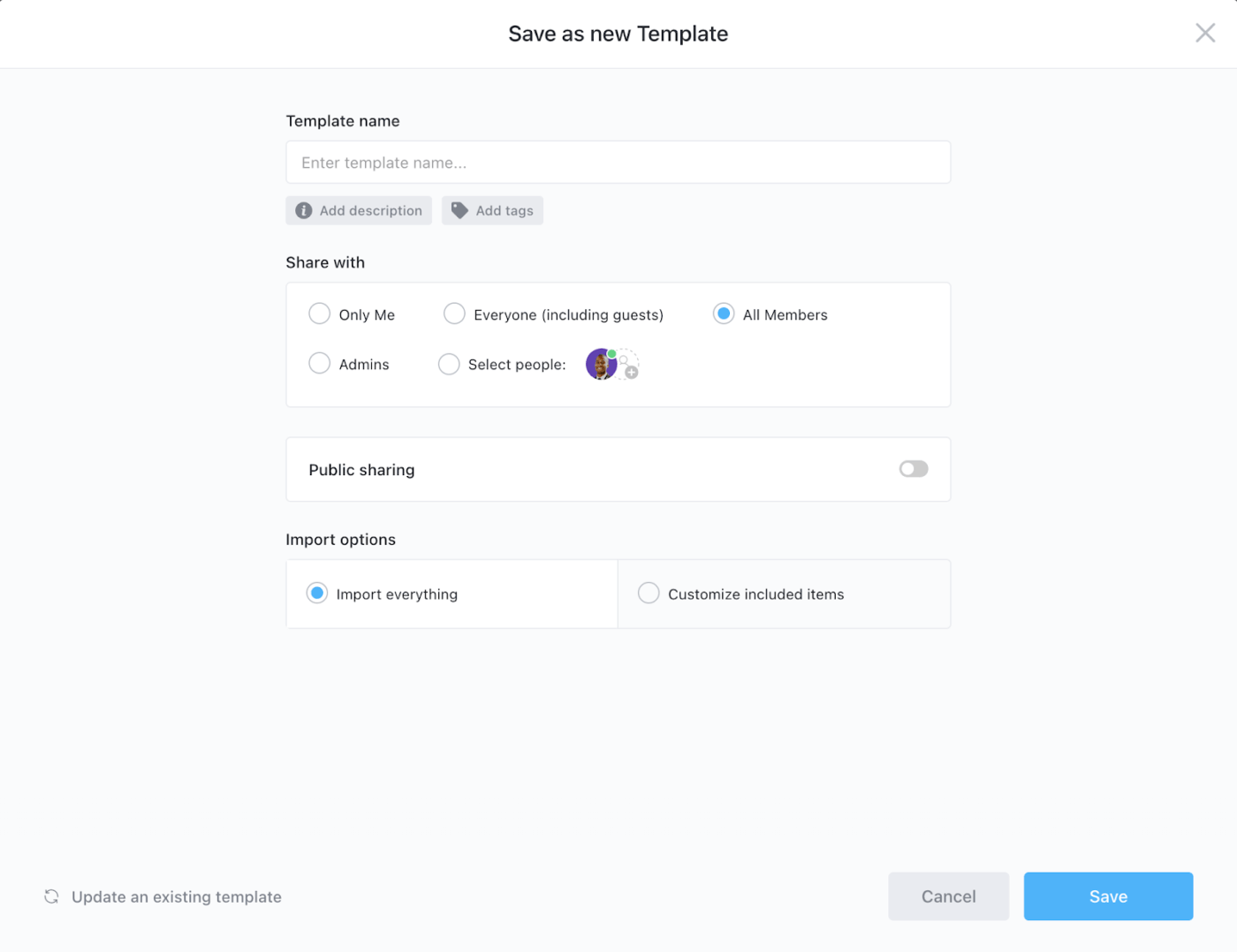

To avoid building tasks from scratch, set up a template with all key details. This can include ClickUp Custom Fields, a deadline, and an assignee (a voice artist, editor, or project manager).

You can also include Fields such as ‘language,’ ‘tone,’ or ‘style guide’ to make sure every request is clear from the start.

To keep projects moving smoothly, add a checklist inside the task that outlines the full process. For example: Script Review → Voice Recording → Editing → Publishing.

Once you’ve built a task that captures everything you need, save it as a reusable template (say, ‘Voiceover Request’).
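If it helps to picture what such a template holds, here is a small sketch of the same idea as a data structure. It is not ClickUp's data model: the `VoiceoverRequest` class, its default values, and the `from_template` helper are hypothetical, with field names taken from the examples above.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional, Tuple


@dataclass(frozen=True)
class VoiceoverRequest:
    """Hypothetical reusable template for a voiceover task."""
    title: str = "Voiceover Request"
    language: str = "English"
    tone: str = "Neutral"
    style_guide: str = ""
    assignee: Optional[str] = None
    deadline: Optional[date] = None
    checklist: Tuple[str, ...] = (
        "Script Review", "Voice Recording", "Editing", "Publishing",
    )


TEMPLATE = VoiceoverRequest()


def from_template(**details) -> VoiceoverRequest:
    """Create a new request: template defaults plus the given details."""
    return replace(TEMPLATE, **details)
```

For example, `from_template(title="Onboarding video", language="French", assignee="Sam")` yields a request that already carries the full checklist, while the template itself stays unchanged.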

📮 ClickUp Insight: 57% of people get interrupted during planned focus sessions, and 25% of those interruptions come from people. 🤦🏾‍♂️

But guess what? Many of these urgent questions and quick check-ins can be automated with AI Agents that can provide answers, status updates, and more.

ClickUp’s Autopilot Agents can do all of that and even take care of custom workflows. Just set up the triggers, and you’re good to go!

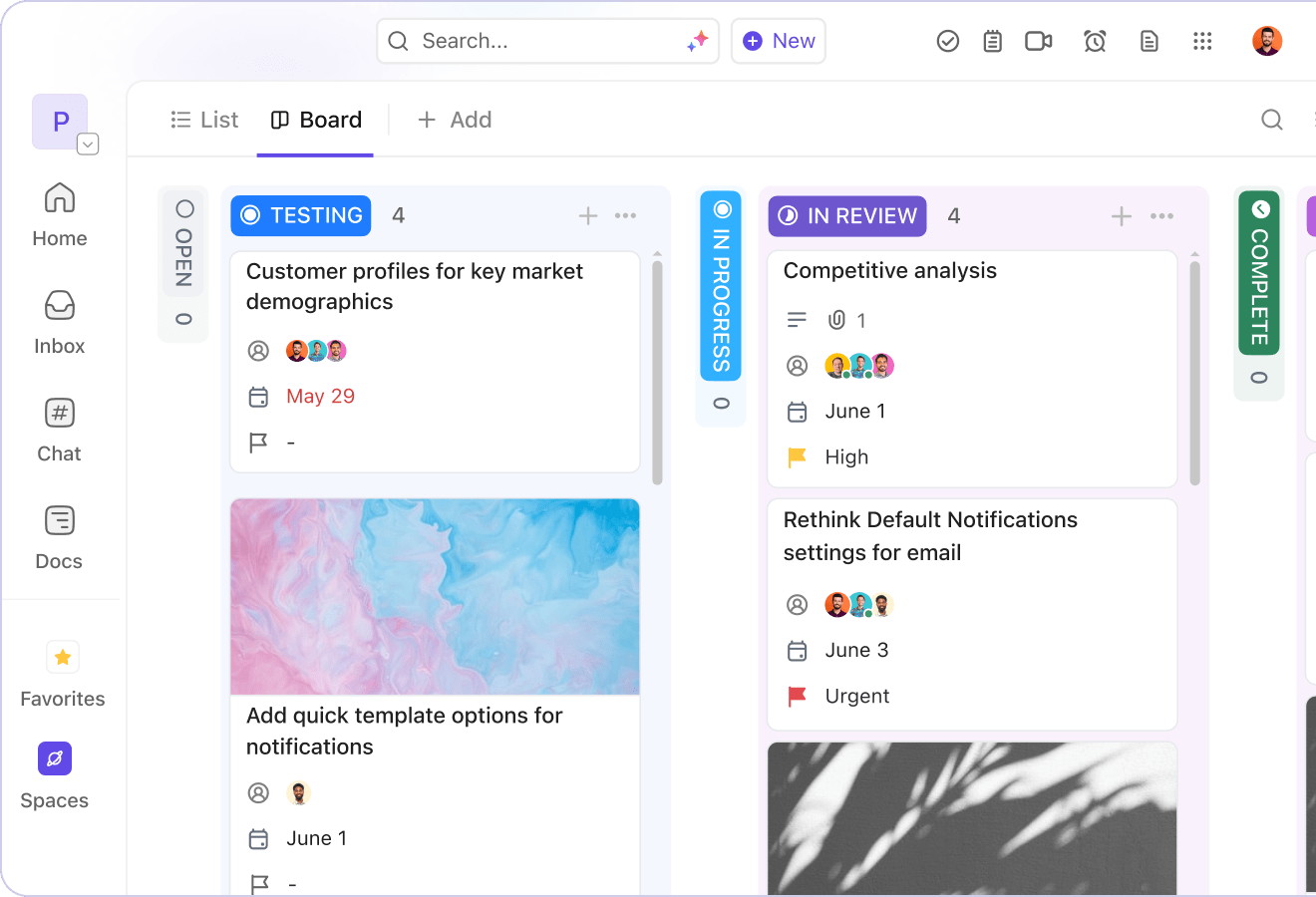

Keeping your voice generation projects on track means knowing both where each task stands and how the entire schedule looks at a glance. ClickUp Views make that possible, giving you flexible ways to visualize progress, spot bottlenecks, and stay ahead of deadlines.

Take the ClickUp Board View, for instance.

If you’re producing multiple videos at once, you can set up columns for stages like Script → Review → Voice → Editing → Publish. As each task moves forward, simply drag it from one column to the next.

This makes it easy to see when scripts pile up in ‘Review’ or when recordings aren’t making it into ‘Editing.’

Teams can collaborate right inside the board, adding comments, sharing files, or updating task details in real time. You can even set work-in-progress (WIP) limits to prevent too many projects from getting stuck.
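The logic behind a WIP limit is simple enough to sketch in a few lines. The example below is an illustration of the concept only, not ClickUp's implementation. The function name, the task names, and the limits are all made up.

```python
from collections import Counter
from typing import Dict


def over_wip_limit(task_stage: Dict[str, str], limits: Dict[str, int]) -> Dict[str, int]:
    """Return each stage whose task count exceeds its limit, with that count."""
    counts = Counter(task_stage.values())
    return {stage: counts[stage]
            for stage, limit in limits.items()
            if counts[stage] > limit}
```

With three videos sitting in ‘Review’ and a limit of two, the function reports `{"Review": 3}`, which is the signal to clear reviews before starting new scripts.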

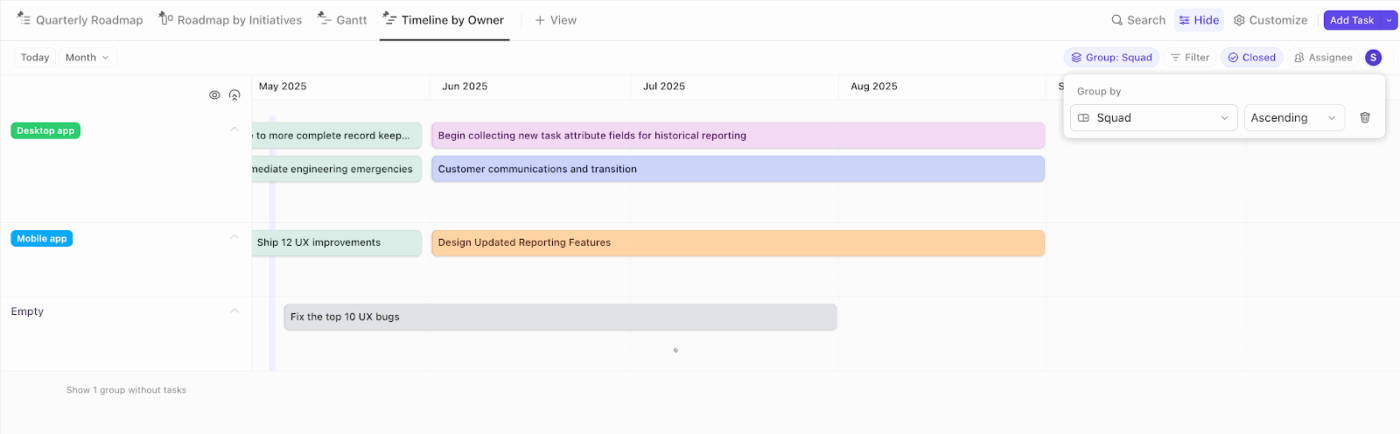

When you need a broader perspective, switch to the ClickUp Timeline View.

For instance, your production calendar shows every task with a start and end date, mapped out against dependencies. A recording session can’t begin until the script passes review, and publishing won’t happen until editing wraps.

With milestones added, you can highlight key points like ‘Final Review’ or ‘Launch Day,’ making it easier to track progress toward big deadlines.
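The dependency chain described above is, in effect, an ordering problem: each task lists what must finish first, and a valid schedule respects every such link. Here is a minimal sketch using Python's standard `graphlib` module (Python 3.9 or later). The task names are illustrative assumptions, and this is not how ClickUp computes its timeline.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each task maps to the set of tasks that must be finished before it starts.
DEPENDENCIES: Dict[str, Set[str]] = {
    "Script Review": {"Script Draft"},
    "Recording": {"Script Review"},
    "Editing": {"Recording"},
    "Publishing": {"Editing"},
}


def production_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())
```

`TopologicalSorter` raises `graphlib.CycleError` if two tasks end up waiting on each other, which is a useful sanity check before committing dates to a calendar.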

A user shares:

ClickUp is great for when there are multiple tasks/subtasks for a particular project and all members of the team need to be kept updated. A well-designed folder or list can easily replace the need for communicating via email and Slack/MS Teams. The different views also help identify priorities and create timelines effectively.

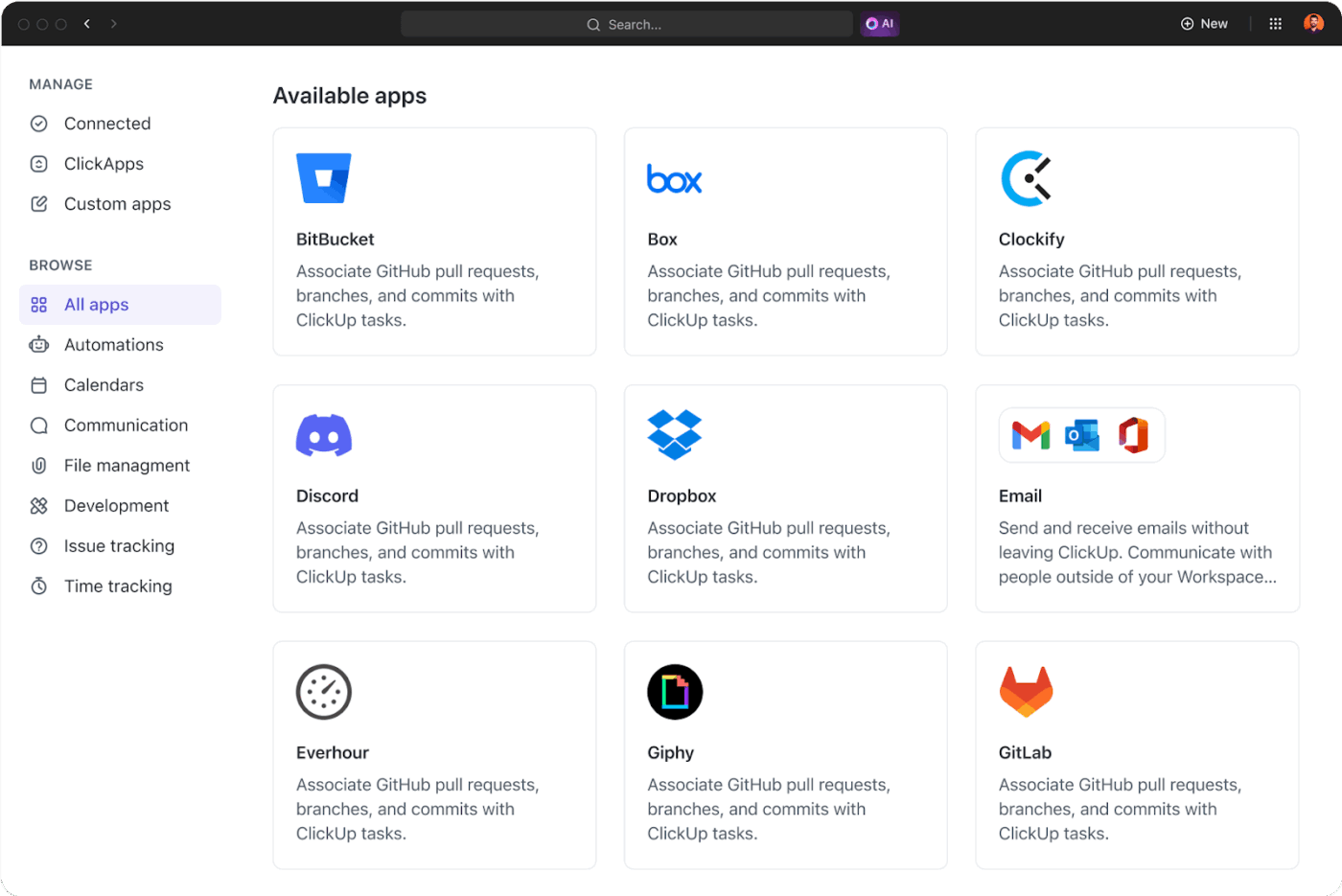

Juggling multiple tools, like Gmail for stakeholder communication and Dropbox for audio files, gets tiring fast.

ClickUp Integrations connect your tech stack directly to your workspace.

For instance, drop a Google Doc script into a ClickUp task, sync deadlines with your Google Calendar, or link recorded audio files from cloud storage so everything lives in one place. If your team manages edits in Figma, those workflows also tie right into ClickUp.

📖 Also Read: Top Free Screen Recorders With No Watermark to Use

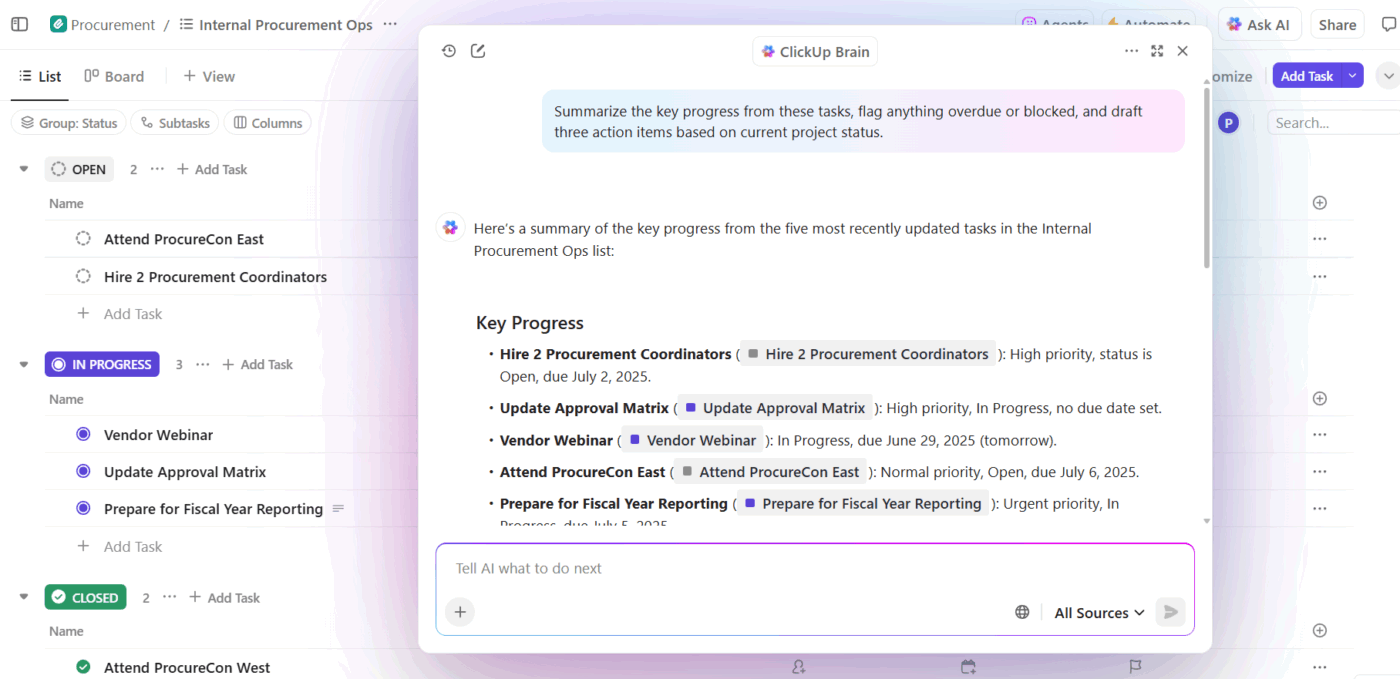

ClickUp Brain acts as your built-in project assistant, helping you stay on top of voice generation tasks.

With the AI Project Manager in charge, all you have to do is ask: ‘Which videos are still waiting on a voiceover?’ or ‘Which tasks are blocked in the editing stage?’ You’ll get instant answers from your workspace.

Plus, with ClickUp Enterprise Search, you can pull results from across your workspace and connected tools.

So if you need the updated French script buried in last week’s email thread, or the latest audio draft saved in a linked drive, ClickUp Brain surfaces it in seconds.

🚀 ClickUp Advantage: ClickUp Brain MAX transforms your workflow with workplace-wide, voice-first intelligence.

Tap into its Talk-to-Text functionality to dictate messages, tasks, or docs. This is 4x faster than typing! The speech-to-text software also lets you access premium AI models like GPT-4.1, Claude, and Gemini automatically optimized for your task.

As models become smarter and more adaptive, AI voice generation is shifting toward human-like qualities. Developers are working on voices that not only sound real but also respond with context, emotion, and intent.

Here are some key trends shaping what’s next:

📖 Also Read: Best AI Meeting Summarizers

Voice generation is no longer a niche tool. It’s quickly becoming a core part of how teams produce content, build apps, and communicate at scale.

However, project managers tend to forget that streamlining the workflow is part of the challenge. You have to manage the scripts, reviews, and publishing steps that make the final output usable.

ClickUp fits in here. You’ve got task templates for consistent requests and Board and Timeline Views to track progress. Docs are the perfect space to store scripts, while ClickUp Brain is excellent for instant updates.

With these tools by your side, you’ve got yourself a streamlined production studio.

Sign up to ClickUp for free today! 📋

Not entirely. AI voices are great for tasks like training videos, product demos, or quick content updates where speed and scalability matter. But for projects needing deep emotional nuance or artistic expression, human voice acting still gets the edge. Many teams use a mix of both depending on the project.

Modern systems learn from massive datasets and adapt to accents, tone, and pacing. With features like noise filtering, context recognition, and emotional intonation, natural-sounding AI voices are becoming more prominent. Accuracy continues to improve with ongoing training and real-time feedback loops.

Yes, but with conditions. You can legally use AI-generated voices in most commercial projects, provided you follow the licensing terms of any tool you’re using. However, voice cloning a real person’s voice without consent can raise ethical and legal issues. Always check the terms of use before publishing.

Absolutely. Many AI voice generator tools support dozens of languages and accents, making them useful for global teams, localized marketing campaigns, and accessible learning content.

© 2026 ClickUp