Top AI Prompting Techniques to Improve Output Quality

You ask AI to draft a product launch email or analyze competitors—but the result sounds flat and generic. So you rephrase, add more context, and try again. Still not right. 😕

That’s because AI is only as good as the prompt.

The difference between a generic reply and a real thinking partner comes down to how you ask.

This guide walks you through practical AI prompting techniques—and how teams across content, product, and ops can use them to get sharper, more nuanced responses.

📌 Did You Know? According to McKinsey’s Global Survey, 65% of companies reported using generative AI in at least one business function.

Prompt engineering is the practice of giving clear, specific instructions to get the desired outputs from Artificial Intelligence (AI) tools, especially large language models (LLMs) like GPT.

These models rely on natural language processing to interpret your instructions, which means the clarity of your words directly shapes the quality of their responses.

It’s pretty much like giving directions to someone who’s never been to your city. You can either say, ‘Head north and you’ll find it,’ and hope they get there. Or, you can give them the street name, landmarks, and the exact house number to look for.

In terms of prompt engineering, this means:

📊 Stat Alert: The Stanford AI Index found that:

For all the techniques here, we show you what they look like in practice in ClickUp Brain, our built-in AI assistant.

Effective prompt engineering is part art, part science. Only practice can help you master the art, but to learn the science (i.e., the techniques), scroll down and explore how to ask AI a question 👇

Zero-shot prompting is the simplest technique for prompt engineering. You give the AI a direct prompt to perform a task, but no examples of how to do it.

Since modern large language models are trained on diverse patterns of language, reasoning, and knowledge, they can perform specific tasks independently, even without explicit examples (this is known as zero-shot learning).

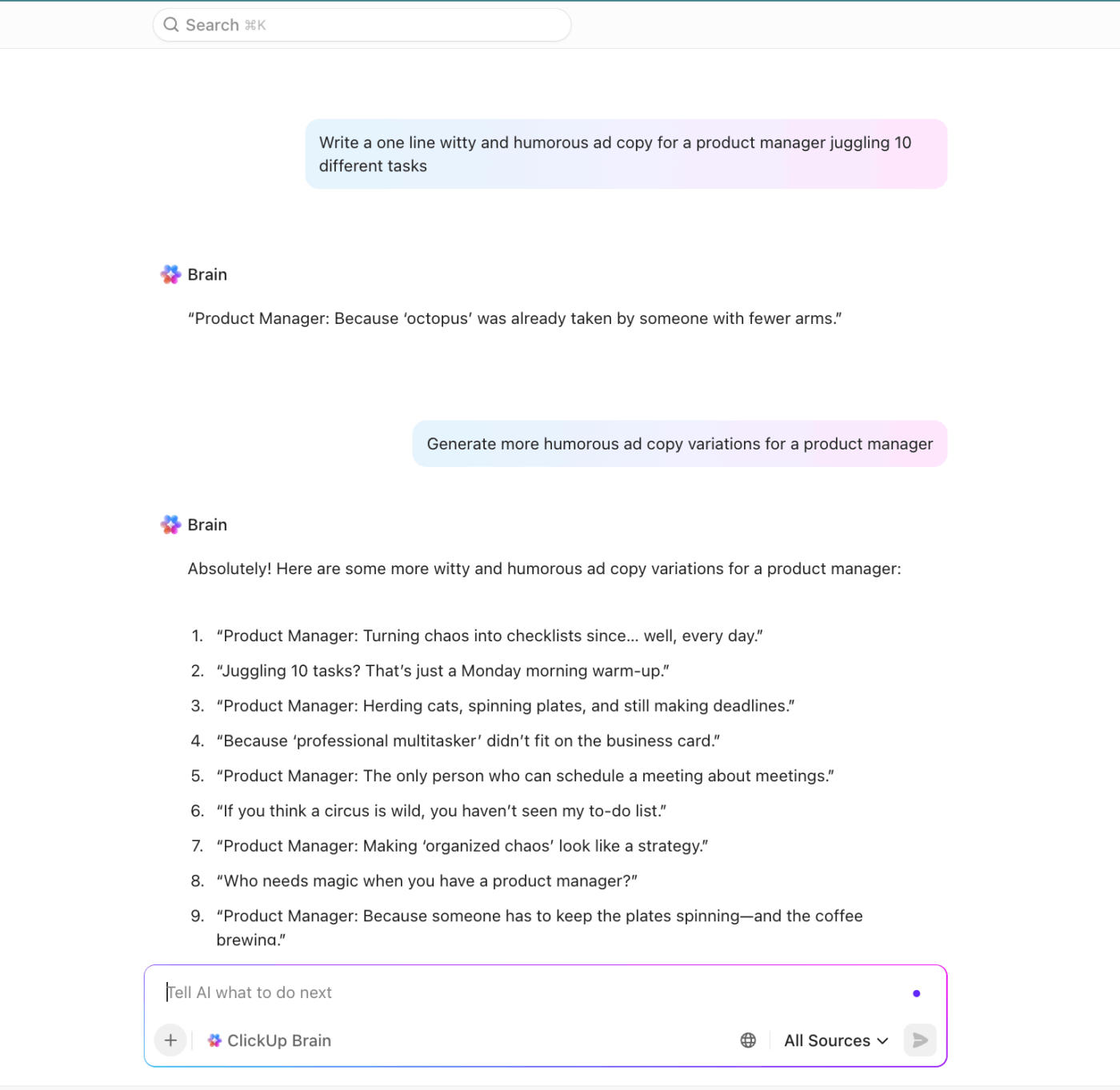

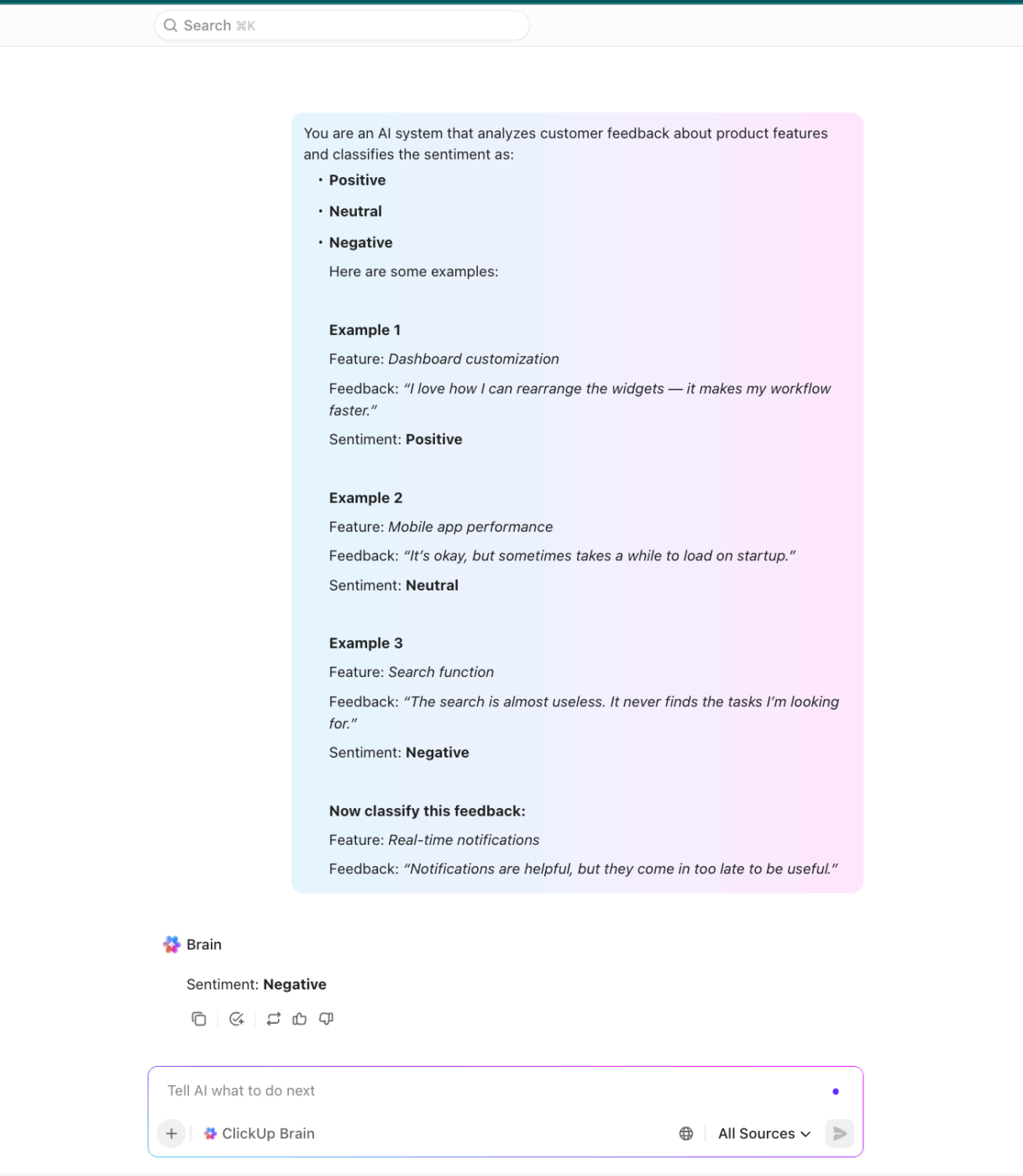

For example, consider this prompt we gave to ClickUp Brain:

Notice how the AI immediately produced the ad copy without being shown any examples of what witty copy looks like? That’s zero-shot prompting at work.

💡 Pro Tip: Use the zero-shot prompting technique when you need to get something done fast and the result doesn’t have to be perfect.

For example, writers can use it for creative writing and generate a quick first draft that they can refine later.

Or use this technique to ask factual questions or generate summaries.

Sander Schulhoff, also known as the “OG prompt engineer,” highlights that the few-shot prompting technique can improve accuracy from 0% to 90% in controlled classification tests.

Unlike zero-shot, few-shot prompting requires you to give examples to the AI before asking it to complete a similar task. These ‘shots’ demonstrate the format or logic the model should follow to provide the expected answer.

For instance, let’s say you want AI to classify social media comments for sentiment analysis. Instead of directly asking it to ‘analyze sentiment,’ you can guide AI using labeled examples first, like below:

As you can see above, the examples served as instructions to help the AI system understand how to label customer feedback.
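To make the structure of few-shot prompting concrete, here is a minimal Python sketch that places labeled “shots” ahead of a new comment. The comments, labels, and the `build_few_shot_prompt` helper are hypothetical placeholders; you would paste or send the resulting text to whichever AI tool you use.

```python
# A minimal few-shot prompt builder for sentiment labeling.
# The example comments and labels are hypothetical placeholders.
EXAMPLES = [
    ("Love the new dashboard, saves me so much time!", "Positive"),
    ("The app keeps crashing when I upload files.", "Negative"),
    ("Does this integrate with Google Calendar?", "Neutral"),
]


def build_few_shot_prompt(examples, new_comment):
    """Prepend labeled 'shots' so the model can copy their format and logic."""
    lines = ["Classify the sentiment of each comment as Positive, Negative, or Neutral.", ""]
    for text, label in examples:
        lines.append(f"Comment: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The new comment ends with an open 'Sentiment:' slot for the model to fill in.
    lines.append(f"Comment: {new_comment}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(EXAMPLES, "Support replied fast, but the fix didn't work.")
print(prompt)
```

Keeping every shot in the identical “Comment / Sentiment” shape is what lets the model infer the pattern without a long instruction.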

💡 Pro Tip: Few-shot prompting works best when your examples are short and clear. If you overload the AI with too many examples or give conflicting ones, the output is bound to be affected.

The right way: Stick to 3-5 simple, clear, and consistent examples for short text tasks; longer-form tasks may require fewer but richer examples. That way, the model is more likely to generate the desired output.

📌 Quick Note: Google researchers created the Nano Banana Prompting Guide to teach LLMs how to mimic specific behaviors using just a few carefully chosen examples.

It shows that even tiny, high-quality samples can dramatically boost the model’s accuracy, suggesting that the quality of examples often matters more than quantity.

In the chain of thought prompting technique, you essentially tell the AI: ‘Don’t just give me the answer. Walk me through how you got there.’

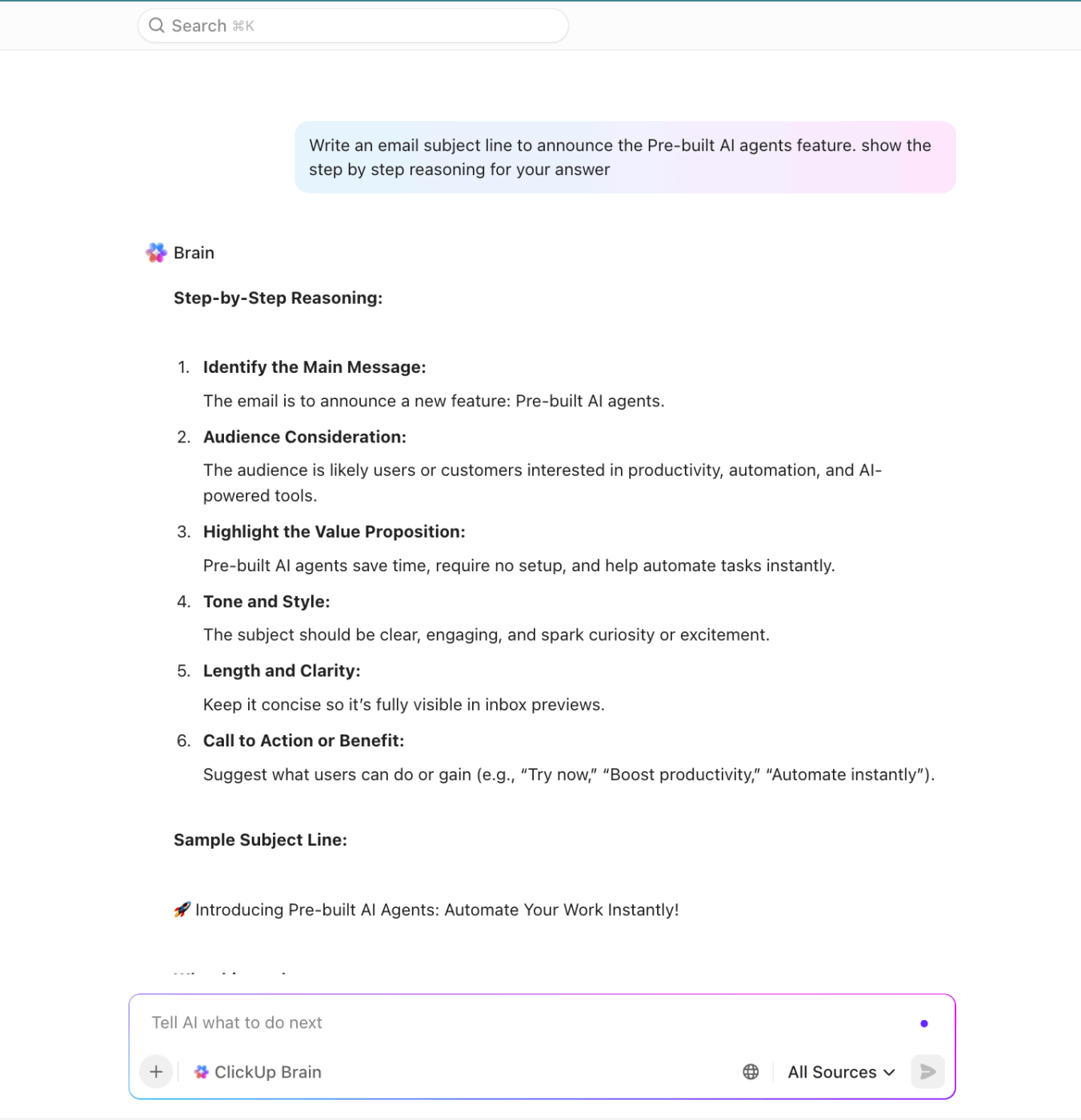

Let’s say you want to draft one email subject line to announce a new feature in your productivity app: task prioritization. Here’s how you can use chain-of-thought prompting to generate a relevant email subject line:

By asking AI to explain its reasoning process, you can review the steps it followed and pinpoint where the AI might have gone wrong when brainstorming the email subject line.

Not only does this help you trust the final answer more, but if you want to reprompt, you can do so with clearer instructions.

💡 Pro Tip: Generating a step-by-step thought process is time-consuming. For tasks where speed is critical, the overhead of chain of thought prompting can be a major disadvantage.

Moreover, the reasoning path generated by an AI doesn’t always reflect its true internal process. As you can see in the above example, the AI provided us with a ‘summary’ of its reasoning, and not the actual step-by-step breakdown. This can create a false sense of transparency, especially in more complex tasks.

So, rely on chain-of-thought prompting only for problems that truly need structured reasoning (e.g., multi-step math, logic puzzles, or analytical breakdowns). For straightforward or time-sensitive tasks, a direct prompt is more effective.

When you ask AI a question, it usually takes one reasoning path and gives you the most likely answer. But what if that path isn’t the best one?

That’s exactly what self-consistency prompting tackles. With this technique, you ask the AI to generate multiple reasoning paths and then pick the most reliable and relevant answer.

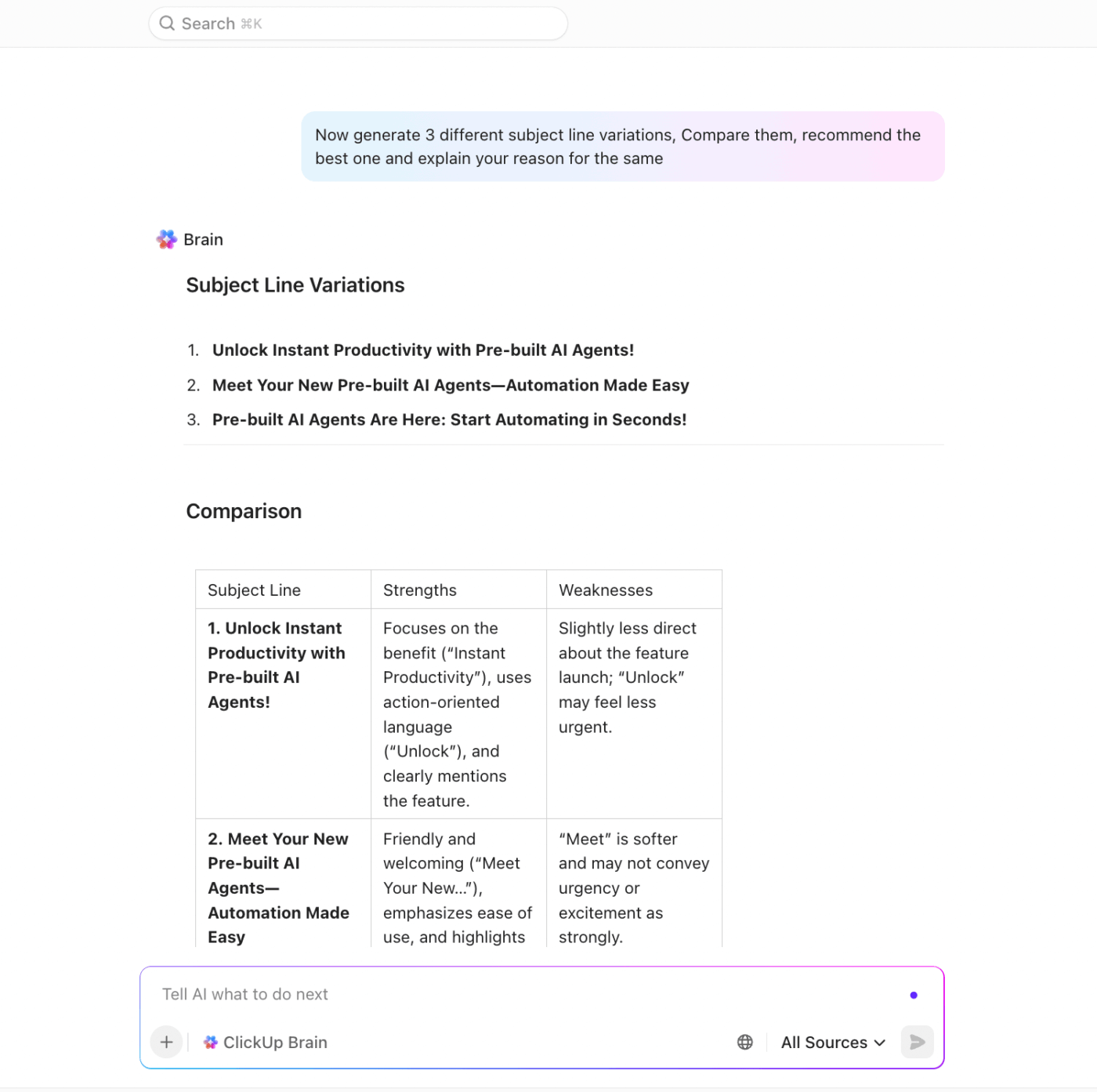

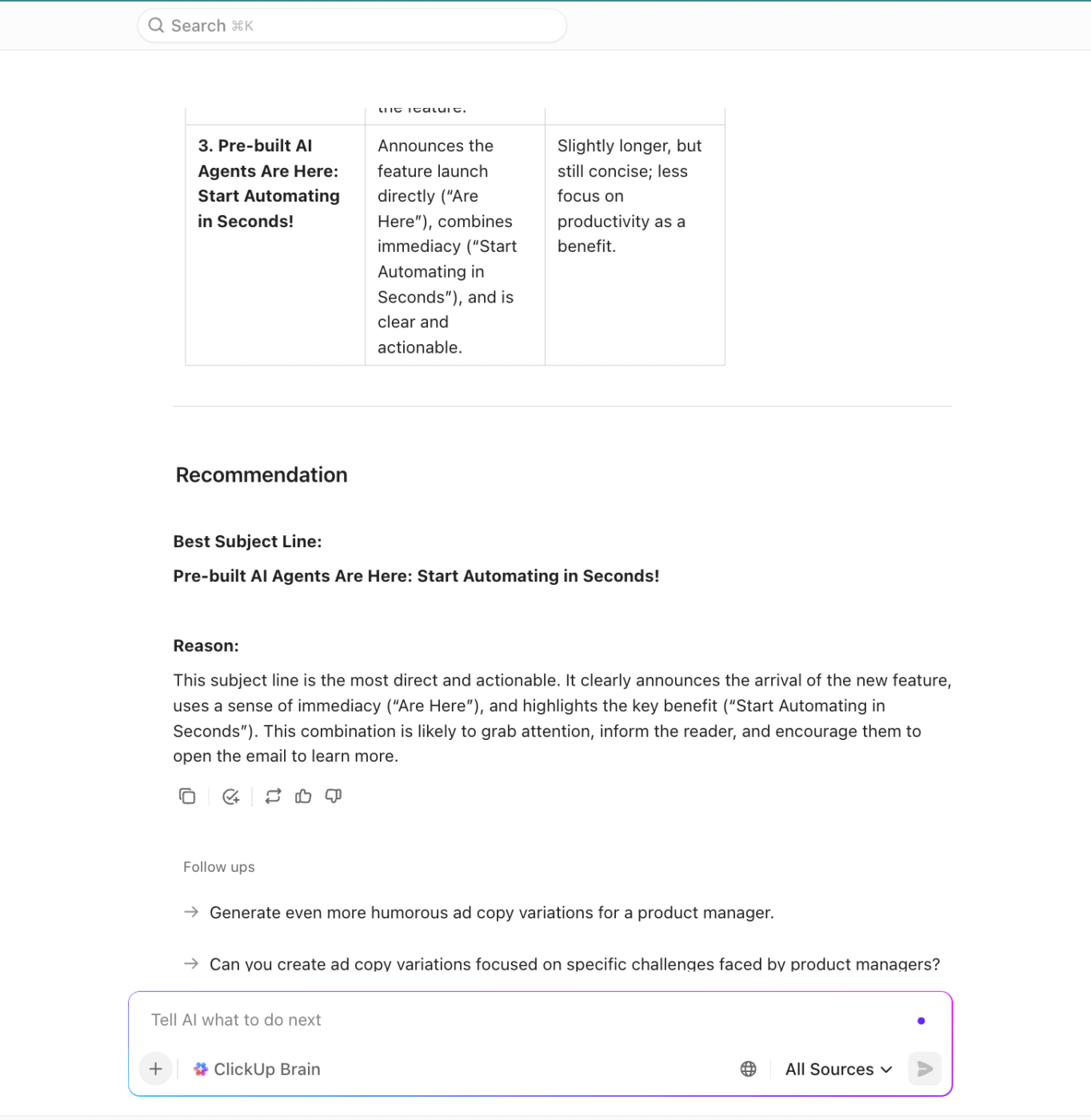

Let’s use the same email subject line example to understand this. Instead of asking AI to generate a subject line and explain how it got there (like we did in CoT), we asked it to generate multiple subject lines and identify the best option in one go:

If instructed, AI can compare multiple generated options and select the strongest one.
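The example above asks for several options in a single response. In its original research form, self-consistency instead samples the model several times independently and keeps the answer that comes up most often. Here is a minimal sketch of that voting step, assuming a hypothetical `sample_fn` that returns one sampled answer per call; canned strings stand in for real model output.

```python
from collections import Counter


def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample several independent answers and return the most common one.

    sample_fn is a hypothetical stand-in for a model call made with
    non-zero temperature, so each call can follow a different reasoning path.
    """
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples  # answer plus the share of samples that agreed


# Hypothetical canned outputs standing in for five sampled model responses.
canned = iter(["Prioritize smarter", "Work on what matters", "Prioritize smarter",
               "Prioritize smarter", "Your to-do list, sorted"])
best, agreement = self_consistent_answer(lambda p: next(canned), "Suggest a subject line...")
print(best, agreement)  # Prioritize smarter 0.6
```

Exact-match voting works best when answers are short and comparable, such as a label or a number. For free-form text like subject lines, independent samples rarely match word for word, which is why asking the model to compare its own options in one go, as in the example above, is a practical variant.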

💡 Pro Tip: To get the best results, add one final instruction to your self-consistency prompt: ‘Explain why the chosen answer is the best.’

This prompts the AI to justify its conclusion, making the answer more transparent and easier for you to verify.

📮 ClickUp Insight: 47% of our survey respondents have never tried using AI to handle manual tasks, yet 23% of those who have adopted AI say it has significantly reduced their workload.

This contrast might be more than just a technology gap. While early adopters are unlocking measurable gains, the majority may be underestimating how transformative AI can be in reducing cognitive load and reclaiming time. 🔥

ClickUp Brain bridges this gap by seamlessly integrating AI into your workflow. From summarizing threads and drafting content to breaking down complex projects and generating subtasks, our AI can do it all. No need to switch between tools or start from scratch.

💫 Real Results: STANLEY Security reduced time spent building reports by 50% or more with ClickUp’s customizable reporting tools, freeing their teams to focus less on formatting and more on forecasting.

Instead of generating several complete answers and then picking one, tree-of-thoughts prompting has the AI break the problem into steps. At each step, the AI generates several possibilities, evaluates them, and keeps the most promising one before moving on and generating the final response.

Sounds complex? Let’s revisit our email subject line example with a small tweak to the prompt.

Example Prompt:

Role & Task: You are a senior product marketer. Use Tree of Thoughts to create email subject lines announcing our Pre-built AI Agents feature.

Constraints

Process (ToT)

Output Format (no hidden chain-of-thought):

Here, we gave the AI system constraints, defined the process, and even specified the output format.
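Under the hood, Tree of Thoughts is a search: propose candidate “thoughts” at each decision point, score the partial paths, and expand only the most promising ones. The Python sketch below runs a breadth-first version with a fixed beam width. `propose` and `score` are hypothetical stand-ins for model calls, and the toy options and ratings are made up for illustration. Each level of the tree handles exactly one decision (audience, then benefit).

```python
def tree_of_thoughts(root, propose, score, depth=2, beam_width=2):
    """Breadth-first Tree of Thoughts with a fixed beam.

    propose(path) returns candidate next thoughts for a partial path;
    score(path) rates a partial path. Both would be model calls in practice.
    """
    frontier = [[root]]
    for _ in range(depth):
        candidates = [path + [thought] for path in frontier for thought in propose(path)]
        # Keep only the most promising partial paths before expanding further.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


# Hypothetical toy decision points: level 1 picks an audience, level 2 a benefit.
OPTIONS = {
    1: ["ops leaders", "solo founders"],
    2: ["faster setup", "fewer dependencies"],
}
RATINGS = {"ops leaders": 3, "solo founders": 1, "faster setup": 2, "fewer dependencies": 1}


def propose(path):
    return OPTIONS[len(path)]


def score(path):
    return sum(RATINGS.get(step, 0) for step in path)


best = tree_of_thoughts("Pre-built AI Agents launch", propose, score)
print(best)  # ['Pre-built AI Agents launch', 'ops leaders', 'faster setup']
```

A wider beam explores more branches at the cost of more model calls; a beam of 1 collapses into a greedy, one-path-at-a-time search.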

💡 Pro Tip: The Tree of Thoughts works best when each decision point is clear and independent. So, if you include multiple steps within a single decision point (e.g., asking AI to identify the audience and benefit in the same step), the branches get messy and the output loses focus.

👀 Did You Know? When using the Tree of Thoughts framework, GPT-4’s success on the “Game of 24” task jumps from just 4% with standard chain-of-thought prompting to 74% using Tree of Thoughts.

That 70-point leap happened without changing the model itself, just the prompting method. It shows how your prompt can matter as much as what model you use.

In this prompt engineering technique, you break the task into smaller subtasks arranged in a logical sequence. Each step builds on the last, and the output from one stage becomes the input for the next.

Let’s revisit our email subject line example (one last time) and use prompt chaining to see how it affects the output. We’ll first ask the AI to extract the key benefits for our target audience:

Example Prompt:

Goal: Write an email subject line to announce Pre-built AI Agents

Step 1: Extract key benefits

List 5 core benefits of our new Pre-built AI Agents for product and operations leaders.

(Output: faster setup, instant automation, fewer dependencies, standardization, quicker launches)

Step 2: Generate angles

Suggest 5 messaging angles for an email subject line based on these benefits.

(Output: speed, ease, productivity, reliability, innovation)

Step 3: Write subject lines

Write 3 subject lines per angle. Keep under 55 characters.

(Output: “Pre-built AI Agents — Ready When You Are” etc.)

Step 4: Pick the best

Score these on clarity and relevance. Return the top 3 with preheaders.

By chaining the prompts, you’re essentially guiding AI through the same process you’d follow manually:

Extract key benefits ➡️ Generate messaging angles ➡️ Write subject lines ➡️ Choose the best option
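The same chain can be sketched in a few lines of Python: each step is a prompt template, and the output of one call is slotted into the next. `call_model` is a hypothetical stand-in for your AI tool; the stub used here just records prompts and returns placeholders, so you can inspect what each step would receive.

```python
def run_chain(steps, call_model, initial_input):
    """Run prompts in sequence, feeding each output into the next prompt.

    Each step is a template with an {input} slot; call_model is a
    hypothetical stand-in for whatever model API you use.
    """
    output = initial_input
    for template in steps:
        output = call_model(template.format(input=output))
    return output


STEPS = [
    "List 5 core benefits of {input} for product and operations leaders.",
    "Suggest 5 messaging angles for an email subject line based on: {input}",
    "Write 3 subject lines per angle, each under 55 characters: {input}",
    "Score these on clarity and relevance and return the top 3 with preheaders: {input}",
]

# Stub model: records each prompt and returns a placeholder for that step's output.
sent = []


def fake_model(prompt):
    sent.append(prompt)
    return f"<output of step {len(sent)}>"


final = run_chain(STEPS, fake_model, "Pre-built AI Agents")
print(final)  # <output of step 4>
```

Because each step is a separate call, you can review or edit an intermediate output (say, the messaging angles) before letting the chain continue.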

💡 Pro Tip: Use prompt chaining to reduce AI’s ‘cognitive overload’. By breaking a big task into smaller steps, you guide the AI through the process, making the final output more polished and aligned than what a single zero-shot prompt typically produces.

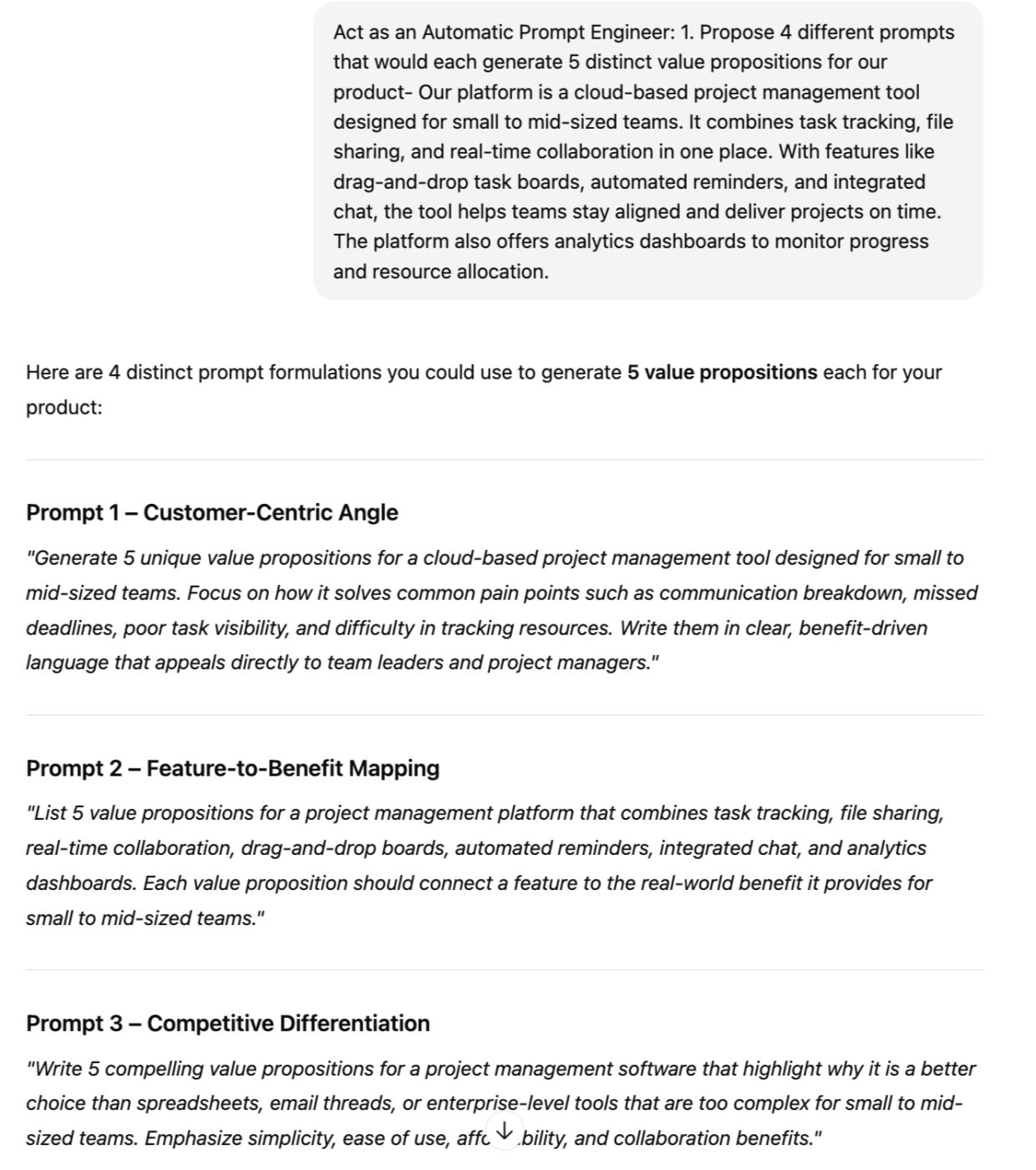

APE (Automatic Prompt Engineer) is an advanced technique where a large language model helps you generate and refine new prompts optimized for the same AI model. Think of it as the AI’s way of saying, ‘Tell me what you want, and I’ll figure out the best way to ask the question that will get you the ideal answer.’

In the APE prompting technique, you ask the AI to:

For example, let’s say you’re preparing to launch a new feature called ‘Custom Dashboards’ for your SaaS product. You want to create a compelling messaging guide for your team. However, you’re struggling to articulate the message in a way that would resonate with your readers.

In such a case, you can ask AI to generate a detailed prompt for itself:

Example Prompt: You are an Automatic Prompt Engineer.

Task: Create a prompt that will help generate a messaging guide for our new feature, Custom Dashboards.

Your steps:

The AI will then give you a list of prompts that you can refine and run in order to create a high-quality messaging guide:

💡 Pro Tip: Create a scoring rubric to evaluate different prompts generated by the AI. You can share this rubric with the model and ask it to score each individual prompt accordingly. This will make it easier for you to assess prompt options based on your criteria.
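In the APE research, the model proposes candidate instructions, each candidate is scored, and the highest-scoring one is kept. Here is a minimal sketch of the selection half, using a weighted rubric like the one suggested above. The rubric weights, the candidate prompts, and the keyword-based `toy_rate` function are all hypothetical; in practice, the scores would come from the model or a human reviewer.

```python
def pick_best_prompt(candidates, rubric, rate):
    """Return the candidate prompt with the highest weighted rubric score, plus all scores."""
    scores = {
        prompt: sum(weight * rate(prompt, criterion) for criterion, weight in rubric.items())
        for prompt in candidates
    }
    best = max(candidates, key=scores.get)
    return best, scores


RUBRIC = {"clarity": 2, "audience fit": 2, "specificity": 1}  # hypothetical weights

CANDIDATES = [
    "Write a messaging guide for Custom Dashboards.",
    "You are a product marketer. Write a messaging guide for Custom Dashboards aimed at "
    "ops managers, with value props, proof points, and objection handling.",
]


def toy_rate(prompt, criterion):
    # Hypothetical keyword checks standing in for a model- or human-assigned 1-5 score.
    signals = {
        "clarity": prompt.endswith("."),
        "audience fit": "ops managers" in prompt,
        "specificity": "objection handling" in prompt,
    }
    return 5 if signals[criterion] else 1


best, scores = pick_best_prompt(CANDIDATES, RUBRIC, toy_rate)
print(scores[CANDIDATES[0]], scores[CANDIDATES[1]])  # 13 25
```

Weighting the criteria makes your priorities explicit, so two people reviewing the same candidates are more likely to agree on the winner.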

As per a research paper titled Large Language Models are Human-Level Prompt Engineers, “We show that APE-engineered prompts can be applied to steer models toward truthfulness and/or informativeness, as well as to improve few-shot learning performance by simply prepending them to standard in-context learning prompts.”

While ‘ReAct’ sounds like what you’d do when you spill coffee on your laptop, in prompt engineering, it’s short for Reason + Act. This is another advanced prompting technique, where the AI model alternates between thinking (reasoning) and doing (taking action).

Instead of giving a final answer immediately, the AI is prompted to:

This process is repeated in a loop until the AI can confidently arrive at a well-supported answer.

Let’s say you’re planning to launch a new ‘dashboards’ feature and you want to understand what your competitor is saying about a similar feature on their website. For this example, assume we’re your competitor and you want to know about ClickUp Dashboards in detail.

With ReAct, you’d structure your prompt something like this:

Example Prompt: You are a competitive product marketer using the ReAct (Reason + Act) approach.

Your task: Research and summarize how ClickUp positions its Dashboards feature on its website.

Follow this loop until done:

Finally, provide a structured summary with:

This prompt guides the AI through a logical, step-by-step process without veering off topic. Now, let’s see how AI responded to this prompt:

💡 Pro Tip: ReAct prompting works best when the AI can access reliable online information and make accurate observations. If the ‘Act’ step pulls in noisy or stale data, the reasoning that follows will inevitably be flawed.
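Mechanically, ReAct is a loop: the model writes a thought and an action, your code runs the action with a tool, and the result is appended as an observation before the next turn. The sketch below assumes a hypothetical text convention (`Action: tool[argument]` and `Finish[answer]`), a scripted stand-in for the model, and a fake `search` tool, so it runs offline and makes no claims about any real web page.

```python
import re


def react_loop(call_model, tools, question, max_turns=5):
    """Alternate Thought/Action steps with tool Observations until the model finishes.

    call_model(transcript) is a hypothetical model call that returns text ending in
    either 'Action: tool_name[argument]' or 'Finish[answer]'.
    """
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):
        step = call_model(transcript)  # the 'Reason' half: thought plus chosen action
        transcript += step + "\n"
        done = re.search(r"Finish\[(.*)\]", step)
        if done:
            return done.group(1), transcript
        action = re.search(r"Action: (\w+)\[(.*)\]", step)
        if not action:
            raise ValueError("Model produced neither an action nor a final answer")
        name, arg = action.groups()
        observation = tools[name](arg)  # the 'Act' half: run the tool
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("No final answer within max_turns")


# Hypothetical scripted model and tool, so the loop runs without any network access.
SCRIPT = iter([
    "Thought: I should look up the Dashboards page.\nAction: search[ClickUp Dashboards]",
    "Thought: I have enough to summarize.\nFinish[structured summary goes here]",
])
tools = {"search": lambda query: f"(results for {query})"}
answer, log = react_loop(lambda t: next(SCRIPT), tools, "How does ClickUp position Dashboards?")
print(answer)  # structured summary goes here
```

The `max_turns` cap keeps a confused model from looping forever, and the full transcript doubles as an audit trail of what the model looked at.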

When AI pauses to explicitly gather or construct a body of knowledge first, it tends to be more accurate and consistent.

This is the principle of Generate Knowledge Prompting: you give the AI multiple prompts so that it first surfaces relevant facts and then uses them to generate its response.

Sounds confusing?

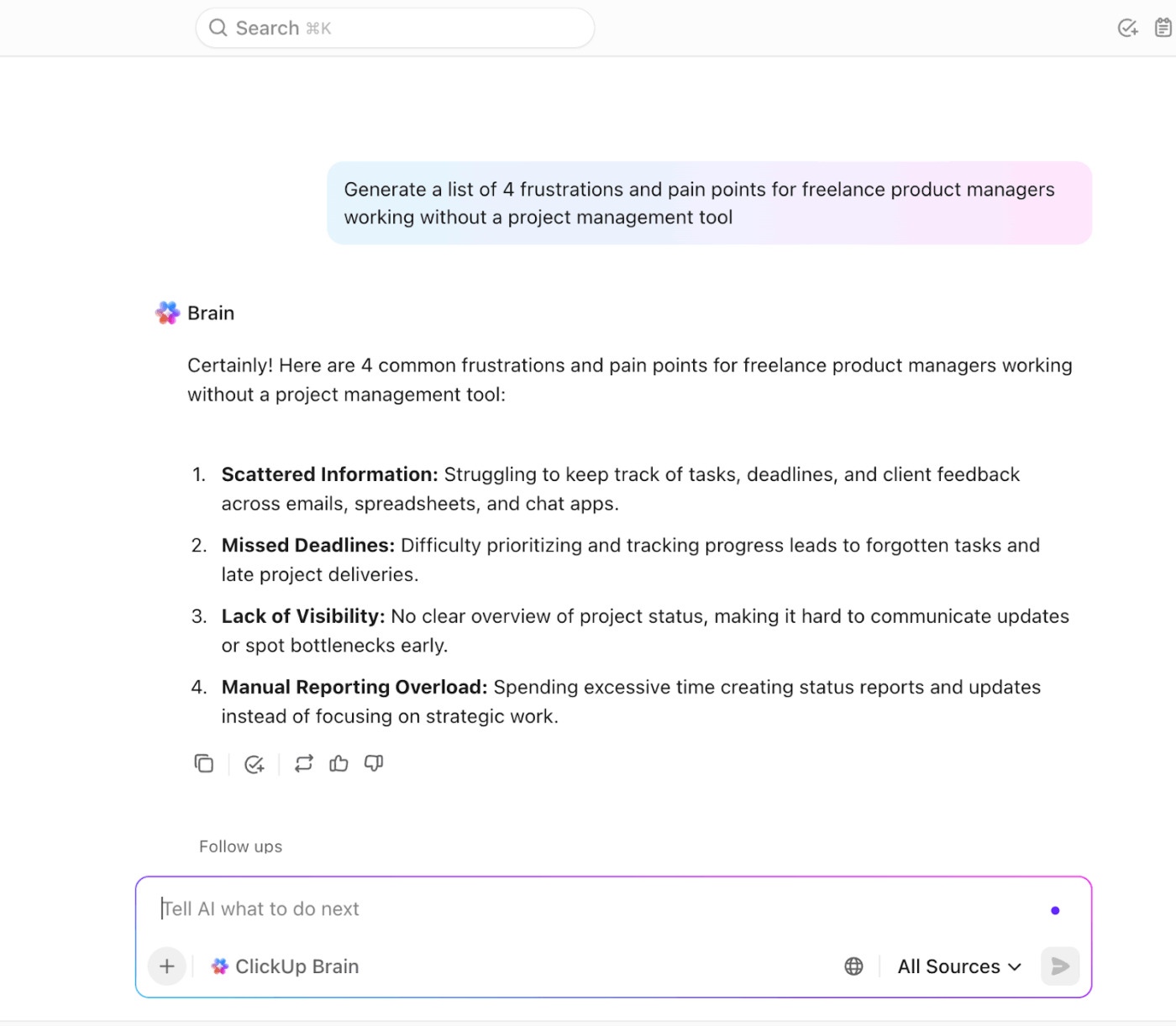

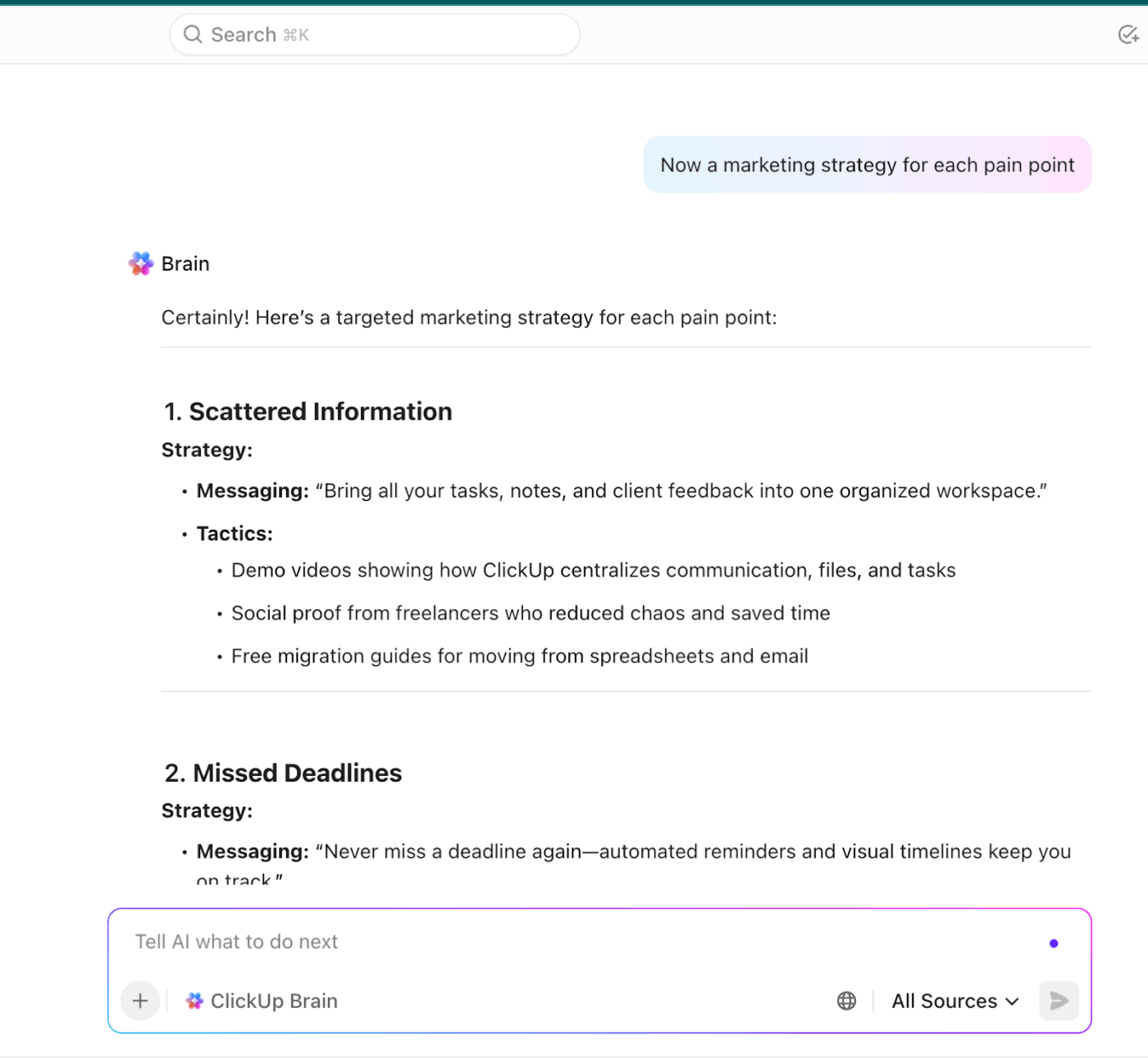

Consider this example: You’re launching a new project management tool for freelancers. You need to create a marketing strategy, but you’re not sure which pain points to focus on to make your message resonate.

Using Generate Knowledge Prompting, you can first tell AI to give you a list of relevant insights about your target audience’s frustrations:

Using this generated insight as the input for your next prompt, you’ll guide the AI to suggest an ideal marketing strategy:

The final output is thus built on transparent, concrete logic.
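Structurally, Generate Knowledge Prompting is two calls: one that surfaces insights and one that answers using only those insights. Here is a minimal Python sketch, assuming a hypothetical `call_model` function; the stub returns placeholder insights rather than real research.

```python
def generate_then_answer(call_model, topic, task, n_facts=4):
    """Two-stage Generate Knowledge prompting: surface facts first, then answer with them."""
    knowledge = call_model(
        f"List {n_facts} concise, specific insights about {topic}. One per line."
    )
    answer_prompt = (
        f"Use only the insights below to {task}.\n"
        f"Insights:\n{knowledge}\n"
        "Reference the insight each recommendation relies on."
    )
    return call_model(answer_prompt), answer_prompt


# Stub model: the first call returns placeholder insights, the second a placeholder strategy.
calls = []


def fake_model(prompt):
    calls.append(prompt)
    return "- insight A\n- insight B" if len(calls) == 1 else "<strategy>"


result, second_prompt = generate_then_answer(
    fake_model, "freelancers' project management frustrations", "suggest a marketing strategy"
)
print(result)  # <strategy>
```

Asking the second prompt to cite which insight each recommendation uses makes it easy to spot advice that isn’t backed by anything the first step produced.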

💡 Pro Tip: Use Generate Knowledge Prompting when you need a well-researched and authoritative AI response. This is perfect for writing articles, creating detailed reports, or even preparing for a presentation where data accuracy is critical.

Active prompting is a technique that turns AI into an active learner.

Instead of guessing which examples (or shots) the AI needs to learn from, you provide a diverse set of unlabeled examples, and the AI identifies the most challenging or ambiguous ones on its own. It then asks you to provide the correct answer for only those cases, which become the examples it learns from.
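In the research version of active prompting, “most challenging or ambiguous” is measured by sampling several answers for each item and checking how much they disagree. Here is a minimal sketch of that selection step using made-up customer objections; the sampled answers and category labels are hypothetical, and in practice they would come from repeated model calls.

```python
from collections import Counter


def disagreement(answers):
    """Fraction of sampled answers that differ from the most common one (0 = all agree)."""
    top_count = Counter(answers).most_common(1)[0][1]
    return 1 - top_count / len(answers)


def pick_for_annotation(pool, sample_answers, k=2):
    """Return the k items the model is least sure about, for a human to label."""
    return sorted(pool, key=lambda item: disagreement(sample_answers(item)), reverse=True)[:k]


# Hypothetical: five sampled model answers per customer objection.
SAMPLES = {
    "Too expensive for our team size": ["price", "price", "price", "price", "price"],
    "We already use spreadsheets": ["switching cost", "habit", "price", "habit", "fit"],
    "Not sure it integrates with our CRM": ["integration", "integration", "security",
                                            "integration", "integration"],
}
to_label = pick_for_annotation(list(SAMPLES), SAMPLES.get, k=1)
print(to_label)  # ['We already use spreadsheets']
```

Your labeled answers for the selected items then become the few-shot examples for future prompts, so your effort goes where the model is most confused.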

To understand this easily, imagine you want to create a framework that will help your sales team handle common customer objections to a new product feature.

You already have a list of raw customer feedback and objections, and you want to train AI to write effective, on-brand responses the sales team can reuse.

Example Prompt: You are a senior product marketing strategist researching user problems.

Task: Generate 4 clear frustrations or pain points experienced by freelance product managers working without a project management tool.

Context: They juggle multiple clients, work remotely, and often handle projects alone without dedicated support teams.

Constraints:

Output format:

💡 Pro Tip: Store successful prompts with notes on what worked and why. This builds an internal library of “prompt patterns” you can reuse and adapt across tasks, just like reusable code modules.

Ready to put your prompt engineering skills to use?

Let’s look at the common prompt engineering examples that you can immediately apply at work.

If you work in content, you’re basically running a creative assembly line. It can be tiresome, but much less so when you know how to craft effective prompts.

Instead of telling AI to ‘create a blog outline on [topic],’ you can break this process into sub-steps and carry them out sequentially:

Example Prompt: Give me 5 topic ideas for a blog on beating the Monday blues. It is for mid-level managers. Also share the frameworks you’ve used for each title.

Next, break the topic into H2, H3, and H4 tags and tell me what I should cover under each.

Take 3-4 meta-titles and meta-descriptions from your previous articles, and use them as examples or ‘shots’ to train the AI on writing a meta-description.

If you’ve got an underperforming blog that you want to optimize for search engines, simply feed it into AI and ask the model to ‘mine’ it for keywords that you may have missed. Once the AI generates this list (i.e., generates knowledge), you can instruct it to incorporate the generated knowledge naturally into the text.

While the right prompt can help you create a great blog or social media post, it’s still a hassle to switch between tools to generate content and edit/format it for the publisher. ClickUp offers a smart way out.

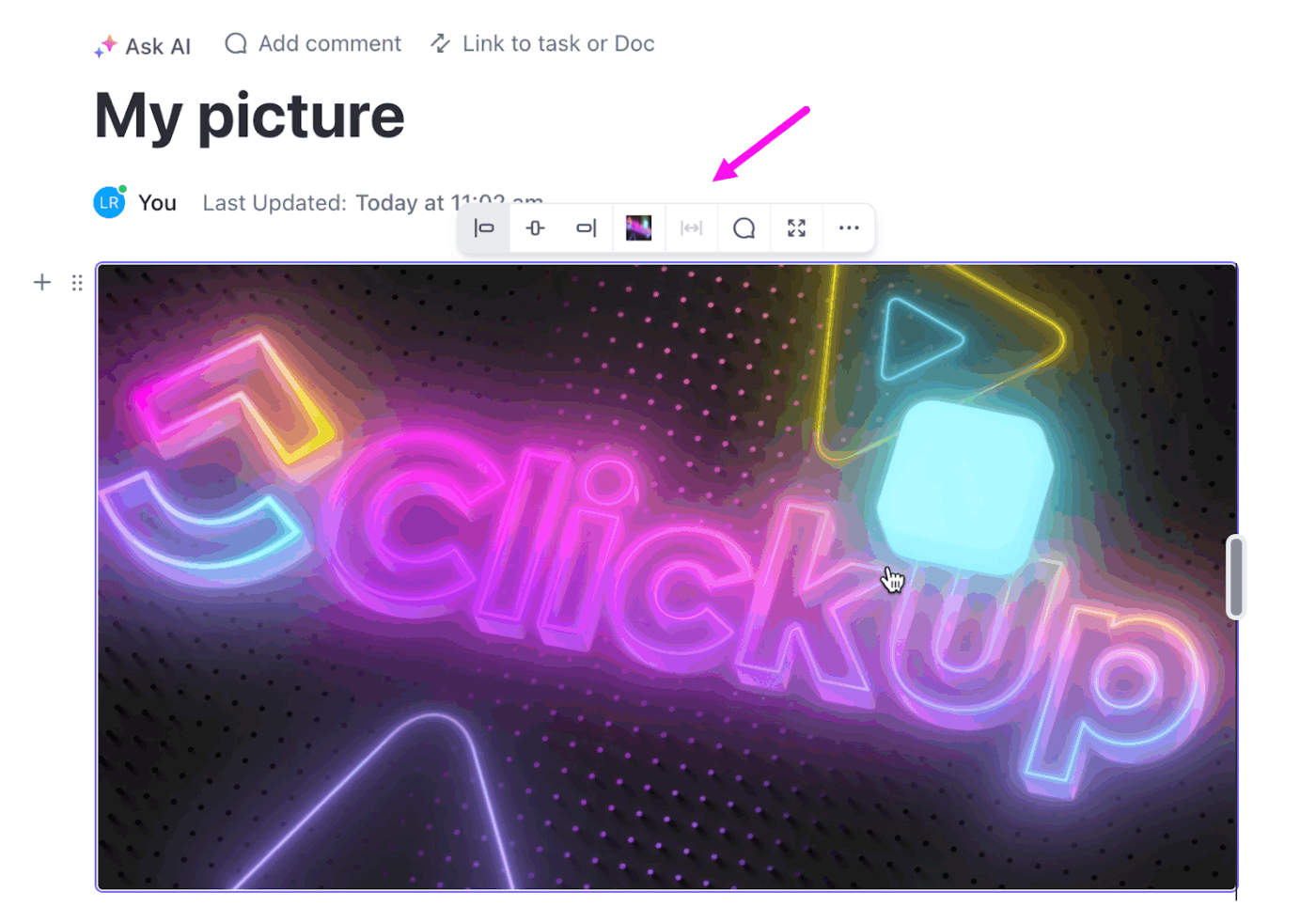

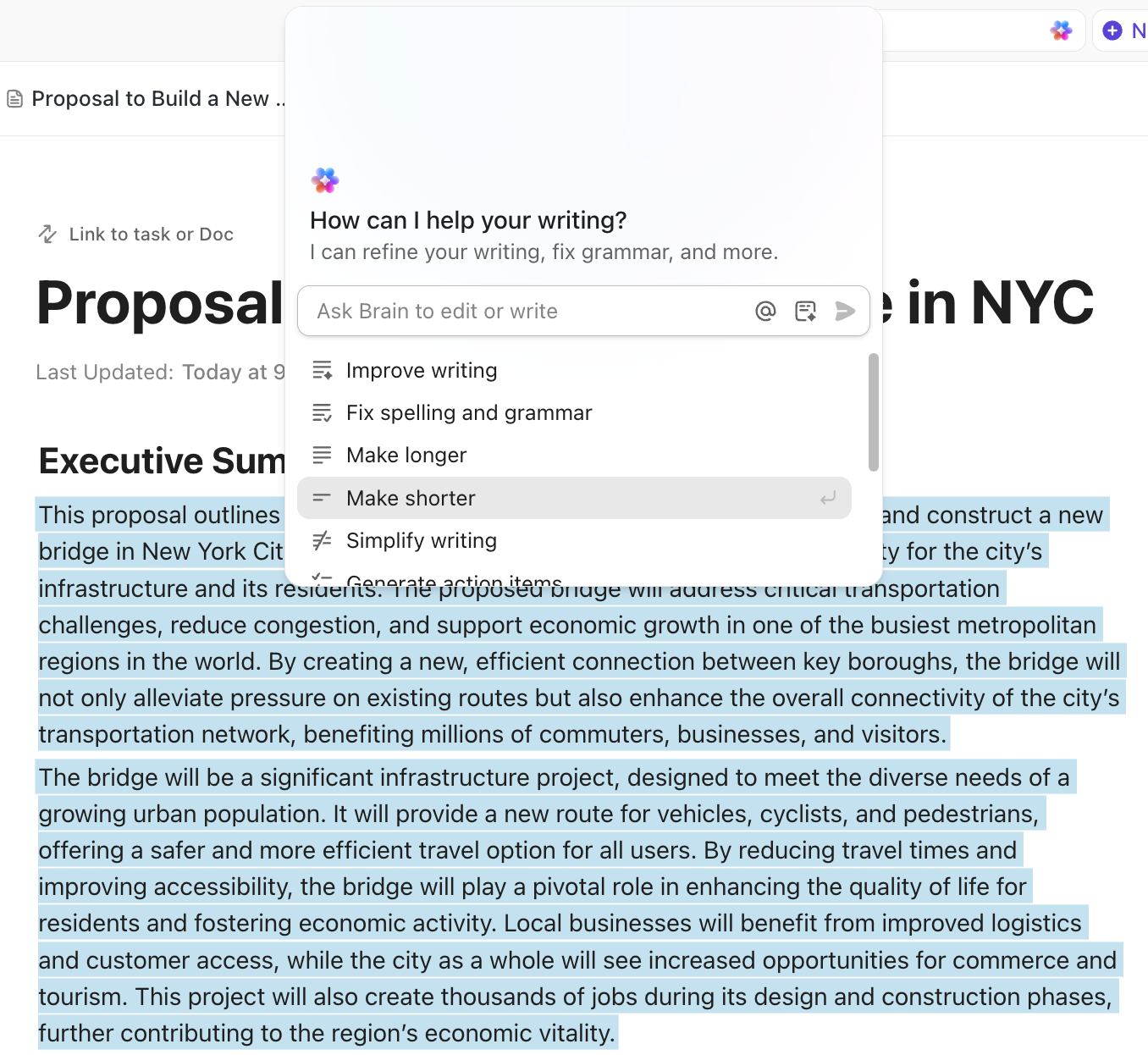

You can write your content in ClickUp Docs, which has a built-in extension for ClickUp Brain.

This means you can give prompts to AI, refine your content, and format it with visuals (images, tables, infographics, GIFs) all within your document.

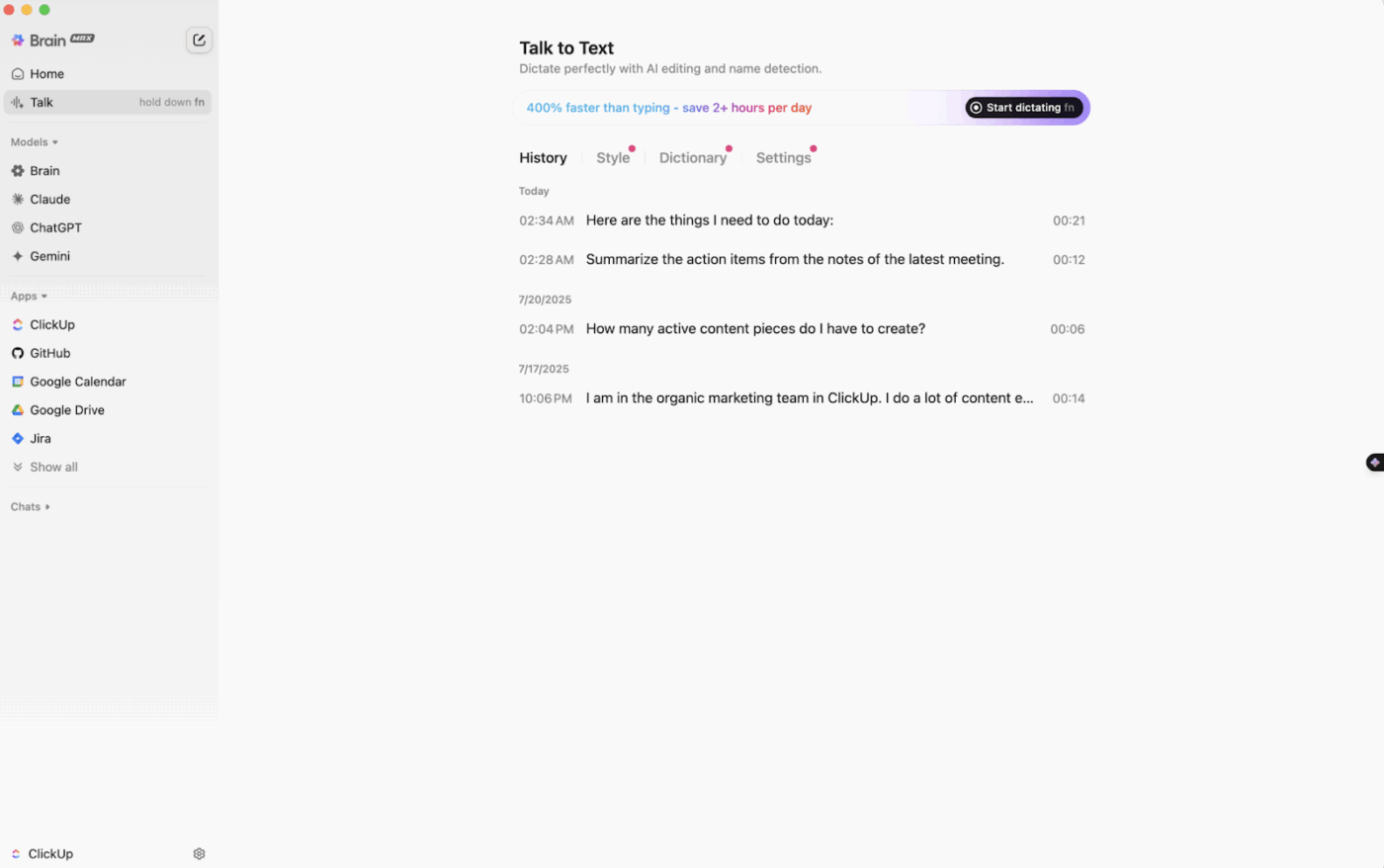

Keep your ideas moving without breaking flow. ClickUp Brain MAX helps you capture and refine thoughts directly in your Docs—turning quick sparks into organized outlines or next steps. And when typing slows you down, its Talk-to-Text lets you simply speak your ideas; they appear instantly on the page, keeping your brainstorm fast and frictionless.

This makes it effortless to capture ideas, dictate outlines, or draft content prompts in real time without breaking momentum. Once the raw draft is down, you can refine it using prompt chaining, few-shot prompting, or any other technique you’ve learned.

📚 Read More: AI Image Prompts to Create Stunning Visuals

📌 Did You Know? 86% of marketers save over an hour every day by using AI to spark new content ideas.

That’s 5+ hours a week reclaimed for strategy, storytelling, and higher-value tasks.

The result? Faster campaigns, less burnout, and more space for the kind of creativity that truly connects with audiences.

Going back and forth with AI to ship new features or squash bugs is not really the assistance you need in life. Prompt engineering can make that process a lot less soul-draining:

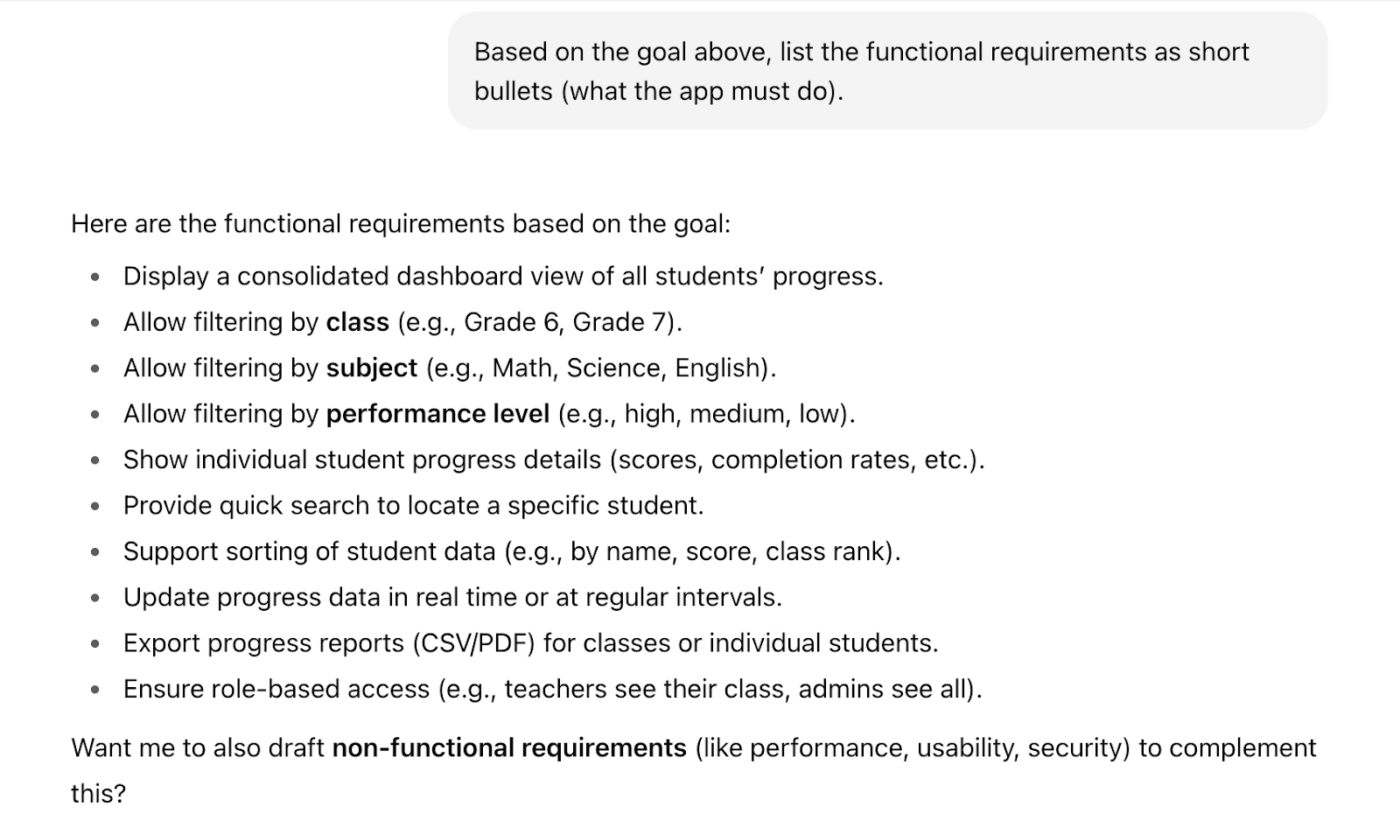

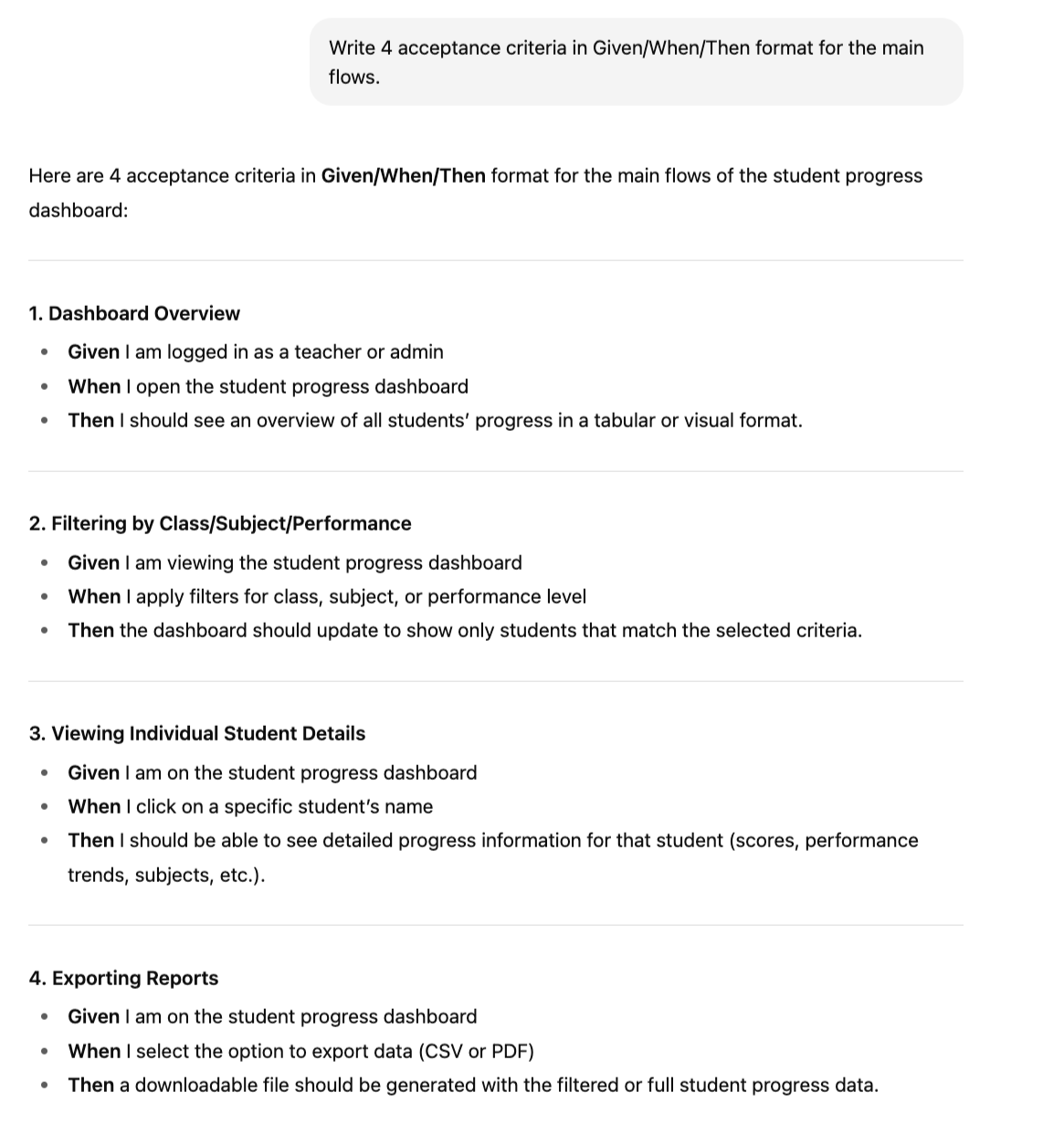

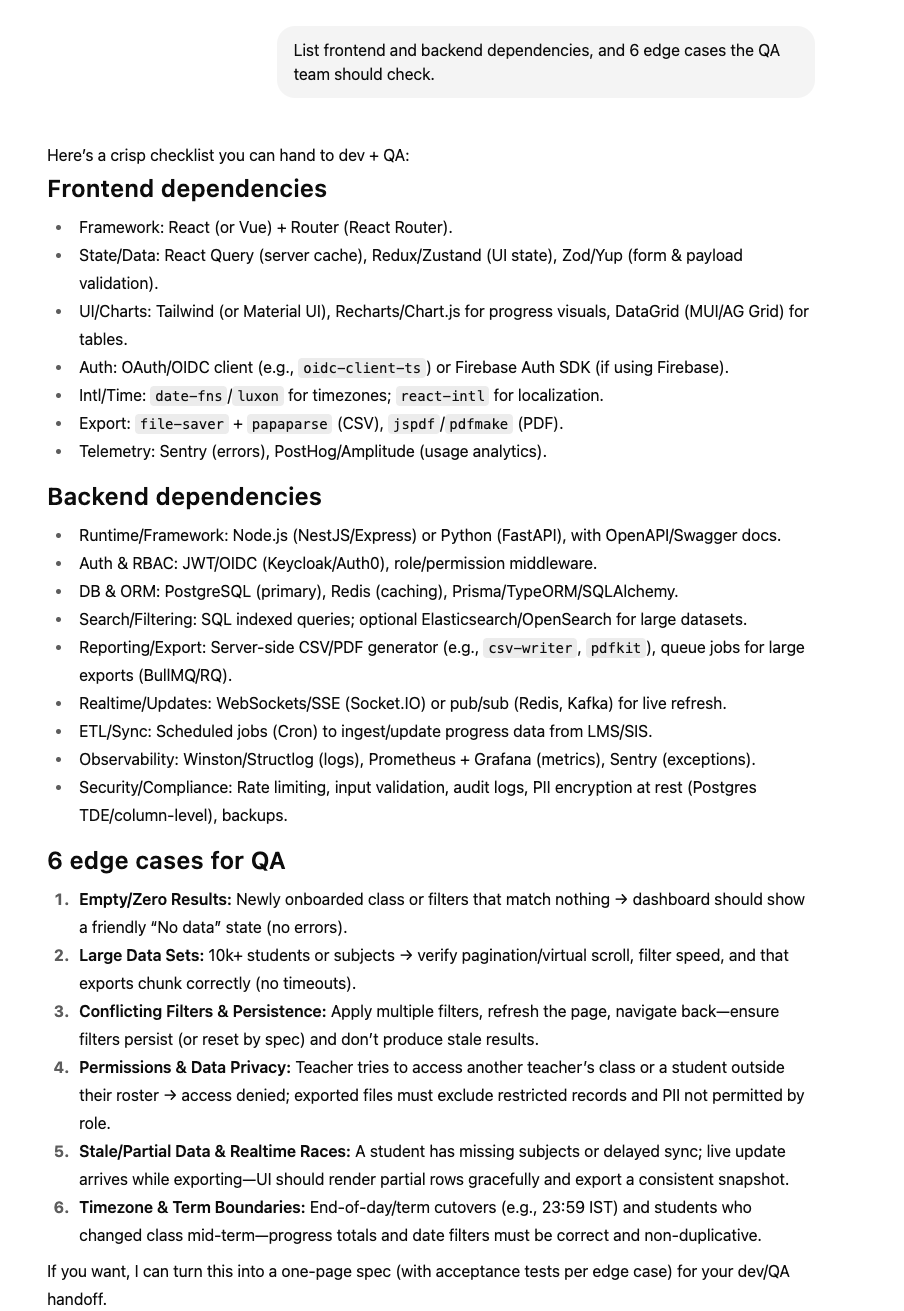

You can use prompt chaining to prepare a feature specification document step-by-step, so that developers can build from it without getting confused. Here’s how:

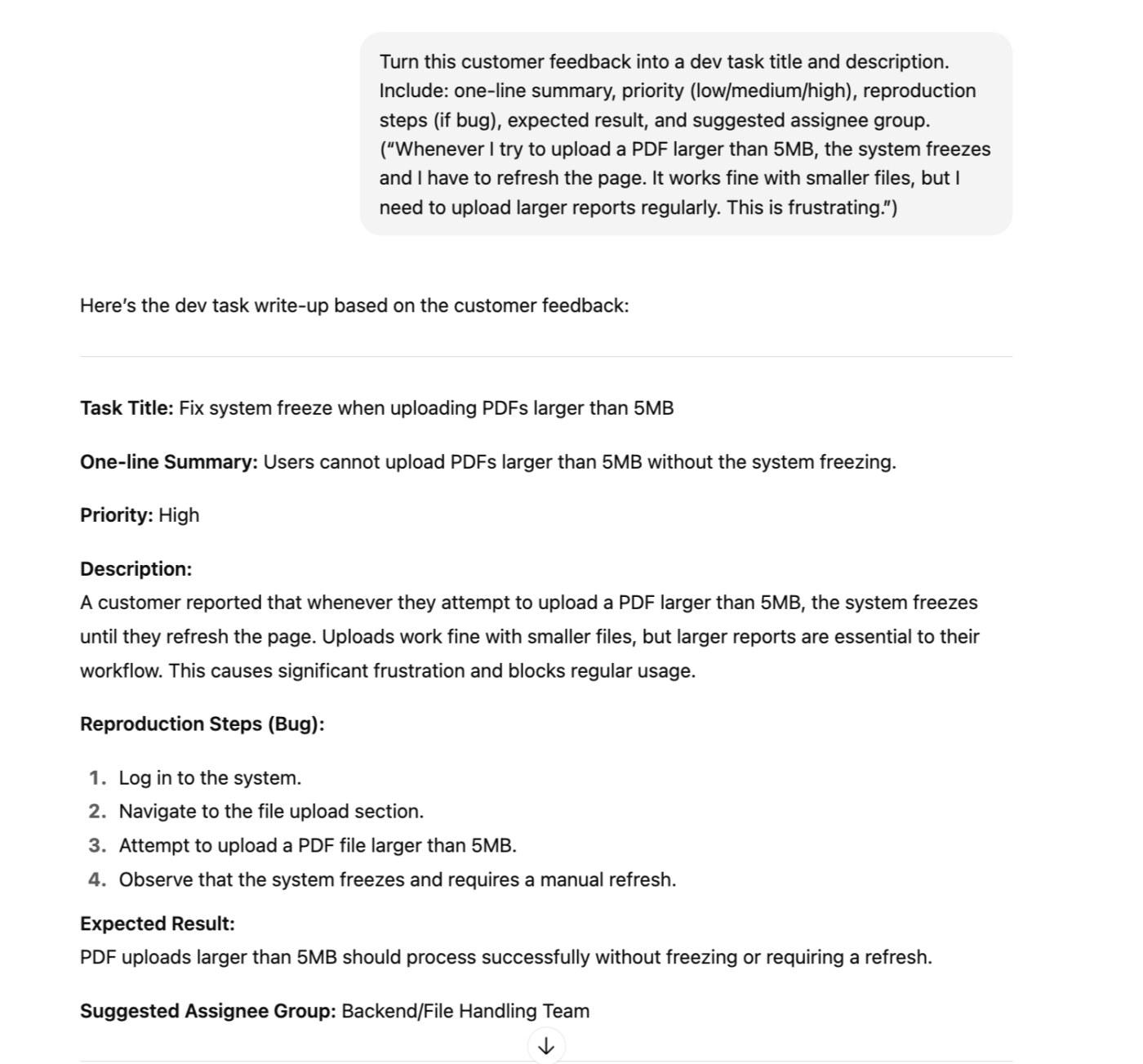

Just copy-paste the customer feedback and ask the AI to turn it into a developer task with a clear title and description:

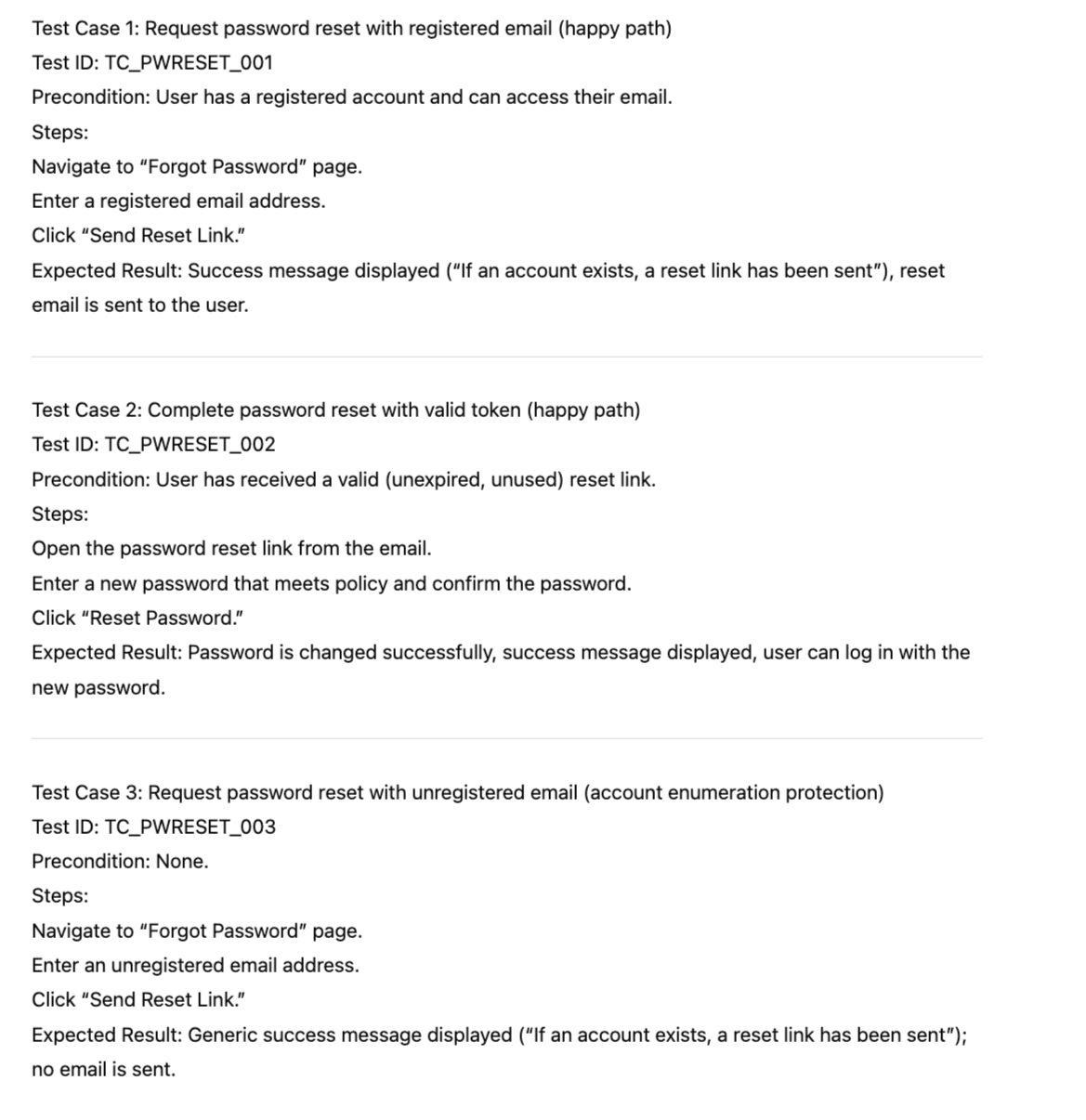

Give 4-5 examples of well-written test cases so that the AI model learns your style instantly and produces the desired test case:

If you’re still using multiple tools for AI-assisted tasks, ClickUp Brain is all you need, especially if you work in product or software development.

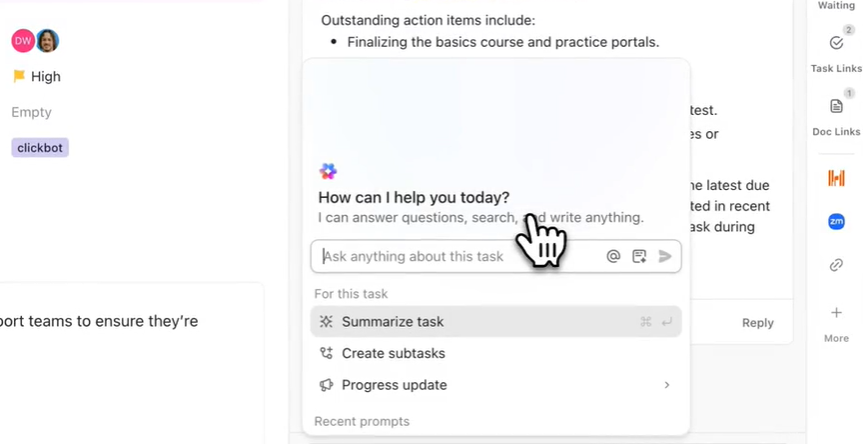

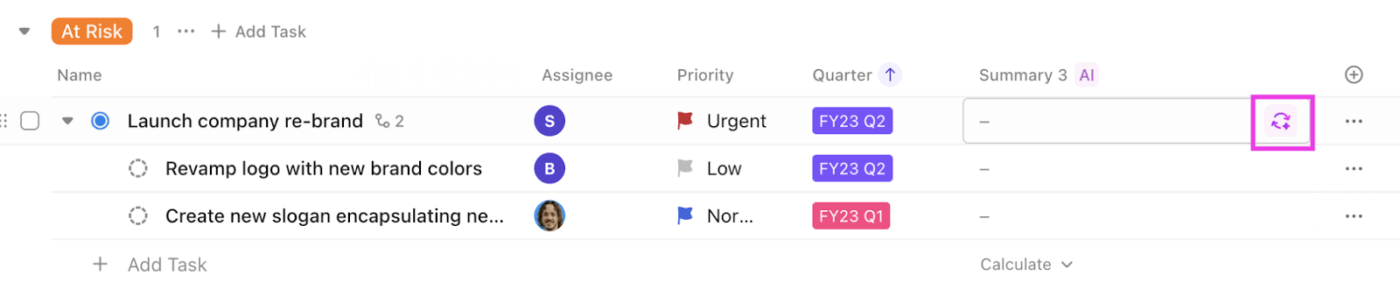

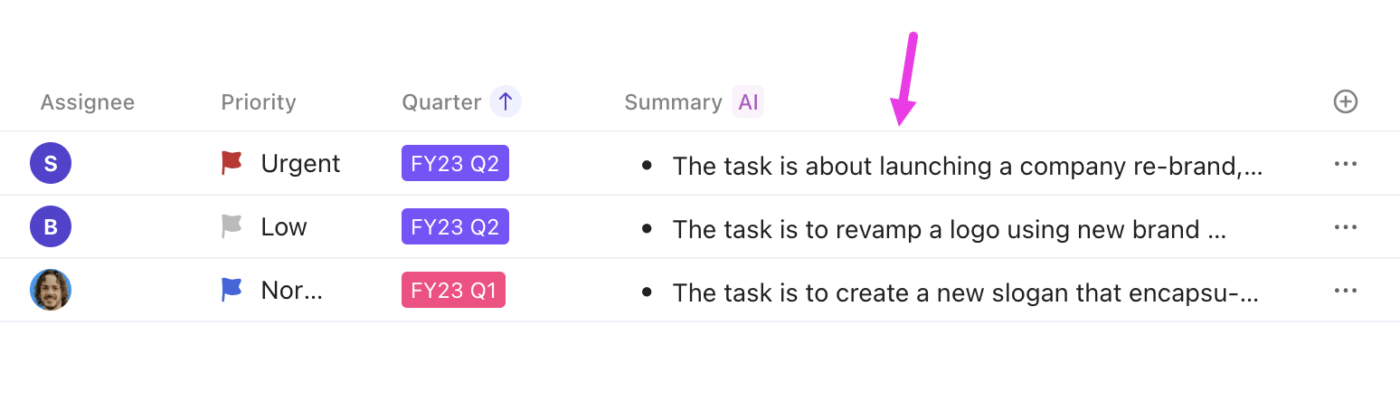

It can help you generate concise summaries of bug reports directly within a task. All you need to do is open the bug task assigned to you, click on the AI Summarize button, and wait a few seconds for the AI to produce a quick summary, highlighting the core issue and action steps required.

Similarly, you can use ClickUp Brain to draft clear acceptance criteria for user stories, features, and bug fixes. The writing assistant software will automatically fetch and analyze the task contents (description, comments, attachments) and suggest the acceptance criteria in a checklist/bullet format.

Want to see it in action? Watch this quick video on how to write an effective bug report with AI assistance.

📌 Did You Know? A survey by Canva found:

Benefits include faster prototyping, idea generation, innovation, and lower costs.

Personalization is what matters the most for sales and marketing teams. But offering that at scale is a tedious task. Let’s see how prompt engineering techniques can speed up this process:

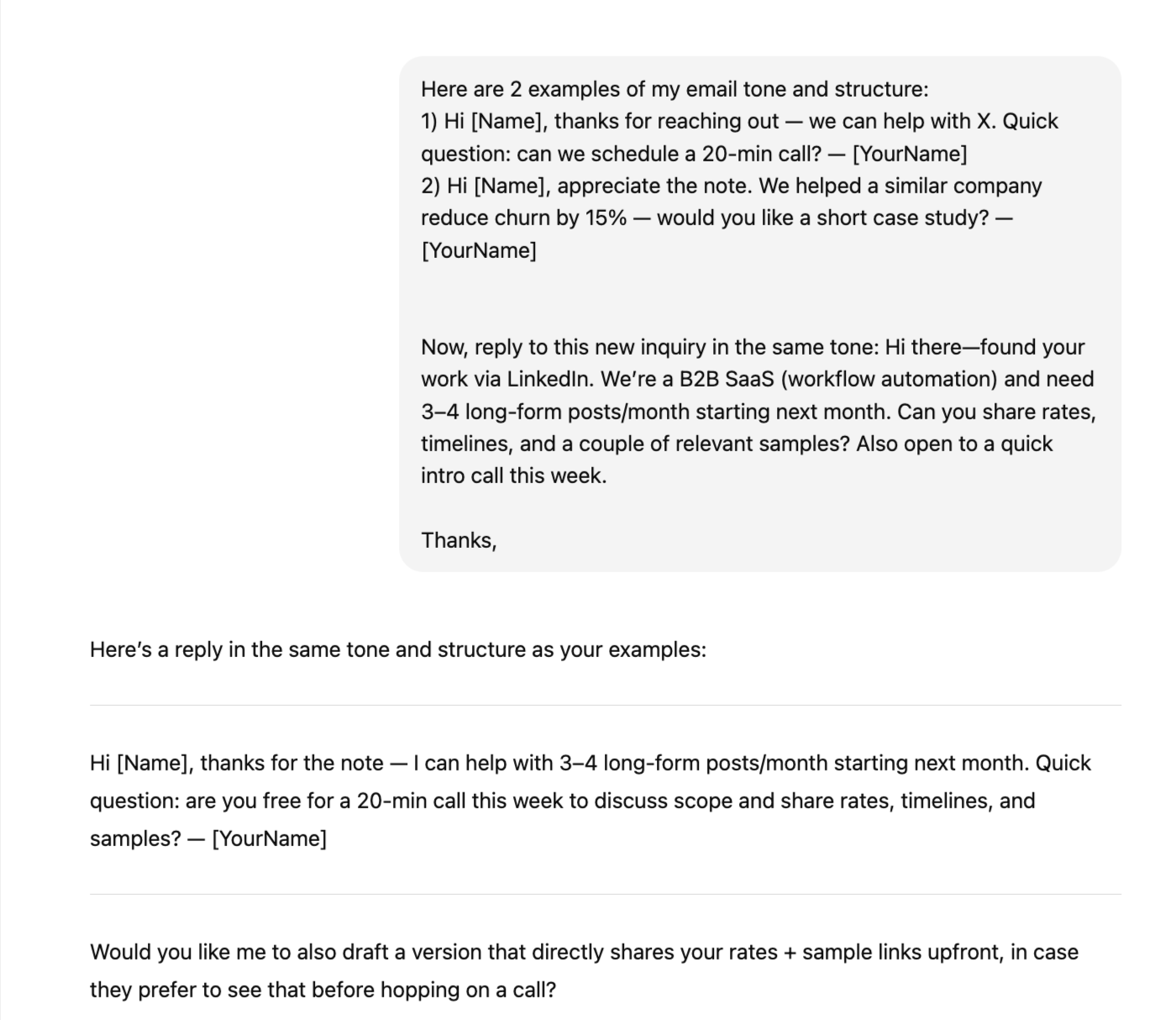

Show AI a few examples of how you’d reply to a customer’s or lead’s email, and it will draft a reply to the latest email much like you would:

Need help drafting a strong value proposition? Instead of spending time on honing your prompt, simply ask AI to:

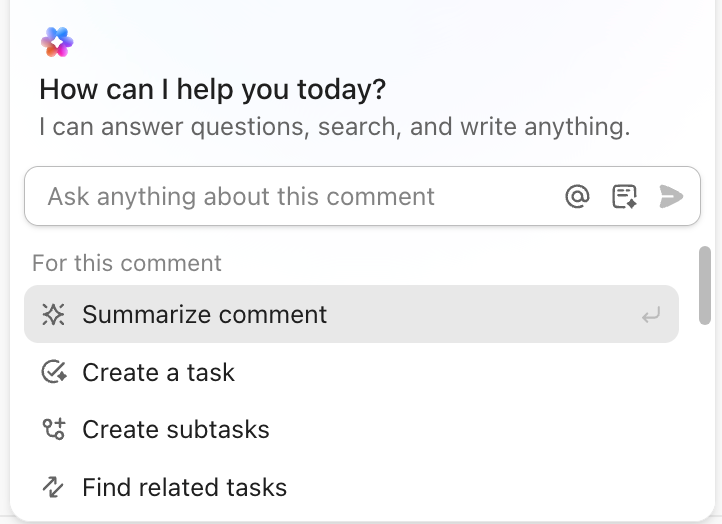

Need to generate outreach copy and client call summaries within seconds? With ClickUp Brain, you can use the AI assistant across all ClickUp features, such as ClickUp Docs, ClickUp Tasks, and even ClickUp Comments.

To draft outreach copy, simply open ClickUp Docs and use AI to write your email/lead message. You can edit it, choose a tone, improve or expand the draft with one click, or use it as is.

And if someone drops a call note in the comments, you can call Brain (by typing @brain in the comment or reply field) and ask it to summarize the call note.

📚 Read More: Writing Prompt Examples

📌 Did You Know? Nearly 20% of marketers allocate more than 40% of their marketing budget to AI-driven campaigns, and 34% report significant improvements in marketing outcomes due to AI.

If you’re in operations, you’re likely burdened with time-consuming tasks such as writing SOPs or creating internal documents. But fret not: try the prompts below to lighten your workload.

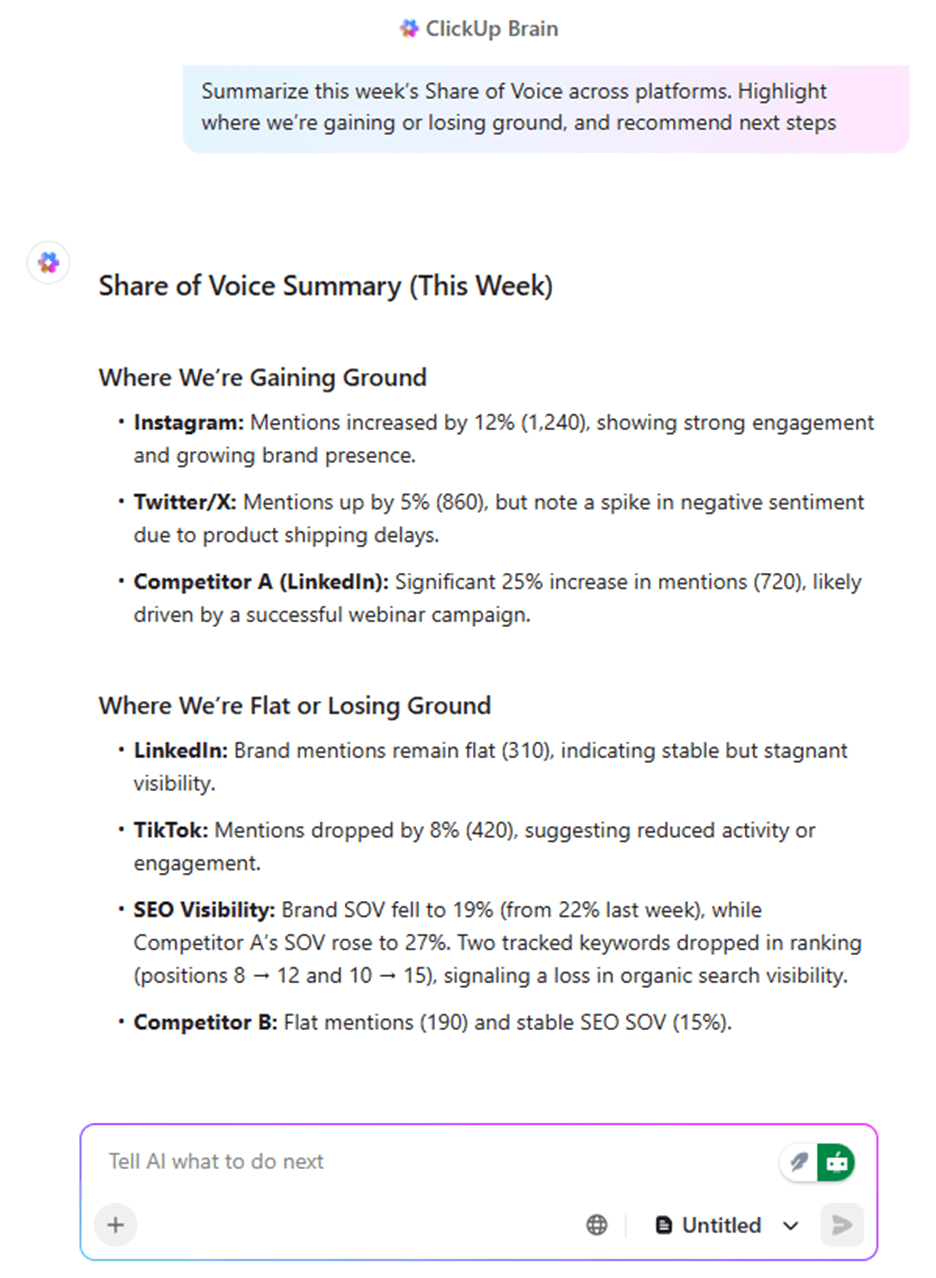

Don’t have an automated tool to generate meeting recaps? No worries! Paste the meeting transcript into the AI chat and ask it to extract the key points (summaries or action items).

To enhance the accuracy of the output, you can further tell AI to try a few versions of the summary and pick the best one.

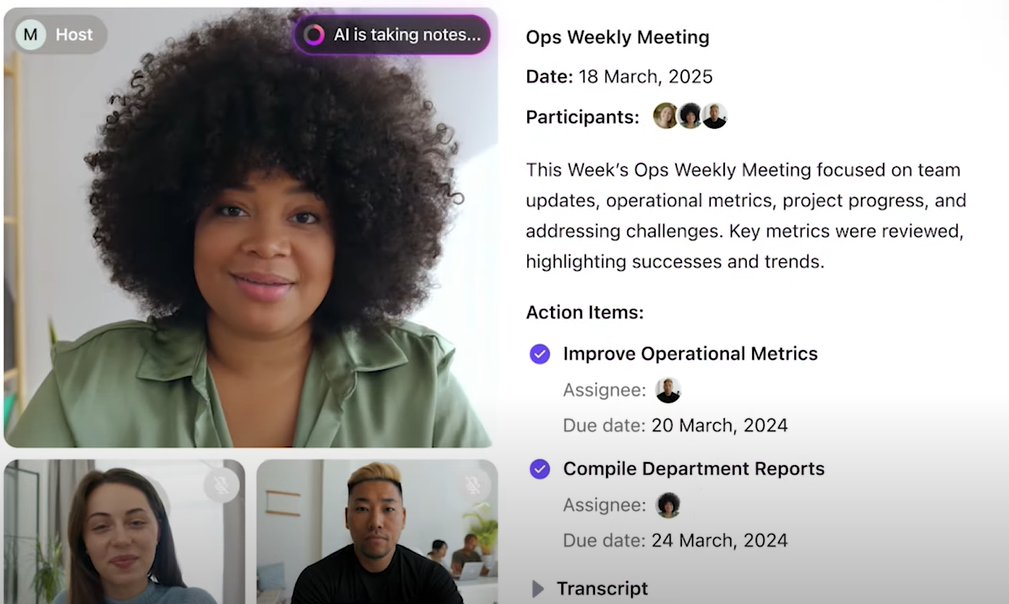

And if you’re looking for a more seamless and automated way to handle meeting notes, ClickUp’s AI Notetaker is designed just for that. This powerful tool can automatically join your meetings, whether they’re scheduled or ad hoc, and transcribe the entire conversation in real time.

It can summarize key points, highlight decisions made, and even extract actionable tasks or follow-ups.

If you want to learn more about how to use AI for taking meeting notes, check out the video below:

It can be overwhelming to create an internal document (like one on ‘remote work policy’) on the first try. In such cases, it’s best to run an active prompt and refine as you go to get the perfect output:

Example Prompt: Draft an internal doc explaining our remote work policy. Keep it under 800 words. List down the eligibility, expectations, equipment policy, and a cybersecurity section.

Watch this video to learn how AI can streamline your documentation process and save hours of manual work:

‘Write an SOP on X’ might not give you the best results. Instead, you can determine exactly what needs to be included first. Once AI gives you that list, tweak it, and then hand it back to the model to build the full SOP.

Example Prompt:

Step 1: You are a process documentation expert. Identify all the key steps, tasks, tools, and approvals involved in creating an SOP for [X process]. Include who is responsible for each step, what tools they use, and the key success criteria to mark that step as complete.

Step 2: Using this list of steps, roles, tools, and criteria, write a detailed Standard Operating Procedure for [X process]. Include sections for Title, Purpose, Scope, Step-by-step Procedure, Roles & Responsibilities, Tools/Resources, and Approval & Review Guidelines. Use clear, actionable language so anyone can follow it without prior training.

Even if this looks easy, we understand that it can be frustrating to draft AI writing prompts from scratch whenever you need to generate an SOP (because the same prompt might not suit every SOP).

But what if there were a magic button in your workspace that, when clicked, would generate whatever SOP you wanted? That’s exactly what you can achieve using ClickUp’s AI Fields.

It’s a custom field, powered by ClickUp Brain, that you can add to your task or list. You can set the prompt to something like ‘Draft an SOP based on the task description and comments.’ And every time you click on it, it will automatically generate SOP content, depending on the task’s contents.

💬 What ClickUp users are saying:

ClickUp is extremely versatile and allows me to create solutions for practically any business case or process. The automations and AI Agents are also super powerful! I can set up automatic actions via logic or via AI prompts to perform just about any action imaginable in ClickUp. Lastly, the pace of product updates is incredible—there are truly significant feature updates every month, and the company is clearly invested in growth.

A few small habits in how you write prompts can mean the difference between getting a ‘wow, that’s perfect’ output and staring at a block of text, wondering what went wrong.

With that in mind, let’s take a look at some common prompt engineering mistakes and how you can optimize your prompts:

Writing a prompt like ‘write a blog post’ or ‘summarize this’ leaves much to the AI’s interpretation. Result? A blog that is overly generic or a summary that falls short of your expectations.

Fix: Create effective prompts with clear directions and context. For example, when writing a blog post, consider defining the tone you want to follow, your target audience, the post’s length, and its purpose.

Here’s an example:

❌ Bad Prompt: ‘Write an email about the new ‘Custom Dashboards’ feature.’

✅ Good Prompt: ‘Write an internal email to our sales team announcing the new ‘Custom Dashboards’ feature for our productivity tool [tool name]. The email should be concise, highlight the top three key benefits for a salesperson (e.g., proving ROI, closing deals faster), and include a call-to-action to a training video. Use a confident and encouraging tone.’

Cramming too many details or tasks into one monster prompt can also lead to muddled results. AI will either get confused or try to do everything at once (and do it badly).

Fix: Break your initial prompt into smaller steps and run them in sequence. For example, first ask for an outline. If it’s good, ask AI to write content for each section. Next, instruct it to polish for tone, and so on.

❌ Bad Prompt: ‘Generate 10 SEO keywords for a blog post titled “How To Implement a Quality Management System.” Suggest an SEO-friendly outline using these keywords and then write a 100-word introduction for the blog.’

✅ Good Prompt: Generate 10 SEO keywords for a blog post titled, ‘How To Implement a Quality Management System.’ The target audience for this blog post is business owners, CEOs, and top-level management.

Now, using the generated keywords, create a detailed, SEO-friendly outline for this blog post. Make sure the <H> tags have keywords placed naturally and are not overdone.

Write a 100-word introduction for this blog, keeping the generated outline and SEO keywords in mind.

Most large language models are stateless and don’t retain information unless you explicitly include it in the current prompt. This often results in responses that ignore your earlier context or contradict your previous instructions.

Fix: Restate key context, constraints, and goals in every new prompt so the model has all the information it needs to respond accurately.

❌ Bad Prompt: ‘Now write the introduction based on the outline we discussed earlier.’

✅ Good Prompt: Using the blog outline we created earlier (Introduction, Benefits, Use Cases, and Conclusion), write a 100-word introduction. Make it conversational, and hook the reader by highlighting a common pain point that our productivity tool solves.
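Here is what restating context looks like at the request level: a minimal Python sketch that rebuilds the full message list for every turn. The role/content message format is an assumption based on a common chat-API convention, and `build_request` is a hypothetical helper; check your provider’s documentation for the exact shape it expects.

```python
def build_request(history, new_message, system_context):
    """Assemble everything the model needs for this turn.

    Stateless models only see what is in the request, so the standing context
    and earlier turns are re-sent every time (role/content shape is an assumption).
    """
    messages = [{"role": "system", "content": system_context}]
    messages.extend(history)
    messages.append({"role": "user", "content": new_message})
    return messages


CONTEXT = ("Blog for a productivity tool. Outline: Introduction, Benefits, Use Cases, "
           "Conclusion. Conversational tone.")
history = [
    {"role": "user", "content": "Create an outline for the blog."},
    {"role": "assistant", "content": "Introduction, Benefits, Use Cases, Conclusion"},
]
request = build_request(history, "Write a 100-word introduction.", CONTEXT)
print(len(request))  # 4
```

Putting the key constraints in a standing context message means they travel with every turn, even after the history gets long or is trimmed.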

A good prompt can save minutes; a shared prompt library can save hours (since everyone uses it). Here’s how you can build one:

Use ClickUp Docs to organize your most effective prompts that team members can use later. You can organize these prompts by department and further by task type (e.g., content creation, market research, data analysis, etc.).

For each prompt, include the following:

For common tasks such as summarizing meeting notes or optimizing a blog, you can create standard prompting strategies that everyone must use. You can include exact AI prompt templates and instructions for when/how to use them to generate responses in the desired style.

This helps every team member follow the same best practices when prompting, keeping output quality consistent.
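One lightweight way to store exact AI prompt templates is as parameterized text, so everyone fills in the same structure. Here is a minimal sketch using Python’s built-in `string.Template`; the library entry, its field names, and its placeholders are hypothetical, not a ClickUp schema.

```python
from string import Template

# Hypothetical library entry; field names are illustrative, not a ClickUp schema.
PROMPT_LIBRARY = {
    "summarize-meeting": {
        "department": "Operations",
        "task_type": "Summarization",
        "template": Template(
            "Summarize this $meeting_type transcript in under $word_limit words. "
            "List decisions and action items with owners.\n\n$transcript"
        ),
        "notes": "Hypothetical note: what worked and why.",
    },
}


def render(prompt_id, **values):
    """Fill a stored template; substitute() raises KeyError if a placeholder is missing."""
    return PROMPT_LIBRARY[prompt_id]["template"].substitute(**values)


prompt = render("summarize-meeting", meeting_type="sprint review",
                word_limit=150, transcript="<paste transcript>")
print(prompt)
```

Because `substitute` fails loudly on an unfilled placeholder, incomplete prompts are caught before anyone sends them to the model.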

Encourage your team to not only use this prompt library but also help improve it. To do so, you must:

There will be times when AI produces subpar or unexpected outputs. To help your team diagnose and fix issues, consider adding a troubleshooting section that discusses common AI prompting mistakes and their solutions.

This could look something like this:

Problem: The output is too generic

Why it happens: AI tends to fall back on the most common patterns in its training data, which can lead to safe but generic or uninspired responses.

The solution: Add constraints or specific instructions to nudge the AI in the right direction.

Example: ‘Keep it under 100 words’

📚 Read More: How to Become a Prompt Engineer

By learning basic and advanced prompt engineering techniques, you can stop wasting time on trial and error and start getting results that actually move your work forward.

With ClickUp, AI becomes part of your workspace. It combines task management with automation and collaboration, so that you can get work done without jumping between tools.

So, let’s ditch the old way of using AI as a sidekick you have to call. It’s time to get you an AI assistant that is already a part of your team.

Sign up for ClickUp today and see what happens when AI is literally just one click away!

The best tool depends on what task you want AI to perform. However, the most value is achieved when AI is integrated into the platform you already use for planning and delivering work. ClickUp Brain, for example, is widely and deeply integrated into the ClickUp workspace so that you can access the AI assistant from any screen. In fact, you can even switch between Brain, ChatGPT, Gemini, Claude, etc., to choose the best AI model for your work.

Yes! You can store your best-performing prompts in a shared ClickUp document or even turn them into custom AI fields for instant reuse. This way, anyone can simply click on that field, and the AI assistant will run your pre-set prompt. Highly recommended for repetitive tasks that thrive on consistency and are time-sensitive.

Large language models are not search engines. They’re not like Google, where you enter a search query and the engine will give you the same result every time. Instead, LLMs generate answers word by word from patterns learned during training, and they typically sample among likely next words, which is why the same prompt can produce different results each time.
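Here is a simplified sketch of where that variation comes from: a model scores possible next words, turns the scores into probabilities, and samples one. The scores below are made up, real models work over tokens rather than whole words, and `sample_next_word` is a hypothetical helper; `temperature` must be greater than zero in this sketch.

```python
import math
import random


def sample_next_word(scores, temperature=1.0, rng=random):
    """Pick the next word by sampling from temperature-scaled softmax probabilities."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # subtract the max for numerical stability
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical model scores for the word after "Meet your new ...".
SCORES = {"assistant": 2.0, "teammate": 1.5, "sidekick": 0.5}

rng = random.Random(42)
greedy = {sample_next_word(SCORES, temperature=0.01, rng=rng) for _ in range(20)}
varied = {sample_next_word(SCORES, temperature=1.0, rng=rng) for _ in range(200)}
print(greedy)  # {'assistant'}
print(varied)
```

At a very low temperature almost all the probability lands on the top-scoring word, so the output barely changes; at higher temperatures the less likely words get picked some of the time, which is the variation you notice when you rerun a prompt.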

In the zero-shot prompt engineering technique, you simply tell the AI the task it needs to perform, without any supporting examples of the expected outcome. By contrast, few-shot prompting requires you to include a few examples to guide the AI in a particular direction, such as providing a sample email reply so that the AI generates something similar.

© 2026 ClickUp