Output from a large language model (LLM) is broadly a function of two things: the data it’s been trained on and the prompts we use to instruct it to perform an operation.

As conversations around explainable AI systems become mainstream, more and more of us are curious about deciphering the LLM black box where prompts are fed in and from which outputs magically appear.

Chain-of-Thought (CoT) prompting is a tool that can help. This Natural Language Processing (NLP) technique turns LLMs from machines that simply output answers, some accurate and some hallucinated, into partners that show their reasoning.

Instead of stopping at opaque outputs, CoT prompts LLMs to reveal their thought processes step-by-step, leading to clear and understandable explanations for the steps they used to arrive at those outputs.

Intrigued?

This article explores the CoT prompting technique. We’ll see how it works, its different types, and the practical applications that are pushing the boundaries of AI.

Whether you’re an AI developer, an NLP enthusiast, or simply curious about the future of intelligent systems, get ready to explore the power of reason-driven AI.

- Understanding Chain-of-Thought Prompting

- Chain-of-Thought Prompting Techniques

- Practical Application of Chain-of-Thought Prompting

- Chain-of-Thought Prompting Variants

- Learning and Improving with Chain-of-Thought Prompting

- Learning and Improving with Chain-of-Thought Prompting

- ClickUp: Your Gateway to CoT-powered Project Management

Understanding Chain-of-Thought Prompting

Chain-of-thought (CoT) prompting is a technique for improving the reasoning abilities of large language models (LLMs).

Here’s the gist of it:

- The problem: LLMs are good at finding patterns in massive amounts of data, but they often struggle with complex reasoning tasks. They might give the right answer by chance, but not necessarily because they understand the underlying logic

- The solution: CoT prompting addresses this by guiding the LLM through the reasoning process step by step, as a chain. This ‘chain’ typically consists of the intermediate steps, justifications, or evidence used to arrive at the final output. We do this by providing examples that show how to break down a problem and solve it logically

By seeing worked-out examples, the LLM learns to follow a similar process when solving new problems. This can involve identifying key information, applying rules, and drawing conclusions.

So, CoT isn’t just about transparency.

You can build more reliable and trustworthy AI systems by understanding the ‘how’ behind an LLM’s answer with this prompt engineering technique. This can improve tasks like generating clear explanations, tackling complex reasoning problems, and manipulating symbols—all with the added benefit of human-like reasoning.

The theory behind the technique

CoT prompting builds on the principle that complex problems are often best tackled by breaking them into smaller, more manageable pieces. This aligns with human reasoning—we don’t jump straight to conclusions; we consider different aspects and connections before forming an answer.

By prompting the LLM to follow a similar path, CoT encourages a more deliberate reasoning process. This not only improves the accuracy of the final answer, especially for complex tasks, but also offers a window into how the LLM arrived at it.

A look-back on CoT prompting

The concept of CoT prompting is relatively new. While the underlying principles of breaking down problems have been explored in AI research for decades, the formalization of CoT prompting, with its focus on revealing LLM reasoning, is a recent development.

A seminal 2022 paper by researchers at Google AI laid the groundwork for this field. It demonstrated the effectiveness of CoT prompting on tasks like solving math word problems: prompting the model to write out its intermediate steps and equations significantly improved performance compared to traditional prompting methods.

This initial success has prompted further research and exploration of CoT prompting’s potential across various NLP domains.

Chain-of-Thought Prompting Techniques

CoT prompting doesn’t follow a one-size-fits-all approach. For AI developers and NLP practitioners eager to make the most of CoT prompting, here’s a breakdown of the key techniques you can use:

Automatic Chain-of-Thought (Auto-CoT)

This technique removes the need to hand-write worked examples: the LLM generates its own reasoning demonstrations and reuses them on new problems. In that sense, the model is prompting itself to some degree.

Auto-CoT builds upon Zero-Shot CoT (explained below).

The LLM first answers a set of sample questions with step-by-step reasoning, producing question-and-reasoning pairs. These pairs then become demonstrations in the prompts for future tasks, allowing the LLM to learn from a study guide it wrote itself.

Here’s a simplified example (assuming the pre-trained model and tokenizer are loaded):
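
A minimal sketch of the idea in Python. Here `generate` is a hypothetical stand-in for a call to your loaded model and tokenizer, and `fake_generate` is a stub so the sketch runs on its own. Published versions of Auto-CoT also cluster the sample questions to pick diverse ones, a step this sketch skips.

```python
from typing import Callable, List

TRIGGER = "Let's think step by step."

def build_demonstrations(questions: List[str], generate: Callable[[str], str]) -> List[str]:
    """Use Zero-Shot CoT to write a reasoning chain for each sample question."""
    demos = []
    for q in questions:
        chain = generate(f"Q: {q}\nA: {TRIGGER}")
        demos.append(f"Q: {q}\nA: {TRIGGER} {chain.strip()}")
    return demos

def build_auto_cot_prompt(demos: List[str], new_question: str) -> str:
    """Prepend the self-generated demonstrations to the new question."""
    return "\n\n".join(demos + [f"Q: {new_question}\nA: {TRIGGER}"])

def fake_generate(prompt: str) -> str:
    # Hypothetical stub: replace with a real call to your model.
    return "There are 3 + 2 = 5 apples. The answer is 5."

demos = build_demonstrations(
    ["Tom has 3 apples and buys 2 more. How many apples does he have?"],
    fake_generate,
)
auto_cot_prompt = build_auto_cot_prompt(
    demos, "A shelf holds 4 books and 6 more are added. How many books are on it?"
)
print(auto_cot_prompt)
```

The final prompt contains the model's own worked example followed by the new question, so the model sees a reasoning pattern it can imitate.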

Zero-Shot Chain-of-Thought (Zero-Shot CoT)

Efficiency is the name of the game here. Zero-Shot CoT uses pre-defined prompts that guide the LLM through the reasoning process without requiring specific training examples.

These prompts often involve asking the LLM questions that encourage it to break down the problem and explain its thought process. This example will help you understand it better:
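
A minimal sketch, assuming the widely used trigger phrase "Let's think step by step." The function name is illustrative, and the resulting string would be sent to whichever model you use.

```python
def zero_shot_cot_prompt(question: str) -> str:
    """Append a reasoning trigger instead of supplying worked examples."""
    return f"Q: {question}\nA: Let's think step by step."

zs_prompt = zero_shot_cot_prompt(
    "A train travels 60 km in 1.5 hours. What is its average speed?"
)
print(zs_prompt)
```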

While convenient for quick implementation, Zero-Shot CoT might not always be as effective as methods with more tailored examples.

CoT vs. Few-Shot prompting

Both techniques involve providing examples but with a crucial distinction. Few-shot prompting focuses on giving the LLM a handful of relevant examples of the desired output (e.g., solutions to similar problems). Look at this example:
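
A minimal sketch of a few-shot prompt. The example questions and the function name are illustrative. Note that each example shows only the final answer, not how it was reached.

```python
from typing import List, Tuple

def few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Build a prompt from (question, answer) pairs with no reasoning shown."""
    shots = [f"Q: {q}\nA: {a}" for q, a in examples]
    return "\n\n".join(shots + [f"Q: {question}\nA:"])

fs_prompt = few_shot_prompt(
    [("What is 12 + 7?", "19"), ("What is 30 - 4?", "26")],
    "What is 15 + 9?",
)
print(fs_prompt)
```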

CoT prompting, however, goes a step further by explicitly showcasing the reasoning steps that lead to the output. This additional information empowers the LLM to internalize the problem-solving process.
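
To make the difference concrete, here is a sketch of a few-shot CoT prompt. Each example carries a reasoning string in addition to the answer. The function name and the sample problem are illustrative assumptions.

```python
from typing import List, Tuple

def few_shot_cot_prompt(examples: List[Tuple[str, str, str]], question: str) -> str:
    """Build a prompt from (question, reasoning, answer) triples."""
    shots = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in examples
    ]
    return "\n\n".join(shots + [f"Q: {question}\nA:"])

cot_fs_prompt = few_shot_cot_prompt(
    [(
        "Roger has 5 tennis balls. He buys 2 cans of 3 balls each. "
        "How many balls does he have now?",
        "Roger starts with 5 balls. 2 cans of 3 balls is 2 * 3 = 6 balls. 5 + 6 = 11.",
        "11",
    )],
    "A cafe had 23 apples, used 20, and bought 6 more. How many apples does it have?",
)
print(cot_fs_prompt)
```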

CoT vs. Standard prompting

Standard prompting methods typically prioritize solely getting the LLM to the desired answer. They might provide context or rephrase the question but don’t explicitly ask the LLM to reveal its reasoning process. Here’s an example:
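
A minimal sketch of a standard prompt, with a CoT version of the same question alongside for contrast. The question and the wording of the reasoning instruction are illustrative.

```python
question = "A bakery sells 12 muffins per tray. How many muffins are on 4 trays?"

# Standard prompt: asks only for the answer.
standard_prompt = f"Q: {question}\nA:"

# CoT prompt: same question, but explicitly asks for the reasoning first.
cot_prompt = (
    f"Q: {question}\n"
    "A: Let's work through it step by step before giving the final answer."
)

print(standard_prompt)
print(cot_prompt)
```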

CoT prompting, on the other hand, prioritizes not just the answer but also the ‘how’ behind it, leading to a more transparent and interpretable outcome. This focus on reasoning makes CoT prompting a powerful tool for developers building trustworthy AI systems.

Note

- These are simplified examples – real-world implementations might involve data preprocessing and handling model outputs

- Experiment with different prompt formats and models to find the best fit for your specific task

- Consider utilizing libraries like prompt-engineering for more advanced CoT prompting techniques

Practical Application of Chain-of-Thought Prompting

CoT prompting can be a powerful tool to improve the reasoning abilities of LLMs in various areas. Here’s how it can be applied to a few common tasks:

Arithmetic reasoning

Instead of just providing the answer, CoT prompting can guide the LLM in showing the steps involved. This can be adapted to address potential errors in the LLM’s reasoning. For instance, in the following example, the code constructs an LLM prompt that guides the model through the subtraction steps.

It retrieves the LLM’s answer (number of remaining apples). The code checks for a nonsensical answer (negative apples). If an error is detected, a new prompt is created to revisit the steps and guide the LLM toward the correct solution. This error correction mechanism helps the LLM identify and rectify mistakes in its reasoning process.
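
The flow described above can be sketched as follows. `llm` is a hypothetical callable that takes a prompt and returns the model's text, and `fake_llm` is a stub that answers wrongly once and then correctly, so the retry path is exercised without a real model.

```python
import re
from typing import Callable, Optional

def extract_number(text: str) -> Optional[int]:
    """Return the last integer in the response, or None if there is none."""
    matches = re.findall(r"-?\d+", text)
    return int(matches[-1]) if matches else None

def remaining_apples(start: int, eaten: int, llm: Callable[[str], str]) -> Optional[int]:
    prompt = (
        f"I have {start} apples and eat {eaten} of them.\n"
        "Step 1: State how many apples I start with.\n"
        "Step 2: State how many apples I eat.\n"
        "Step 3: Subtract and end with the number of apples left."
    )
    answer = extract_number(llm(prompt))
    if answer is None or answer < 0:
        # A negative count is nonsensical, so ask the model to revisit its steps.
        retry = (
            prompt
            + "\n\nYour previous answer was not a valid count of apples. "
            "Revisit each step and check the subtraction."
        )
        answer = extract_number(llm(retry))
    return answer

# Hypothetical stub: the first reply subtracts in the wrong order.
replies = iter([
    "Start: 10. Eaten: 4. 4 - 10 = -6",
    "Start: 10. Eaten: 4. 10 - 4 = 6",
])

def fake_llm(prompt: str) -> str:
    return next(replies)

result = remaining_apples(10, 4, fake_llm)
print(result)
```

Taking the last number in the response is a simplification. A real implementation would need a stricter output format to extract the answer reliably.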

Commonsense reasoning

CoT is also effective in commonsense reasoning tasks. This is because CoT prompts can help LLMs understand cause-and-effect relationships. Consider a prompt about rain and an open window.

The CoT prompt provides context about the weather (rain) and the window state (open), guiding the LLM toward a commonsense conclusion.

The LLM leverages its knowledge about rain-damaging furniture and the ability to close windows to prevent that from happening.

This example demonstrates how to use CoT to reason about cause-and-effect relationships (commonsense reasoning task) and take appropriate actions.
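
A minimal sketch of such a prompt. The facts, the question, and the function name are illustrative assumptions, and the resulting string would be sent to your model.

```python
from typing import List

def commonsense_prompt(facts: List[str], question: str) -> str:
    """List the known facts, then ask for cause-and-effect reasoning."""
    lines = ["Facts:"] + [f"- {fact}" for fact in facts]
    lines += [
        f"Question: {question}",
        "Reason about cause and effect step by step, then state the action to take.",
    ]
    return "\n".join(lines)

cs_prompt = commonsense_prompt(
    [
        "It is raining outside.",
        "The living room window is open.",
        "A wooden table sits under the window.",
    ],
    "What should I do?",
)
print(cs_prompt)
```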

Sentiment analysis

In sentiment analysis, CoT prompting can improve the model’s ability to interpret complex statements accurately. That is, CoT prompting can help analyze sentiment beyond just positive or negative. This example demonstrates interactive sentiment analysis:

The code identifies sentiment words. It prompts the LLM to analyze sentiment toward “acting.” The response is retrieved, sentiment is extracted (assuming a specific LLM response format), and an explanation with reasoning is included. A follow-up prompt analyzes sentiment toward the ‘plot’, and then sentiment, explanation, and overall review details are returned.
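
A minimal sketch of that flow. In this version the prompt asks the model to pick out the sentiment words itself. `llm` is a hypothetical callable, `fake_llm` is a stub, and the "Sentiment: ..." line is an assumed response format that the prompt requests.

```python
import re
from typing import Callable, Dict

def aspect_sentiment(review: str, aspect: str, llm: Callable[[str], str]) -> Dict[str, str]:
    prompt = (
        f'Review: "{review}"\n'
        f"Step 1: List the words that describe the {aspect}.\n"
        "Step 2: Explain what those words imply.\n"
        "Step 3: Finish with a line 'Sentiment: positive', "
        "'Sentiment: negative', or 'Sentiment: mixed'."
    )
    response = llm(prompt)
    match = re.search(r"Sentiment:\s*(positive|negative|mixed)", response, re.IGNORECASE)
    return {
        "aspect": aspect,
        "sentiment": match.group(1).lower() if match else "unknown",
        "explanation": response,
    }

def fake_llm(prompt: str) -> str:
    # Hypothetical stub: replace with a real model call.
    if "describe the acting" in prompt:
        return "The review calls the acting 'superb'. Sentiment: positive"
    return "The review calls the plot 'predictable'. Sentiment: negative"

review = "The acting was superb, but the plot was predictable."
results = [aspect_sentiment(review, aspect, fake_llm) for aspect in ("acting", "plot")]
for r in results:
    print(r["aspect"], "->", r["sentiment"])
```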

Caveat: While sarcasm detection is an active research area, current LLMs might struggle with complex sarcasm.

Remember, these are simplified examples. In practice, the responses you get might vary depending on the model, training data, and prompt construction.

Chain-of-Thought Prompting Variants

Suppose you’re building a cutting-edge AI for sentiment analysis. You don’t just want it to classify a restaurant review as positive or negative; you want it to truly understand the emotional undercurrents.

That’s where CoT prompting techniques like Automatic CoT and Zero-shot CoT come in. It’s like equipping your AI with a detective’s reasoning toolkit, allowing it to explain its decisions and establishing a new level of transparency in how it arrives at an answer.

Automatic CoT has the model generate its own worked examples to use as prompts, while Zero-shot CoT relies on a simple instruction to reason step by step, without any worked examples in the prompt.

Chain-of-thought prompting, whether in its automatic or zero-shot variant, significantly enhances the capabilities of sufficiently large language models by promoting structured and logical problem-solving.

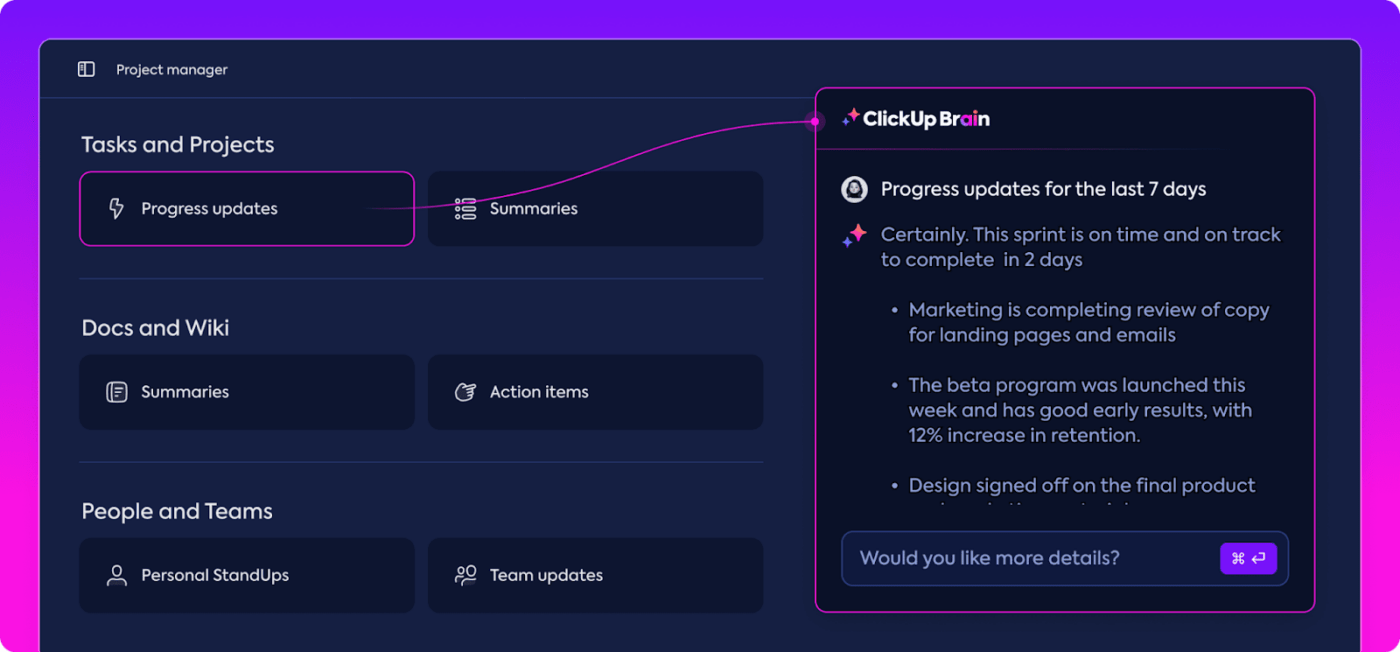

Tools like ClickUp Brain harness this power to deliver sophisticated, user-friendly solutions for complex tasks.

At its core, ClickUp Brain leverages advanced AI techniques, potentially including chain-of-thought prompting, to enhance the problem-solving and reasoning abilities of large language models and offer structured solutions for complex tasks.

Integrated seamlessly into the ClickUp project management platform, ClickUp Brain provides much-needed user assistance, enhancing developer productivity by:

- Breaking down complex reasoning tasks: When asked to plan a project, ClickUp Brain considers dependencies and priorities of relevant ClickUp Tasks to generate a step-by-step breakdown of the process. You can also ask it to explain its reasoning for the suggested approach

- Enhancing problem-solving: For problems requiring detailed analysis, ClickUp Brain can simplify the reasoning process to provide problem-specific conclusions, recommendations, and solutions

- Streamlining code reviews: Imagine ClickUp Brain analyzing code commits and suggesting potential bugs or inefficiencies. It could then use CoT prompting to explain its reasoning, highlighting specific lines of code and outlining the thought process behind its suggestions. This can streamline code review processes and improve code quality

- Automating documentation generation: ClickUp Brain can be prompted to automatically generate clear and concise ClickUp Docs from code comments, meeting notes, and project discussions, saving developers valuable time and effort

- Improving debugging: ClickUp Brain could assist developers by analyzing error messages and suggesting potential solutions. Using CoT prompting could not only provide the solution but also explain the thought process behind it, guiding developers through the debugging steps and helping them understand the root cause of the issue

- Improving decision-making: By generating intermediate steps and recommendations for a specific issue, ClickUp Brain helps users understand the logic behind suggestions (alternative solutions), aiding in better decision-making

Also read: AI Tools for Developers

Learning and Improving with Chain-of-Thought Prompting

To optimize CoT prompts, you can rely on several techniques:

Focus on the balancing act

Don’t hold the AI’s hand through every step. Provide a roadmap, not a script. Here’s a bad CoT prompt for sentiment analysis in restaurant reviews: “Is the word ‘happy’ present? If yes, positive sentiment. If no, negative sentiment.”

This is overly simplistic and doesn’t leverage the AI’s capabilities. Here’s a better approach: “Analyze the reviewer’s word choices. Are there indicators of joy, excitement, or satisfaction like ‘delicious,’ ‘wonderful,’ or ‘highly recommend’? Conversely, are there words suggesting disappointment, frustration, or anger like ‘inedible,’ ‘terrible,’ or ‘avoid at all costs’?”

This allows the AI to use its knowledge of language to understand the sentiment behind the words.

Clear communication is key

Ditch the technical jargon. Instead of saying, “Perform Named Entity Recognition (NER),” use something the AI can understand clearly: “Identify key details in the text, like the name of the restaurant, specific dishes mentioned, or people involved.” This ensures the AI focuses on the information relevant to the task.

Show, don’t tell

When training detectives, you wouldn’t just say, “Identify the culprit.” You’d show them a few examples. The same goes for CoT prompts. Don’t just tell the AI to “identify emotions.”

Provide the AI with real-world examples where the prompt breaks down the text, analyzes word choices, and considers context, all leading to a final sentiment classification.

For instance, you could provide the AI with a positive review that mentions “delicious food” and “friendly service,” where the prompt highlights these positive phrases. You could also provide a negative review with words like “cold fries” and “slow service” and show the AI how these contribute to the negative sentiment.

Self-consistency: Why your AI shouldn’t be fickle

Imagine your AI confidently classifying a review as positive because it mentions “great value,” then backtracks later when it encounters sarcasm like “great value…for cardboard pizza.” Not a good look, right?

The same goes for CoT prompts. Ensure the intermediate reasoning steps you provide logically lead to the final answer. Double-check your prompts, and if the AI’s response contradicts the chain, refine it iteratively. Consistency is key to building a reliable AI partner.
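
One common way to test this in practice, known in the research literature as self-consistency, is to sample several reasoning chains for the same input and keep the answer most of them agree on. A minimal sketch, assuming the final labels have already been extracted from each sampled response (the sample values are made up for illustration):

```python
from collections import Counter
from typing import List, Tuple

def majority_answer(answers: List[str]) -> Tuple[str, float]:
    """Return the most common answer and the share of samples that agree."""
    counts = Counter(a.strip().lower() for a in answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)

# Illustrative labels from four sampled reasoning chains for one review.
sampled = ["negative", "Negative", "positive", "negative "]
label, agreement = majority_answer(sampled)
print(label, agreement)
```

A low agreement score is a useful signal that the prompt or the chain needs refining.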

Beyond input-output: Unveiling the reasoning power

Traditional AI training is often a black box. You feed it data (a movie review), and it spits out a classification (positive/negative). But CoT lets you peek behind the curtain.

The AI reveals its thought process and steps to reach the answer (identifying sentiment based on word choice and context). This transparency builds trust and allows you to diagnose potential biases or errors in the reasoning chain.

CoT with response_format: Structuring the thought process

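Imagine your detective presenting a jumbled mess of evidence in a case. Specifying a response format, whether in the prompt itself or through an option such as the response_format parameter that some LLM APIs offer, is like a handy organizer that labels every piece and helps solve the jigsaw puzzle faster.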

You can instruct the AI to present its reasoning steps in a clear format, like a bulleted list or a numbered sequence. This makes the entire process easier for developers—just like a well-structured case file for a judge.

You can even highlight crucial steps, emphasizing specific evidence that significantly impacted the AI’s decision.
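
A minimal sketch of the prompt-based route. The instruction wording, the "Answer:" convention, and the sample response are assumptions for illustration, not a specific API's format.

```python
import re
from typing import Dict, List, Optional, Union

FORMAT_INSTRUCTION = (
    "Present your reasoning as a numbered list, one step per line, "
    "then give the result on a final line starting with 'Answer:'."
)

def parse_structured_response(text: str) -> Dict[str, Union[List[str], Optional[str]]]:
    """Split a formatted response into its reasoning steps and final answer."""
    steps = re.findall(r"^\s*\d+[.)]\s*(.+)$", text, re.MULTILINE)
    match = re.search(r"^\s*Answer:\s*(.+)$", text, re.MULTILINE)
    return {"steps": steps, "answer": match.group(1).strip() if match else None}

# Illustrative response in the requested format.
sample_response = (
    "1. The review praises the 'delicious food'.\n"
    "2. It also mentions 'slow service'.\n"
    "3. The praise outweighs the complaint.\n"
    "Answer: mostly positive"
)
parsed = parse_structured_response(sample_response)
print(parsed["steps"])
print(parsed["answer"])
```

Because each step is a separate item, you can log, display, or review individual steps instead of one block of text.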

The future of CoT: It’s just the beginning

CoT prompting is fairly new for NLP and AI. Here’s a glimpse into the exciting future:

- Automating CoT prompt generation: Imagine an AI assistant that writes your CoT prompts for you! This would save developers and researchers significant time and effort

- Tackling super-complex reasoning: With even more advanced CoT techniques, AI systems could tackle problems that would leave even the most skilled NLP engineers scratching their heads. Think of an AI that can debug complex code line by line, explaining its reasoning at each step, or analyze financial markets with a clear and transparent chain of thought

CoT can empower AI systems to learn and improve continuously. By analyzing their own reasoning steps and outcomes, AI models can identify weaknesses and refine their approaches over time. This paves the way for a new generation of “lifelong learning” AI systems that constantly evolve and improve.

Learning and Improving with Chain-of-Thought Prompting

Self-consistency is important when using Chain-of-Thought prompts, as it ensures that the answers stay consistent and make sense.

Checking for it also makes you think more deeply and clearly about the questions you ask, which leads to better ideas and insights.

- Chain-of-thought (CoT) techniques are better than traditional input-output prompts because they encourage exploring interconnected ideas and concepts. In other words, such connected thinking leads to more nuanced and holistic responses, enabling deep-topic research and creative problem-solving

- Speaking of ideas, specifying a ‘response_format’ adds versatility to the prompting process for even better output. You can state how you want the AI to respond: narrative storytelling, bullet points, or even diagrams

- Regardless of the choice, the resulting CoT prompts are clearer and more organized, which is a great combination for better brainstorming, problem-solving, and learning new things

The trifecta of clarity, creativity, and coherence is the agenda for chain-of-thought prompts.

And when you throw in ‘response_format’, you’re set up for next-level ideation—the kind you never expected was even remotely possible.

That’s the potential of CoT, which you (kind of) know by now. But what you don’t is another ace up CoT’s sleeve—flexibility.

Yes, chain-of-thought prompting isn’t rigid; it’s surprisingly adaptable. So, don’t hold back.

Can’t digest what the LLM spat out?

Switch it up! Try something new. The “my way or the highway” doesn’t apply to CoT. You’re the person behind the wheel of the AI engine, so what you say goes.

So keep your mind open, experiment, and have a little fun. In time, you’ll get what you asked for.

Also read: Mastering Prompting Concepts: 10 Best Prompt Engineering Courses to Make the Best of LLMs

ClickUp: Your Gateway to CoT-powered Project Management

By now, you’ve hopefully gained a solid understanding of chain-of-thought prompting and its potential to transform our interactions with AI.

This innovative technique empowers large language models to not only answer questions but also reveal their reasoning.

As CoT continues to evolve, the possibilities for simplifying complex, symbolic reasoning tasks and fostering deeper human-AI collaboration are boundless.

Ready to harness the power of CoT in your workflow? Look no further than ClickUp!

ClickUp seamlessly integrates AI features like ClickUp Brain, allowing you to leverage CoT for enhanced project management. It streamlines your decision-making by providing clear, step-by-step solutions, facilitating a deeper understanding of the reasoning behind each suggestion.

Enhance your projects with ClickUp. Sign up for a free ClickUp trial today and experience the future of AI-powered project management.
