ChatGPT Voice vs. Whisper AI: Key Differences Explained

OpenAI, the frontrunner in AI innovation, has consistently been delivering tools that transform human-computer interaction.

ChatGPT Voice Mode and Whisper AI are from the same company, but tackle voice processing from opposite angles.

While the former facilitates real-time conversations, the latter is an automatic speech recognition model that transcribes audio into text.

With this ChatGPT Voice vs. Whisper AI guide, let’s break down their distinct capabilities and see how each technology fits into modern voice-powered workflows.

As a bonus, we recommend another tool, the in-house favorite, that converts transcriptions into actions.

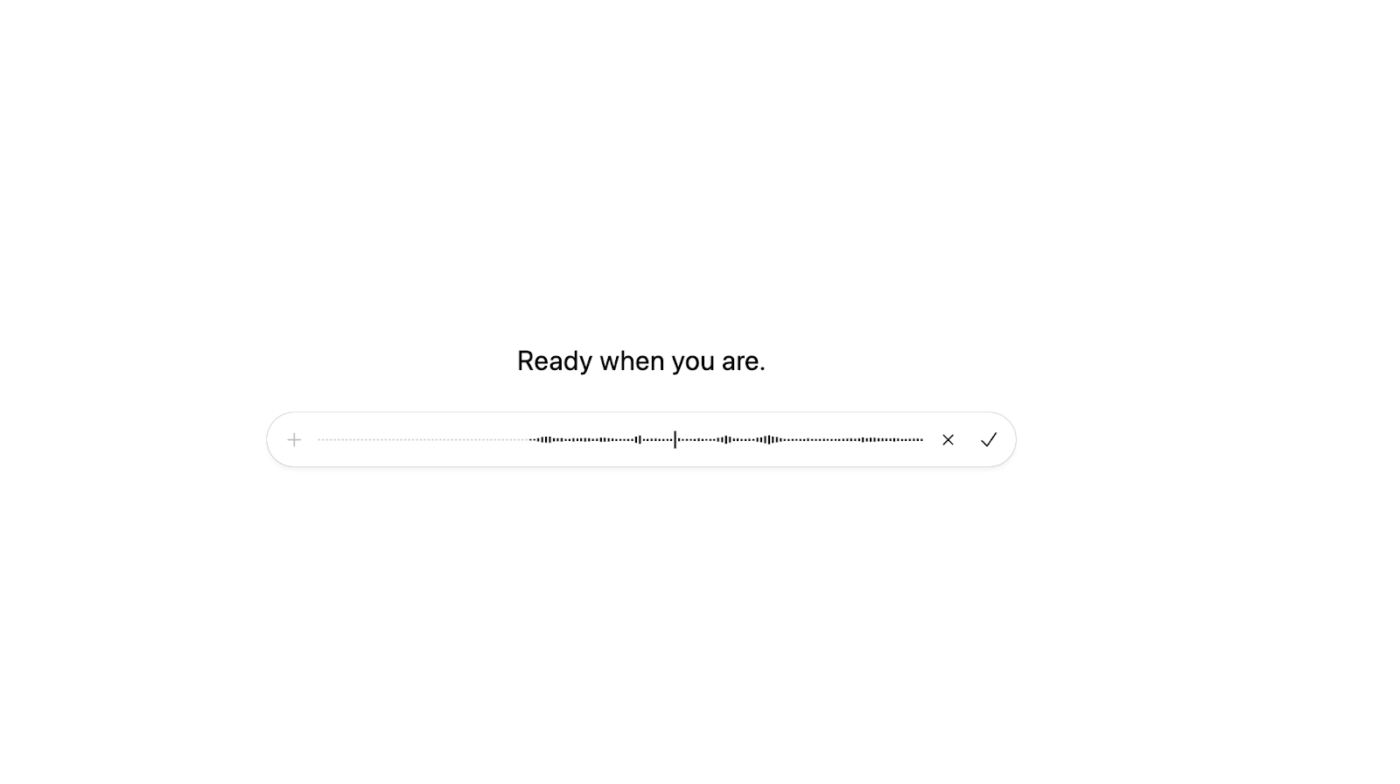

ChatGPT Voice Mode is a ChatGPT feature that lets you hold spoken conversations with an AI chatbot in real time. Because the interaction is hands-free, a conversation can continue in the background while you use other apps, or even while your phone screen is locked.

Use it to get quick answers to your questions, brainstorm ideas, or simply learn about a topic with natural back-and-forth conversations.

Voice Mode supports more than two dozen languages and offers nine distinct output voices.

Voice Mode shifts from conventional text-to-speech chatbots toward conversational and emotionally aware interactions. Here are some of its features that make it stand out.

Advanced Voice Mode in ChatGPT can adjust mid-conversation if you interrupt while it’s responding. This makes it much easier to add new details or ask a follow-up question without waiting.

Voice Mode also waits through longer pauses instead of jumping in prematurely, so you can take a moment to collect your thoughts.

💡 Pro Tip: Always follow the 3-Second Rule when using any voice technology. When you pause for 2-3 seconds after asking a complex question, it gives AI time to process the context and deliver more thoughtful responses.

ChatGPT’s context retention works across voice and text interactions. When you switch between text and voice within the same thread, you don’t need to feed in details again; it picks up nuances and knows what you are referring to.

Unlike tools like Siri and Alexa, which have smaller retention windows, ChatGPT Voice Mode maintains context throughout your session (even if it runs for hours).

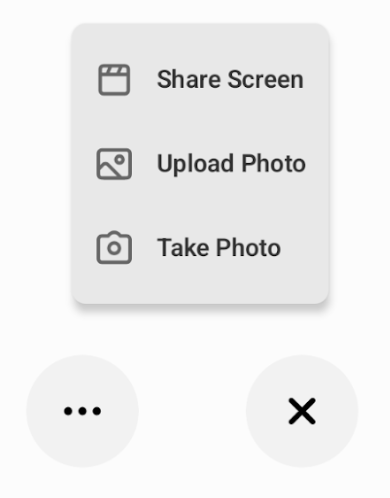

On ChatGPT mobile apps, you can combine voice commands with visual content. This advanced setting lets you share your screen, upload videos, or point your camera directly at objects. This visual-voice combination opens up practical problem-solving scenarios.

For example,

👀 Did You Know? LLMs are increasingly offering massive context windows. Claude gives ~200K tokens, GPT-4-turbo up to 128K, and Gemini ~2 million tokens.

📚 Read More: Top Free Screen Recorder No Watermark Tools

ChatGPT Voice Mode is included with the ChatGPT plans and is not priced separately.

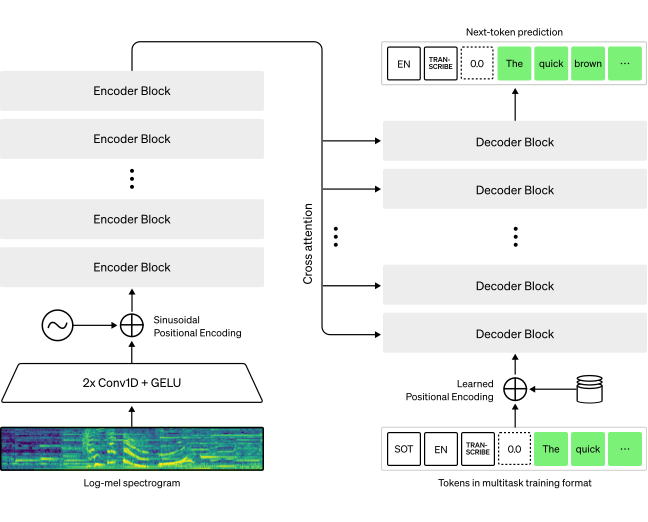

Whisper is an automatic speech recognition (ASR) system that converts spoken audio or recorded files into written text. Trained on 680,000 hours of multilingual and multitask supervised data, this open-source model focuses purely on transcription accuracy.

With one-third of its pre-training data being multilingual, Whisper can recognize and transcribe over 99 languages with remarkable precision. The system demonstrates robust performance even for poor-quality audio with multiple speakers and background noise.

Here are Whisper’s key features that make it a standout speech-to-text transcription technology.

Whisper is an open-source speech-to-text transcription software with no licensing fees. Since it is open source, you can access the complete codebase and modify it as per your specific needs for deployment.

The tool also provides comprehensive documentation. Developers can examine how the model processes audio, understand its decision-making logic, and troubleshoot issues directly in the source code.

❗Caution: Whisper has been reported to invent medical conditions or treatments, false side-effects, racial or demographic statements, sometimes violent content, and even random phrases like “Thank you for watching!” to fill up silences in the input.
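One hedged way to act on this caution is to flag suspicious segments for human review before trusting a transcript. The sketch below assumes the segment dictionaries produced by the open-source `openai-whisper` package, which carry a `no_speech_prob` score alongside `start`, `end`, and `text`. The phrase list, the 0.6 threshold, and the function name `flag_suspect_segments` are illustrative assumptions, not part of Whisper itself.

```python
from typing import Dict, List

# Phrases Whisper has been reported to invent during silence.
# Illustrative only; extend this with fillers you see in your own audio.
SUSPECT_PHRASES = ("thank you for watching",)


def flag_suspect_segments(
    segments: List[Dict], no_speech_threshold: float = 0.6
) -> List[Dict]:
    """Return segments that deserve a human check before being trusted."""
    flagged = []
    for seg in segments:
        text = seg.get("text", "").strip().lower()
        # A high no_speech_prob means the model itself suspects silence.
        likely_silence = seg.get("no_speech_prob", 0.0) > no_speech_threshold
        known_filler = any(phrase in text for phrase in SUSPECT_PHRASES)
        if likely_silence or known_filler:
            flagged.append(seg)
    return flagged


# Hand-written sample data standing in for real transcription output.
example = [
    {"start": 0.0, "end": 4.2, "text": " The patient reported mild headaches.", "no_speech_prob": 0.02},
    {"start": 4.2, "end": 9.0, "text": " Thank you for watching!", "no_speech_prob": 0.81},
]

for seg in flag_suspect_segments(example):
    print(f'{seg["start"]:.1f}s-{seg["end"]:.1f}s: {seg["text"].strip()}')
```

A filter like this only catches the obvious cases. Invented medical terms or names in otherwise normal speech still need a reviewer who can listen to the source audio.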

Whisper can be deployed locally or in the cloud. A local deployment lets you transcribe audio files without an internet connection, which is useful for companies that need complete data privacy and compliance with GDPR.

However, local Whisper deployment requires significant computational resources, particularly a high-performance GPU for optimal processing speeds.
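As a rough illustration of a local deployment, here is a minimal sketch using the open-source `openai-whisper` Python package (installed with `pip install openai-whisper`, with `ffmpeg` available on the machine). The helper names `format_timestamped` and `transcribe_locally` are hypothetical, and the `"base"` model size is just a lightweight default for experimentation. Model weights are downloaded the first time a model is loaded, so the fully offline behavior applies to later runs.

```python
from typing import Dict, List


def format_timestamped(segments: List[Dict]) -> str:
    """Render transcript segments as '[MM:SS] text' lines."""
    lines = []
    for seg in segments:
        minutes, seconds = divmod(int(seg["start"]), 60)
        lines.append(f"[{minutes:02d}:{seconds:02d}] {seg['text'].strip()}")
    return "\n".join(lines)


def transcribe_locally(audio_path: str, model_name: str = "base") -> str:
    """Transcribe a file on this machine; the audio is not sent anywhere."""
    # Imported here so the formatting helper works without the package installed.
    import whisper

    model = whisper.load_model(model_name)  # larger models need more GPU memory
    result = model.transcribe(audio_path)   # dict with "text" and "segments"
    return format_timestamped(result["segments"])
```

Calling `transcribe_locally("meeting.mp3")` (a placeholder file name) would return the transcript with one timestamped line per segment. Larger model names trade speed for accuracy.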

⚡ Template Archive: Don’t let your transcriptions gather digital dust. Use prebuilt meeting notes templates that automatically transform your transcribed conversations into structured, actionable formats your team can immediately use.

Because the model is open source, you can fine-tune Whisper for specific use cases and datasets. However, this is a resource-intensive process. To customize the model, you must prepare a dataset of audio recordings, each paired with its reference transcript.

The fine-tuning feature is helpful for industries that require product-specific vocabulary, such as transcription for the medical field, legal documentation, or customer support calls.
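To make the dataset preparation step more concrete, here is a small sketch that pairs audio clips with their transcripts and writes them to a JSON Lines manifest. The folder layout (a `.wav` clip next to a `.txt` file of the same name), the manifest fields, and the function names are all assumptions for illustration. Whatever fine-tuning toolkit you choose will define its own expected format.

```python
import json
from pathlib import Path
from typing import Dict, List


def build_manifest(data_dir: str) -> List[Dict[str, str]]:
    """Pair each .wav clip with the .txt transcript that shares its name."""
    pairs = []
    for audio in sorted(Path(data_dir).glob("*.wav")):
        transcript = audio.with_suffix(".txt")
        if not transcript.exists():
            continue  # skip clips that have no reference text yet
        text = transcript.read_text(encoding="utf-8").strip()
        if text:  # an empty transcript is useless as a training label
            pairs.append({"audio": str(audio), "text": text})
    return pairs


def write_manifest(pairs: List[Dict[str, str]], out_path: str) -> None:
    """Write one JSON object per line so the file can be streamed later."""
    with open(out_path, "w", encoding="utf-8") as f:
        for pair in pairs:
            f.write(json.dumps(pair, ensure_ascii=False) + "\n")
```

Skipping clips without transcripts keeps mislabeled examples out of the training set, which matters most for the domain-specific vocabulary the fine-tuning is meant to teach.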

🧠 Fun Fact: Whisper is trained on 680,000 hours of audio data, equivalent to 77 years of continuous listening. From podcasts to lectures and conversations to interviews, Whisper is trained on diverse, multilingual audio scraped from the web.
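The 77-year figure is easy to check with a quick calculation, assuming a 365-day year of nonstop listening:

```python
HOURS_OF_AUDIO = 680_000
HOURS_PER_YEAR = 24 * 365  # ignoring leap days

years = HOURS_OF_AUDIO / HOURS_PER_YEAR
print(f"{years:.1f} years of continuous listening")
```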

Whisper lets you build low-latency, multimodal experiences. Its pricing for 1 million API tokens includes:

📮 ClickUp Insight: Only 10% of our survey respondents use voice assistants (4%) or automated agents (6%) for AI applications, while 62% prefer conversational AI tools like ChatGPT and Claude.

The lower adoption of assistants and agents could be because these tools are often optimized for specific tasks, like hands-free operation or specific workflows.

ClickUp brings you the best of both worlds. ClickUp Brain is a conversational AI assistant that can help you with a wide range of use cases. On the other hand, AI-powered agents within ClickUp Chat channels can answer questions, triage issues, or even handle specific tasks!

📚 Read More: Best Wispr Flow Alternatives

ChatGPT Voice Mode allows natural back-and-forth interactions through spoken conversations. On the other hand, Whisper is purely a speech-to-text transcription system designed to convert audio into written text.

While one is known for conversational dialog, the other performs transcription across multiple languages.

Here’s a quick overview of the main differences between the two:

| Features | ChatGPT Voice Mode | Whisper AI |
| --- | --- | --- |
| Interaction model | Two-way conversational dialog with voice responses | One-way speech recognition for text conversion |
| Language support | Supports 30+ languages with native voice synthesis | Recognizes and transcribes 99+ languages accurately |
| Response type | Generates voice responses plus conversation transcript | Produces written text output only |
| Resource intensity | Cloud-based processing with minimal local requirements | Requires a high-performance GPU for optimal local processing |
| Training | Pre-trained conversational model, not customizable | Fine-tunable model for domain-specific terminology |
| Background noise handling | Good performance in conversational environments | Accurate even with poor audio quality |
| Integration complexity | Simple API integration with usage-based pricing | Integrating Whisper AI requires a complex setup for local deployment |
| Multiple speaker support | Designed for single-user interaction | Transcribes audio with multiple speakers, but does not label who is speaking without separate diarization tooling |
| Setup | Plug-and-play solution; can be used directly in ChatGPT as well | Requires manual setup on Cloud or local applications |

ChatGPT Voice Mode processes your voice inputs and responds with a voice output. It is multimodal, understands your natural language, and can handle interruptions and cut through background noise.

You also get the conversation transcript in your ChatGPT thread; however, the accuracy of this transcript varies.

Whisper, on the other hand, functions as a one-way speech recognition system. It converts audio files or live speech into accurate written text.

🏆 Winner: ChatGPT Voice Mode stands out for real-time conversational capabilities, while Whisper is limited to transcription-only use.

⚡ Template Archive: Voice conversations often generate scattered to-dos and project ideas that get forgotten. Use task list templates to capture these spoken commitments and transform them into organized, trackable workflows with clear priorities.

ChatGPT Voice Mode can build conversations on earlier discussions within the same thread. It picks up on implied meanings and understands nuanced requests by referencing information shared earlier in the conversation. This contextual awareness creates seamless dialogue experiences.

Whisper, however, lacks understanding of conversational context since it operates as a transcription-only tool. It processes each audio segment independently without maintaining memory of previous interactions.

While it accurately converts speech to text, it doesn’t interpret meaning or relationships between separate audio files or conversations.

🏆 Winner: ChatGPT Voice Mode wins for its ability to build on past context and sustain meaningful dialogue.

ChatGPT Voice Mode excels in real-time conversational processing. It processes speech input and generates voice responses with minimal latency.

Whisper, however, is built for batch processing of pre-recorded files. In other words, it processes a file only after the recording is complete. Its processing time is also slower than that of some alternatives, a tradeoff that prioritizes transcription accuracy over speed.

🏆 Winner: ChatGPT Voice Mode is better for real-time interactions, while Whisper suits post-meeting documentation.

ChatGPT Voice Mode is ideal for interactive tasks and problem-solving discussions where you need an AI assistant to think and respond in real time. It suits those looking for quick but reliable answers to problems.

However, Whisper is useful when you want to create written records from audio content and dictated text. It is primarily used for transcribing voice memos and providing accessibility features for people with impaired hearing. Its strength lies in documentation and archival purposes.

🏆 Winner: There is no clear winner; it depends on your goal. Choose ChatGPT Voice Mode for interactive dialogue and Whisper for documentation and archival needs.

ChatGPT Voice Mode is available across all ChatGPT pricing tiers; however, free users get limited access. It has an open API that developers can integrate into applications, with usage-based pricing through OpenAI’s platform.

Whisper offers more flexible pricing through OpenAI’s API and is one of the most cost-effective tools for transcription needs at $0.006 per minute of audio. However, deploying the local model is more economical for organizations that require frequent processing.
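To see where local deployment starts to pay off, a quick back-of-the-envelope calculation helps. The sketch below uses the $0.006 per minute API price quoted above. The $300 monthly figure for running a local GPU setup is purely a hypothetical placeholder, so substitute your own hardware and maintenance costs.

```python
API_PRICE_PER_MINUTE = 0.006  # USD per minute of audio, as quoted in this article


def api_cost(audio_minutes: float) -> float:
    """Cost of transcribing the given audio volume through the API."""
    return audio_minutes * API_PRICE_PER_MINUTE


def break_even_minutes(monthly_local_cost: float) -> float:
    """Monthly audio volume above which a fixed-cost local setup is cheaper."""
    return monthly_local_cost / API_PRICE_PER_MINUTE


# Hypothetical inputs: 100 hours of audio, and $300/month to run local hardware.
print(f"100 hours via API: ${api_cost(100 * 60):.2f}")
print(f"Break-even at $300/month: {break_even_minutes(300) / 60:.0f} hours of audio")
```

Under these assumed numbers, the API stays cheaper until a team transcribes several hundred hours a month, which matches the point that local deployment mainly benefits organizations with frequent, high-volume processing.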

🏆 Winner: Depends on how you plan to use them. ChatGPT Voice Mode suits conversational, on-demand usage, while Whisper is more cost-efficient for large-scale transcription pipelines.

🌟 Bonus: While ChatGPT Voice Mode and Whisper focus on real-time conversation and transcription, they don’t offer built-in workflow automation.

Autopilot agents (like the ones in ClickUp) can be prebuilt or custom-built to act automatically based on specific triggers, something neither ChatGPT Voice nor Whisper can do natively.

Here’s why this matters:

To conclude the debate, we took it to Reddit. Here are some user opinions on both tools.

While ChatGPT Voice Mode initially garnered an extremely positive response, users (at large) are experiencing frustration with its new updates. According to one of the users,

I used to look forward to using it (ChatGPT Voice Mode) to unpack my week at the end of a long work week, or deep dive into a technical topic, or just free form chat. The conversations used to feel natural and enjoyable. Now it’s annoying as hell. Short responses, being curt. No matter what I’m talking about, it steers the conversation in such a way that there’s nowhere to go. The conversation just falls flat. Like a person that’s annoyed with you, has something else to do, and is just trying to appease you real quick before it has to leave.

Another user also shared a similar viewpoint on the evolving Advanced Voice Mode. According to the thread,

Advanced Voice is the only voice model actually going backwards as time moves on. If we look back at the original demos, it was FULL expressive mode, extremely lifelike. After the latest update, especially, it can’t whisper, it can’t do accents. It has one, slightly bored, corporate help desk mode.

Whisper requires extensive setup, and even then, there are occasional glitches while processing large files. According to a user,

I’ve been using the Whisper’s large model for a year and a half or so, and while it’s amazing when it works, it still begins to experience hallucinations and doesn’t really recover until it’s reloaded.

Neither ChatGPT Voice Mode nor Whisper comes without tradeoffs. It’s better to understand where they lag, so there aren’t any surprises while using them in real scenarios.

💡 Pro Tip: Use ClickUp Brain MAX for voice-to-text that goes beyond transcription.

While ChatGPT Voice Mode and Whisper handle voice in isolation, ClickUp Brain MAX transforms speech into structured, contextualized knowledge inside the same platform where your team already works. Here’s how it outpaces both:

Neither ChatGPT Voice Mode nor Whisper AI fully closes the loop from spoken conversations to actionable knowledge.

ClickUp, the everything app for work, bridges the gap. It allows you to capture, process, and act on conversations. Let’s walk through the key features of ClickUp that make this possible.

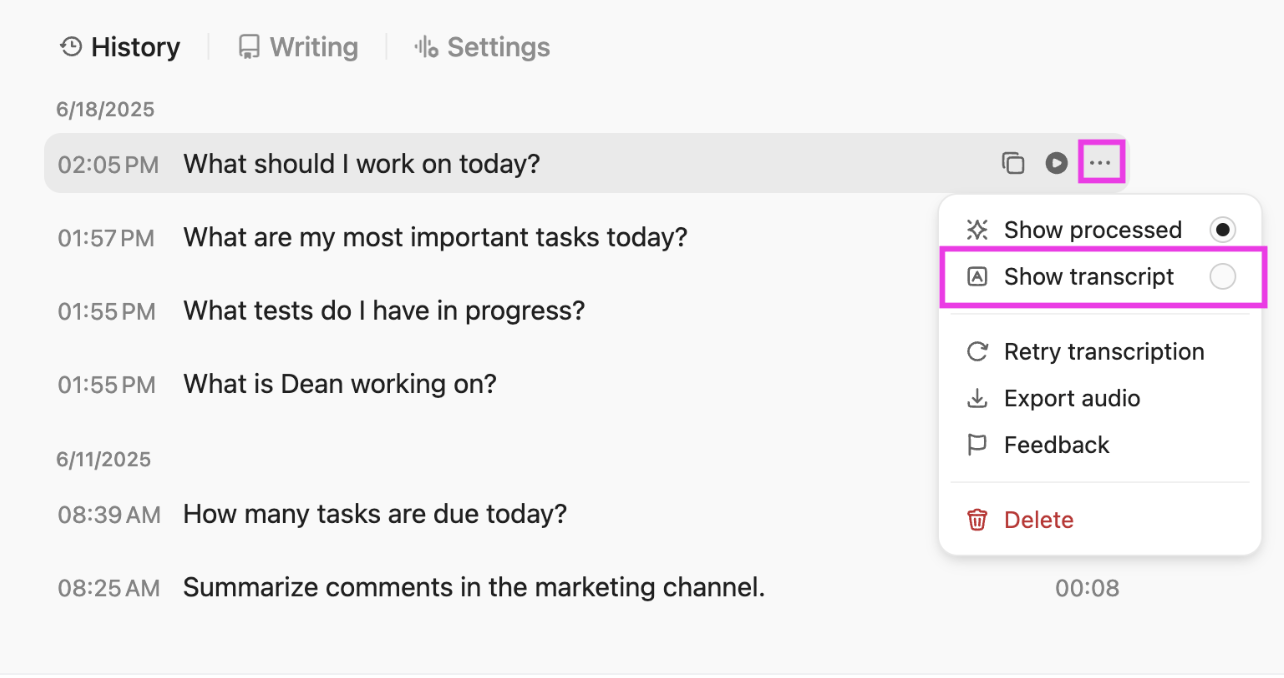

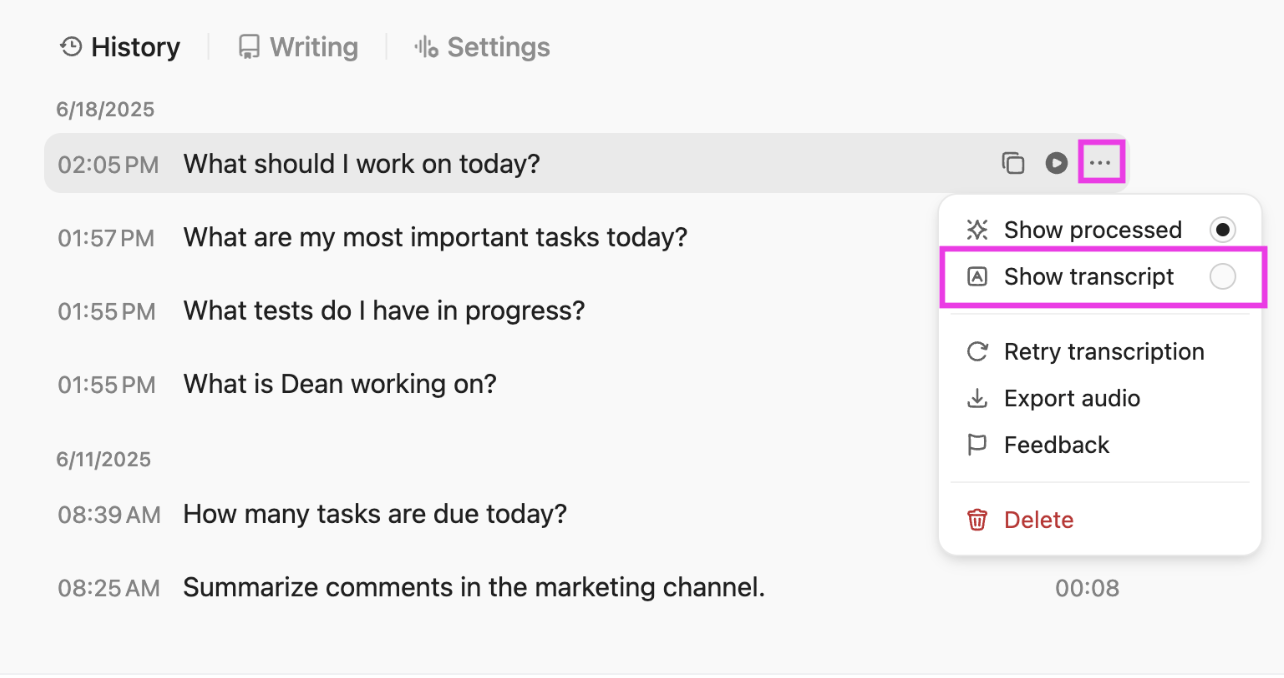

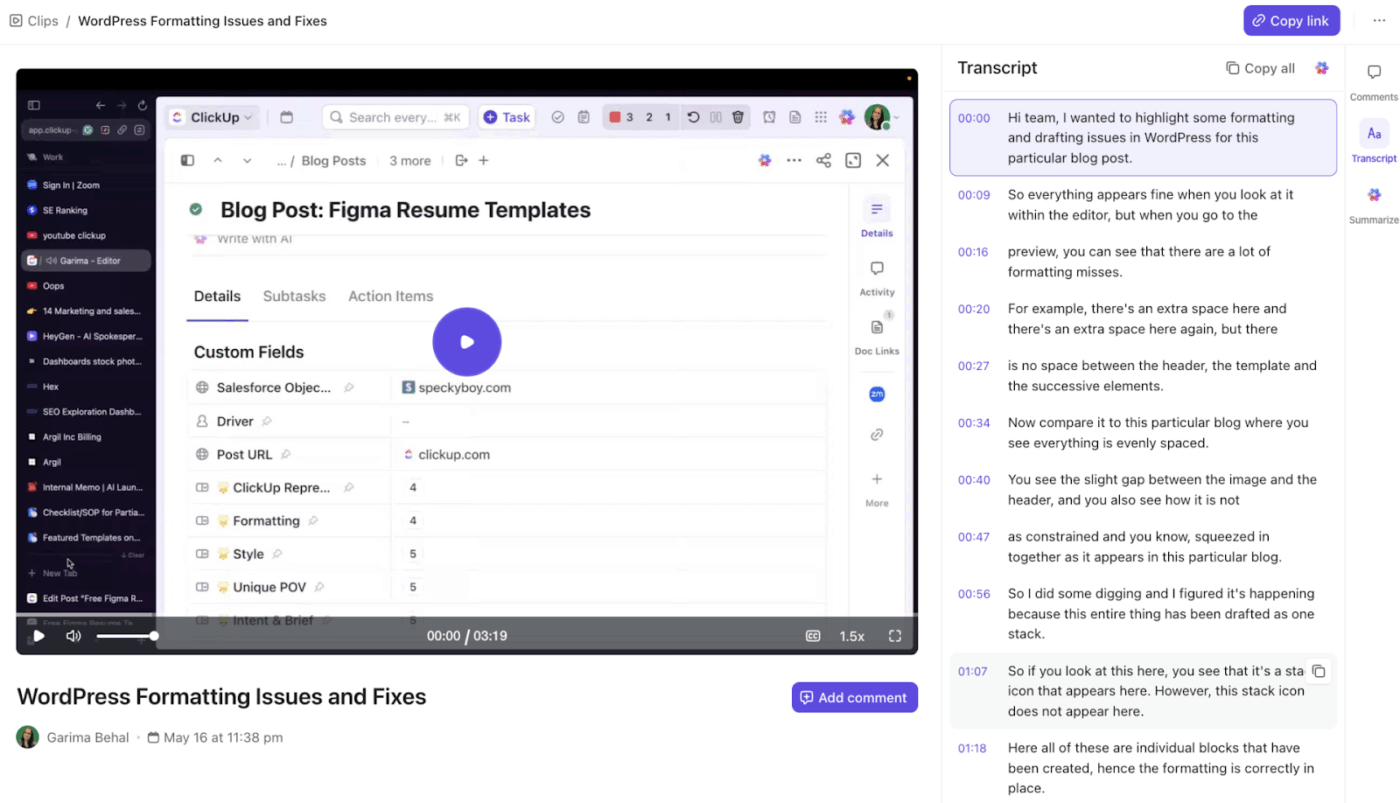

You don’t need to configure external APIs or deploy separate AI transcription tools to transcribe hour-long meetings. When using ClickUp, you get that functionality built in with ClickUp AI Notetaker.

Allow it to join your meetings, and it will transcribe the meeting audio into text, identify speakers, and add timestamps, so you can follow along with the conversation.

With ClickUp AI, you get transcription support across meetings, voice notes, and screen recordings. It turns audio from any workflow into searchable and actionable text.

The additional features that give you an edge over ChatGPT Voice or Whisper AI include:

💡 Pro Tip: ClickUp AI Notetaker tags action items, deadlines, and decisions made during the meeting and organizes them under ClickUp Docs.

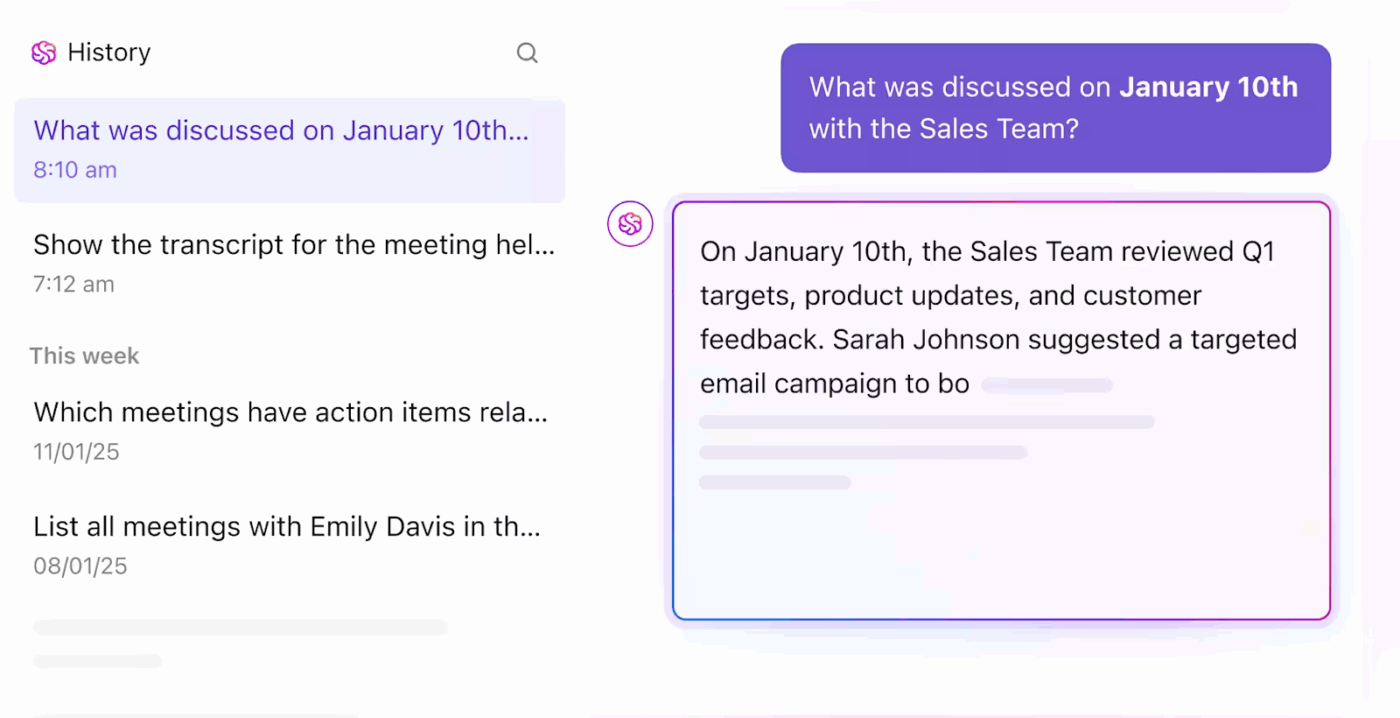

While ClickUp’s AI Notetaker transcribes your meetings, ClickUp Brain, the built-in AI assistant, adds a powerful layer of intelligence to your notes.

We mentioned earlier how it can summarize transcripts or pull specific moments without manually searching the content. It can even read through the transcript and extract key takeaways.

ClickUp Brain can do a whole lot more:

Check this YouTube video for a more detailed overview of how ClickUp Brain transcribes voice and video:

🌟 Bonus: ClickUp Brain users can choose from multiple external AI models, including ChatGPT, Claude, and Gemini, for various writing, reasoning, and coding tasks, right from within their ClickUp platform!

Maximize project efficiency with the AI model of your choice with ClickUp!

We already discussed how ClickUp Notetaker makes notes from a video and stores them in ClickUp Docs.

Docs offers comprehensive document management capabilities that standalone dictation tools simply can’t match. Your work stays organized in a searchable Docs Hub so you can quickly find any information you need.

Here are the key voice-to-document capabilities that ClickUp Docs offers:

💡 Pro Tip: Use ClickUp Assign Comments to tag specific teammates directly inside your notes or Docs. You can convert feedback into trackable tasks, assign an owner to each item, and eliminate post-meeting follow-up confusion.

ClickUp’s integrated AI capabilities allow intelligent automation that siloed AI tools cannot achieve. And that’s why we believe it to be a better alternative to Voice and Whisper.

The speech-to-speech capabilities of ChatGPT Voice Mode and the transcription accuracy of Whisper have opened possibilities for hands-free productivity and multilingual communication. However, a significant gap still exists between AI assistance and actual work execution.

ClickUp, with its universal workspace approach, connects AI-powered voice-to-text capabilities directly to its project workflows. Here, your dictated ideas become assigned tasks, while meeting transcripts transform into collaborative project documents.

Combine this with all your tasks, documents, and chats in one place, and you can see why ClickUp is the one-for-everything AI solution you need.

Sign up for free now and transform how your team uses voice technology for actual project execution.

© 2026 ClickUp