Common AI Adoption Challenges And How to Overcome Them

Companies aren’t just experimenting with AI anymore. They’re racing to implement it, often without realizing how many AI adoption challenges are waiting just around the corner.

✅ Fact check: 55% of organizations have adopted AI in at least one business function, but only a tiny share are seeing significant bottom-line impact. AI adoption challenges may be a big part of why.

That gap between adoption and actual value usually comes down to execution. Misaligned systems, untrained teams, and unclear goals are all factors that add up fast.

The importance of AI in the modern workplace isn’t just about using new tools. It’s about building a smarter way of working that scales with your business. And before that happens, you need to clear the roadblocks.

Let’s break down what’s holding teams back and what you can do to move forward with confidence.

Struggling to turn AI ambition into actual business impact? Here’s how to overcome the most common AI adoption challenges:

✨ Streamline AI-driven execution with ClickUp and keep everything in one connected workspace.

You’ve got the tools. You’ve got the ambition. But somewhere between pilot testing and full-scale rollout, things start to break.

This is where most AI adoption challenges show up, not in the tech, but in the messy middle of execution.

Maybe your teams are working in silos. Or your legacy systems can’t sync with your new AI layer. Maybe no one’s exactly sure how success is being measured.

A few friction points tend to appear across the board:

The truth is that AI systems don’t work in isolation. You need connected data, trained teams, and workflows that create space for intelligent automation.

Still, many organizations charge ahead without setting those foundations. The result? Burnout, fragmented progress, and stalled momentum.

So what exactly gets in the way of successful adoption, and what can you do about it?

One of the most overlooked AI adoption challenges isn’t technical. It’s human, despite what the numbers say about growing adoption rates (see the latest AI stats).

When AI is introduced into a team’s workflow, it often triggers silent resistance. Not because people fear technology but because they weren’t brought into the process. When tools appear without explanation, training, or context, adoption becomes a guessing game.

You might see polite agreement in meetings. But behind the scenes, teams continue using old methods, sidestepping new tools, or duplicating work manually. This resistance doesn’t look like protest; it looks like productivity slipping through the cracks.

A customer success team is asked to use a new AI assistant to summarize support tickets. On paper, it’s a time-saver. In practice, agents still write summaries manually.

Why? Because they aren’t sure if the AI summary covers compliance language or captures key details.

In product development, a team receives weekly backlog recommendations powered by an AI model. But the team lead skips them every time, saying it’s faster to use instinct. The AI outputs sit untouched not because they’re bad, but because no one explained how they’re generated.

Across roles, this pattern emerges:

Over time, that passive resistance scales into real adoption failure.

Telling people AI will help isn’t enough. You have to show how it supports their goals and where it fits into their process.

Teams adopt what they trust. And trust is earned through clarity, performance, and relevance.

💡 Pro Tip: Use ClickUp Dashboards to surface simple metrics like time saved or cycle time reduction on AI-assisted tasks. When teams see progress tied directly to their effort, they stop seeing AI as a disruption and start seeing it as leverage.
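If you want to sanity-check a metric like cycle time reduction outside a dashboard, the arithmetic is simple. The sketch below is illustrative only: the task records, the field names (`started`, `finished`, `ai_assisted`), and the helper functions are assumptions made for this example, not part of any ClickUp export format or API.

```python
from datetime import datetime
from statistics import mean

# Hypothetical task records; the field names are assumptions for illustration.
TASKS = [
    {"started": "2025-01-06T09:00", "finished": "2025-01-06T17:00", "ai_assisted": False},
    {"started": "2025-01-07T09:00", "finished": "2025-01-07T15:00", "ai_assisted": False},
    {"started": "2025-01-08T09:00", "finished": "2025-01-08T13:00", "ai_assisted": True},
    {"started": "2025-01-09T09:00", "finished": "2025-01-09T12:00", "ai_assisted": True},
]


def cycle_hours(task):
    """Return the elapsed hours between a task's start and finish."""
    start = datetime.fromisoformat(task["started"])
    end = datetime.fromisoformat(task["finished"])
    return (end - start).total_seconds() / 3600


def cycle_time_reduction(tasks):
    """Fractional drop in average cycle time for AI-assisted tasks vs. the rest."""
    manual = [cycle_hours(t) for t in tasks if not t["ai_assisted"]]
    assisted = [cycle_hours(t) for t in tasks if t["ai_assisted"]]
    if not manual or not assisted:
        raise ValueError("need at least one task in each group")
    baseline = mean(manual)
    return (baseline - mean(assisted)) / baseline


print(f"Cycle time reduction: {cycle_time_reduction(TASKS):.0%}")
```

With this sample data, manual tasks average 7 hours and AI-assisted tasks average 3.5 hours, so the reduction is 50%. A comparison like this only means something when the two groups contain similar kinds of work.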

No matter how powerful your AI systems are, they’re only as trustworthy as the data they rely on. And for many organizations, that trust is fragile.

Whether you’re dealing with sensitive customer records, internal business logic, or third-party data integrations, the risk factor is real. One misstep in handling data can put not just your project, but your entire brand at risk.

For leaders, the challenge is balancing the speed of AI implementation with the responsibility of data security, compliance, and ethical guardrails. When that balance is off, trust breaks on both ends, internally and externally.

📖 Read More: How to Use AI in Leadership (Use Cases & Tools)

Even the most AI-forward teams pull back when privacy risks feel unmanaged. That’s not hesitation; it’s self-preservation.

When these issues aren’t addressed early, teams opt out entirely. You’ll hear things like “We’re not touching that feature until security signs off” or “We can’t risk exposing sensitive data to a black-box model.”

Security and privacy aren’t afterthoughts; they are adoption enablers. When teams know the system is secure, they’re more willing to integrate it into critical workflows.

Here’s how to remove hesitation before it becomes resistance:

📖 Also Read: A Quick Guide to AI Governance

People don’t need every technical detail, but they do need to know that the AI they’re using isn’t putting the business at risk.

💡 Pro Tip: With tools like ClickUp Docs, you can centralize internal AI usage policies, data governance protocols, and model documentation in a way that’s accessible across departments.

This is especially important when onboarding new teams into sensitive AI workflows.

When data privacy is visible and proactive, trust becomes operational, not optional. That’s when teams start using AI where it matters most.

One of the fastest ways for an AI initiative to lose momentum is when leadership starts asking,

“What are we actually getting out of this?”

Unlike traditional tools with fixed deliverables, AI implementation often involves unknown variables: training timelines, model tuning, integration costs, and ongoing data operations. All of this makes budgeting difficult and ROI projections fuzzy, especially if you’re trying to scale quickly.

What starts as a promising pilot can quickly stall when cost overruns stack up, or when teams can’t tie AI outcomes to actual business impact.

AI rollouts tend to blur the line between R&D and production. You’re not just buying a tool; you’re investing in infrastructure, change management, data cleaning, and continuous iteration.

But finance leaders don’t sign off on “experiments.” They want tangible outcomes.

This disconnect is what fuels resistance from budget owners and slows adoption across departments.

If you’re only measuring AI success in hours saved or tickets closed, you’re underselling its value. High-impact AI use cases often show returns through decision quality, resource allocation, and fewer dropped priorities.

Shift the ROI conversation with:

When stakeholders see how AI contributes to strategic goals and not just efficiency metrics, the investment becomes easier to defend.

It’s tempting to go all-in on AI with big upfront investments in custom models or third-party platforms. But many organizations overspend before they’ve even validated the basics.

Instead:

💡 Pro Tip: Use ClickUp Goals to track the progress of AI initiatives against OKRs. Whether it’s shortening QA cycles or improving sprint forecasting, tying AI adoption to measurable goals makes spending more visible and justifiable.

AI doesn’t have to be a financial gamble. When implementation is phased, outcomes are defined, and progress is visible, the return starts to speak for itself.

Even the most sophisticated AI strategy will collapse without the internal knowledge to support it.

When companies rush to implement AI without equipping their teams with the skills to use, evaluate, or troubleshoot it, the result isn’t innovation but confusion. Tools go unused. Models behave unpredictably. Confidence erodes.

And the worst part? It’s often invisible until it’s too late.

AI adoption isn’t plug-and-play. Even tools with user-friendly interfaces rely on a fundamental understanding of how AI makes decisions, how it learns from inputs, and where its blind spots are.

Without that baseline, teams default to either:

Both behaviors carry risks. In a sales team, a rep might follow an AI lead-scoring recommendation without understanding the data inputs, resulting in wasted effort. In marketing, AI-generated content may be pushed live without human review, exposing the brand to compliance or tone issues.

You can’t outsource trust. Teams need to know what the system is doing and why.

👀 Did You Know? Some AI models have been caught confidently generating completely false outputs, a phenomenon researchers call “AI hallucinations.”

Without internal expertise, your team might mistake made-up information for facts, leading to costly errors or brand damage.

You’ll start seeing signs quickly:

In some cases, AI tools even generate new work. Instead of accelerating tasks, they create more checkpoints, manual overrides, and error corrections—all because teams weren’t effectively onboarded.

You don’t need every employee to be a data scientist, but you do need functional fluency across your workforce.

Here’s how to build it:

When training becomes part of your adoption strategy, teams stop fearing the tool and use it intentionally.

Even the best AI tool can’t perform if it’s isolated from the rest of your tech stack. Integration is about making sure that your data, workflows, and outputs can move freely across systems without delay or distortion.

Many teams discover this after implementation, when they realize their AI tool can’t access key documents, pull from customer databases, or sync with project timelines. At that point, what looked like a powerful solution becomes another disconnected app in an already crowded stack.

AI systems rely on more than just clean data—they need context. If your CRM doesn’t talk to your support platform, or your internal tools don’t feed into your AI model, it ends up working with partial information. That leads to flawed recommendations and broken trust.

Common signs include:

Even if the tool works perfectly in isolation, lack of integration turns it into friction, not acceleration.

Legacy systems weren’t built with AI in mind. They’re rigid, limited in interoperability, and often closed off from modern platforms.

This creates issues like:

Instead of a seamless experience, you get workarounds, delays, and unreliable results. Over time, this erodes team confidence in both the AI and the project itself.

Integration doesn’t have to mean expensive overhauls or full platform migrations. The goal is to make sure AI can interact with your systems in a way that supports day-to-day work.

Here’s how to approach it:

Adoption becomes natural when your AI solution fits into your existing ecosystem instead of floating beside it. And that’s when teams start using AI as a utility, not an experiment.

One of the most overlooked AI adoption challenges happens after deployment—when everyone expects results but no one knows how to measure them.

Leaders want to know if the AI is working. But “working” can mean a hundred different things: faster outputs, better decisions, higher accuracy, or improved ROI. And without clear performance indicators, AI ends up floating in the system, producing activity but not always impact.

AI doesn’t follow traditional software rules. Success isn’t just about whether the tool is used; it’s about whether the outputs are trusted, actionable, and tied to meaningful outcomes.

Common issues that show up include:

This creates a false sense of momentum where models are active, but progress is passive.

You can’t scale what you haven’t validated. Before expanding AI into new departments or use cases, define what success looks like in the first rollout.

Consider:

Use these to build a baseline before expanding the system. Scaling without validation only accelerates noise.

Many organizations fall into the trap of tracking volume-based metrics: number of tasks automated, time saved per action, and number of queries handled.

That’s a starting point, not a finish line.

Instead, build your measurement stack around:

These signals show you whether AI is being embedded, not just accessed.

A pilot that works in one department might fail in another. AI isn’t universal; it needs context.

Before scaling, ask:

Generative AI, for example, might speed up content creation in marketing but break legal workflows if brand voice or regulatory language isn’t enforced. Success in one area doesn’t guarantee scale-readiness in others.

💡 Pro Tip: Treat AI adoption like a product launch. Define success criteria, collect feedback, and iterate based on usage, not just deployment milestones. That’s how scale becomes sustainable.

AI systems can’t outperform the data they’re trained on. And when the data is incomplete, outdated, or stored in disconnected silos, even the best algorithms fall short.

Many AI adoption challenges stem not from the tools themselves, but from the messiness of the inputs.

It’s easy to assume your business has “lots of data” until the AI model needs it. That’s when problems surface:

The result? AI models struggle to train accurately, outputs feel generic or irrelevant, and trust in the system erodes.

You’ll start noticing signs like:

Even worse, teams may stop using AI entirely, not because it’s wrong, but because they don’t trust the inputs it was built on.

You don’t need perfect data to get started, but you do need structure. Focus on these foundational steps:

💡 Pro Tip: Create a shared internal glossary or simple schema reference doc before launch. When teams align on field names, timestamp formats, and what “clean” looks like, you reduce model confusion. This also builds trust in the outputs faster.
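A schema reference doc is easier to enforce when it can also be checked automatically. The sketch below shows one way to do that. Treat it as an assumption-laden example rather than a prescribed method: the field names (`customer_id`, `created_at`, `ticket_count`), the choice of ISO 8601 timestamps, and the `validate_record` helper are all hypothetical.

```python
from datetime import datetime

# Hypothetical shared schema: field name -> expected Python type.
SCHEMA = {"customer_id": str, "created_at": str, "ticket_count": int}

# Fields that must hold ISO 8601 timestamps (an assumed team convention).
TIMESTAMP_FIELDS = {"created_at"}


def validate_record(record):
    """Return a list of human-readable problems; an empty list means the record is clean."""
    problems = []
    # Check that every agreed field is present and has the agreed type.
    for field, expected in SCHEMA.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"wrong type for {field}")
    # Check that timestamp fields follow the agreed format.
    for field in sorted(TIMESTAMP_FIELDS):
        value = record.get(field)
        if isinstance(value, str):
            try:
                datetime.fromisoformat(value)
            except ValueError:
                problems.append(f"bad timestamp in {field}")
    # Flag fields that are not part of the shared glossary.
    for field in record:
        if field not in SCHEMA:
            problems.append(f"unknown field: {field}")
    return problems


clean = {"customer_id": "c1", "created_at": "2025-01-06T09:00:00", "ticket_count": 3}
messy = {"customer_id": "c1", "created_at": "06/01/2025", "ticket_count": "3"}
print(validate_record(clean))
print(validate_record(messy))
```

Running a check like this on a sample of records before launch gives teams a concrete, shared definition of what “clean” means.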

As AI becomes more embedded in core business functions, the question shifts from “Can we use this model?” to “Who’s responsible when it misfires?”

This is where governance gaps start to show.

Without clear accountability, even well-trained AI systems can trigger downstream risks like unreviewed outputs, biased decisions, or unintended consequences that no one saw coming until it was too late.

Most teams assume that if a model works technically, it’s ready to go. But enterprise AI success depends just as much on oversight, transparency, and escalation paths as it does on accuracy.

When governance is missing:

This doesn’t just slow AI adoption. It creates a risk that scales with the system.

You’ll see warning signs like:

For example: A generative AI tool recommends compensation ranges based on prior hiring data. However, the data reflects legacy biases. Without governance in place, the tool reinforces inequities, and no one catches it until after HR pushes it live.

👀 Did You Know? There’s something called black box AI. It’s when an AI system makes decisions, but even the creators can’t fully explain how it got there. In other words, we see the output but not the thinking behind it. 🤖

This lack of visibility is exactly why AI governance is essential. Without clarity, even the smartest tools can lead to risky or biased decisions.

You don’t need a legal task force to get this right. But you do need a structure that ensures the right people review the right things at the right time.

Start here:

Governance isn’t about adding friction. It’s about enabling safe, confident AI adoption at scale without leaving the responsibility up for interpretation.

📖 Read More: How to Create a Company AI Policy?

AI adoption falls flat when insights don’t turn into action. That’s where most teams hit roadblocks, because the tech isn’t integrated into how the team already works.

ClickUp bridges that gap. It doesn’t just plug AI into your workflow. It reshapes the workflow so AI fits naturally, enhancing how tasks are captured, assigned, prioritized, and completed.

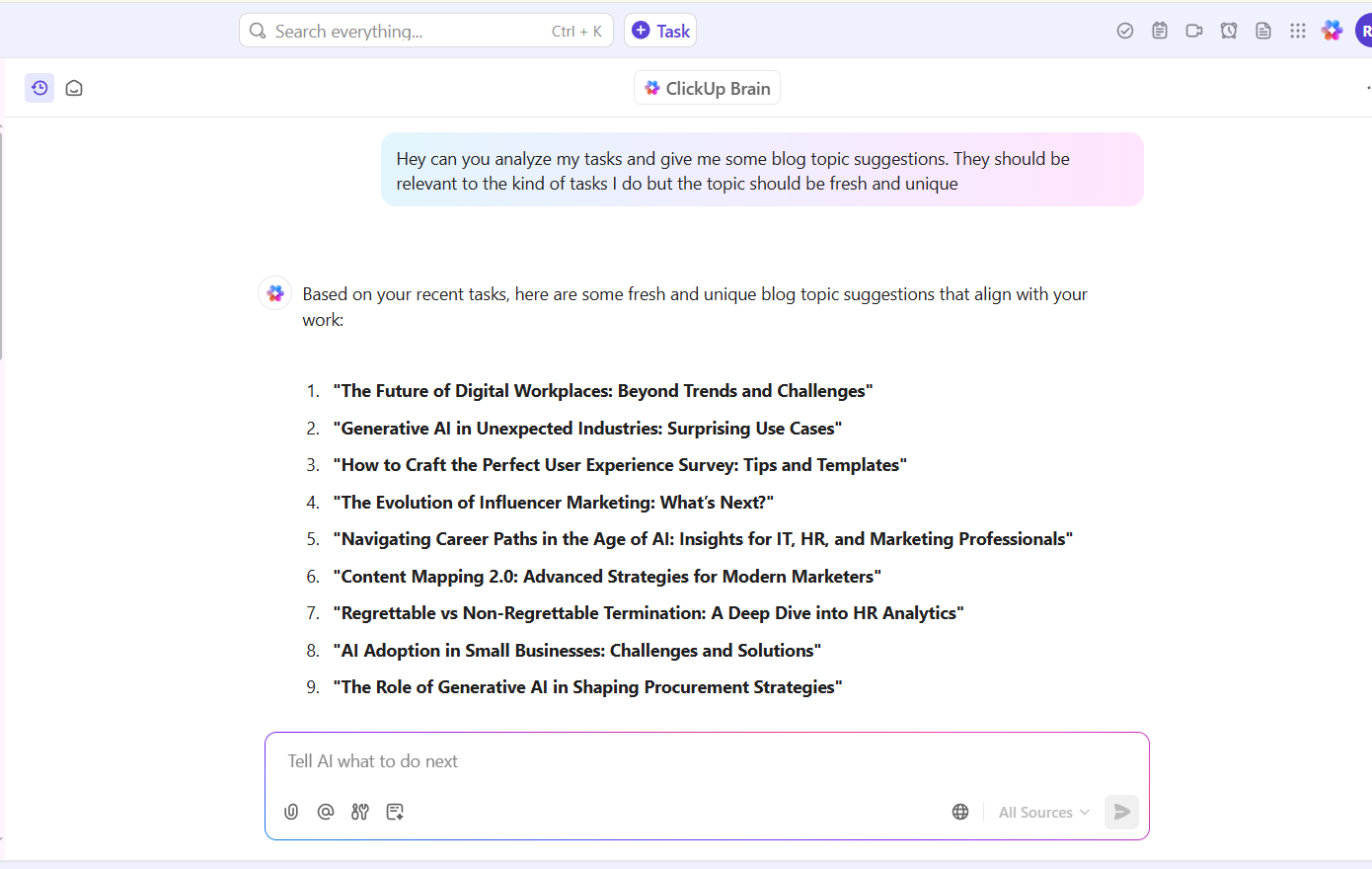

The early stages of AI adoption aren’t just about models or data. They’re about making sense of complexity fast. That’s where ClickUp Brain excels. It turns raw conversations, half-formed ideas, and loose documentation into structured, actionable work in seconds.

Instead of starting from scratch every time a new project kicks off, teams use ClickUp Brain to:

Let’s say your team holds a kickoff call to explore how generative AI could support customer success. ClickUp Brain can:

No more playing catch-up. No more losing ideas in chat threads. Just seamless conversion of thoughts into tracked, measurable execution.

And because it’s built into your workspace rather than bolted on, the experience is native, fast, and always in context.

Every AI-driven decision begins with a conversation. But when those conversations aren’t captured, teams end up guessing what to do next. That’s where the ClickUp AI Notetaker steps in.

It automatically records meetings, generates summaries, and highlights action items, then links them directly to relevant tasks or goals. No need to follow up manually or risk forgetting key decisions.

This gives teams:

A lot of AI recommendations get stuck in dashboards because no one acts on them. ClickUp Automation ensures that once a decision is made, the system knows how to move it forward, without someone needing to nudge it.

You can set up automations that:

This removes the overhead from routine coordination and lets your teams stay focused on value-added work.

AI automations might sound intimidating. But once you understand the basics, they can increase your productivity massively. Here’s a tutorial to help you out 👇

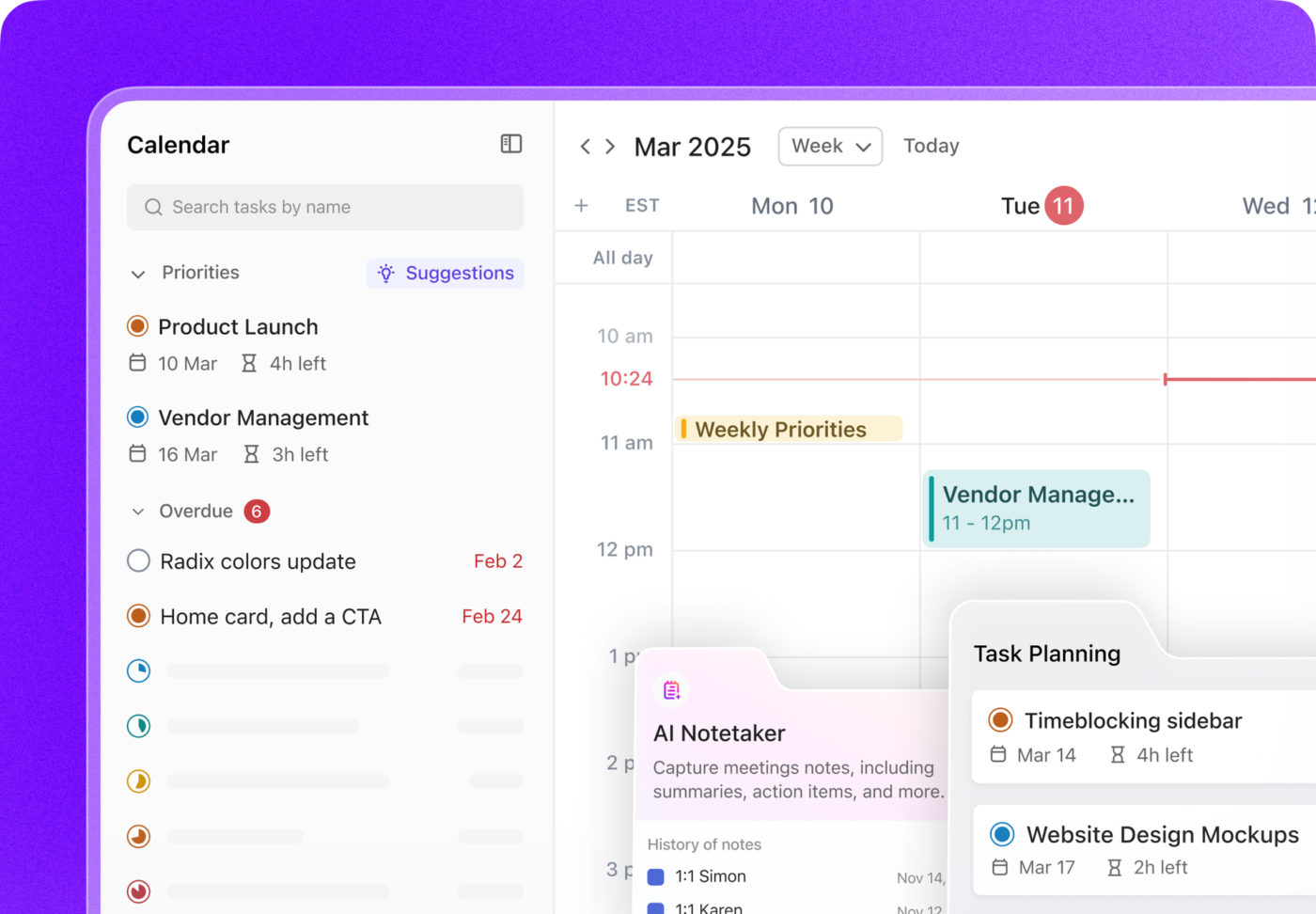

AI works best when teams can see the big picture and adjust quickly. That’s where ClickUp Calendars come in, giving you a real-time view of everything in motion.

From campaign launches to product milestones, you can plan, reschedule with drag and drop, and sync across platforms like Google Calendar, all from one place. When AI generates new tasks or shifts timelines, you’ll immediately see how that affects your roadmap.

With color-coded views, filters, and team-wide visibility, ClickUp Calendars help you:

AI insights often raise questions, and that’s a good thing. But switching between tools to clarify context creates drag.

ClickUp Chat brings those conversations right into the task view. Teams can react to AI-generated outputs, flag inconsistencies, or brainstorm follow-ups, all within the workspace.

The result? Less miscommunication, faster alignment, and zero need for extra meetings.

At the end of the day, AI is only valuable if it drives action. ClickUp Tasks give structure to that action. Whether it’s a flagged risk, a new insight, or a suggestion from ClickUp Brain, tasks can be broken down, assigned, and tracked with full visibility.

And when you find a flow that works? Use ClickUp Templates to replicate it. Whether you’re onboarding new AI tools, launching campaigns, or reviewing QA tickets, you can build repeatability into your adoption process.

⚡ Template Archive: Best AI Templates to Save Time and Improve Productivity

Successfully adopting artificial intelligence means more than using AI tools. It means transforming how your teams tackle complex problems, reduce repetitive tasks, and turn historical data into future-ready action.

Whether you’re launching AI projects, navigating AI deployment, or exploring Gen AI use cases, aligning workflows with the right tools unlocks AI’s potential. From smarter decisions to faster execution, AI technology becomes a multiplier when paired with the right systems.

ClickUp makes that possible by connecting data, tasks, and conversations into one intelligent workspace built for scale—powering real results across your artificial intelligence initiatives.

Ready to bridge the gap between AI ambition and execution? Try ClickUp today.

© 2026 ClickUp