LLM Search Engines: AI-Driven Information Retrieval

Search engines have always been essential for finding information, but user behavior has evolved. Instead of simple keyword searches like ‘smartphones,’ people now ask more specific, personalized queries like ‘best budget phones for gaming.’

Meanwhile, assistants built on large language models (LLMs), such as ChatGPT, are transforming search by acting as intelligent question-answering systems.

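As they integrate with traditional search engines, they enhance information retrieval through retrieval-augmented generation (RAG), in which a search step first fetches relevant documents and the model then grounds its answer in them, making results more precise and context-aware.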
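
The retrieve-then-generate flow behind RAG can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the three-document corpus, the word-overlap scorer, and the helper names `retrieve` and `build_prompt` are hypothetical stand-ins for a real search index, and the final call to an LLM is omitted because it depends on the model provider.

```python
import re

# Hypothetical in-memory corpus standing in for a real search index.
DOCUMENTS = {
    "doc1": "Mount Fuji is the highest mountain in Japan at 3,776 meters.",
    "doc2": "The Japanese Alps run through central Honshu.",
    "doc3": "Budget gaming phones balance price and GPU performance.",
}

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Retrieval step: return up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), doc_id, text) for doc_id, text in DOCUMENTS.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))  # best overlap first, ties by id
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]

def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Augmentation step: place retrieved passages, with ids for citation, before the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {query}"
    )

question = "highest mountain in Japan"
prompt = build_prompt(question, retrieve(question))
print(prompt)
```

In a real deployment, a search engine or vector index would replace the word-overlap scorer, and `prompt` would be sent to the model so its answer can cite `[doc1]`.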

Rather than competing, LLMs and search engines work together to handle complex queries more effectively. In this article, we’ll explore how this integration is shaping the future of search.

Without further ado, let’s jump right in!

Large language models (LLMs) are advanced artificial intelligence systems that process and generate human language. They are trained on extensive text datasets, equipping them to handle tasks like translation, summarization, and conversations.

Some of the most popular examples of LLMs include GPT-3 and GPT-4, which are widely recognized for their ability to handle complex language-related queries.

Unlike traditional search engines that depend on keywords, an LLM-based search engine goes beyond surface-level queries. It understands the context and intent behind questions, delivering direct and detailed answers.

👀 Did You Know? 71% of users prefer personalization from the brands and businesses they choose.

LLM search engines offer advanced capabilities that redefine how internet users access and interact with information. Let’s examine their key features:

👀 Did You Know? With the increasing use of smart speakers and voice assistants, some widely cited estimates put voice-based queries at 50% of all searches. As large language models are integrated into these systems, voice search will become even more accurate, providing faster access to information across multiple platforms, whether it’s files, tasks, or meeting notes.

As search technology advances, LLMs like GPT-4, BERT, and T5 are transforming how search engines process queries, personalize results, and refine rankings. Let’s explore how these models are redefining the future of search.

Search has evolved from simple keyword queries to semantic vector searches. Instead of searching for a specific term like ‘Mount Fuji,’ users can search for ‘mountains in Japan,’ and the system retrieves meaning-based results.
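
Here is a minimal sketch of meaning-based retrieval with cosine similarity. The four-dimensional vectors and their hand-labeled axes are hypothetical; a real system would obtain much larger vectors from an embedding model. Notice that the query vector for ‘mountains in Japan’ lands closest to ‘Mount Fuji’ even though the two texts share no words.

```python
import math

# Hypothetical 4-dimensional embeddings. Real embedding models produce hundreds
# of dimensions; here each axis is hand-labeled for readability:
# [mountains, Japan, food, technology]
DOC_VECTORS = {
    "Mount Fuji": [0.9, 0.8, 0.0, 0.0],
    "Swiss Alps hiking guide": [0.9, 0.0, 0.0, 0.1],
    "Sushi restaurants in Tokyo": [0.0, 0.9, 0.8, 0.0],
    "Best budget gaming phones": [0.0, 0.0, 0.0, 1.0],
}

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_search(query_vector: list[float], k: int = 3) -> list[str]:
    """Rank documents by closeness in meaning rather than by shared keywords."""
    ranked = sorted(
        DOC_VECTORS,
        key=lambda title: cosine(query_vector, DOC_VECTORS[title]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical embedding of the query "mountains in Japan".
query = [0.8, 0.7, 0.0, 0.0]
print(semantic_search(query))
```

The Alps guide comes second because it shares the ‘mountains’ direction, while the phone guide scores zero because its vector points somewhere else entirely.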

Rephrasing queries into questions, like “What are the famous mountains in Japan?”, can refine search accuracy. LLMs can also improve searches by triggering additional retrieval when their confidence is low, using techniques like FLARE (Forward-Looking Active REtrieval augmented generation).
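
FLARE’s core idea is that the model drafts its next sentence, and if any token in that draft has low probability, the system searches before committing to it. The sketch below shows only that decision step. The token probabilities, the 0.4 threshold, and the helper names are assumptions for illustration; in FLARE the probabilities come from the LLM itself, and the retrieved documents are then used to regenerate the sentence.

```python
# Minimal sketch of FLARE-style active retrieval. The probabilities below are
# hypothetical stand-ins for what an LLM would report while drafting a sentence.
Token = tuple[str, float]  # (token text, model probability)

def needs_retrieval(draft: list[Token], threshold: float = 0.4) -> bool:
    """Trigger a search if the model is unsure about any token in its draft."""
    return any(prob < threshold for _, prob in draft)

def masked_query(draft: list[Token], threshold: float = 0.4) -> str:
    """Build a search query from the draft, dropping low-confidence tokens
    so the search is not steered by words the model may have made up."""
    return " ".join(tok for tok, prob in draft if prob >= threshold)

draft = [("Mount", 0.95), ("Fuji", 0.93), ("last", 0.88), ("erupted", 0.81),
         ("in", 0.90), ("1707", 0.22)]

if needs_retrieval(draft):
    print("Low confidence, searching for:", masked_query(draft))
    # A real system would now retrieve documents and regenerate the sentence.
```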

Chain-of-thought reasoning further improves searches by breaking tasks into logical steps, as seen in AutoGPT. Additionally, conversational search lets LLM-powered assistants refine queries in real time, ensuring more precise results throughout an interaction.
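
Conversational search depends on carrying state from one turn to the next. In the minimal sketch below, a hypothetical `SearchSession` class substitutes plain string concatenation for the LLM rewriting step, so it only shows how refinements accumulate across turns; a real assistant would ask the model to rewrite each follow-up into a standalone query.

```python
from dataclasses import dataclass, field

@dataclass
class SearchSession:
    """Hypothetical conversational search session that accumulates refinements."""
    topic: str = ""
    refinements: list[str] = field(default_factory=list)

    def ask(self, utterance: str) -> str:
        """The first turn sets the topic; later turns narrow it down."""
        if not self.topic:
            self.topic = utterance
        else:
            self.refinements.append(utterance)
        return self.current_query()

    def current_query(self) -> str:
        """Combine the topic with every refinement gathered so far."""
        return " ".join([self.topic, *self.refinements])

session = SearchSession()
session.ask("budget phones")
session.ask("for gaming")
print(session.ask("under $300"))  # budget phones for gaming under $300
```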

Contextual awareness is one of LLMs’ most powerful features. Unlike traditional search engines, which rank results by keyword matches, LLMs consider user intent, location, search history, and past interactions.

By fine-tuning with domain-specific data, LLMs personalize search results to recognize patterns and prioritize relevant content. For instance, a user frequently searching for vegan recipes will see plant-based options when looking for ‘best dinner recipes.’
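
The vegan-recipe example can be illustrated with a simpler technique than fine-tuning: re-ranking results with a score derived from the user’s history. This is a swapped-in illustration, not how any particular engine works; the history, base relevance scores, tags, and the 0.3 blending weight are all hypothetical.

```python
from collections import Counter

# Hypothetical user history: tags of recipes the user has opened before.
history = ["vegan", "vegan", "quick", "vegan", "dessert"]

# Hypothetical results for "best dinner recipes": (title, base relevance, tags).
candidates = [
    ("Classic beef lasagna", 0.92, {"beef", "pasta"}),
    ("Vegan lentil curry", 0.85, {"vegan", "quick"}),
    ("Grilled salmon", 0.88, {"fish"}),
]

def affinity(tags: set[str], profile: Counter) -> float:
    """Share of the user's history that matches this item's tags (0.0 to 1.0)."""
    total = sum(profile.values())
    return sum(profile[t] for t in tags) / total if total else 0.0

def personalize(items: list[tuple[str, float, set[str]]],
                history: list[str], weight: float = 0.3) -> list[str]:
    """Blend base relevance with user affinity; `weight` sets personalization strength."""
    profile = Counter(history)
    scored = [((1 - weight) * rel + weight * affinity(tags, profile), title)
              for title, rel, tags in items]
    return [title for _, title in sorted(scored, reverse=True)]

print(personalize(candidates, history))
```

With `weight=0.0` the unpersonalized order (lasagna, salmon, curry) comes back, which makes the weight a convenient knob for how strongly personal history should override general relevance.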

LLMs also interpret multimodal queries, understanding both text and images for more accurate results. Additionally, they build longitudinal context, learning from ongoing interactions to suggest relevant queries proactively.

LLMs enhance search engines by dynamically re-ranking results to reflect user intent better. Unlike traditional keyword-based ranking, LLMs use attention mechanisms to analyze the full context of a query and prioritize relevant content.

For example, Google’s BERT (Bidirectional Encoder Representations from Transformers) update revolutionized search by understanding the context behind words like ‘apple’ (fruit) vs. ‘Apple’ (tech company).
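
Re-ranking is usually a second stage: a fast keyword or vector search returns a short candidate list, and a slower, context-aware model rescores it. In the sketch below, the `MODEL_SCORES` table is a hypothetical stand-in for such a model (for example, a transformer that reads the query and document together); the scores are invented to show how ‘apple’ the fruit can outrank ‘Apple’ the company for a nutrition question.

```python
from typing import Callable

# Hypothetical first-stage results for "is an apple a healthy snack";
# keyword matching alone cannot tell the fruit from the company.
first_stage = [
    "Apple unveils new MacBook lineup",
    "Apple stock hits record high",
    "Calories, fiber, and vitamins in apples",
    "Healthy snack ideas for work",
]

# Invented scores standing in for a context-aware relevance model.
MODEL_SCORES = {
    "Apple unveils new MacBook lineup": 0.05,
    "Apple stock hits record high": 0.03,
    "Calories, fiber, and vitamins in apples": 0.91,
    "Healthy snack ideas for work": 0.64,
}

Scorer = Callable[[str, str], float]

def rerank(query: str, candidates: list[str], scorer: Scorer, top_n: int = 3) -> list[str]:
    """Second stage: rescore each (query, document) pair and keep the top_n best."""
    return sorted(candidates, key=lambda doc: scorer(query, doc), reverse=True)[:top_n]

def lookup_scorer(query: str, doc: str) -> float:
    # A real scorer would run a model on (query, doc); this one ignores the
    # query and reads the invented table above.
    return MODEL_SCORES.get(doc, 0.0)

print(rerank("is an apple a healthy snack", first_stage, lookup_scorer))
```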

LLMs also contribute to improved SERP (Search Engine Results Page) efficiency. Ranking systems can combine LLM relevance scores with engagement signals like click-through rate (CTR), bounce rate, and dwell time, adjusting rankings in near real time to boost results that users actually find useful.
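
A minimal sketch of blending model relevance with engagement signals follows. The formula, weights, 120-second dwell cap, and sample numbers are assumptions; real search engines do not publish how they weight these signals. Here the page with slightly lower relevance but much stronger engagement ends up ranked first.

```python
from dataclasses import dataclass

@dataclass
class Result:
    url: str
    relevance: float      # e.g. from an LLM or re-ranker, 0.0 to 1.0
    ctr: float            # click-through rate, 0.0 to 1.0
    bounce_rate: float    # share of visits that leave immediately, 0.0 to 1.0
    dwell_seconds: float  # average time spent on the page

def engagement_score(r: Result, dwell_cap: float = 120.0) -> float:
    """Combine engagement signals into one 0-1 score (weights are illustrative guesses)."""
    dwell = min(r.dwell_seconds, dwell_cap) / dwell_cap
    return 0.4 * r.ctr + 0.4 * dwell + 0.2 * (1 - r.bounce_rate)

def final_score(r: Result, alpha: float = 0.7) -> float:
    """alpha weights model relevance; the remainder comes from user engagement."""
    return alpha * r.relevance + (1 - alpha) * engagement_score(r)

results = [
    Result("example.com/a", relevance=0.80, ctr=0.05, bounce_rate=0.90, dwell_seconds=8),
    Result("example.com/b", relevance=0.75, ctr=0.30, bounce_rate=0.20, dwell_seconds=150),
]
ranked = sorted(results, key=final_score, reverse=True)
print([r.url for r in ranked])
```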

📖 Also Read: How to Search PDF Files Quickly

As AI-driven search evolves, several large language model search engines are gaining traction for their advanced capabilities. Perplexity AI provides direct answers with cited sources, making searches more interactive and informative.

You.com offers a customizable experience, allowing users to prioritize sources, integrate AI-generated summaries, and interact with AI assistants.

We all know we can turn to these search engines to retrieve information and get quick answers. But what about locating that one crucial file at work? Or pulling up a conversation full of data points for your next big presentation?

This is where ClickUp, the everything app for work, comes into the picture!

📮 ClickUp Insight: 46% of knowledge workers rely on a mix of chat, notes, project management tools, and team documentation just to keep track of their work. For them, work is scattered across disconnected platforms, making it harder to stay organized. As the everything app for work, ClickUp unifies it all. With features like ClickUp Email Project Management, ClickUp Notes, ClickUp Chat, and ClickUp Brain, all your work is centralized in one place, searchable, and seamlessly connected. Say goodbye to tool overload—welcome effortless productivity.

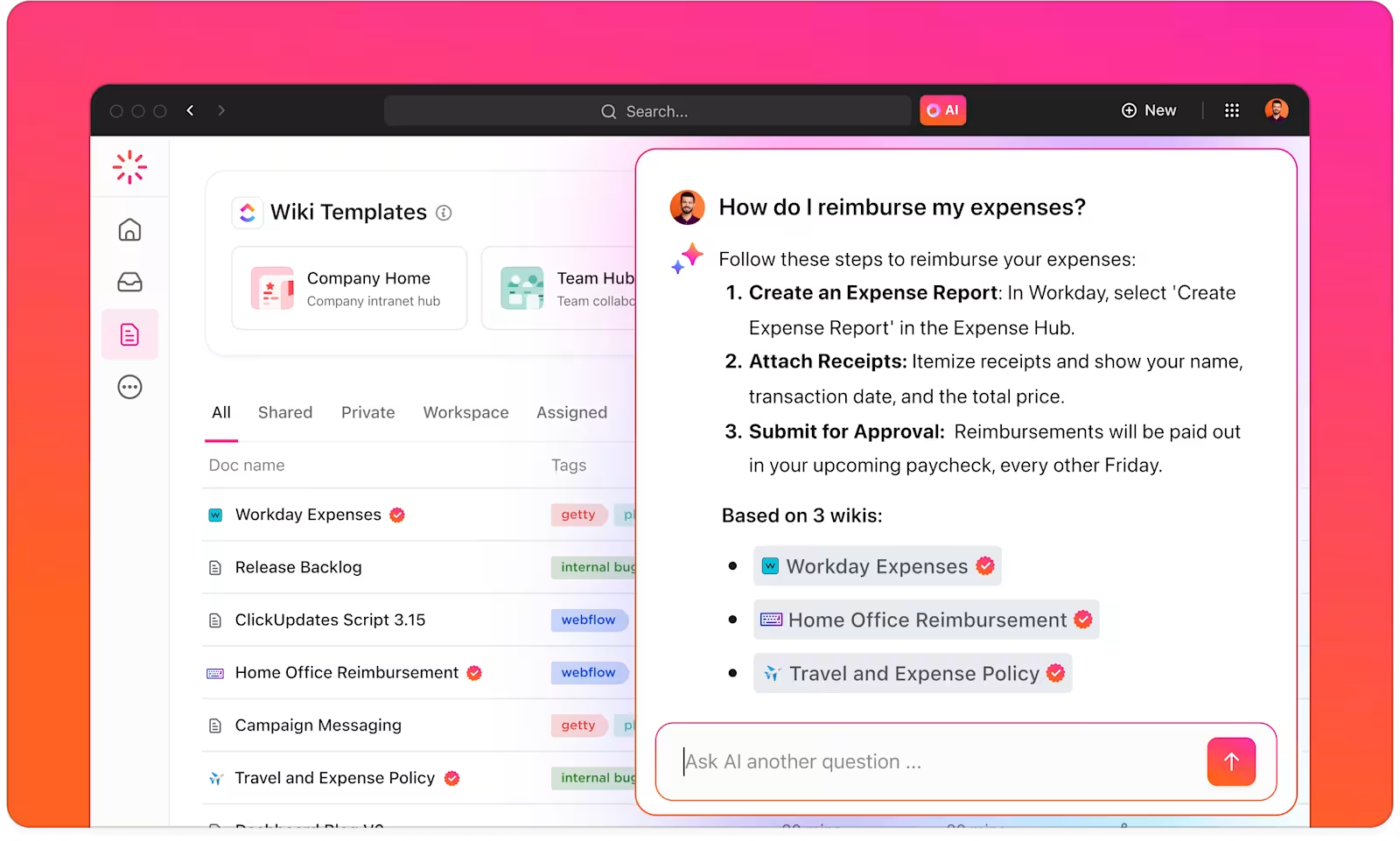

ClickUp Brain combines advanced search capabilities with comprehensive project management features, connecting tasks, files, team members, and projects all in one place. No more switching between apps or dealing with fragmented tools and information silos!

The costs of inefficiencies due to disconnected tools are staggering:

$420,000 annually: Organizations with 100 employees lose this amount each year due to miscommunication and disconnected tools

These inefficiencies lead to lost time, diminished morale, and increased operational costs. Fortunately, Connected AI turns these challenges into opportunities for smarter decision-making, faster information retrieval, and seamless execution.

Here’s how ClickUp’s Connected Search transforms collaboration:

For example, when returning from time off, simply ask ClickUp Brain for updates on your projects. It will provide neatly organized bullet points with critical action items and discussions that occurred while you were away.

With ClickUp Brain, you have an intelligent knowledge manager that helps you find everything within your workspace and connected apps.

✨ Bonus: ClickUp Brain users can choose from multiple external AI models, including GPT-4o, o3-mini, o1, and Claude 3.7 Sonnet for various search, writing, reasoning, and coding tasks!

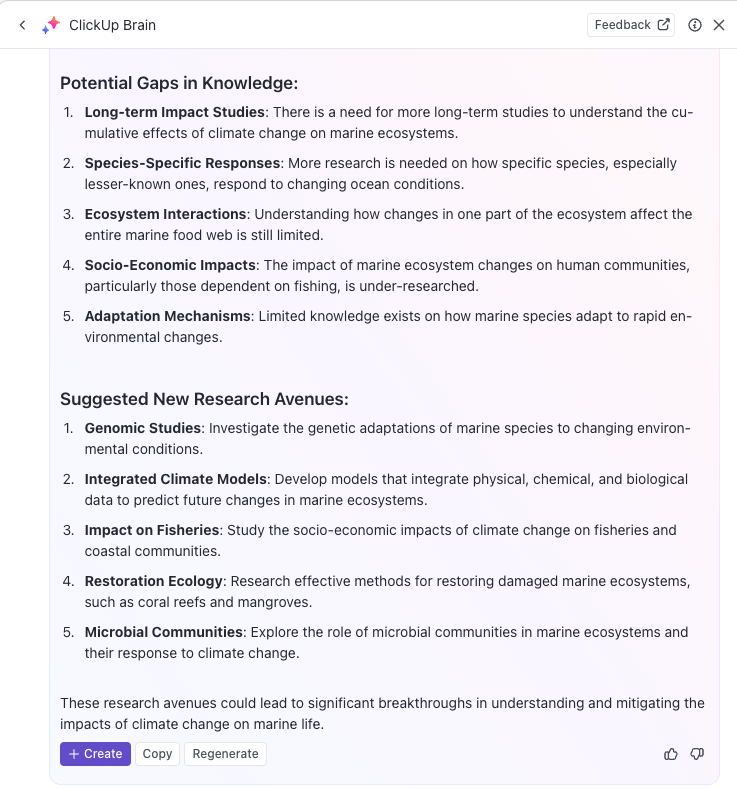

ClickUp Brain understands the context of your tasks and offers relevant suggestions based on your work preferences or primary activities. For content creators, for instance, it provides suggestions for content creation, brainstorming, and related tasks.

You can even use AI to automate repetitive tasks or update task statuses with simple prompts, allowing you to focus on deep work. If you’re looking for a powerful AI search engine to boost productivity, ClickUp Brain has you covered.

With ClickUp Brain, you can optimize your knowledge base by automatically categorizing, tagging, and organizing all relevant information.

For example, research teams can use ClickUp to create a centralized knowledge management system to store all insights, documents, and research findings in an easily accessible format.

Further, the versatility of ClickUp Docs supports the creation of wikis, document repositories, and knowledge-related task management.

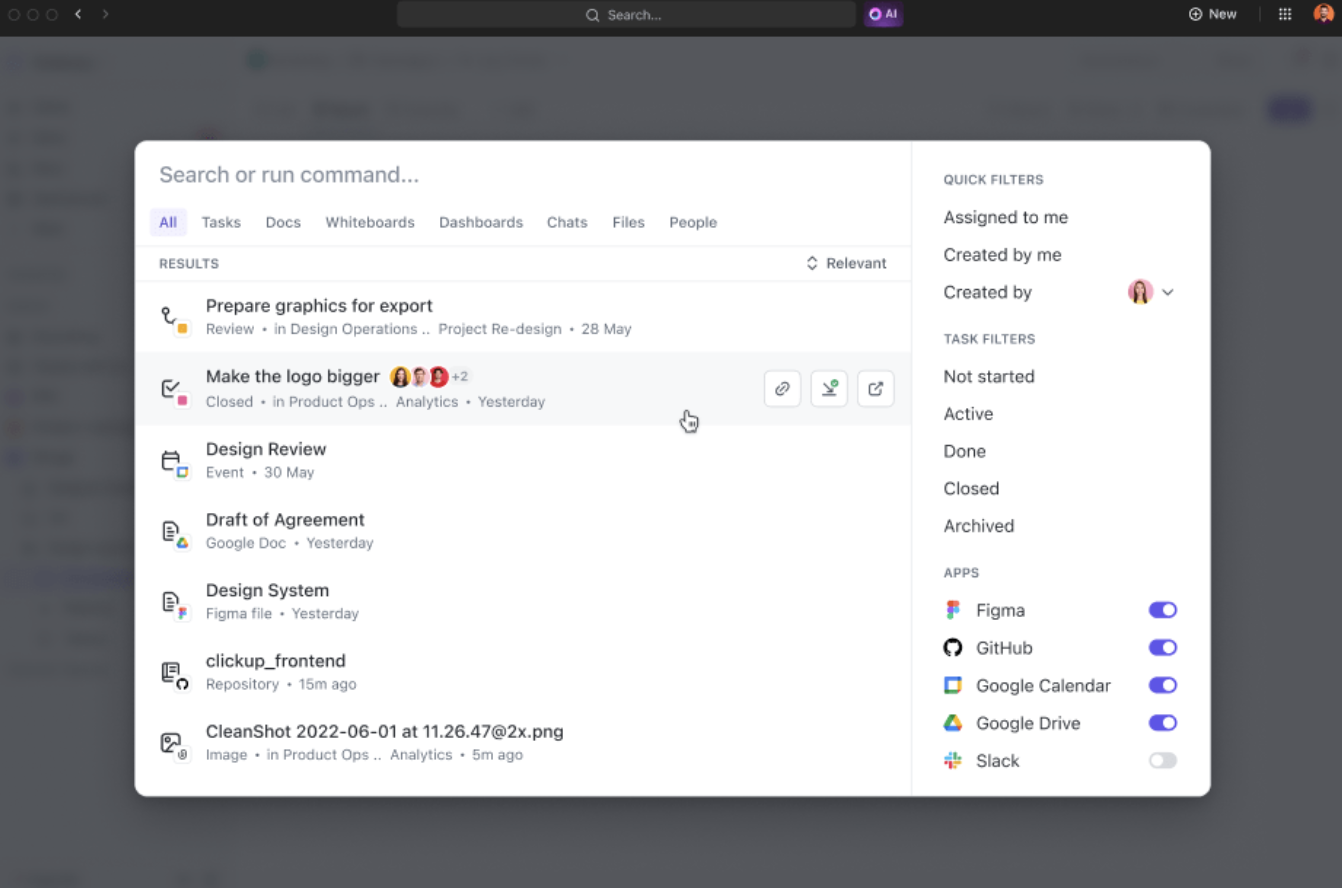

You can also organize information in various ways, using ClickUp Spaces, folders, and lists to structure content for quick retrieval. Your team can easily find and access the right data when needed without wasting time searching through multiple platforms.

In addition, ClickUp Tasks helps you track tasks and projects based on insights gained from LLM search engines. You can integrate AI-powered search results directly into your task and project-tracking workflows, making it easier to act on the knowledge you’ve discovered.

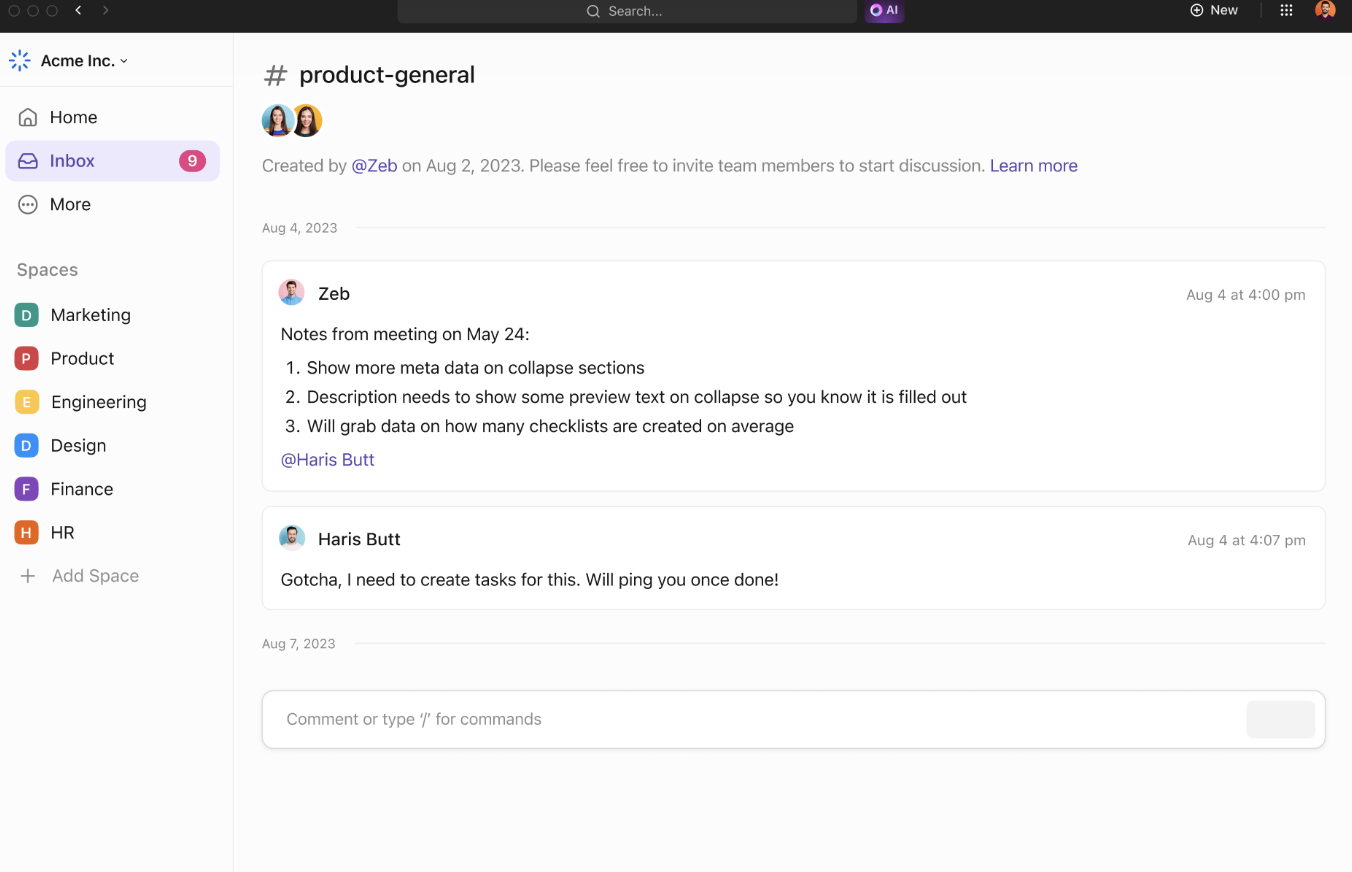

ClickUp’s collaboration tools further support team efficiency and information sharing. ClickUp Chat allows team members to discuss projects, share insights, and ask questions in real time.

Assigned Comments provide a clear way to communicate on specific documents or tasks, ensuring everyone stays informed on project updates.

Teams can collaborate by sharing ideas and visualizing concepts. Add notes, upload images, and embed links for better context. Use connectors to link ideas and highlight their relationships.

When your ideas are ready, convert them into trackable tasks from ClickUp Whiteboards to keep everything on schedule.

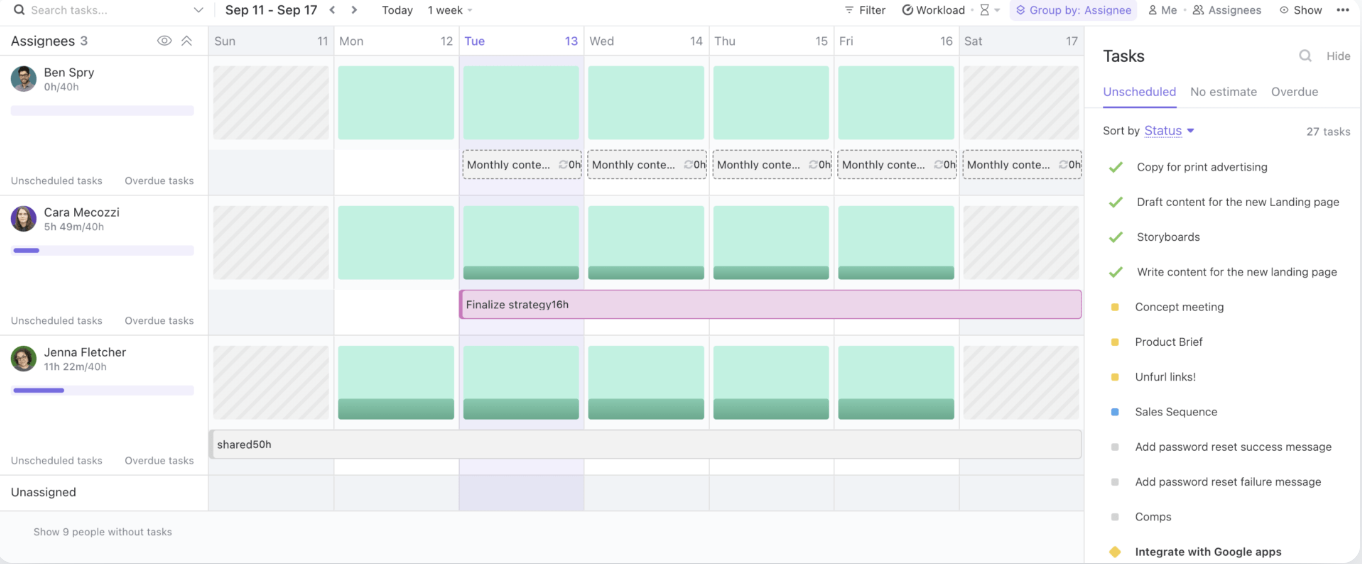

Finally, the ClickUp Workload View allows you to manage team capacity, track ongoing tasks, and allocate resources effectively.

By providing a centralized hub for knowledge sharing and collaboration, ClickUp streamlines workflows and improves team efficiency.

📖 Also Read: Best Document Management Software to Get Organized

When using LLM search engines, following best practices that maximize their potential while managing risks is essential.

These strategies will help you get the most out of your AI-powered tools, ensuring smooth implementation and long-term success:

LLM-driven search engines offer tremendous potential, but they also come with their own set of challenges. Below are some common AI challenges and practical solutions to overcome them:

LLM-driven search engines rely heavily on the quality and relevance of the data they process. Poor or outdated data can lead to inaccurate or irrelevant search results, impacting the user experience.

✨ By focusing on high-quality, up-to-date data, organizations can ensure that their LLM-powered search engine returns relevant and reliable results.
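
One simple way to act on this is to filter the index before it ever reaches the model. The sketch below assumes each document carries a last-updated date and drops anything older than a hypothetical 365-day limit or with no real content; the documents and the fixed ‘today’ date are invented so the result is reproducible.

```python
from datetime import date

# Hypothetical indexed documents with a last-updated date.
docs = [
    {"id": "pricing-2021", "text": "Pricing starts at $10/user.", "updated": date(2021, 3, 1)},
    {"id": "pricing-2025", "text": "Pricing starts at $12/user.", "updated": date(2025, 1, 15)},
    {"id": "empty", "text": "   ", "updated": date(2025, 2, 1)},
]

def is_usable(doc: dict, today: date, max_age_days: int = 365) -> bool:
    """Keep documents that have real content and were updated recently enough."""
    fresh = (today - doc["updated"]).days <= max_age_days
    return fresh and bool(doc["text"].strip())

today = date(2025, 6, 1)  # fixed so the example is deterministic
index = [d["id"] for d in docs if is_usable(d, today)]
print(index)  # ['pricing-2025']
```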

LLMs are often considered ‘black boxes’ because the reasoning behind their output isn’t immediately apparent to users. This lack of transparency can make it difficult for users to trust the results they receive.

✨ By incorporating explainability features into the search engine, organizations can give users insights into why specific results were returned.
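
Full explanations of an LLM’s reasoning remain an open problem, but the retrieval side can at least show which parts of a query drove each result. This sketch uses word overlap as a hypothetical stand-in for a real relevance model and returns the matched and missing terms alongside the score.

```python
import re

def terms(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def explain_match(query: str, doc: str) -> dict:
    """Score a document by query-term coverage and report why it matched."""
    q_terms, d_terms = terms(query), terms(doc)
    matched = sorted(q_terms & d_terms)
    return {
        "score": len(matched) / len(q_terms) if q_terms else 0.0,
        "matched_terms": matched,
        "missing_terms": sorted(q_terms - d_terms),
    }

explanation = explain_match("customer churn analysis", "Q3 customer churn dashboard")
print(explanation)
```

Showing ‘missing: analysis’ next to a dashboard result tells the user why it ranked below a true analysis document, which is the kind of insight the paragraph above describes.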

LLMs can inherit biases from the data they are trained on, which may lead to skewed or unethical results. If the training data is not diverse or representative, the search engine might reflect those biases, impacting decision-making and fairness.

✨ Auditing and updating the training data regularly is essential to identifying and mitigating these biases. Additionally, incorporating diverse datasets and monitoring outputs ensures the search engine produces more balanced, fair, and ethical results.
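
Monitoring outputs can start with something as basic as counting who gets exposure in the results. The sketch below computes each group’s share of the top results and flags any group above a threshold for human review; the grouping by region, the audit data, and the 0.6 cutoff are hypothetical choices, and a skewed share is a signal to investigate rather than proof of bias.

```python
from collections import Counter

def exposure_shares(result_groups: list[str]) -> dict[str, float]:
    """Fraction of returned results coming from each group (e.g. source region)."""
    counts = Counter(result_groups)
    total = len(result_groups)
    return {group: n / total for group, n in counts.items()}

def flag_skew(result_groups: list[str], max_share: float = 0.6) -> list[str]:
    """Return groups whose share of results exceeds max_share, for human review."""
    shares = exposure_shares(result_groups)
    return sorted(group for group, share in shares.items() if share > max_share)

# Hypothetical audit log: the source region of each top-10 result for one query.
top10 = ["US"] * 8 + ["EU", "APAC"]
print(flag_skew(top10))  # ['US']
```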

Integrating LLM-driven search engines with existing workflows and applications can be daunting, especially when dealing with legacy systems or multiple data sources. The complexity of connecting these new tools with established platforms can slow down implementation.

✨ With ClickUp’s Connected Search, you can quickly find any file, whether it’s stored in ClickUp, a connected app, or your local drive.

Hallucinations are instances where the model generates factually incorrect, fabricated, or irrelevant information. They happen because the model produces text from patterns learned during training rather than pulling directly from indexed sources, so it can state plausible-sounding details that no source supports.

✨ Techniques like prompt engineering, along with high-quality training data, enhance LLM reliability. Fine-tuning with domain-specific data reduces hallucinations, while knowledge graph integration helps keep search results grounded in verified facts.
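
Knowledge graph checks typically compare individual claims against stored facts. This sketch assumes the claims have already been extracted from the LLM’s answer as (subject, relation, value) triples, a nontrivial step it skips; the tiny graph, relation names, and claims are hypothetical. Claims the graph cannot confirm are marked ‘unverified’ rather than trusted.

```python
# Hypothetical knowledge graph stored as (subject, relation) -> value facts.
KNOWLEDGE_GRAPH = {
    ("Mount Fuji", "located_in"): "Japan",
    ("Mount Fuji", "height_m"): "3776",
}

def check_claim(subject: str, relation: str, value: str) -> str:
    """Compare one claim extracted from an LLM answer against the knowledge graph."""
    known = KNOWLEDGE_GRAPH.get((subject, relation))
    if known is None:
        return "unverified"  # no fact on record: flag it rather than trust it
    return "supported" if known == value else "contradicted"

# Hypothetical claims, as if extracted from a generated answer.
claims = [
    ("Mount Fuji", "located_in", "Japan"),
    ("Mount Fuji", "height_m", "4200"),
    ("Mount Fuji", "first_climbed", "663"),
]
for claim in claims:
    print(claim, "->", check_claim(*claim))
```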

The next generation of LLM-driven search promises even greater precision, adaptability, and responsiveness, particularly in handling complex and dynamic user queries. These systems are expected to adapt to evolving user needs, learning from prior interactions and real-time data.

For example, in an enterprise setting, an LLM could interpret a request like, ‘Find last quarter’s customer churn analysis’ and return not just the raw file but synthesized insights, relevant trends, and actionable takeaways.
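
Before any synthesis, a request like ‘last quarter’s customer churn analysis’ has to become a concrete filter. The sketch below resolves ‘last quarter’ to a date range, assuming calendar quarters (a company’s fiscal quarters may differ) and a hypothetical query dictionary format.

```python
from datetime import date, timedelta

def last_quarter(today: date) -> tuple[date, date]:
    """Return the first and last day of the calendar quarter before `today`."""
    current_q_start = date(today.year, 3 * ((today.month - 1) // 3) + 1, 1)
    prev_q_end = current_q_start - timedelta(days=1)  # day before this quarter began
    prev_q_start = date(prev_q_end.year, 3 * ((prev_q_end.month - 1) // 3) + 1, 1)
    return prev_q_start, prev_q_end

# Hypothetical structured query an LLM front end might hand to the search index.
start, end = last_quarter(date(2025, 2, 10))
query = {"keywords": "customer churn analysis", "modified_from": start, "modified_to": end}
print(query)
```

Because the quarter boundary is computed from the day before the current quarter starts, the year rolls back correctly in January through March.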

Industries dependent on managing vast and intricate data sets stand to gain the most:

Integrating multimodal search capabilities—combining text, voice, and image recognition—will further expand the utility of LLMs.

For example, a team collaborating on a product launch could instantly upload images, annotate with voice inputs, and retrieve related documents and reports. This level of adaptability makes LLMs crucial in ensuring seamless access to diverse data formats.

Platforms like ClickUp, combined with LLM-powered search, offer a robust solution for organizing and accessing files, optimizing workflows, and driving decision-making efficiency.

As AI-driven search engines powered by LLMs continue to evolve, they are revolutionizing how businesses retrieve information from web pages and manage data.

With their ability to understand context, deliver more accurate results, and integrate seamlessly with enterprise workflows, LLMs are paving the way for smarter, faster, and more efficient operations.

And when it comes to an AI search engine seamlessly integrated with your workflow, nothing beats ClickUp Brain. Whether you need to quickly locate a file or task, brainstorm ideas, or even draft an email, ClickUp Brain’s powerful AI capabilities can handle it all.

Because it integrates with ClickUp’s comprehensive project management tools, ClickUp Brain keeps everything within reach, helping you stay organized, save time, and make data-driven decisions faster.

So what are you waiting for? Sign up for ClickUp today and get more done with ClickUp Brain!

© 2026 ClickUp