How Developers Can Streamline Code Reviews Across Teams

Code reviews: the only place where ‘LGTM’ can mean ‘looks good to me’… or ‘please merge this before I rethink everything.’

When code reviews work, bugs get squashed before they frustrate your users, teams stay aligned, and knowledge spreads well before a production outage demands it.

And when they don’t work? Your pull request sits there for days. Reviewers sometimes vanish completely or leave cryptic comments like ‘hmm’ and disappear again. One team demands detailed explanations for every semicolon, another team approves anything that compiles, and nobody can agree on basic standards.

In this blog post, we’ll look at how developers can streamline code reviews across teams, escape this mess, and ship things people can use.

We’ll also explore how ClickUp fits into this workflow. 📝

Standardized reviews catch issues consistently, regardless of who’s doing the reviewing. A shared code review checklist systematically catches security vulnerabilities, performance issues, and breaking changes.

Here are some benefits that compound over time. 📈

🔍 Did You Know? The term ‘pull request’ only became popular after GitHub launched in 2008. GitHub later introduced pull request templates to help developers describe their changes in a relevant, consistent way. Before that, developers used email threads or patch files to propose and discuss changes.

Cross-team code reviews fail when organizational boundaries create confusion about responsibility, interrupt focused work, or introduce conflicting expectations.

Here’s what typically breaks down:

🚀 ClickUp Advantage: ClickUp Brain gives the development team the missing context that slows most code reviews. Since the AI-powered assistant understands your workspace, it can explain why a certain function exists or what a piece of logic is meant to handle.

Suppose someone flags a line in your checkout flow. The AI for software teams can tell them it’s part of an edge-case fix from a previous sprint, pulling relevant documents and tasks from the workspace for added context. That way, reviewers spend less time guessing intent.

📌 Try this prompt: Explain the purpose of the retry logic in the checkout API and tell me if it’s linked to a past bug or feature update.

Code reviews often slow down developer productivity when multiple teams are involved. Here’s how developers can streamline reviews across teams and keep productivity on track. 👇

Stop writing ‘Fixed bug in payment flow’ and start explaining what was broken and why your fix works. You also want to:

When a reviewer can understand your change in two minutes instead of 20, you get faster, better feedback.

💡 Pro Tip: When suggesting changes, explain why a change matters. This creates a knowledge trail that reduces repeated questions and helps future reviewers.

Add a CODEOWNERS file that auto-tags the right people.

Put a table in your README: ‘Auth code changes → @security-team required, @backend-team optional.’ When someone opens a PR touching five teams’ code, they know exactly who to wait for and who’s just there for knowledge sharing.
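To make the ‘required vs. optional’ idea concrete, here is a minimal Python sketch of that routing table. It is not how CODEOWNERS itself is implemented; the path patterns, team handles, and the `reviewers_for` helper are all hypothetical, chosen only to mirror the README example above.

```python
from fnmatch import fnmatch

# Hypothetical ownership table: (path pattern, required teams, optional teams).
# Patterns and team handles are illustrative assumptions, not a real config.
OWNERSHIP = [
    ("services/auth/*", ["@security-team"], ["@backend-team"]),
    ("services/payments/*", ["@payments-team"], []),
    ("docs/*", [], ["@docs-team"]),
]


def reviewers_for(changed_files):
    """Return (required, optional) reviewer sets for a list of changed paths."""
    required, optional = set(), set()
    for path in changed_files:
        for pattern, req, opt in OWNERSHIP:
            if fnmatch(path, pattern):
                required.update(req)
                optional.update(opt)
    # A team that is required for any file should not also be listed as optional.
    return required, optional - required


required, optional = reviewers_for(["services/auth/login.py", "docs/auth.md"])
print("Wait for:", sorted(required))
print("FYI only:", sorted(optional))
```

A script like this could post a comment on a new PR, so authors see at a glance who blocks the merge and who is only there for knowledge sharing.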

Deadlines don’t pause just because someone’s busy, so it helps when the whole team treats review responsiveness as part of the normal workflow.

Haven’t heard back in 24 hours? Ping them. If it’s been over 48 hours, escalate it to their lead or find another reviewer. And if a reviewer leaves ten philosophical comments, you can ask them to ‘jump on a quick call in 10 minutes’ and hash it out live.
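If you want a bot or a scheduled job to apply those thresholds for you, the rule is small enough to sketch in a few lines of Python. The function name and the three return values are assumptions for illustration; only the 24-hour and 48-hour cutoffs come from the guidance above.

```python
from datetime import datetime, timedelta, timezone


def follow_up_action(requested_at, now=None):
    """Suggest a follow-up for a pending review request (24h ping, 48h escalate)."""
    now = now or datetime.now(timezone.utc)
    waited = now - requested_at
    if waited > timedelta(hours=48):
        return "escalate"  # go to the reviewer's lead or find another reviewer
    if waited >= timedelta(hours=24):
        return "ping"  # nudge the reviewer directly
    return "wait"


requested = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
print(follow_up_action(requested, now=requested + timedelta(hours=30)))
```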

💡 Pro Tip: Pre-label PRs by risk and scope. Tag each PR as low-risk, medium-risk, or high-risk, and note whether it affects multiple teams. This way, reviewers immediately know where to focus their attention, peer reviews move faster, and high-risk changes receive extra scrutiny.
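Labeling can be partly automated. The Python sketch below is one possible heuristic, not a standard: the sensitive path prefixes, the line-count cutoffs, and the idea that each top-level directory belongs to a different team are all assumptions you would tune for your own repository.

```python
# Illustrative assumptions: which path prefixes count as sensitive, and the
# line-count cutoffs for each label. Adjust both for your codebase.
SENSITIVE_PREFIXES = ("auth/", "payments/", "migrations/")


def risk_label(changed_files, lines_changed):
    """Return 'low-risk', 'medium-risk', or 'high-risk' for a PR."""
    touches_sensitive = any(f.startswith(SENSITIVE_PREFIXES) for f in changed_files)
    if touches_sensitive or lines_changed > 400:
        return "high-risk"
    if lines_changed > 100:
        return "medium-risk"
    return "low-risk"


def is_cross_team(changed_files):
    """True when the change touches more than one top-level directory."""
    top_level = {f.split("/", 1)[0] for f in changed_files}
    return len(top_level) > 1


files = ["auth/session.py", "web/login_form.tsx"]
print(risk_label(files, lines_changed=60), is_cross_team(files))
```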

When you make a non-obvious choice, like using Redis over Postgres for caching, write it down in an Architecture Decision Record (ADR) or team wiki. Then link to it in your PR.

With this in place, external reviewers stop questioning decisions that were already debated and decided. Plus, new team members avoid making the same mistakes.

When someone nails a cross-team PR (great description, well-structured code, handled all the edge cases), bookmark it. Share it with new people and reference it when reviewing.

‘Here’s how we usually handle service-to-service auth’ with a link beats explaining it from scratch every time. Build a library of good examples your org can learn from.

These are the top tools for improving code reviews across teams. 🧑‍💻

ClickUp’s Software Project Management Solution is the everything app for work that combines project management, knowledge management, and chat—all powered by AI that helps you work faster and smarter.

For dev teams managing multiple pull requests, review cycles, and documentation updates, it brings structure and accountability to every stage of the code review process.

Here’s how it keeps reviews moving and communication clear across teams. 💻

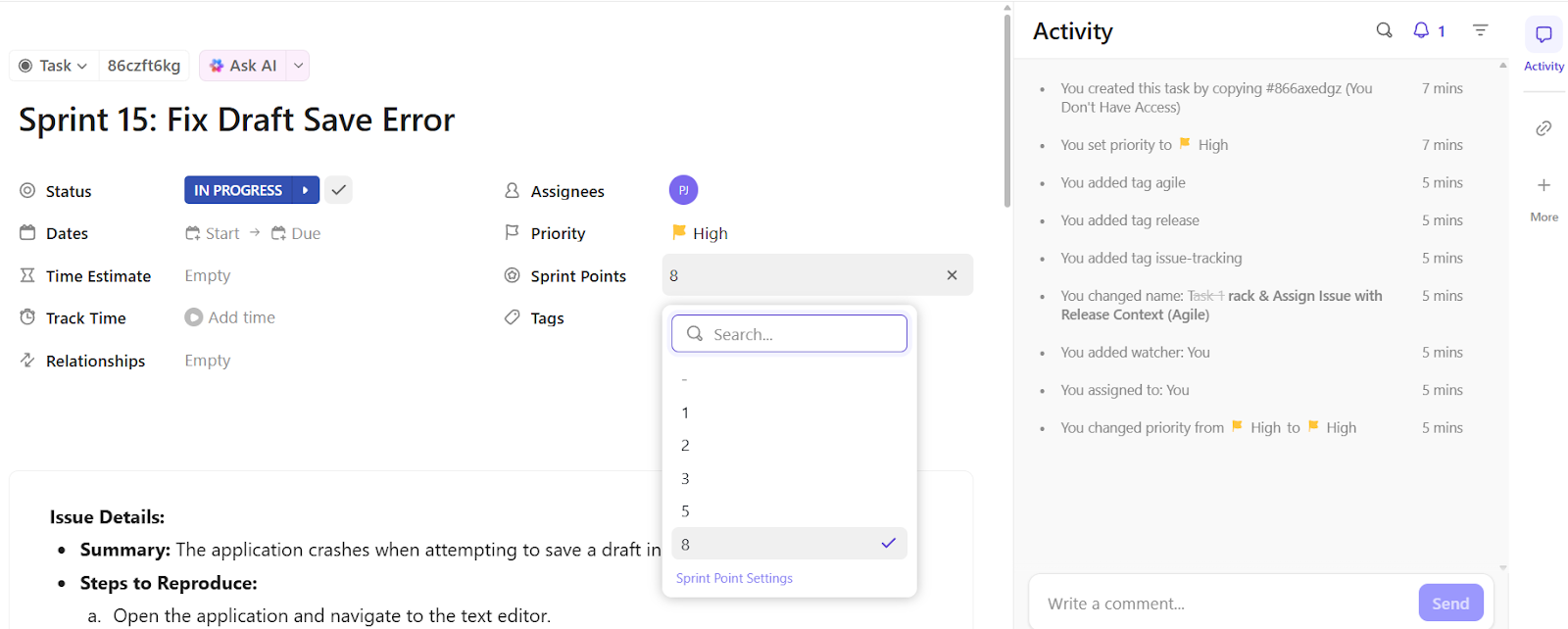

ClickUp Tasks give every pull request a proper home. Each task captures the review’s context, reviewer assignments, and progress in one place, so no PR gets lost or left waiting. Teams can then filter review tasks by sprint, repository, or status to quickly see what’s pending.

Suppose your backend developer pushes a PR for API response optimization. They create a task called ‘Optimize API Caching for Product Endpoints’ and link the PR. The task includes test results, reviewer tags, and a short checklist of areas to focus on. Reviewers drop their notes directly in the task, update the status to ‘Changes Requested’, and reassign it to the DevOps team.

ClickUp Automations eliminate tedious manual steps that often delay reviews. They handle recurring actions, such as reviewer assignment, task progression, and team notifications, so engineers can focus on providing actual feedback.

You can build automation rules such as:

ClickUp Brain, an AI tool for developers, makes review follow-ups effortless. It instantly summarizes reviewer feedback, identifies blockers, and turns it all into actionable tasks with owners and deadlines.

Say you have a 300-comment PR thread full of ‘nit,’ ‘fix later,’ and ‘needs testing’ remarks. With one prompt, ClickUp Brain pulls out the key issues, creates subtasks like ‘Update API error handling’ or ‘Add unit tests for pagination,’ and assigns them to the right devs.

✅ Try these prompts:

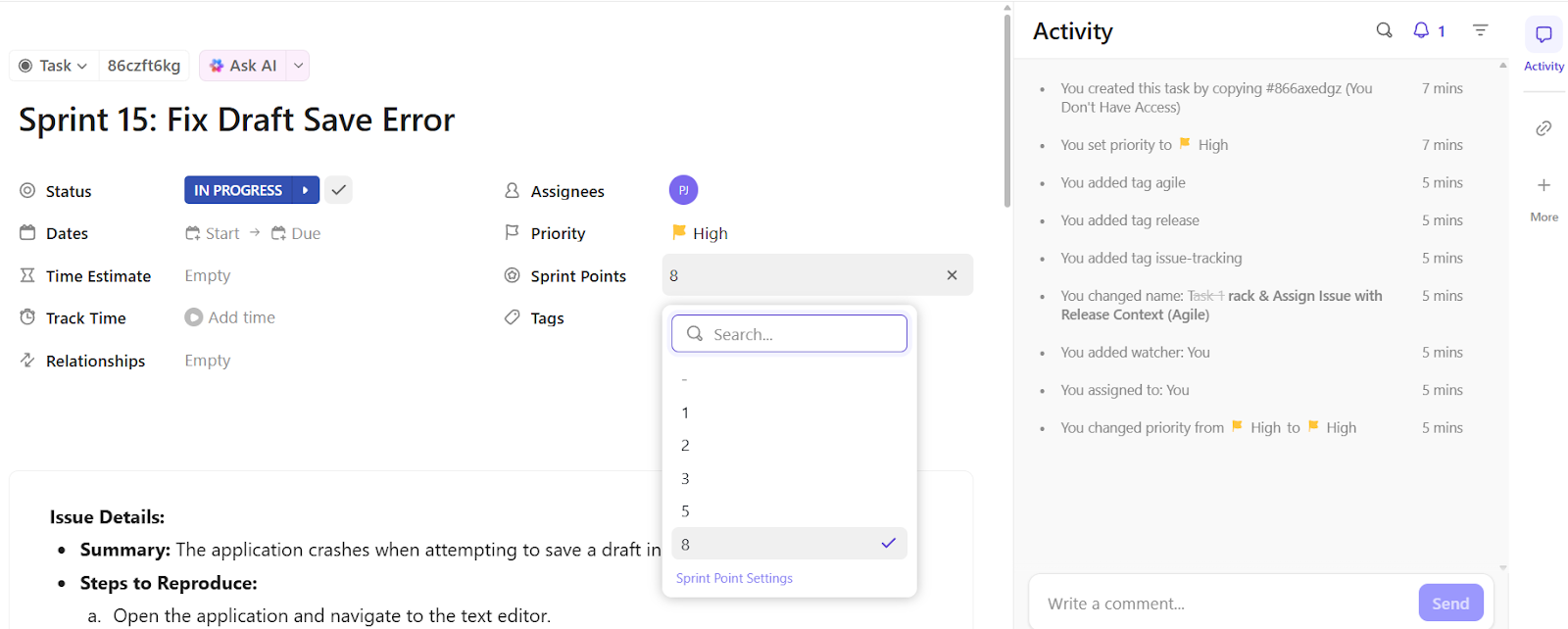

Review discussions often uncover future improvements, such as small refactors, performance tweaks, or testing needs. ClickUp AI Agents handle those automatically, turning review insights into trackable tasks without manual input.

You can use AI Agents to:

For example, suppose multiple PRs highlight missing unit tests in the same module. An AI agent can create a new task called ‘Add Unit Tests for UserService.js’, assign it to QA, and link all related PRs.

📮 ClickUp Insight: When systems fail, employees get creative—but that’s not always a good thing. 17% of employees rely on personal workarounds like saving emails, taking screenshots, or painstakingly taking their own notes to track work. Meanwhile, 20% of employees can’t find what they need and resort to asking colleagues—interrupting focus time for both parties. With ClickUp, you can turn emails into trackable tasks, link chats to tasks, get answers from AI, and more within a single workspace!

💫 Real Results: Teams like QubicaAMF reclaimed 5+ hours weekly using ClickUp—that’s over 250 hours annually per person—by eliminating outdated knowledge management processes. Imagine what your team could create with an extra week of productivity every quarter!

Gerrit operates on a patch-based review system that treats each commit as a distinct change requiring approval before merging. Reviewers assign labels like Code-Review +2 or Verified +1, creating a voting system that determines merge readiness. This approach prevents the ‘approve and forget’ problem common in other tools.
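As a rough mental model of how those votes gate a merge, here is a simplified Python sketch. It is not Gerrit’s code or API, and real projects can configure their own labels and submit requirements; the sketch assumes a common setup in which a change needs at least one Code-Review +2 and one Verified +1, and any Code-Review -2 or Verified -1 blocks it.

```python
def is_submittable(votes):
    """votes maps a label name to the list of integer votes cast on it.

    Simplified assumption: the top score must be present on each label,
    and the lowest score on either label acts as a veto.
    """
    code_review = votes.get("Code-Review", [])
    verified = votes.get("Verified", [])
    approved = 2 in code_review and -2 not in code_review
    builds_ok = 1 in verified and -1 not in verified
    return approved and builds_ok


print(is_submittable({"Code-Review": [1, 2], "Verified": [1]}))
print(is_submittable({"Code-Review": [2, -2], "Verified": [1]}))
```

Note that two +1 votes do not add up to a +2 in this model: approval has to come from someone entitled to give the top score.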

🔍 Did You Know? Many open-source projects, like Linux, still rely heavily on patch-based review workflows reminiscent of the 1970s.

Pull requests form the backbone of GitHub’s review workflow, creating conversation threads around proposed changes. Developers can suggest specific line edits that authors apply directly from the interface, eliminating the back-and-forth of ‘try this instead’ comments. Plus, thread resolution tracking ensures no feedback gets lost in lengthy discussions.

🧠 Fun Fact: The concept of code review dates back to the 1950s, when programmers working on early mainframes like the IBM 704 would manually inspect each other’s punch cards for errors before running jobs.

SonarQube runs automated reviews through static analysis, applying over 6,500 rules across 35+ languages to catch bugs and security vulnerabilities. The tool plugs into your CI/CD pipeline and acts as a gatekeeper before code reaches human reviewers.

🤝 Friendly Reminder: Encourage reviewers to spend 30-60 minutes per session. Longer sessions reduce focus and increase the likelihood of overlooking subtle bugs.

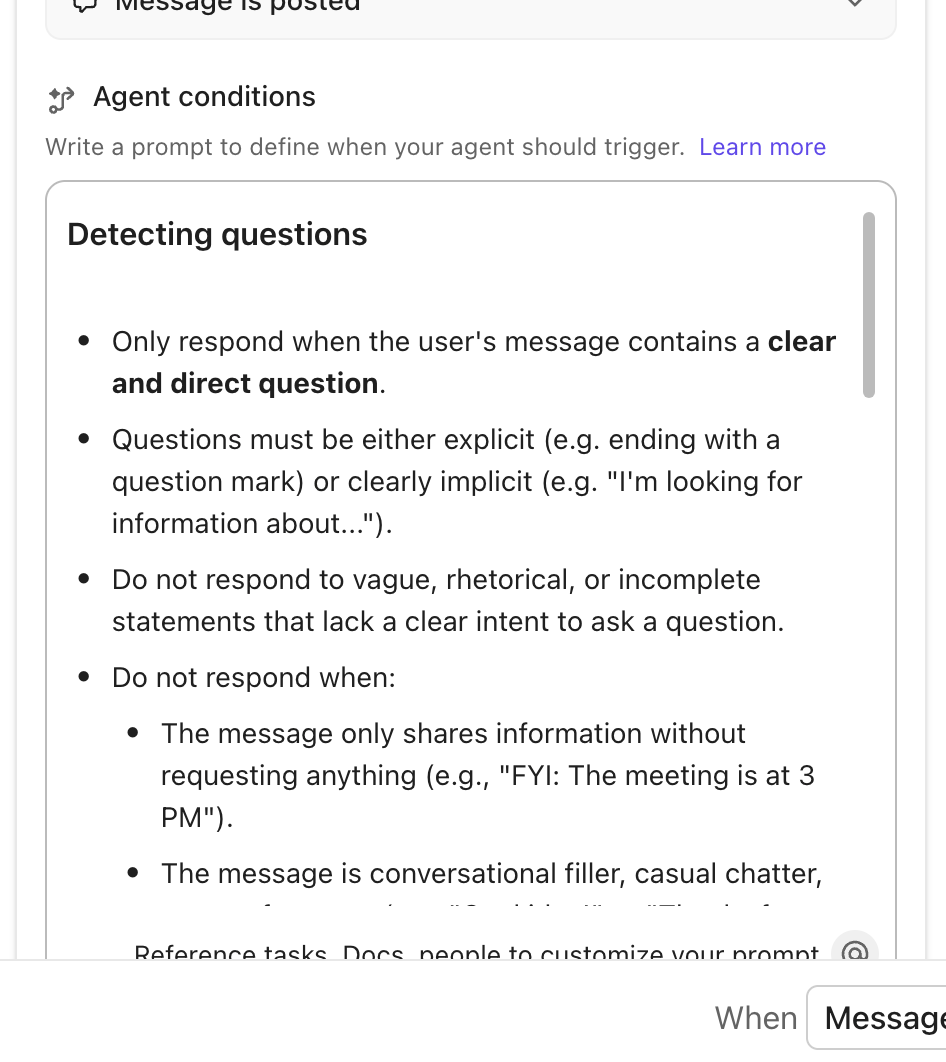

CodeTogether brings real-time collaborative code review straight into your code editor, flipping the usual asynchronous review process into live pair programming sessions. Developers can jump in from Eclipse, IntelliJ, or VS Code. In fact, guests don’t even need to match the host’s IDE and can even join through a browser.

Here’s how to build collaboration that thrives despite distance, differing schedules, and competing priorities. 🪄

Chances are, your cross-team reviewers won’t even be online at the same time as you. Rather than trying to squeeze in a “quick call,” here’s a better way:

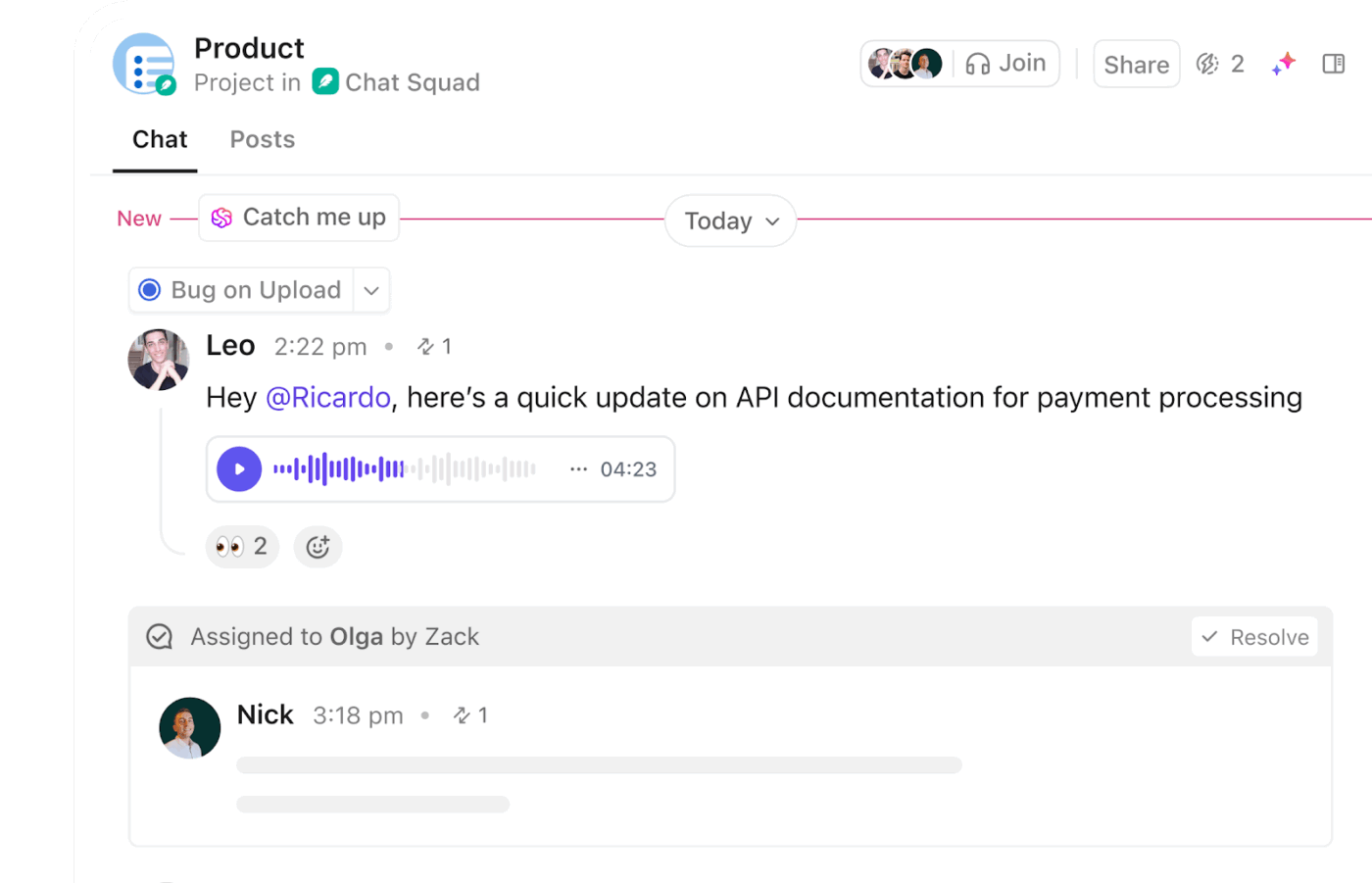

🚀 ClickUp Advantage: Async reviews only work when communication stays clear and easy to follow. ClickUp Chat keeps those conversations connected to the work itself, so updates don’t get lost overnight.

You can post your pull request link, share quick context, and tag teammates who need to review. These features are also compatible with mobile devices.

Writing code documentation is part of shipping the feature. Every cross-team PR should:

Now, here’s what usually happens: the first cross-team PR is painful because there’s no documentation, and you write it as part of that PR. The next five PRs are smooth because reviewers can self-serve. By the 10th PR, new team members are reviewing your code confidently because the knowledge is now outside your head.

Manual context-switching is where reviews slow down. Connect everything:

The goal is that clicking one link should give you the whole story.

🚀 ClickUp Advantage: With ClickUp Brain MAX, you can unify your tools, eliminating AI sprawl. Its contextual universal search lets you pull up related PRs, tickets, and docs from ClickUp, GitHub, and even Google Drive in seconds. Use voice commands to create or update tickets without switching tabs, while AI-powered automation ensures updates flow across your ecosystem.

Single reviewer for refactoring? Works. Single reviewer for authentication changes that touch every microservice? You’re asking for a 2 a.m. incident. For critical systems:

Yes, it’s slow, but production incidents are slower.

🔍 Did You Know? Michael Fagan, while at IBM in the 1970s, developed the first formal system for peer code review: the Fagan Inspection. This structured process defines roles and steps like planning, preparation, inspection meetings, rework, and follow-up to catch defects early in the development lifecycle.

The same person reviewing every external PR becomes a bottleneck, which can lead to burnout. This is what an ideal scenario looks like:

The person on rotation knows they’re the unblocker that week, while everyone else knows they can focus on their own work.
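A weekly rotation does not need special tooling. As a minimal sketch, assuming a fixed list of reviewers and a handover every Monday, the Python below picks the person on duty for any given date. The reviewer names and the `reviewer_on_rotation` helper are hypothetical.

```python
from datetime import date


def reviewer_on_rotation(reviewers, day):
    """Pick one reviewer per week (Monday to Sunday), cycling through the list."""
    # Ordinal day 1 is a Monday, so this counts whole Monday-aligned weeks
    # and keeps the cycle continuous across year boundaries.
    week_index = (day.toordinal() - 1) // 7
    return reviewers[week_index % len(reviewers)]


team = ["ana", "ben", "chloe"]  # hypothetical reviewers
for d in (date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15)):
    print(d.isoformat(), reviewer_on_rotation(team, d))
```

Counting weeks from a fixed origin, rather than using the week number within the year, avoids the same person being skipped or repeated when the year rolls over.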

Here, we’re talking specifically about cross-team reviews:

This is where you turn ‘we should write better PRs’ into ‘here’s exactly what a good PR looks like for our team.’

Becoming a better programmer is difficult if you don’t measure. However, most teams track vanity metrics that don’t indicate whether reviews are effective.

Here’s what matters. 📊

Averages hide the PRs that sit for a week while your feature is dying, so don’t rely on them alone. This is what to look at:
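To see how an average can mislead, consider a small Python sketch over made-up wait times. The sample data, the 48-hour ‘stuck’ cutoff, and the `summarize_waits` helper are assumptions for illustration.

```python
import statistics


def summarize_waits(wait_hours, stuck_after=48):
    """Summarize review wait times and list the PRs an average would hide."""
    return {
        "mean": statistics.mean(wait_hours),
        "median": statistics.median(wait_hours),
        "max": max(wait_hours),
        "stuck": [h for h in wait_hours if h > stuck_after],
    }


# Hypothetical hours from review request to first response for ten PRs.
waits = [2, 3, 3, 4, 4, 5, 6, 6, 7, 168]
print(summarize_waits(waits))
```

In this sample the mean is 20.8 hours and the median is 4.5, and neither number on its own reveals the one PR that waited a full week. Reporting the maximum and the list of stuck PRs alongside the typical case keeps that outlier visible.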

The whole point of reviews is to catch bugs before customers do. Track:

🔍 Did You Know? Code review anxiety is not limited to junior developers. A study found that developers at all experience levels can experience anxiety related to code reviews. This challenges the common myth that only less experienced developers are affected.

Your team will tell you if reviews don’t work, but only if you ask every quarter:

If satisfaction is dropping, your metrics might look fine, but your development process is broken. Maybe reviewers are bikeshedding variable names while missing architectural problems. Maybe cross-team reviews feel hostile. The numbers don’t tell you this; your team does.

💡 Pro Tip: Create a quarterly feedback loop with a ClickUp Form to understand how your review process feels to the team. Using a software development template, you can spin up a quick survey that asks focused questions, like how useful reviews are, whether they cause blockers, or if feedback feels constructive.

Did streamlining reviews actually make you ship faster?

The goal here is to achieve sustainable speed while maintaining quality. Measure both, or you’re just measuring how fast you can ship bugs.

Create a ClickUp Dashboard to track every pull request, reviewer, and outcome in one place and see what’s slowing your release cycle.

Set up cards that highlight:

Stick it on a board in the office or share it in Monday’s standup. When metrics are visible, they matter.

You can also Schedule Reports in ClickUp to ensure the right people receive those insights on autopilot. Just add their email addresses, select a regular cadence (daily, weekly, monthly), and they’ll receive a PDF snapshot.

After that, you can easily review comment patterns:

Here’s what Teresa Sothcott, PMO at VMware, had to say about ClickUp:

‘ClickUp Dashboards are a real game changer for us because we now have a true real time view into what’s happening. We can easily see what work we’ve completed and we can easily see what work is in progress.’

Are some teams doing all the work while others ghost?

Use this data to rebalance or formalize expectations. ‘We review your stuff, you review ours’ should be explicit, not hoped for.
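One simple way to get that data is to count cross-team reviews given and received per team. The Python sketch below assumes you can export completed reviews as (author team, reviewer team) pairs; the team names and the `review_balance` helper are hypothetical.

```python
from collections import Counter


def review_balance(reviews):
    """Return reviews given minus reviews received per team, cross-team only.

    reviews is a list of (author_team, reviewer_team) pairs, one per review.
    """
    given, received = Counter(), Counter()
    for author_team, reviewer_team in reviews:
        if author_team == reviewer_team:
            continue  # same-team reviews say nothing about reciprocity
        given[reviewer_team] += 1
        received[author_team] += 1
    teams = set(given) | set(received)
    return {team: given[team] - received[team] for team in teams}


reviews = [
    ("payments", "platform"),
    ("payments", "platform"),
    ("platform", "payments"),
    ("web", "web"),
]
print(review_balance(reviews))
```

A positive number means a team reviews more than it asks for, and a strongly negative one is a prompt to have the ‘we review your stuff, you review ours’ conversation.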

Strong code reviews move teams forward. They turn feedback into collaboration, prevent last-minute surprises, and help every developer feel confident before hitting merge. When the process flows, the entire release cycle feels lighter.

ClickUp gives that flow a serious upgrade. It ties together review tasks, team updates, and discussions in a connected space while ClickUp Brain keeps things moving. Review requests find the right people automatically, comments turn into actionable tasks, and every pull request stays visible without chasing updates.

Sign up for ClickUp for free today and see how fast code reviews can move when everything (and everyone) clicks. ✅

To streamline code reviews, focus on keeping pull requests manageable by limiting code changes to around 200-400 lines at a time. Set clear review checklists and provide timely feedback. Automation, such as linting, static analysis, and CI/CD integrations, can handle routine quality checks.

Assign reviewers based on expertise and use collaboration tools to centralize comments. ClickUp can help by linking PRs to tasks, so everyone knows who’s reviewing what and deadlines are visible across time zones.

Enforce coding standards, run automated tests, and use static analysis tools for improving code quality. Conduct frequent and structured peer reviews and prioritize clean, readable, and well-tested code. ClickUp Dashboards can track quality metrics, maintain checklists for reviewers, and create reports to monitor code health.

Platforms like GitHub, GitLab, and Bitbucket are great for cross-team reviews. Code review tools like Danger or SonarQube can automate checks. Additionally, integrating PR tracking into ClickUp keeps everyone aligned and reduces bottlenecks.

Typically, two to three reviewers work best. One should be familiar with the code area, while another can provide a fresh perspective. For routine changes or smaller teams, one reviewer may suffice, while critical or large changes require more than three.

Automation can run tests, check code style, flag vulnerabilities, and send reminders for pending reviews. ClickUp’s automation capabilities can assign PRs, update statuses, and notify reviewers, making the process faster and more consistent.

© 2026 ClickUp