Agentic Reasoning: Role in Decision-Making and Problem-Solving

Agentic reasoning is becoming a cornerstone in how AI systems are built, especially when they need to do more than follow instructions. You’re no longer looking for tools that wait for input. You need systems that can think, adapt, and make the next move.

Most AI today is still reactive. It answers questions, automates tasks, and runs on scripts. But as projects get more complex and data sources multiply, that’s no longer enough. You need reasoning, not just execution.

That’s where agentic AI comes in. It handles complex tasks, navigates ambiguity, and pulls from enterprise data to make smarter decisions. Instead of asking “What should I do next?” it already knows.

This is the kind of intelligence ClickUp Brain was built to support. Designed for teams running high-context, high-speed workflows, it helps you plan, prioritize, and automate, all with context awareness baked in.

Interesting, right? Let’s explore how agentic AI reasoning works, what makes it different from traditional systems, and how you can build it into your workflows effectively.

Building AI that just follows instructions won’t cut it anymore. Here’s why agentic reasoning is redefining how intelligent systems work:

Agentic reasoning is when an AI system can set goals, make decisions, and take action without needing constant direction. It’s a shift from reactive execution to intelligent autonomy.

You’ll see it in action when:

These aren’t hardcoded tasks. They’re goal-driven behaviors supported by reasoning models that interpret context and choose actions with purpose.

That’s what sets agentic AI reasoning apart and why it’s a foundation for modern intelligent systems.

📖 Read More: To explore more AI tools for task optimization, here’s a list of the Best AI Apps to Optimize Workflows

As you work with more advanced AI models, traditional logic trees and predefined scripts become limiting.

You need systems that:

That’s where agentic AI reasoning shows its strength. It allows AI agents to bridge gaps between intent and execution, especially in complex environments like enterprise search, product management, or large-scale software development.

It also opens the door to building AI systems that improve over time. With the right architecture, agentic models can learn from outcomes, adjust priorities, and refine outputs based on what works.

Here’s how the two approaches stack up when applied to real-world AI workflows:

| Feature | Agentic systems | Non-agentic systems |
| --- | --- | --- |
| Decision-making | Autonomous, context-aware | Trigger-based, reactive |
| Goal-setting | Dynamic and internal | Predefined by external inputs |
| Adaptability | Learns from results and feedback | Requires manual intervention |
| Data handling | Synthesizes across multiple data sources | Limited to one task or dataset at a time |
| Output | Personalized, evolving responses | Static, templated outputs |

Non-agentic workflows have their place, primarily for repetitive automation or narrow-scope tools. But if you’re building for complex problem-solving, context switching, or strategic execution, agentic models offer a far broader range of capabilities.

Building agentic intelligence isn’t about adding more layers to existing automation. It’s about designing AI systems with a reasoning process that mirrors how real agents set objectives, evaluate progress, and adapt over time.

Here are the essential components that power an agentic workflow:

Every reasoning system starts with a clear objective. This goal can be user-defined or generated internally in agentic AI systems based on new inputs or emerging patterns.

The key is initiative: goals aren’t just followed. They’re generated, evaluated, and refined.

Once a goal is defined, the AI breaks it down into smaller tasks. This involves reasoning about dependencies, available resources, and timing.

For example, an agent asked to migrate a legacy database might:

These systems don’t just complete steps; they reason through the best order of operations.
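Reasoning about the order of operations can be sketched as a dependency graph that the agent sorts before it acts. The snippet below is a minimal illustration, not a reference implementation: the subtask names in `MIGRATION_PLAN` are hypothetical stand-ins for a database migration, and the ordering uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical migration subtasks, each mapped to the subtasks it depends on.
MIGRATION_PLAN = {
    "audit_schema": set(),
    "back_up_data": {"audit_schema"},
    "map_fields": {"audit_schema"},
    "migrate_records": {"back_up_data", "map_fields"},
    "validate_results": {"migrate_records"},
}


def plan_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one execution order in which every task follows its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())
```

Calling `plan_order(MIGRATION_PLAN)` yields an order that starts with the schema audit and ends with validation. A real agent would also weigh resources and timing, which this sketch leaves out.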

Without memory, there’s no adaptation. Agentic AI needs a persistent understanding of past events, decisions, and external changes. This memory supports:

Unlike traditional logic trees, agentic models can evaluate what worked and what didn’t and continuously improve through iteration.
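One simple way to picture "evaluating what worked and what didn’t" is a record of outcomes per strategy that the agent consults before choosing its next approach. The class below is a hedged sketch: `OutcomeMemory` and its method names are invented for illustration, and it keeps everything in process memory, whereas a production agent would need a persistent store.

```python
from collections import defaultdict


class OutcomeMemory:
    """Keeps a running record of how each strategy performed per task type."""

    def __init__(self) -> None:
        # (task_type, strategy) -> [successes, attempts]
        self._stats = defaultdict(lambda: [0, 0])

    def record(self, task_type: str, strategy: str, succeeded: bool) -> None:
        """Store the result of one attempt."""
        stats = self._stats[(task_type, strategy)]
        stats[0] += int(succeeded)
        stats[1] += 1

    def success_rate(self, task_type: str, strategy: str) -> float:
        """Observed success rate, or 0.0 if the strategy was never tried."""
        successes, attempts = self._stats.get((task_type, strategy), (0, 0))
        return successes / attempts if attempts else 0.0

    def best_strategy(self, task_type: str, candidates: list[str]) -> str:
        """Pick the candidate with the highest observed success rate."""
        return max(candidates, key=lambda s: self.success_rate(task_type, s))
```

After a few `record(...)` calls, `best_strategy("summarize", ["extractive", "abstractive"])` returns whichever option has succeeded more often so far. Ties go to the first candidate listed.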

Execution isn’t the final step; it’s an ongoing, evolving process. The reasoning engine monitors the outcome of each task and makes adjustments as needed.

In a document summarization workflow, for instance, the agent might:

This flexibility separates non-agentic workflows from intelligent systems that can operate independently and still produce accurate, context-aware responses.
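The monitor-and-adjust cycle can be illustrated with a toy summarization loop. Everything here is an assumption made for the sketch: the "summarizer" simply keeps the first few sentences, and the quality check is a list of terms the summary must mention. The point is the loop itself: act, inspect the result, and widen the attempt when something is missing.

```python
def summarize(sentences: list[str], max_sentences: int) -> str:
    """Toy summarizer: keep the first `max_sentences` sentences."""
    return " ".join(sentences[:max_sentences])


def missing_terms(summary: str, required_terms: list[str]) -> list[str]:
    """Return the required terms that the summary does not mention."""
    lowered = summary.lower()
    return [term for term in required_terms if term.lower() not in lowered]


def summarize_with_monitoring(
    sentences: list[str], required_terms: list[str], start: int = 1
) -> tuple[str, int]:
    """Widen the summary until it covers every required term or runs out of text."""
    budget = start
    while True:
        summary = summarize(sentences, budget)
        if not missing_terms(summary, required_terms) or budget >= len(sentences):
            return summary, budget
        budget += 1  # Adjust the plan: the last attempt left something out.
```

The function returns both the summary and the sentence budget it ended up using, so a caller can see how much adjustment was needed.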

When these components work together, you get a smarter system that learns, adjusts, and scales with complexity. Whether you’re building AI applications for engineering, product, or knowledge management, agentic reasoning forms the foundation for consistent, intelligent outcomes.

📖 Also Read: How to Build and Optimize Your AI Knowledge Base

Designing an AI that does the work is easy. Designing one that decides what work matters and how to do it is where things get interesting. That’s where agentic reasoning becomes more than a feature. It becomes the architecture.

Here’s what it takes to implement it into your stack.

You don’t give agentic systems step-by-step instructions. You define boundaries like what the agent can touch, what goals it should pursue, and how far it’s allowed to explore.

That means:

This makes your system resilient. It can handle unexpected input, shifting project scopes, or incomplete data without breaking the flow.
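Boundaries of this kind can be written down as data rather than as step-by-step instructions. The sketch below assumes a hypothetical `AgentBoundary` policy with three fields matching the description above: what the agent can touch, which goals it may pursue, and how far it may explore (modeled here as a step limit).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentBoundary:
    """Hypothetical policy: what the agent may touch, pursue, and explore."""

    allowed_resources: frozenset[str]
    allowed_goals: frozenset[str]
    max_steps: int

    def permits(self, goal: str, resource: str, steps_taken: int) -> bool:
        """True only if the goal, the resource, and the step count are all in bounds."""
        return (
            goal in self.allowed_goals
            and resource in self.allowed_resources
            and steps_taken < self.max_steps
        )
```

An agent would call `permits(...)` before each action. For example, a boundary allowing only `{"tasks", "docs"}` rejects any attempt to touch a `"billing"` resource, whatever the goal.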

At the heart of implementation is your reasoning engine. The logic layer is responsible for translating goals into tasks, adapting to feedback, and sequencing actions dynamically.

To design this, you’ll need:

Think of it like building a product manager inside your AI. One that constantly evaluates what matters now, not just what was asked originally.

Here’s where most implementations fail: People build intelligent agents that sit on top of non-agentic systems. You can’t plug agentic behavior into a rigid, linear workflow and expect it to thrive.

Your environment must support:

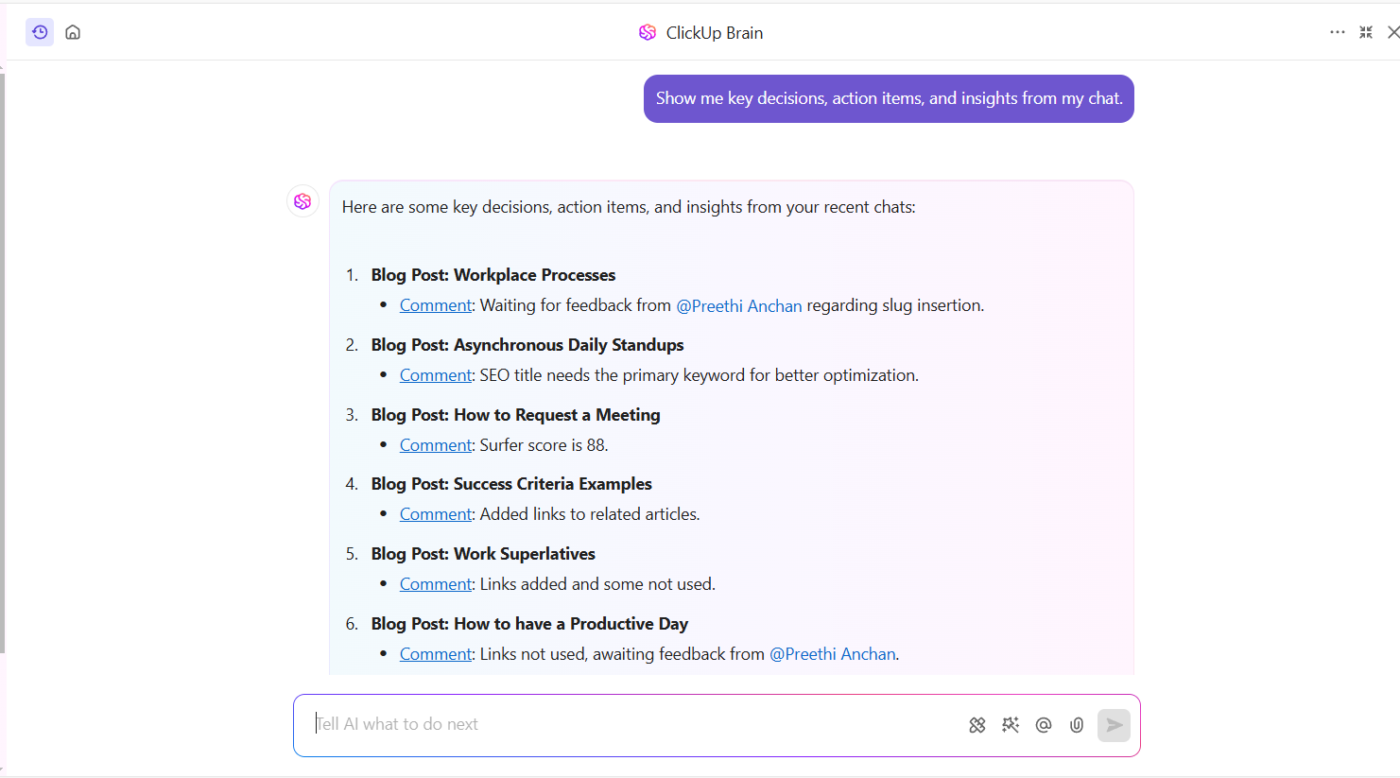

This is where ClickUp Brain comes in. It doesn’t just automate; it enables the agent to reason across tasks, documents, data, and dependencies. When your agent decides a spec document is outdated, it can flag the document, reassign the task, and adjust the sprint goal without waiting for you to notice.

ClickUp Brain plays a significant role in decision-making and problem-solving through its ability to analyze, organize, and surface actionable insights. Here’s how it helps:

By leveraging its AI-powered task recommendations and workflow automation features, you can set and track goals, automate tasks, and make informed decisions with ease. Here’s how ClickUp Brain can simplify goal setting and tracking, ensuring alignment with your strategic objectives.

Automation is at the heart of ClickUp Brain, enabling you to focus on high-value tasks while repetitive processes are handled seamlessly:

ClickUp Brain empowers you to make data-driven decisions by providing real-time insights and recommendations:

By integrating ClickUp Brain into your workflow, you can achieve greater efficiency, clarity, and focus. Whether you’re setting ambitious goals, automating repetitive tasks, or making strategic decisions, ClickUp Brain is your ultimate reasoning partner.

With built-in features like AI-powered task recommendations and workflow automation, ClickUp Brain helps your agents focus on impact and not just execution.

With talk to text in ClickUp Brain Max, you can capture goals, risks, or next steps instantly—without pausing to type. The input is immediately processed in context, allowing your workspace to reason across tasks, docs, and dependencies in real time. It’s a faster, more natural way to drive agentic workflows where adaptability and speed matter most.

No agent gets it right the first time. That’s fine if your system is built to learn. Feedback loops are where agentic AI sharpens its edge.

Your job is to:

If you want a system that scales across teams and projects, you have to trade rigidity for relevance.

Agentic reasoning isn’t just about intelligence. It’s about infrastructure. The choices you make around goals, planning, feedback, and environment will decide whether your agent can do more than act. It has to think.

And with tools like ClickUp Brain, you’re not duct-taping reasoning onto old workflows. You’re building a system that can make decisions as fast as your teams move.

📖 Read More: How to Build an AI Agent for Better Automation

Agentic reasoning is being implemented in production environments where logic trees and static automations fail. These are live systems solving for complexity, ambiguity, and strategic decision-making.

Here’s what it looks like in action:

At a fintech company running weekly sprints across five product squads, an agentic system was deployed to monitor scope creep and sprint velocity.

The agent:

It reasons across time, dependencies, and progress data, not just project metadata.

At a B2B SaaS company, L2 support agents were drowning in repeat escalations. An agent was trained on internal ticket threads, documentation updates, and product logs.

It now:

Over time, it started surfacing product bugs from recurring patterns, something no human had caught because of channel fragmentation.

An AI infra team managing model deployment (MLflow, Airflow, Jenkins) implemented a DevOps agent trained on historical failures.

It autonomously:

This moved incident response from manual alerting to automated reasoning and action with reduced build downtime.

👀 Did You Know? The earliest concept of an AI agent dates back to the 1950s, when researchers built programs that could play chess and reason through moves.

This makes game strategy one of the first real-world tests for autonomous decision-making.

In a law firm managing thousands of internal memos, contracts, and regulatory updates, search was failing under volume.

A retrieval agent now:

The difference? It doesn’t keyword match. It reasons across structured and unstructured data, tuned to user role and case context.

In a health-tech org scaling rapidly across markets, leadership needed a way to adapt quarterly OKRs in flight.

A planning agent was trained to:

It allowed leadership to adapt objectives within the quarter, something previously limited to retro planning.

Together, these examples make it clear that agentic reasoning systems enable AI to operate inside your real business logic, where static rules and workflows can’t keep up.

Building agentic AI is an architectural shift. And with that comes real friction. While the potential is massive, the path to operationalizing agentic reasoning comes with its own set of challenges.

If you’re serious about adoption, these are the constraints you’ll need to design around.

The promise of agentic systems is that they act independently, but that’s also the risk. Without clear boundaries, agents may optimize for the wrong objective or act without enough context.

You’ll need to:

Total freedom isn’t the goal. Safe, goal-aligned autonomy is.
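One common pattern for goal-aligned autonomy is an approval gate: the agent acts on its own only when an action is both low-risk and high-confidence, and hands everything else to a person. The sketch below is illustrative only. The `risk` and `confidence` scores, the thresholds, and the action names are assumptions, and how you compute those scores is the hard part this example does not address.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    risk: float        # 0.0 (harmless) to 1.0 (high impact); scoring is assumed
    confidence: float  # the agent's own confidence estimate, 0.0 to 1.0


def gate(
    action: ProposedAction, max_risk: float = 0.3, min_confidence: float = 0.8
) -> Decision:
    """Let low-risk, high-confidence actions run; send everything else to a human."""
    if action.risk <= max_risk and action.confidence >= min_confidence:
        return Decision.EXECUTE
    return Decision.ESCALATE
```

With these default thresholds, flagging an outdated document (low risk, high confidence) would run automatically, while reassigning sprint work (higher risk) would be escalated for review.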

Agents are only as good as the training data they’re built on, and most organizations still have fragmented, outdated, or contradictory datasets.

Without reliable signals, reasoning engines will:

Fixing this means consolidating data sources, enforcing standards, and continuously improving your labeled datasets.

Many companies attempt to bolt agentic capabilities onto rigid, non-adaptive systems, and the result breaks fast.

Agentic systems need:

If your current stack can’t adapt, the agent will hit a ceiling, no matter how intelligent it is.

👀 Did You Know? NASA’s Curiosity rover uses an AI system called AEGIS to autonomously select which rocks to analyze on Mars.

It makes real-time scientific decisions without waiting for instructions from Earth.

Retrieval-Augmented Generation (RAG) is powerful, but without agentic logic, most RAG systems remain passive.

Problems arise when:

To close this gap, RAG systems need to reason through what to retrieve, why it matters, and how it fits the task, not just generate text from whatever they find. That means upgrading your RAG system to operate like a strategist, not a search engine.
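A small step in that direction is to score each retrieved passage against the task and discard anything that does not clear a relevance bar, instead of passing every hit to the generator. The sketch below uses plain word overlap as a stand-in scorer; that is an assumption made to keep the example self-contained, and a real system would more likely use embeddings or a reranking model. The function names and thresholds are hypothetical.

```python
def relevance(task: str, passage: str) -> float:
    """Fraction of the task's distinct words that also appear in the passage."""
    task_words = set(task.lower().split())
    passage_words = set(passage.lower().split())
    return len(task_words & passage_words) / len(task_words) if task_words else 0.0


def select_context(
    task: str, passages: list[str], min_score: float = 0.5, top_k: int = 2
) -> list[str]:
    """Keep only passages that clear the relevance bar, best first."""
    scored = [(relevance(task, p), p) for p in passages]
    kept = [(score, p) for score, p in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in kept[:top_k]]
```

When nothing clears the bar, `select_context` returns an empty list, which gives the agent a signal to rephrase the query or ask for clarification rather than generate from irrelevant text.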

Even if the tech works, people resist giving AI control over prioritization, planning, or cross-functional coordination.

You’ll need to:

Adoption is less about the model and more about clarity, control, and transparency.

Your agent’s ability to adapt depends on what it learns from. If feedback loops aren’t in place, it stagnates.

That means:

Agentic AI systems are meant to continuously improve. Without feedback architecture, they plateau.

Agentic reasoning is a system of models, logic, constraints, and workflows built to reason under pressure. If you treat it like just another automation layer, it will fail.

But if you design for relevance, feedback, and control, your system won’t just act. It’ll think and keep getting better.

⚡ Template Archive: Top AI Templates to Save Time and Improve Productivity

Agentic reasoning is becoming the new standard for how intelligent systems operate in real-world environments. Whether you’re using large language models to handle complex queries, deploying AI solutions to automate decisions, or designing agents that can perform tasks across tools, data, and teams, these systems now face a new bar. They need to reason, adapt, and act with context and intent.

From surfacing the most relevant documents to making sense of fragmented company knowledge and executing on complex tasks with the right context, the ability to deliver relevant information at the right moment is no longer optional.

With ClickUp Brain, you can start building agentic workflows that align work to goals, not just check off tasks. Try ClickUp today.

© 2026 ClickUp