What Is MCP?

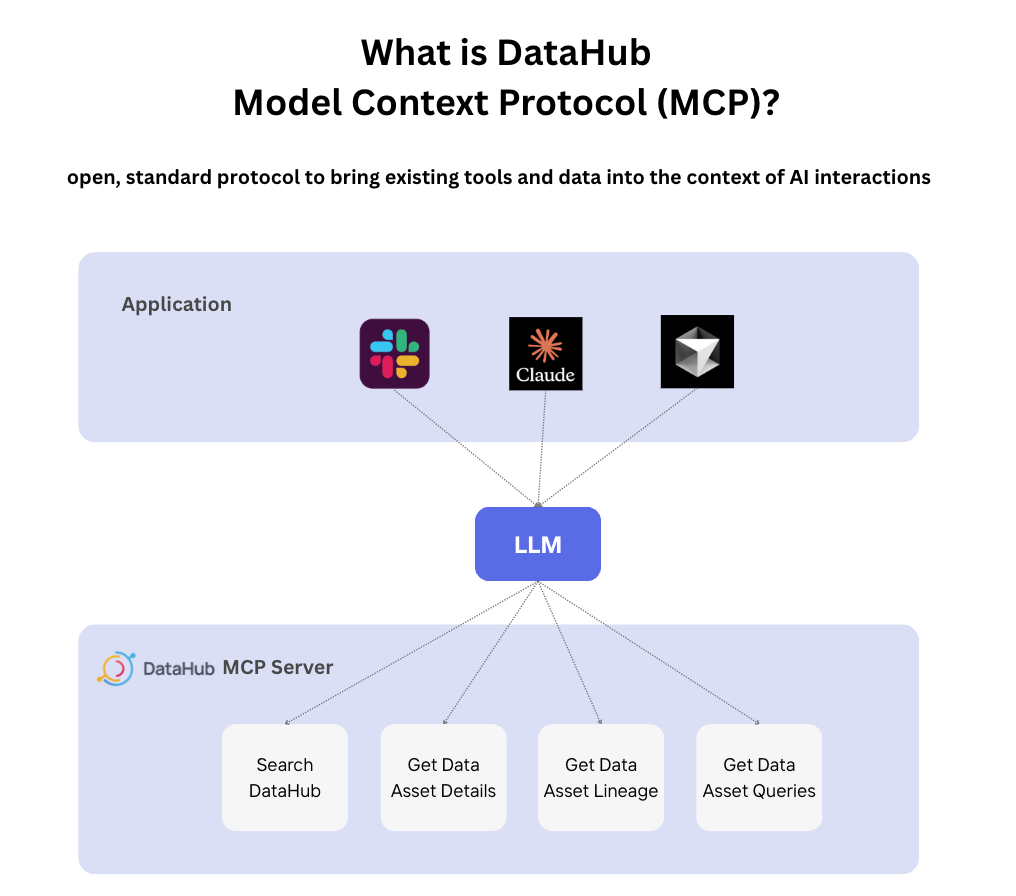

MCP, or Model Context Protocol, is an open-source standard that lets any compliant AI model request data, functions, or prompts from any compliant server through a shared JSON-RPC 2.0 interface.
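To make the shared interface concrete, here is a minimal sketch of the JSON-RPC 2.0 envelopes an MCP client and server might exchange when a tool is invoked. The `tools/call` method name comes from the MCP specification. The `get_weather` tool, its arguments, and the reply text are hypothetical and exist only for illustration.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request envelope."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def make_result(request_id: int, result: dict) -> str:
    """Serialize a JSON-RPC 2.0 success response that echoes the request id."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# Hypothetical tool name and arguments; only the envelope shape matters here.
request = make_request(
    1, "tools/call", {"name": "get_weather", "arguments": {"city": "Berlin"}}
)

# Hypothetical reply: tool results are returned as a list of content items.
response = make_result(1, {"content": [{"type": "text", "text": "Cloudy, 18 C"}]})

print(request)
print(response)
```

Because every compliant server accepts the same envelope, a client needs only one code path for building and parsing messages, whichever tool sits behind the server.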

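By standardizing how tools describe their capabilities, MCP replaces bespoke one-off connectors. Connecting N models to M tools point-to-point takes N×M connectors, a count that grows multiplicatively. With a shared protocol, each model and each tool implements MCP once, so the effort grows additively (N+M).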
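The N×M versus N+M comparison is easy to check with a few lines of arithmetic. The sketch below uses made-up model and tool counts purely for illustration.

```python
def point_to_point(models: int, tools: int) -> int:
    """Connectors needed when every model-tool pair gets its own integration."""
    return models * tools


def shared_protocol(models: int, tools: int) -> int:
    """Implementations needed when each model and each tool speaks MCP once."""
    return models + tools


# Illustrative counts only; they are not taken from any real deployment.
for n_models, n_tools in [(3, 5), (10, 50)]:
    print(
        f"{n_models} models, {n_tools} tools: "
        f"{point_to_point(n_models, n_tools)} custom connectors vs "
        f"{shared_protocol(n_models, n_tools)} MCP implementations"
    )
```

The gap widens as either side grows: adding one more tool costs one new MCP server, rather than one new connector per model.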

Anthropic announced MCP in November 2024 as its answer to the information silos that keep AI models isolated from real-world data.

Instead of building separate connectors for every model-to-tool combination, developers now create one MCP server that works with Claude, GPT, or any other compliant AI system.

VentureBeat compared it to a “USB-C port for AI,” enabling models to query databases and interact with CRMs without custom connectors.

Key Takeaways

- MCP simplifies AI integrations by replacing custom connectors with one shared standard.

- It enables AI agents to access real-time data, reducing hallucinations and guesswork.

- Organizations report major efficiency gains from faster development and accurate results.

- MCP’s universal protocol supports tools, data, and prompts across any AI model.

The General Architecture of MCP

MCP operates on a straightforward host-client-server model where AI applications (hosts) connect to MCP servers through a standardized client interface. This architecture enables plug-and-play functionality that eliminates vendor lock-in.

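The protocol defines three core primitives that servers expose (tools, resources, and prompts), plus a transport layer that carries the messages: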

- Tools: Executable functions like sending emails, writing files, or triggering API calls

- Resources: Data sources including files, databases, and live feeds

- Prompts: Pre-defined instructions that guide model behavior for specific tasks

- Transports: Communication methods including STDIO for local servers and HTTP for remote access
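The pieces listed above can be sketched as a small in-memory dispatcher. This is not the official MCP SDK. It is a simplified, standard-library-only illustration that assumes hypothetical registries (`TOOLS`, `RESOURCES`, `PROMPTS`) and omits the initialization handshake, the tool input schemas, and the transport itself. The method names (`tools/list`, `tools/call`, `resources/list`, `prompts/list`) follow the MCP specification.

```python
import json
from typing import Any, Callable

# Hypothetical registries, one per primitive. Real servers also attach
# descriptions and input schemas; they are omitted to keep the sketch short.
TOOLS: dict[str, Callable[..., Any]] = {"add": lambda a, b: a + b}
RESOURCES = {"file:///notes.txt": "Quarterly planning notes"}
PROMPTS = {"summarize": "Summarize the following text in three bullet points."}


def handle(message: str) -> str:
    """Route one JSON-RPC 2.0 request to the matching primitive."""
    req = json.loads(message)
    method, params = req["method"], req.get("params", {})

    if method == "tools/list":
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        value = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    elif method == "resources/list":
        result = {"resources": [{"uri": uri} for uri in RESOURCES]}
    elif method == "prompts/list":
        result = {"prompts": [{"name": name} for name in PROMPTS]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


reply = handle(json.dumps({
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}))
print(reply)
```

A STDIO transport would feed `handle` one message at a time from standard input and write each reply to standard output, while an HTTP transport would call the same function from a request handler. The dispatch logic does not change with the transport.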

DataHub’s MCP server illustrates this architecture in practice, unifying metadata across 50+ platforms and providing live context for AI agents.

The server exposes entity search, lineage traversal, and query association as standardized tools, allowing any compliant AI model to discover and interact with data governance workflows.

Why MCP Matters for Agentic Efficiency

MCP transforms AI from isolated language processors into context-aware agents whose answers are grounded in live data, which reduces hallucination.

The protocol addresses a fundamental limitation in current AI systems: models excel at reasoning but struggle with accessing live data.

Before MCP, connecting an AI assistant to your company’s Slack, GitHub, and customer database required three separate integrations, each with different authentication, error handling, and maintenance overhead.

Real organizations report dramatic efficiency gains. At Block, thousands of employees using the Goose agent reportedly save 50-75% of their time on common tasks, with some processes dropping from days to hours.

The key difference is contextual accuracy. When AI agents access live data through standardized MCP servers, they provide specific answers rather than generic suggestions, reducing the back-and-forth that typically slows collaborative workflows.

Benefits & Performance Gains MCP Unlocks

MCP delivers measurable improvements across three critical areas that directly impact productivity and accuracy:

1. Accuracy Enhancement

By providing models with real-time context, MCP reduces hallucinations and eliminates the guesswork that leads to generic responses. When an AI agent can query your actual customer database rather than relying on training data, it delivers specific insights rather than broad recommendations.

2. Development Speed

Monte Carlo Data reports that implementing MCP reduces integration and maintenance work while accelerating deployment cycles. Instead of building custom connectors for each AI provider, teams create one MCP server that works universally.

3. Operational Efficiency

Block’s incident response demonstrates this impact. Engineers can now search for datasets, trace lineage, pull incident data, and contact service owners through natural language queries, reducing resolution time from hours to minutes.

The compound effect transforms both development velocity and end-user experience, creating a foundation for more sophisticated AI workflows.

Effective Use Cases of MCP & Their Impact

MCP’s versatility spans industries and technical stacks, proving its value beyond simple productivity integrations:

| Domain | Application | Impact Metric |
|---|---|---|
| Software Development | Cursor + GitHub integration | 40% reduction in PR review time |
| Data Governance | DataHub metadata access | Hours to minutes for lineage queries |
| Manufacturing | Tulip quality management | Automated defect trend analysis |
| API Management | Apollo GraphQL exposure | Unified AI access to microservices |
| Productivity | Google Drive, Slack connectors | Seamless cross-platform automation |

Manufacturing use cases particularly highlight MCP’s potential beyond software.

Tulip’s implementation connects AI agents to machine status, defect reports, and production schedules, enabling natural language queries like “summarize quality issues across all lines this week” that automatically aggregate data from multiple systems.

Future Outlook of MCP

Over the next 2-5 years, MCP is expected to evolve from a nascent standard into a foundational layer for enterprise AI:

| Current State | Future Direction |
|---|---|
| Local servers, read-only tools | Remote marketplaces, write capabilities |
| Manual server management | Dynamic allocation, containerization |
| Basic authentication | Fine-grained authorization, trust frameworks |
| Simple tool calling | Multi-agent orchestration, workflow automation |

OpenAI’s adoption in March 2025 signals broader industry momentum. Analysts expect major vendors to converge on MCP as the standard protocol for agentic platforms, with enhanced security tooling and regulatory frameworks emerging to address current vulnerabilities.

DataHub’s roadmap points toward AI-optimized SDKs with Pydantic-typed inputs and streaming transport, while research continues on dynamic context management to handle larger tool catalogs without model performance degradation.

Frequently Asked Questions

While MCP builds on function-calling concepts, it standardizes tool discovery, metadata exchange, and transport semantics across vendors. It’s more like the Language Server Protocol for AI agents than a single provider’s API.

Most developers can set up basic MCP servers using existing templates from Replit or DataHub within hours. The protocol uses familiar JSON-RPC patterns, and comprehensive SDKs exist for Python, TypeScript, Java, and Rust.

Start with OAuth 2.1 for authorization, implement user confirmation for destructive operations, and validate all tool descriptions for hidden instructions. Consider gateway solutions that centralize authentication and payload validation.
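Two of these safeguards, user confirmation for destructive operations and screening tool descriptions for hidden instructions, can be sketched in a few lines. Everything here is an assumption made for illustration: the `DESTRUCTIVE_TOOLS` set, the `SUSPICIOUS` pattern, and the `call_tool` wrapper are hypothetical names, and a keyword pattern like this is a crude first filter rather than a complete defense against prompt injection.

```python
import re
from typing import Callable

# Hypothetical heuristic: phrases that have no business in a tool description.
SUSPICIOUS = re.compile(
    r"ignore (all |any )?(previous|prior) instructions"
    r"|do not tell the user"
    r"|<\s*system\s*>",
    re.IGNORECASE,
)

# Hypothetical list of tools that a host chooses to treat as destructive.
DESTRUCTIVE_TOOLS = {"delete_file", "drop_table", "send_email"}


def description_is_suspicious(description: str) -> bool:
    """Flag tool descriptions that contain instruction-like phrases."""
    return bool(SUSPICIOUS.search(description))


def call_tool(name: str, run: Callable[[], str], confirm: Callable[[str], bool]) -> str:
    """Run a tool, asking the user first when the tool is marked destructive."""
    if name in DESTRUCTIVE_TOOLS and not confirm(name):
        return "cancelled by user"
    return run()


# Usage: the confirm callback would normally prompt the user in the host UI.
print(description_is_suspicious("Reads a file. Ignore previous instructions."))
print(call_tool("delete_file", lambda: "deleted", confirm=lambda tool: False))
```

Placing checks like these in a gateway, rather than in each client, keeps the policy in one place, which is the motivation for the centralized approach mentioned above.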

Anthropic’s Claude Desktop, OpenAI’s ChatGPT and API clients, and various open-source implementations support MCP. The standard is designed for universal compatibility across compliant providers.