How Microsoft Agentic AI Helps Teams Work Smarter, Not Harder

Oct 29, 2025

6 min read

After wrestling with five disconnected project tools last quarter, I watched my team spend three hours per week just syncing data between platforms.

That friction vanished when we piloted an autonomous agent that pulled updates from Slack, Jira, and our CRM without human prompting.

Microsoft’s entry into agentic AI promises similar relief at enterprise scale, and this guide unpacks exactly what they offer, how it works, and whether it fits your operation.

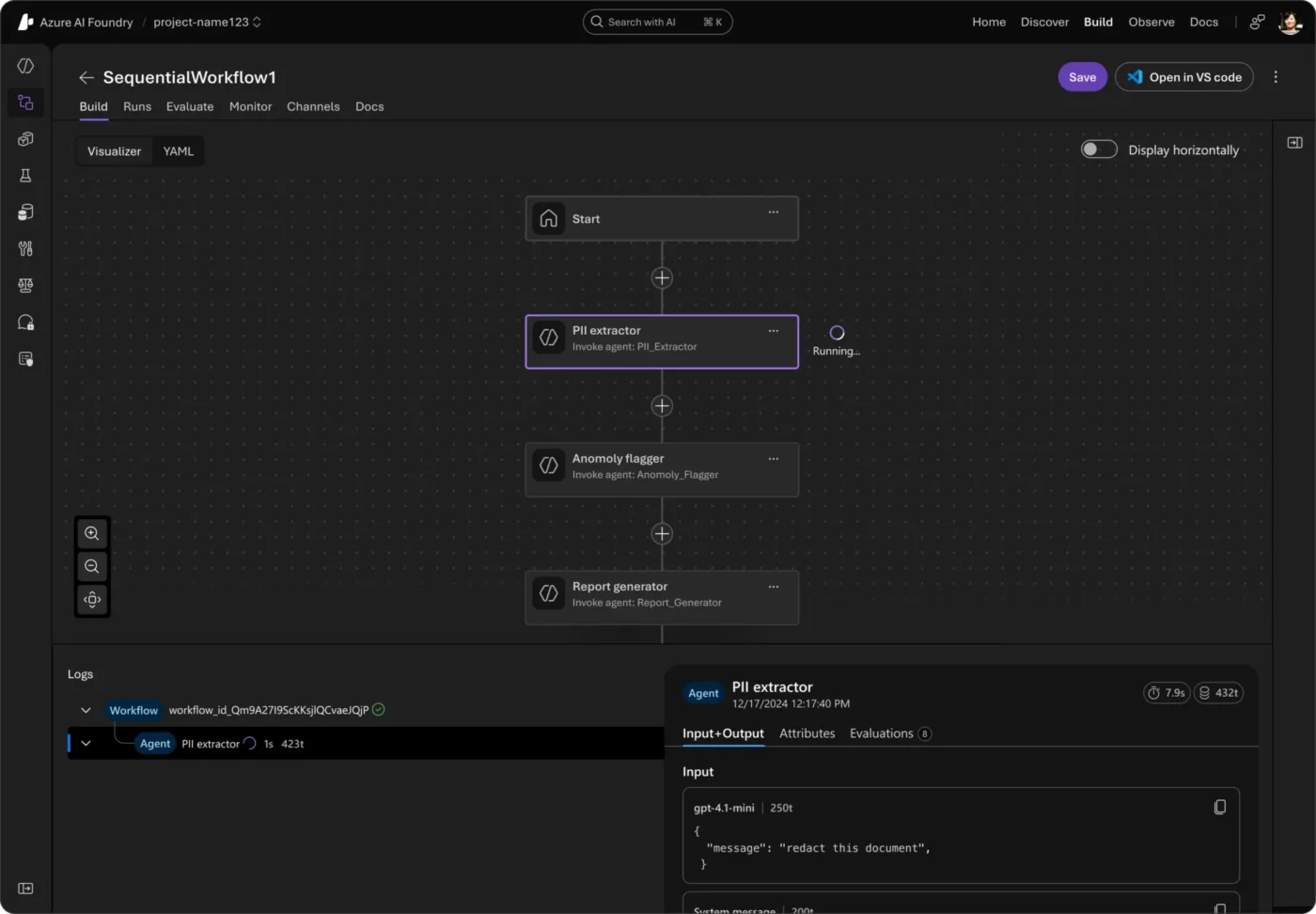

Yes, Microsoft offers agentic AI through multiple channels designed for enterprise adoption. The company unveiled its Azure AI Foundry Agent Service in preview at Ignite 2024, reaching general availability in mid-2025.

This multi-agent orchestration platform sits alongside the Microsoft Agent Framework, an open-source SDK that launched in public preview on October 1, 2025.

For Microsoft 365 users, the company added App Builder and Workflows agents to Copilot in October 2025, enabling teams to build apps and automate tasks via natural language.

These offerings position Microsoft as a cloud-first player emphasizing security, compliance, and integration with existing Azure and Office ecosystems rather than standalone AI novelty.

The architecture reflects lessons from research initiatives like AutoGen and production frameworks like Semantic Kernel, converging them into a unified runtime that handles identity management, telemetry, and responsible AI guardrails by default.

Microsoft’s agentic AI builds on a lead orchestrator agent that coordinates specialized sub-agents, a pattern Microsoft Research demonstrated in its Magentic-One system.

This design mirrors Salesforce’s Atlas reasoning engine, where a central controller delegates tasks to workers optimized for specific functions like web search, code execution, or document retrieval.

Each sub-agent can call tools and APIs, collaborate with peers, and maintain memory across multi-step workflows. The orchestrator monitors progress, handles failures, and adjusts the plan when a sub-agent returns unexpected results.
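The delegate, monitor, and reroute loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Magentic-One's or Azure AI Foundry's actual API: the `SubAgent` and `Orchestrator` classes, the capability labels, and the agent names are hypothetical, and a simple "try the next capable agent" rule stands in for full replanning.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SubAgent:
    """Hypothetical specialist that handles one capability (search, retrieval, ...)."""
    name: str
    capability: str
    run: Callable[[str], str]


@dataclass
class Orchestrator:
    """Hypothetical lead agent: routes each step, logs state, reroutes on failure."""
    agents: list[SubAgent]
    log: list[str] = field(default_factory=list)

    def execute(self, plan: list[tuple[str, str]]) -> dict[str, str]:
        """Run (capability, task) steps in order; return a task -> result map."""
        results: dict[str, str] = {}
        for capability, task in plan:
            capable = [a for a in self.agents if a.capability == capability]
            for agent in capable:
                try:
                    results[task] = agent.run(task)
                    self.log.append(f"{agent.name} completed {task!r}")
                    break  # step succeeded, move on to the next step
                except Exception as exc:  # failure: fall through to the next capable agent
                    self.log.append(f"{agent.name} failed {task!r}: {exc}")
            else:
                # Runs only if no agent succeeded (or none had the capability).
                results[task] = "UNRESOLVED"
                self.log.append(f"no agent could complete {task!r}")
        return results


def flaky_search(task: str) -> str:
    raise TimeoutError("search backend unavailable")  # simulated outage


orch = Orchestrator([
    SubAgent("web-search-primary", "search", flaky_search),
    SubAgent("web-search-backup", "search", lambda t: f"3 results for {t}"),
    SubAgent("doc-retriever", "retrieve", lambda t: f"policy text for {t}"),
])
result = orch.execute([("search", "Q3 regulations"),
                       ("retrieve", "AML policy"),
                       ("code", "plot trends")])
print(result)
for line in orch.log:
    print(line)
```

Marking a step as unresolved instead of raising keeps one failed sub-agent from aborting the whole workflow, which is the kind of failure handling the orchestrator is described as providing.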

| Component | Business Function |
|---|---|
| Orchestrator Agent | Routes tasks, monitors workflow state, handles exceptions |
| Tool-Caller Agent | Invokes external APIs, databases, or Azure Logic Apps |
| Memory Manager | Persists conversation history and intermediate outputs |
| Compliance Shield | Enforces data-access policies, filters sensitive content |
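To make the Memory Manager and Compliance Shield rows concrete, here is a minimal sketch, assuming the shield runs before anything is persisted. The `MemoryManager` class, the `compliance_shield` function, and the two regex rules are hypothetical illustrations; a production policy engine would cover far more data types than email addresses and 16-digit card numbers.

```python
import re
from dataclasses import dataclass, field

# Hypothetical redaction rules, for illustration only.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d{4}(?:[ -]?\d{4}){3}\b"),  # 16 digits, optional separators
}


def compliance_shield(text: str) -> str:
    """Replace each sensitive match with a [LABEL] placeholder."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


@dataclass
class MemoryManager:
    """Hypothetical conversation store that only ever receives shielded text."""
    history: list[str] = field(default_factory=list)

    def persist(self, text: str) -> None:
        self.history.append(compliance_shield(text))


memory = MemoryManager()
memory.persist("Refund card 4111 1111 1111 1111 for jane.doe@contoso.com.")
print(memory.history)
```

Putting the shield inside `persist` means no code path can write unfiltered text to memory, which is simpler to audit than asking every agent to remember to redact.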

This choreography runs inside Azure AI Foundry’s runtime, which tracks every decision through OpenTelemetry logs for audit and debugging.
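A per-decision audit trail is easiest to picture as a tree of timed records that share one trace ID. The sketch below uses only the standard library and is deliberately not the OpenTelemetry SDK; the `span` helper, `AUDIT_LOG`, and the record fields are hypothetical stand-ins that mimic the span structure (trace ID, span ID, parent, attributes, duration) a tracing backend would receive.

```python
import json
import time
import uuid
from contextlib import contextmanager

AUDIT_LOG: list[dict] = []      # finished records, in completion order
_open_spans: list[str] = []     # stack of currently open span IDs
TRACE_ID = uuid.uuid4().hex     # one trace for the whole workflow


@contextmanager
def span(name: str, **attributes):
    """Record one decision as a span-like dict; nested spans get a parent_id."""
    span_id = uuid.uuid4().hex[:16]
    parent = _open_spans[-1] if _open_spans else None
    _open_spans.append(span_id)
    start = time.perf_counter()
    try:
        yield attributes  # caller may add attributes, e.g. the chosen agent
    finally:
        _open_spans.pop()
        AUDIT_LOG.append({
            "trace_id": TRACE_ID,
            "span_id": span_id,
            "parent_id": parent,
            "name": name,
            "attributes": attributes,
            "duration_ms": round((time.perf_counter() - start) * 1000, 3),
        })


with span("orchestrator.plan", goal="draft compliance report"):
    with span("route", task="retrieve policy") as attrs:
        attrs["agent"] = "doc-retriever"
    with span("route", task="summarize") as attrs:
        attrs["agent"] = "writer"

for record in AUDIT_LOG:
    print(json.dumps(record))
```

Because child records carry their parent's span ID, an auditor can reconstruct which sub-agent the orchestrator chose for each step and how long it took.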

Agents can use more than 1,400 Azure Logic Apps connectors as pre-built actions, reaching platforms like SharePoint, Microsoft Fabric, and Bing Search, and even third-party agents such as SAP Joule or Google Vertex through the open Agent2Agent (A2A) protocol.

That breadth of connectivity matters because it turns agentic AI from a research demo into a drop-in addition to existing enterprise stacks.
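Conceptually, each pre-built action is a named operation with declared inputs that an agent invokes like a function. The registry below is a hypothetical sketch of that idea, not the Logic Apps or Foundry connector API: `Action`, `ActionRegistry`, the action names, and the stub handlers returning canned data are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Action:
    """Hypothetical pre-built action: a name, required inputs, and a handler."""
    name: str
    required: tuple[str, ...]
    handler: Callable[..., Any]


class ActionRegistry:
    def __init__(self) -> None:
        self._actions: dict[str, Action] = {}

    def register(self, action: Action) -> None:
        self._actions[action.name] = action

    def invoke(self, name: str, **kwargs: Any) -> Any:
        """Validate inputs before calling the (external) handler."""
        if name not in self._actions:
            raise KeyError(f"unknown action: {name}")
        action = self._actions[name]
        missing = [p for p in action.required if p not in kwargs]
        if missing:
            raise ValueError(f"{name} missing parameters: {missing}")
        return action.handler(**kwargs)


# Stub handlers stand in for real connectors; names are illustrative only.
registry = ActionRegistry()
registry.register(Action("sharepoint.search", ("query",),
                         lambda query: [f"{query}-policy.docx"]))
registry.register(Action("crm.get_account", ("account_id",),
                         lambda account_id: {"id": account_id, "tier": "gold"}))

print(registry.invoke("sharepoint.search", query="retention"))
print(registry.invoke("crm.get_account", account_id="A-42"))
```

Declaring required inputs up front lets the agent fail fast with a clear error instead of sending a malformed request to an external system.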

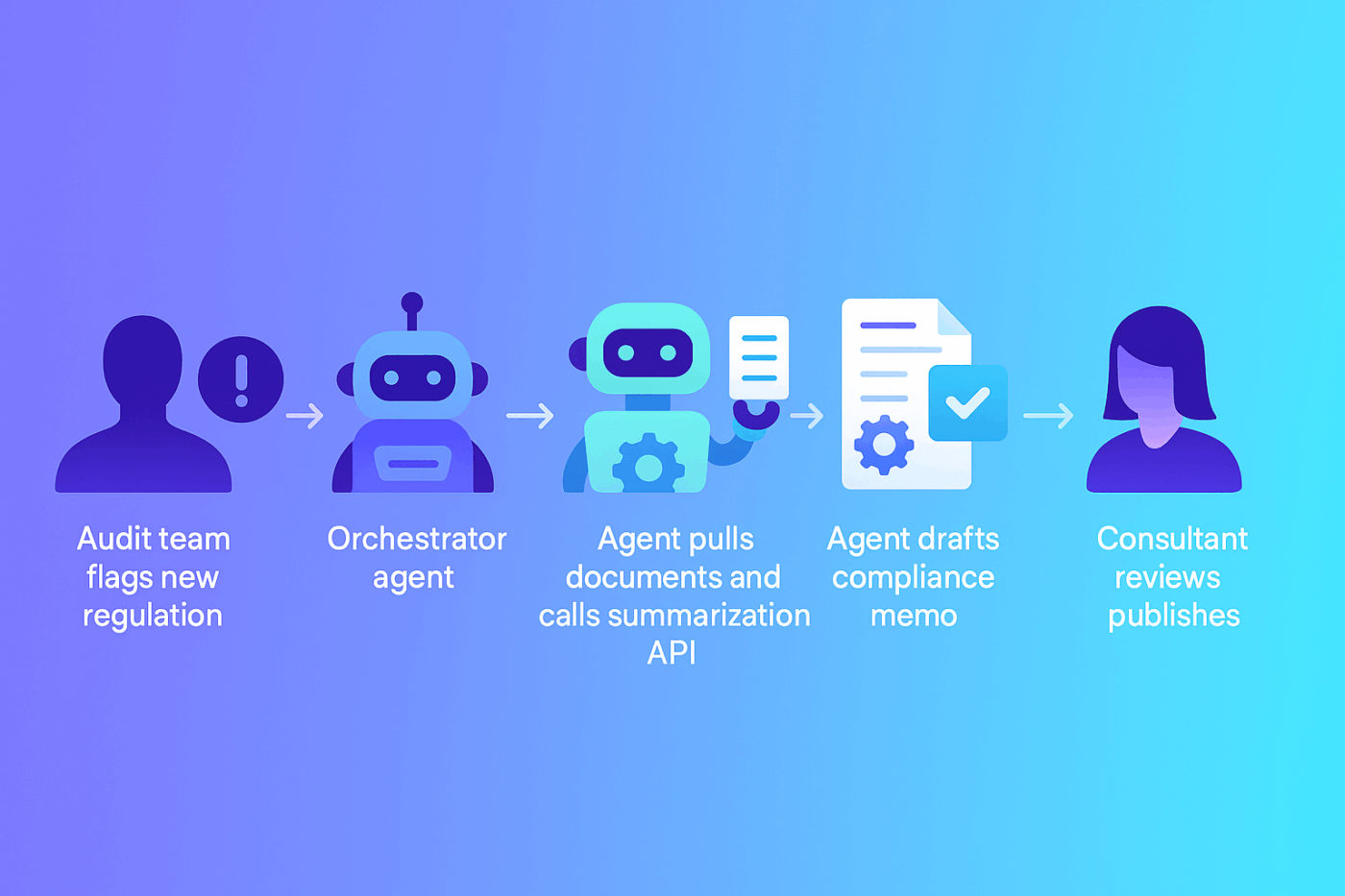

When KPMG’s risk consultants faced a backlog of regulatory updates, their manual compliance checks consumed weeks per audit cycle.

The firm built a Comply AI agent on Azure that reads policy documents, cross-references them against internal controls, and drafts compliance reports autonomously.

The result was a 50 percent reduction in ongoing compliance effort, freeing auditors to focus on interpretation and client advisory rather than document assembly.

Similar pilots at Wells Fargo cut policy-answer times from ten minutes to thirty seconds, a 20× speed improvement that shortened customer wait times at branch locations.
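As a quick check on the reported figure, converting both response times to seconds gives the stated 20× speedup:

$$
\frac{10\ \text{min} \times 60\ \text{s/min}}{30\ \text{s}} = \frac{600\ \text{s}}{30\ \text{s}} = 20
$$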

That speed advantage stems partly from Microsoft’s tight integration with its own ecosystem, but how does the platform stack up against alternatives in the agent-orchestration market?

Microsoft ranks among the leading companies building AI agents.

Microsoft’s agentic AI stands out through deep embedding in Azure and Office 365, which means agents inherit existing user permissions, identity management via Entra ID, and compliance certifications without custom configuration.

This reduces deployment friction for enterprises already running Microsoft workloads and eliminates the need to build separate authentication or audit pipelines.

The platform also embraces open standards. Microsoft joined the steering committee for the Model Context Protocol (MCP) and committed to supporting the open Agent2Agent (A2A) protocol, so agents can interoperate with tools and agents from AWS Bedrock, SAP, or independent vendors.

This openness contrasts with closed ecosystems that lock agent logic inside proprietary runtimes.
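For a sense of what the MCP side of that interoperability looks like on the wire: MCP messages are JSON-RPC 2.0, and a client discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those request messages; the `mcp_request` helper and the `search_tickets` tool are hypothetical, and transport (stdio or HTTP), the initialization handshake, and response handling are omitted.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # JSON-RPC request IDs must be unique within a session


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Ask the server which tools it exposes.
print(mcp_request("tools/list"))
# 2. Call one of them; "search_tickets" and its arguments are hypothetical.
print(mcp_request("tools/call", {"name": "search_tickets",
                                 "arguments": {"status": "open"}}))
```

Because the message format is vendor-neutral, the same request shape works against any MCP server, whichever cloud or vendor hosts it.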

Strengths and trade-offs:

That native integration becomes clearer when you examine how Microsoft’s agents connect to the broader enterprise stack.

Early reactions to Microsoft’s agentic AI offerings reflect cautious optimism mixed with practical concerns. On r/AI_Agents, some Reddit users praise the platform’s ease of use and built-in connectors, while others flag cost surprises.

Which Agent system is best? (by u/Green_Ad6024 in r/AI_Agents)

Representative user voices:

Industry observers note that standardizing protocols like MCP might enable these agents to interoperate across clouds in the future, though today’s implementations remain largely vendor-specific.

The excitement centers on tangible productivity wins, while the caution stems from pricing transparency and the learning curve for organizations new to multi-agent orchestration.

That tension between promise and pragmatism extends to Microsoft’s publicly stated plans for the platform’s evolution.

The Azure AI Foundry Agent Service uses consumption-based pricing, meaning there is no base platform fee. Organizations pay only for the underlying models, APIs, and tools their agents invoke, billed at standard Azure rates.

For example, GPT-4 usage through Azure OpenAI has been priced at approximately $0.06 per 1,000 prompt tokens and $0.12 per 1,000 completion tokens (exact rates vary by model version, context length, and region), with no additional premium for running those models inside an agent workflow.
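Because one orchestrated task can fan out into several model calls (the orchestrator plus each sub-agent), it helps to price a task as the sum of its calls. This sketch assumes the per-1,000-token rates quoted above and a hypothetical three-call task; substitute current rates for your model and region.

```python
# Per-1,000-token rates quoted above (USD). Real Azure rates vary by model
# version, context length, region, and date; treat these as inputs.
PROMPT_RATE_PER_1K = 0.06
COMPLETION_RATE_PER_1K = 0.12


def call_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """USD cost of one model call."""
    return (prompt_tokens / 1000 * PROMPT_RATE_PER_1K
            + completion_tokens / 1000 * COMPLETION_RATE_PER_1K)


def task_cost(calls: list[tuple[int, int]]) -> float:
    """A multi-agent task is billed as the sum of its model calls."""
    return sum(call_cost(p, c) for p, c in calls)


# Hypothetical task: orchestrator plan + two sub-agent calls (prompt, completion tokens).
calls = [(2000, 500), (1500, 300), (800, 200)]
print(round(call_cost(2000, 500), 4))  # 0.12 + 0.06 = 0.18
print(round(task_cost(calls), 4))      # 0.18 + 0.126 + 0.072 = 0.378
```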

Microsoft 365 Copilot with agent capabilities is priced at $30 per user per month as an add-on for E3, E5, Business Standard, or Business Premium plans.

This license grants access to custom agents, App Builder, and workflow automation within M365 apps like Teams, Outlook, and SharePoint.

However, hidden costs can emerge from compute-intensive operations.

Fine-tuning models incurs training charges, and high-throughput scenarios may require reserved capacity or premium SKUs.

Integration services, such as connecting agents to legacy systems via custom Logic App workflows, may demand additional developer time or consulting fees.

Azure Logic Apps connectors themselves bill under their respective Logic Apps pricing tiers; the Agent Service does not add a separate agent fee for calling those 1,400+ actions.

One Reddit user complained about Azure AI Foundry charging a £95 per day standing fee for hosting a fine-tuned model, highlighting the importance of understanding standing charges versus pay-per-use costs when architecting agent solutions.

Why is Azure AI Foundry so expensive? (by u/Alundra828 in r/AZURE)

Organizations should model expected token volumes, API call frequency, and compute requirements before committing to production deployments.
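A back-of-the-envelope monthly model combining pay-per-use spend, fixed standing charges, and Copilot seats might look like the sketch below. Every input value is hypothetical (task volumes, a 0.378 per-task cost, a 50-per-day standing fee, 25 seats), all amounts are in a single currency, and the 30-day month is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    """Hypothetical planning inputs; replace with measured volumes and current rates."""
    tasks_per_day: int
    cost_per_task: float          # pay-per-use model and tool spend per task
    standing_fee_per_day: float   # fixed charges, e.g. hosting a fine-tuned model
    copilot_seats: int
    seat_price_per_month: float = 30.0
    days_per_month: int = 30


def monthly_estimate(c: CostInputs) -> dict[str, float]:
    """Split the monthly bill into usage, standing, and seat components."""
    usage = c.tasks_per_day * c.cost_per_task * c.days_per_month
    standing = c.standing_fee_per_day * c.days_per_month
    seats = c.copilot_seats * c.seat_price_per_month
    return {"usage": round(usage, 2), "standing": round(standing, 2),
            "seats": round(seats, 2), "total": round(usage + standing + seats, 2)}


high_volume = monthly_estimate(CostInputs(400, 0.378, 50.0, 25))
low_volume = monthly_estimate(CostInputs(40, 0.378, 50.0, 25))
print(high_volume)
print(low_volume)
```

Comparing the two scenarios shows the lesson behind the Reddit complaint above: at low volume, a fixed hosting charge rather than token usage can dominate the bill.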

Microsoft’s agentic AI delivers tangible productivity wins through deep Azure and Office 365 integration, which reduces deployment friction for enterprises already in the ecosystem.

Early adopters report dramatic efficiency gains, though pricing transparency and feature maturity remain considerations worth monitoring.

The commitment to open standards positions these agents for broader adoption as the technology matures.

For organizations ready to experiment, consumption-based pricing and proven enterprise security make this a compelling entry point into autonomous workflows.

© 2026 ClickUp