Has your AI model ever delivered a confident answer that your users called outdated? That’s the kind of experience that ends with your team questioning its every response.

It sounds like every developer’s and AI enthusiast’s nightmare, right?

Large language models (LLMs) run on training data, but as the data ages, inaccuracies sneak in. Since retraining costs millions, optimization is the smarter play.

Retrieval-augmented generation (RAG) and fine-tuning are the top frameworks for boosting accuracy. However, the two approaches work differently, so each suits different applications. Picking the right framework is key to improving your LLM effectively.

But which one’s right for you?

We tackle that dilemma in this RAG vs. fine-tuning guide. Whether you’re working with domain-specific data or looking to build high-quality data retrieval solutions, you’ll find answers here!

⏰60-Second Summary

- Improving the performance of LLMs and AI models is a key part of every business and development function. While RAG and fine-tuning are popular approaches, it’s important to understand their nuances and impact

| Aspect | RAG (retrieval-augmented generation) | Fine-tuning |
| --- | --- | --- |
| Definition | Lets the LLM retrieve real-time, relevant data from external sources through a dedicated retrieval system | Further trains a pre-trained model on specialized datasets for domain-specific tasks |
| Performance and accuracy | Great for real-time data retrieval, but accuracy depends on the quality of external data | Improves contextual accuracy and task-specific responses |
| Cost and resource requirements | More cost-effective upfront; ongoing costs come from real-time data access | Requires more resources for initial training, but cost-effective in the long term |
| Maintenance and scalability | Highly scalable and flexible, but depends on the frequency of updates to external sources | Requires frequent updates and maintenance but offers stable long-term performance |
| Use cases | Chatbots, dynamic document retrieval, real-time analysis | Domain-specific QA systems, sentiment analysis, and brand voice customization |
| When to choose | Fast-changing data, real-time updates, and prioritizing resource costs | Niche customer segments, domain-specific logic, brand-specific customization |
| Ideal for | Industries that need real-time, accurate information (finance, legal, customer support) | Industries requiring specific language, compliance, or context (healthcare, legal, HR) |

What Is Retrieval-Augmented Generation (RAG)?

Looking at fresh reports and surveys your LLM never got to use? That’s where you need RAG. To understand this better, let’s get into the basics of this approach.

Definition of RAG

RAG is an AI framework that adds an information retrieval step to your LLM to improve response accuracy. Before the LLM generates a response, it pulls the most relevant data from external sources, such as knowledge bases or databases.

Think of it as the research assistant within the LLM or generative AI model.
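To make the “research assistant” idea concrete, here is a minimal sketch of the retrieve-then-generate flow. It is an illustration under stated assumptions, not a production design: the `KNOWLEDGE_BASE` list, the word-count similarity (a stand-in for real embeddings), and the prompt wording are all hypothetical, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would query a database or document store.
KNOWLEDGE_BASE = [
    "The refund window was extended to 60 days in the latest policy update.",
    "Premium plans include priority support and single sign-on.",
    "The mobile app supports offline mode on iOS and Android.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its words (a simple stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: list[str], top_k: int = 1) -> str:
    """Place the retrieved context ahead of the question, ready to send to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


prompt = build_prompt("How long is the refund window?", KNOWLEDGE_BASE)
print(prompt)
```

The model itself never changes here; only the prompt does, which is why updating the knowledge base is enough to update the answers.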

👀 Did You Know? LLMs, especially text generators, can hallucinate by generating false yet plausible information. All because of gaps in training data.

Key advantages of RAG

RAG is the additional layer of connected AI your business process needs. To highlight its potential, here are its advantages:

- Reduced training costs: Cuts out the need for frequent model retraining with its dynamic information retrieval. This leads to more cost-effective AI deployment, especially for domains with rapidly changing data

- Scalability: Expands the LLM’s knowledge without increasing the primary system’s size. It helps businesses scale, manage large datasets, and run more queries without high computing costs

- Real-time updates: Reflects the latest information in each response and keeps the model relevant. Prioritizing accuracy through real-time updates is vital in many operations, including financial analysis, healthcare, and compliance audits

📮 ClickUp Insight: Half of our respondents struggle with AI adoption; 23% just don’t know where to start, while 27% need more training to do anything advanced.

ClickUp solves this problem with a familiar chat interface that feels just like texting. Teams can jump right in with simple questions and requests, then naturally discover more powerful automation features and workflows as they go without the intimidating learning curve that holds so many people back.

RAG use cases

Wondering where RAG shines? Consider these key use cases:

Chatbots and customer support

Customer queries often require up-to-date and context-aware responses. RAG boosts chatbot capabilities by retrieving the latest support articles, policies, and troubleshooting steps.

This enables more accurate, real-time assistance without extensive pre-training.

Dynamic document retrieval

RAG optimizes document search by pulling the most relevant sections from vast repositories. Instead of generic summaries, LLMs can provide pinpointed answers from updated manuals, research papers, or legal documents.

Adopting RAG-powered LLMs makes information retrieval faster and more precise.
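Here’s a rough sketch of what “pulling the most relevant section” can look like. It assumes a hypothetical `REPOSITORY` of documents whose sections are separated by blank lines, and it scores sections by simple word overlap; real systems typically use embeddings and a vector index instead.

```python
import re

# Hypothetical repository: document name -> text with blank-line-separated sections.
REPOSITORY = {
    "router_manual.txt": (
        "Setup: Plug in the router and wait for the green light.\n\n"
        "Reset: Hold the reset button for ten seconds to restore factory settings.\n\n"
        "Warranty: The warranty covers hardware defects for two years."
    ),
    "billing_faq.txt": (
        "Invoices: Invoices are emailed on the first day of each month.\n\n"
        "Refunds: Refunds are processed within five business days."
    ),
}


def words(text: str) -> set[str]:
    """Return the set of lowercase words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_into_sections(text: str) -> list[str]:
    """Split a document into sections on blank lines."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def best_section(query: str, repository: dict[str, str]) -> tuple[str, str]:
    """Return (document name, section) sharing the most words with the query."""
    query_words = words(query)
    best_name, best_text, best_score = "", "", 0
    for name, text in repository.items():
        for section in split_into_sections(text):
            score = len(query_words & words(section))
            if score > best_score:
                best_name, best_text, best_score = name, section, score
    return best_name, best_text


source, section = best_section(
    "How do I reset the router to factory settings?", REPOSITORY
)
print(source)
print(section)
```

Returning the source name alongside the section is a deliberate choice: it lets the final answer cite where the information came from.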

🧠 Fun Fact: Meta, which owns Facebook, Instagram, Threads, and WhatsApp, introduced RAG into LLM development in 2020.

What Is Fine-Tuning?

Let’s get into what fine-tuning does.

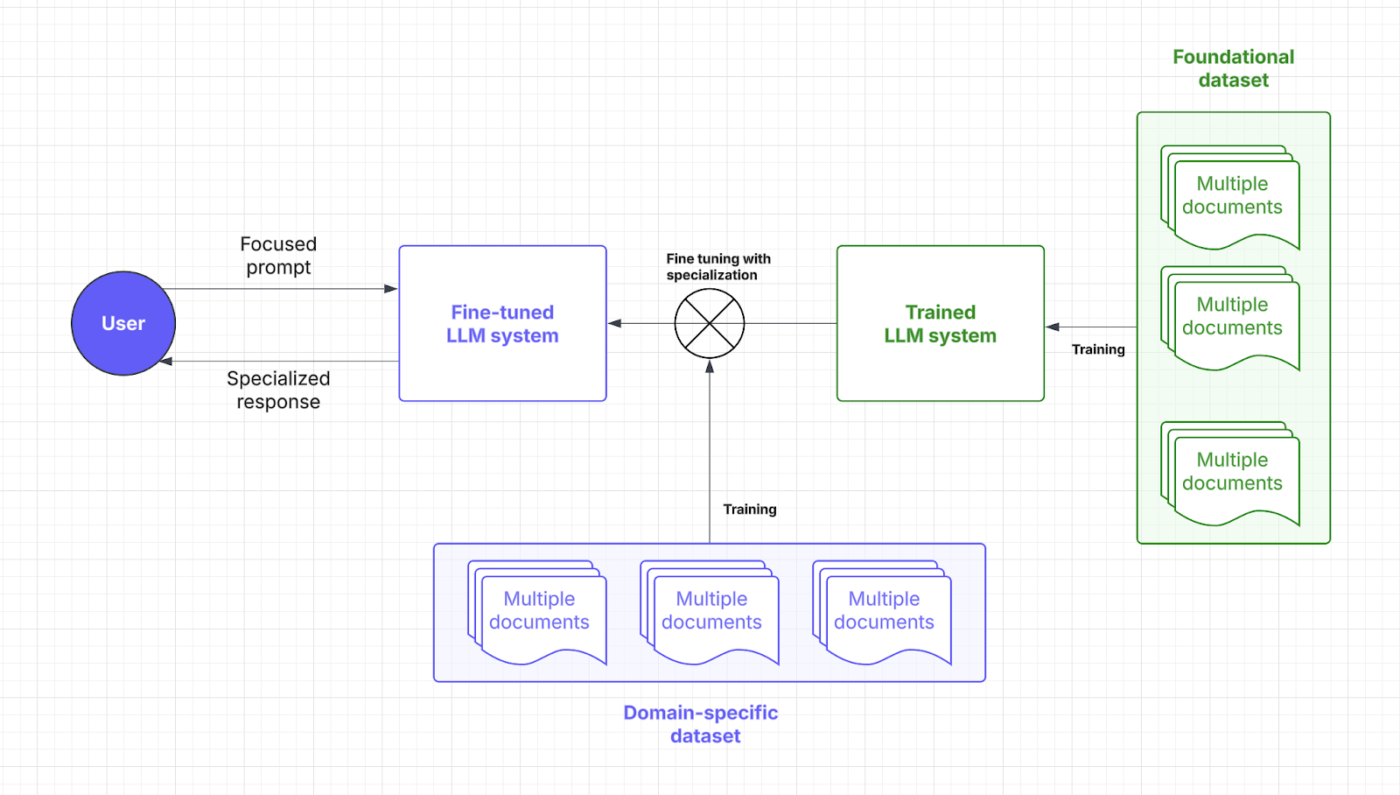

Definition of fine-tuning

Fine-tuning involves further training a pre-trained language model. Yes, lots of training, which can be explained via weights and focus.

🧠 Did You Know: In Large Language Model (LLM) training, “weights” are the adjustable parameters within the neural network that determine the strength of connections between neurons, essentially storing the learned information; the training process optimizes these weights to minimize prediction errors.

“Focus,” on the other hand, encompasses several aspects: it involves careful data curation to ensure quality and relevance, the utilization of attention mechanisms to prioritize relevant input segments, and the targeted fine-tuning to specialize the model for specific tasks.

Through specialized datasets, fine-tuning allows AI models to narrow down on executing domain-specific tasks. By adjusting model weights and focus, your LLM gains more contextual understanding and accuracy.
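As a toy illustration of “adjusting model weights,” the sketch below treats fine-tuning as continuing gradient descent from existing weights on a small specialized dataset. Everything in it is an assumption made for clarity: the two-weight linear model, the `pretrained` starting values, and the `domain_data` points. Real LLM fine-tuning updates millions or billions of weights, but the principle of nudging weights to reduce prediction error is the same.

```python
def predict(weights, x):
    """Linear model: prediction = w * x + b."""
    w, b = weights
    return w * x + b


def mean_squared_error(weights, data):
    """Average squared prediction error over the dataset."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, data, learning_rate=0.05, epochs=500):
    """Continue training from the given weights using plain gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


# Hypothetical "pre-trained" weights learned from general data.
pretrained = (1.0, 0.0)

# Hypothetical specialized dataset that follows y = 3x + 1.
domain_data = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]

tuned = fine_tune(pretrained, domain_data)

print("error before:", mean_squared_error(pretrained, domain_data))
print("error after: ", mean_squared_error(tuned, domain_data))
```

The starting point matters: fine-tuning begins from what the model already knows rather than from scratch, which is why it needs far less data than the original training run.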

Think of fine-tuning as the master’s degree your LLM needs to speak your industry’s language.

Benefits of fine-tuning

Fine-tuning techniques aren’t just AI tweaking. They’re more like being able to zoom in on predefined details. Here are the perks:

- Task-specific optimization: Specialized datasets sharpen LLM responses for specific tasks. Want to help users skip the headache of complex prompts? Fine-tuning helps developers get tailored AI solutions

- Improved accuracy for niche applications: Domain knowledge reduces errors and enhances each response’s precision. Fine-tuning also increases an LLM’s reliability, letting businesses relax on micromanagement and manual oversight

- Customization for brand voice and compliance: Fine-tuning teaches LLMs company terms, style, and regulations. This maintains a consistent brand voice and industry-specific compliance
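The “specialized datasets” behind these benefits usually start as plain example pairs. The sketch below shows one way to package brand-voice examples as JSON Lines. The chat-style `messages` layout is a common convention, but treat it as an assumption: the exact schema depends on the training tool or provider you use, and the example texts and `SYSTEM_PROMPT` are hypothetical.

```python
import json

# Hypothetical instruction that sets the brand voice for every example.
SYSTEM_PROMPT = "You are a concierge for a luxury brand. Be warm, concise, and refined."

# Hypothetical brand-voice examples written by the team.
examples = [
    {
        "prompt": "Is my order on its way?",
        "response": "It is indeed. Your order left our atelier this morning.",
    },
    {
        "prompt": "Can I change my delivery address?",
        "response": "With pleasure. Please share the new address and we will see to it.",
    },
]


def to_chat_record(example: dict, system_prompt: str) -> dict:
    """Wrap one prompt/response pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": example["prompt"]},
            {"role": "assistant", "content": example["response"]},
        ]
    }


def to_jsonl(items: list[dict], system_prompt: str) -> str:
    """Serialize all examples as JSON Lines: one JSON record per line."""
    return "\n".join(json.dumps(to_chat_record(item, system_prompt)) for item in items)


jsonl_text = to_jsonl(examples, SYSTEM_PROMPT)
print(jsonl_text)
```

Keeping the examples in a simple structure like this makes it easier to review them for tone and compliance before any training run.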

Fine-tuning use cases

Your fine-tuning process unlocks targeted efficiency. Here’s where it excels:

Domain-specific QA systems

Industries like legal, healthcare, and finance rely on precise, domain-aware AI responses. Fine-tuning equips LLMs with specialized knowledge, ensuring accurate question-answering (QA).

A legal AI assistant, for example, can interpret contracts more precisely, while a medical chatbot can provide symptom-based guidance using trusted datasets.

Sentiment analysis and custom workflows

Businesses use a fine-tuned model to monitor brands, analyze customer feedback, and automate workflows tailored to unique operational needs. An AI-powered tool can detect nuanced sentiment in product reviews, helping companies refine their offerings.

In HR, combining fine-tuning with natural language processing helps AI analyze employee surveys and flag workplace concerns with greater contextual awareness.

💡 Pro Tip: Fine-tuning could involve adding more diverse data to remove potential biases. It’s not exactly domain-specific, but it’s still a crucial application.

Comparison: RAG vs. Fine-Tuning

There’s no denying that both AI strategies aim to boost performance.

But choosing still seems pretty tricky, right? Here’s a breakdown of fine-tuning vs. RAG to help you make the right decision for your LLM investments.

| Aspect | RAG (retrieval-augmented generation) | Fine-tuning |
| --- | --- | --- |
| Definition | Lets the LLM retrieve real-time, relevant data from external sources through a dedicated retrieval system | Further trains a pre-trained model on specialized datasets for domain-specific tasks |
| Performance and accuracy | Great for real-time data retrieval, but accuracy depends on the quality of external data | Improves contextual accuracy and task-specific responses |
| Cost and resource requirements | More cost-effective upfront; ongoing costs come from real-time data access | Requires more resources for initial training, but cost-effective in the long term |
| Maintenance and scalability | Highly scalable and flexible, but depends on the frequency of updates to external sources | Requires frequent updates and maintenance but offers stable long-term performance |
| Use cases | Chatbots, dynamic document retrieval, real-time analysis | Domain-specific QA systems, sentiment analysis, and brand voice customization |
| When to choose | Fast-changing data, real-time updates, and prioritizing resource costs | Niche customer segments, domain-specific logic, brand-specific customization |
| Ideal for | Industries that need real-time, accurate information (finance, legal, customer support) | Industries requiring specific language, compliance, or context (healthcare, legal, HR) |

Need a little more clarity to settle your doubts? Here’s a head-to-head on key aspects that impact your needs.

Performance and accuracy

When it comes to performance, RAG plays a key role by pulling in new data from external sources. Its accuracy and response times depend on the quality of this data. This reliance on external databases enables RAG to deliver up-to-date information effectively.

Fine-tuning, on the other hand, improves how the model processes and responds through specialized retraining. This process yields more contextually accurate responses, especially for niche applications. Fine-tuned LLMs are ideal for maintaining consistency in industries with strict requirements, such as healthcare or finance.

Bottom line: RAG is great for real-time data, while fine-tuning is better for contextually accurate responses.

💡 Pro Tip: To guide your LLM toward a specific output, focus on effective prompt engineering.

Cost and resource requirements

RAG is typically more cost-effective upfront since it only adds a layer for external data retrieval. By avoiding the need to retrain the entire model, it emerges as a much more budget-friendly option, especially in dynamic environments. However, the operational costs for real-time data access and storage can add up.

Fine-tuning requires more dataset preparation and training resources but is a long-term investment. Once fine-tuned, LLMs need fewer updates, leading to predictable performance and cost savings. Developers should weigh the initial investment against ongoing operational expenses.

Bottom line: RAG is cost-effective, simple to implement, and has quick benefits. Fine-tuning is resource-intensive upfront but improves LLM quality and saves operational costs in the long term.
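To weigh the upfront investment against ongoing costs, a quick break-even calculation helps. The sketch below is a simplified model with made-up numbers: the upfront costs and the cost per 1,000 queries are hypothetical placeholders, so swap in your own estimates.

```python
import math


def cumulative_cost(upfront: int, cost_per_1k_queries: int, thousands_of_queries: int) -> int:
    """Total spend after a given number of queries (counted in thousands)."""
    return upfront + cost_per_1k_queries * thousands_of_queries


def break_even_thousands(rag: dict, fine_tuned: dict):
    """Thousands of queries after which fine-tuning is no more expensive than RAG.

    Returns None when fine-tuning never catches up, i.e. when it is not
    cheaper per query than RAG.
    """
    upfront_gap = fine_tuned["upfront"] - rag["upfront"]
    savings_per_1k = rag["per_1k"] - fine_tuned["per_1k"]
    if savings_per_1k <= 0:
        return None
    return max(0, math.ceil(upfront_gap / savings_per_1k))


# Hypothetical cost estimates in dollars.
rag_costs = {"upfront": 2_000, "per_1k": 4}
fine_tuned_costs = {"upfront": 20_000, "per_1k": 1}

thousands = break_even_thousands(rag_costs, fine_tuned_costs)
print(f"Break-even after about {thousands:,} thousand queries")
```

With these placeholder figures, fine-tuning only pays off at high query volumes; at lower volumes, RAG’s smaller upfront cost wins.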

💡 Pro Tip: Your RAG system is only as smart as the data it pulls from. Keep your sources clean and pack them with accurate, up-to-date data!
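One simple way to keep sources clean is to flag stale documents before they reach the retrieval index. This is a minimal sketch under assumptions: the `documents` records, the `updated` field, and the 90-day threshold are all hypothetical and should be tuned to how quickly your data changes.

```python
from datetime import date, timedelta


def split_by_freshness(documents: list[dict], today: date, max_age_days: int = 90):
    """Separate documents updated within max_age_days from older ones."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = [doc for doc in documents if doc["updated"] >= cutoff]
    stale = [doc for doc in documents if doc["updated"] < cutoff]
    return fresh, stale


# Hypothetical source documents with their last-updated dates.
documents = [
    {"title": "Pricing sheet", "updated": date(2024, 5, 20)},
    {"title": "Return policy", "updated": date(2023, 11, 2)},
    {"title": "API changelog", "updated": date(2024, 6, 1)},
]

fresh, stale = split_by_freshness(documents, today=date(2024, 6, 15))

print("Index these:", [doc["title"] for doc in fresh])
print("Review these:", [doc["title"] for doc in stale])
```

Stale documents are flagged for review rather than deleted, since an old document can still be correct.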

Maintenance and scalability

RAG offers excellent scalability as the focus is mainly on expanding the external source. Its flexibility and adaptability make it perfect for fast-moving industries. However, maintenance depends on the frequency of updates to external databases.

Fine-tuning needs fairly frequent maintenance, especially when domain-specific information changes. While it demands more resources, it provides greater consistency over time and gradually requires fewer adjustments. That said, scalability for fine-tuning is much more complex, involving more extensive and diverse datasets.

Bottom line: RAG is best for quick scaling, while fine-tuning is best for minimal maintenance and stable performance.

👀 Did You Know? There are AI solutions that can smell now. With how complex fragrances are, that’s a lot of regular fine-tuning and complex data retrieval.

Which Approach Is Right for Your Use Case?

Even when you understand the nuances, the decision can feel abstract without a concrete reference point. Let’s run through a few business scenarios that highlight where each approach works better.

When to choose RAG

RAG helps feed your LLM with the right facts and information, including technical standards, sales records, customer feedback, and more.

How do you put this to use? Consider these scenarios to adopt RAG into your operations:

Use case #1: Real-time analysis

- Scenario: A fintech company provides AI-powered market insights for traders. Users ask about stock trends, and the system must fetch the latest market reports, SEC filings, and news

- Why RAG wins: Stock markets move fast, so constantly retraining AI models is expensive and inefficient. RAG keeps things sharp by pulling only the freshest financial data, cutting costs, and boosting accuracy

- Rule of thumb: RAG should be your go-to strategy for AI handling fast-changing data. Popular applications are social media data analysis, energy optimization, cybersecurity threat detection, and order tracking

Use case #2: Data checks and regulatory compliance

- Scenario: A legal AI assistant helps lawyers draft contracts and verify compliance with evolving laws by pulling the latest statutes, precedents, and rulings

- Why RAG wins: Verifying legal and commercial aspects doesn’t warrant in-depth behavioral updates. RAG does the job quite well by pulling legal texts from a central dataset in real time

- Rule of thumb: RAG excels at resource- and statistics-driven insights. Good ways to make the most of it include medical AI assistants for treatment recommendations and customer chatbots for troubleshooting and policy updates

Still wondering if you need RAG in your LLM? Here’s a quick checklist:

- Do you need new, high-quality data without changing the LLM itself?

- Does your information change often?

- Does your LLM need to work with dynamic information instead of static training data?

- Would you like to avoid large expenses and time-consuming model retraining?

➡️ Also Read: Best Prompt Engineering Tools for Generative AI

When fine-tuning is more effective

As we mentioned before, fine-tuning is AI grad school. Your LLM can even learn industry lingo. Here’s an industry-wise spotlight on when it truly shines:

Use case #1: Adding brand voice and tonality

- Scenario: A luxury brand creates an AI concierge to interact with customers in a refined, exclusive tone. It must embody brand-specific tonalities, phrasing, and emotional nuances

- Why fine-tuning wins: Fine-tuning helps the AI model capture and replicate the brand’s unique voice and tone. It delivers a consistent experience across every interaction

- Rule of thumb: Fine-tuning is more effective if your LLMs must adapt to a specific expertise. This is ideal for genre-oriented immersive gaming, thematic and empathetic storytelling, or even branded marketing copy

🧠 Fun Fact: LLMs trained in such soft skills excel at analyzing employee sentiment and satisfaction. But only 3% of businesses currently use generative AI in HR.

Use case #2: Content moderation and context-based insights

- Scenario: A social media platform uses an AI model to detect harmful content. It focuses on recognizing platform-specific language, emerging slang, and context-sensitive violations

- Why fine-tuning wins: Soft skills like sentence phrasing are often out of scope for RAG systems. Fine-tuning improves an LLM’s understanding of platform-specific nuances and industry jargon, which is especially relevant to content moderation

- Rule of thumb: Choosing fine-tuning is wise when dealing with cultural or regional differences. This also extends to adapting to industry-specific terms like medical, legal, or technical lingo

About to fine-tune your LLM? Ask yourself these key questions:

- Does your LLM need to deliver to a niche customer segment or brand theme?

- Would you like to add proprietary or domain-specific data to the LLM’s logic?

- Do you need quicker responses without losing accuracy?

- Are your LLMs delivering offline solutions?

- Can you allocate dedicated resources and computing power for retraining?
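If you’d like to turn the two checklists in this section into something you can run, here is a rough sketch. The signal names are hypothetical labels for the questions above, and the unweighted tally is a deliberate simplification: in practice, some questions (like budget) may matter far more than others.

```python
# Hypothetical labels for the "Do you need RAG?" checklist questions.
RAG_SIGNALS = {
    "needs_fresh_data",
    "data_changes_often",
    "dynamic_information",
    "avoid_retraining_cost",
}

# Hypothetical labels for the "About to fine-tune?" checklist questions.
FINE_TUNING_SIGNALS = {
    "niche_segment_or_brand",
    "proprietary_domain_logic",
    "fast_responses",
    "offline_use",
    "training_budget",
}


def recommend(answers: set[str]) -> str:
    """Tally 'yes' answers per checklist and suggest a starting point."""
    rag_score = len(answers & RAG_SIGNALS)
    fine_tuning_score = len(answers & FINE_TUNING_SIGNALS)
    if rag_score == 0 and fine_tuning_score == 0:
        return "no clear signal"
    if rag_score > fine_tuning_score:
        return "RAG"
    if fine_tuning_score > rag_score:
        return "fine-tuning"
    return "consider both"


# Example: a support team whose policies change weekly.
print(recommend({"needs_fresh_data", "data_changes_often"}))
```

A tie is treated as a prompt to consider combining both approaches rather than forcing a choice.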

Improving user experience is great. However, many businesses also need AI as a productivity boost to justify high investment costs. That’s why adopting a pre-trained AI model is often a go-to choice for many.

👀 Did You Know? Gen AI has the potential to automate work activities that take up as much as 70 percent of employees’ time. Effectively asking AI for insights plays a huge role here!

How ClickUp Leverages Advanced AI Techniques

Choosing between RAG and fine-tuning is quite a huge debate.

Even going through a few Reddit threads is enough to confuse you. But who said you need to pick just one?

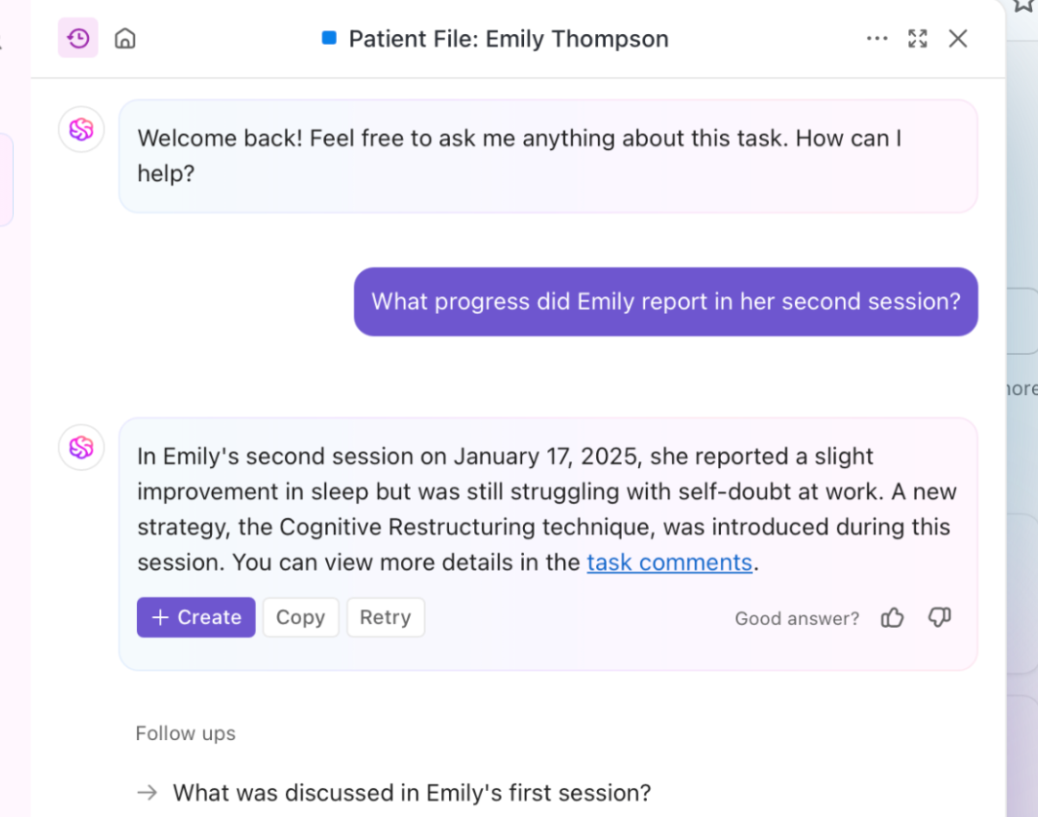

Imagine having customizable AI models, automation, and task management all in one place. That’s ClickUp, the everything app for work. It brings project management, documentation, and team communication under one roof and is powered by next-gen AI.

In short, it excels at anything, especially with its comprehensive AI solution: ClickUp Brain.

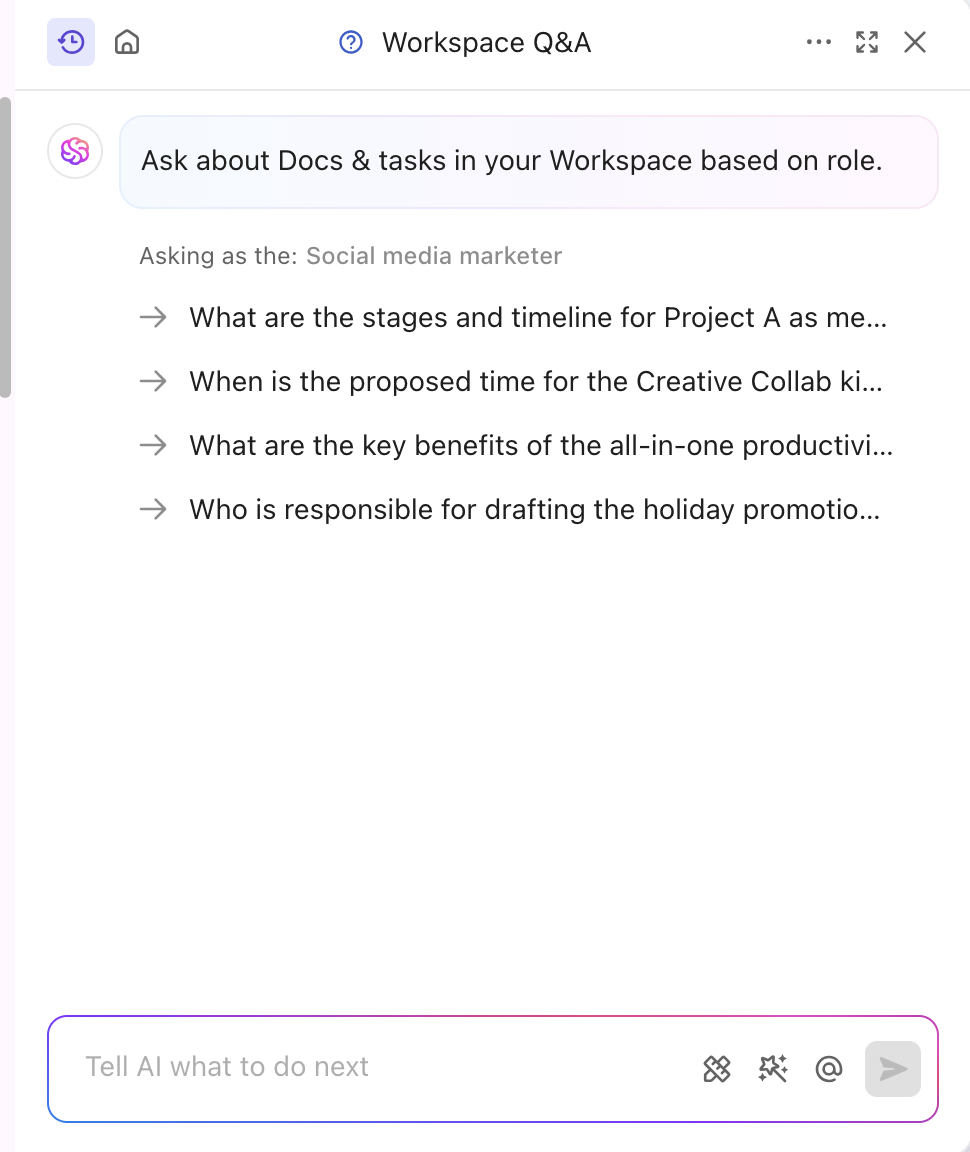

Need quick, context-aware insights? Brain pulls real-time information from your docs, tasks, and resources. That’s amped-up RAG in action. On top of that, its foundational LLM, Brain, can generate reports and routine project updates.

The AI tool is also fine-tuned to match your industry and segment, delivering professional and creative insights. It even personalizes content in real time without any manual training. Brain blends fine-tuning and RAG to automate project updates, task assignments, and workflow notifications. Want responses that are tailored to your role? ClickUp Brain can do that, too!

Aside from specializing in content, ClickUp also powers its platform with a powerful knowledge-based AI feature.

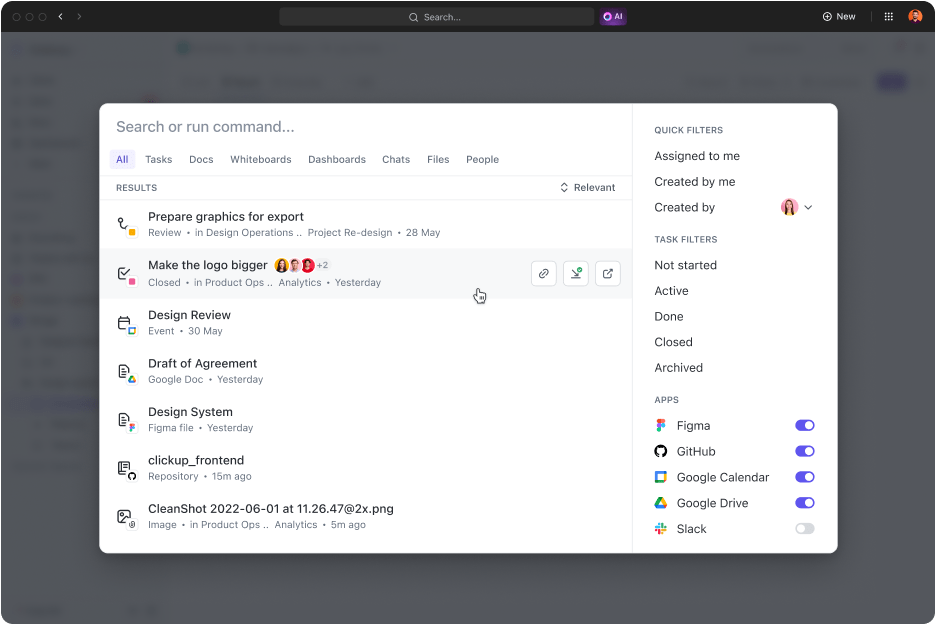

The ClickUp Connected Search is an AI-powered tool that quickly retrieves any resource from your integrated space. Whether you need documents for today’s standup or answers to any task, a simple query pulls up source links, citations, and detailed insights.

It also launches apps, searches your clipboard history, and creates snippets. The best part? All of it is accessible with one click from your command center, action bar, or desktop.

Powering Up Gen AI and LLM Accuracy with ClickUp

RAG powers responses sharpened by fresh external data, while fine-tuning shapes the model for specific tasks and behaviors. Both improve AI performance, but the right approach defines your pace and efficiency.

In dynamic industries, the decision often comes down to which method to adopt first. A powerful pre-trained solution is usually the wiser choice.

If you want to improve service quality and productivity, ClickUp is a great partner. Its AI capabilities drive content generation, data retrieval, and analytical responses. Plus, the platform comes with 30+ tools that cover everything from task management to generating stunning visuals.

Sign up with ClickUp today!