How to Overcome Common AI Challenges

Most of us have had some experience ‘talking’ with the latest AI tools on the block. If you’ve spent enough time with AI, you already know it’s like that brilliant but forgetful friend who has great ideas yet sometimes loses track of what you two talked about. Or that always-on-the-phone colleague who shares dubious news reports from random chat threads, spreading misinformation.

That’s just the tip of the iceberg when we talk about challenges in artificial intelligence.

Researchers from Oregon State University and Adobe are developing a new training technique to reduce social bias in AI systems. If this technique proves reliable, it could make AI fairer for everyone.

But let’s not get ahead of ourselves. This is just one solution among many needed to tackle the numerous AI challenges we face today. From technical hitches to ethical quandaries, the road to reliable AI is paved with complex issues.

Let’s unpack these AI challenges together and see what it takes to overcome them.

As AI technology advances, it confronts a range of issues. This list explores ten pressing AI challenges and outlines practical solutions for responsible and efficient AI deployment.

Algorithmic bias refers to the tendency of AI systems to produce systematically skewed or unfair outputs, often because of their training data or design. This bias can take many forms, and it often perpetuates and amplifies existing societal biases.

An example of this was observed in an academic study of the generative AI image application Midjourney. The study found that when generating images of people in various professions, the AI disproportionately depicted older professionals with specialized job titles (e.g., analyst) as male, revealing a gender bias in its output.
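A first step toward catching this kind of bias is simply measuring it. The sketch below is a minimal illustration, not the study’s actual method: it counts how often each group label appears among a model’s outputs for one prompt and reports the largest deviation from an even split. The function names, the labels, and the choice of an equal split as the reference point are all assumptions; an audit could just as reasonably compare against real-world workforce statistics.

```python
from collections import Counter


def group_shares(labels: list[str]) -> dict[str, float]:
    """Return the fraction of outputs assigned to each group label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def max_parity_gap(labels: list[str], groups: list[str]) -> float:
    """Largest absolute deviation of any group's share from an equal split.

    0.0 means every group appears equally often; larger values mean the
    outputs skew toward (or away from) some group.
    """
    if not labels:
        raise ValueError("need at least one labeled output")
    shares = group_shares(labels)
    parity = 1 / len(groups)
    # Groups that never appear get a share of 0.0 rather than being skipped.
    return max(abs(shares.get(g, 0.0) - parity) for g in groups)


# Hypothetical labels for 10 images generated from the prompt "an analyst".
labels = ["male"] * 8 + ["female"] * 2
print(group_shares(labels))  # {'male': 0.8, 'female': 0.2}
print(round(max_parity_gap(labels, ["male", "female"]), 2))  # 0.3
```

In practice, the labels themselves would come from human annotators or a classifier, which can introduce its own errors, so a gap like this is a signal to investigate rather than a verdict.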

Transparency in AI means being open about how AI systems operate, including their design, the data they use, and their decision-making processes. Explainability goes a step further by ensuring that anyone, regardless of their tech skills, can understand what decisions AI is making and why. These concepts help tackle fears about AI, such as biases, privacy issues, or even risks like autonomous military uses.

Understanding AI decisions is crucial in high-stakes areas like finance, healthcare, and automotive. It is also hard, because AI often acts as a ‘black box’: even its creators can struggle to pinpoint how it reaches its decisions.
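To make explainability concrete: for simple, inherently interpretable models, an explanation can be read straight off the math. The sketch below assumes a hypothetical linear credit-scoring model (the feature names and weights are invented for illustration) and itemizes how much each input pushed the score up or down. Deep networks and other ‘black box’ models don’t decompose this neatly, which is why post-hoc attribution methods such as SHAP and LIME exist to approximate this kind of breakdown.

```python
def explain_linear_score(
    weights: dict[str, float], bias: float, features: dict[str, float]
) -> tuple[float, list[tuple[str, float]]]:
    """Score one input with a linear model and itemize each feature's contribution.

    For a linear model, score = bias + sum(weight * value), so each
    weight * value term is exactly how much that feature moved the score.
    """
    contributions = {name: w * features[name] for name, w in weights.items()}
    score = bias + sum(contributions.values())
    # Largest absolute contributions first: these are the main "reasons".
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and one applicant's (scaled) features.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.0}
applicant = {"income": 4.0, "debt_ratio": 0.5, "late_payments": 3.0}

score, reasons = explain_linear_score(weights, bias=0.0, features=applicant)
print(score)  # -2.0
for name, contribution in reasons:
    print(f"{name}: {contribution:+.1f}")
```

Here the ranked list tells a loan officer, in plain terms, that late payments drove the low score more than anything else.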

Scaling AI technology is pivotal for organizations aiming to capitalize on its potential across various business units. However, scaling AI infrastructure is fraught with complexity.

According to Accenture, 75% of business leaders feel that they will be out of business in five years if they cannot figure out how to scale AI.

Despite the potential for a high return on investment, many companies find it difficult to move beyond pilot projects into full-scale deployment.

Zillow’s home-flipping fiasco is a stark reminder of AI scalability challenges. Its algorithm, which predicted house prices so the company could buy and resell homes at a profit, had error rates of up to 6.9%, contributing to severe financial losses and a $304 million inventory write-down.

The scalability challenge is most apparent outside tech giants like Google and Amazon, which have the resources to leverage AI effectively. For most other organizations, especially non-tech companies just beginning to explore AI, the barriers include a lack of infrastructure, computing power, expertise, and a clear implementation strategy.

Generative AI and deepfake technologies are transforming the fraud landscape, especially in the financial services sector. They make it easier and cheaper to create convincing fakes.

For example, in January 2024, a deepfake impersonating a CFO instructed an employee to transfer $25 million, showcasing the severe implications of such technologies.

This rising trend highlights the challenges banks face as they struggle to adapt their data management and fraud detection systems to increasingly sophisticated scams that deceive not only individuals but also machine-based security systems.

The potential for such fraud is expanding rapidly, with projections suggesting that generative AI could push related financial losses in the U.S. to as high as $40 billion by 2027, a significant leap from $12.3 billion in 2023.
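To put that leap in perspective, here is a rough calculation, not a figure from the forecast itself. Using only the two numbers above, and assuming the increase compounds evenly over the four years from 2023 to 2027, the implied compound annual growth rate is:

$$
\text{CAGR} = \left(\frac{40}{12.3}\right)^{1/4} - 1 \approx 3.25^{1/4} - 1 \approx 0.34
$$

In other words, losses would need to grow by roughly a third every year to reach that projection.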

When different organizations or countries use AI together, they must ensure that AI behaves ethically according to everyone’s rules. This is called ethical interoperability, and it’s especially important in areas like defense and security.

Today, governments and organizations each have their own rules and ethical principles. For instance, Microsoft has published its own Guidelines for Human-AI Interaction.

However, these rules and principles are not standardized across the globe.

As a result, AI systems come with their own ethical rules, which may be acceptable in one place but problematic in another. When these systems interact with humans and don’t behave as expected, the result can be misunderstanding or mistrust.

Artificial Intelligence (AI) is zooming into almost every part of our lives, from self-driving cars to virtual assistants, and it’s brilliant! But here’s the catch: how we use AI can stir up serious ethical headaches, with thorny issues around privacy, bias, job displacement, and more.

With AI being able to do tasks humans used to do, there’s a whole debate about whether it should even be doing some of them.

For example, should AI write movie scripts? Sounds cool, but it sparked a massive stir in the entertainment world with strikes across the USA and Europe. And it’s not just about what jobs AI can take over; it’s also about how it uses our data, makes decisions, and sometimes even gets things wrong. This has everyone from tech builders to legal eagles hustling to figure out how to handle AI responsibly.

When developing machine learning models, it can be challenging to split data correctly into training, validation, and test sets. The training set teaches the model, the validation set is used to tune it, and the test set evaluates its final performance.

Mismanaging these splits can lead to models that either underfit, performing poorly across the board, or overfit, performing well on training data but poorly on new, unseen data. Worse, if test examples leak into training or tuning, the evaluation itself overstates how well the model will perform.

This misstep can severely hamper the model’s ability to function effectively in real-world AI applications, where adaptability and accuracy on unseen data are key.
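A minimal sketch of a clean three-way split, assuming a 70/15/15 ratio and plain Python with no ML library (the function name and default fractions are illustrative choices, not a standard). The key property is that the three sets are disjoint, so nothing the model was trained or tuned on is reused to score it.

```python
import random


def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, then carve the data into disjoint train/validation/test sets.

    - train: used to fit the model's parameters
    - validation: used to tune hyperparameters and choose between models
    - test: touched only once, at the end, to estimate real-world performance
    """
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG with fixed seed -> reproducible split
    n = len(shuffled)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]  # everything left over goes to training
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Random shuffling suits data whose examples are independent. For time-ordered data, such as prices or transactions, splitting chronologically is usually safer; otherwise information from the future leaks into training.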

When AI makes decisions, things can get tricky, especially in critical areas like healthcare and banking. One big problem is that we can’t always see how AI systems come up with their decisions.

This can lead to unfair decisions that nobody can explain. These systems are also targets for hackers who, if they get in, could steal large amounts of sensitive data.

Right now, there isn’t a single global watchdog for AI; regulation varies by country and even by sector. For example, there’s no central body specifically for AI in the US.

What we see today is a patchwork of AI governance and regulations enforced by different agencies based on their domain—like consumer protection or data privacy.

This decentralized approach can lead to inconsistencies and confusion; different standards may apply depending on where and how AI is deployed. This makes it challenging for AI developers and users to ensure they’re fully compliant across all jurisdictions.

Imagine having technology that can think like a human. That’s the promise of Artificial General Intelligence (AGI), but it comes with big risks. Misinformation is one of the main issues here.

With AGI, one can easily create fake news or convincing false information, making it harder for everyone to figure out what’s true and what’s not.

Plus, if AGI makes decisions based on this false info, it can lead to disastrous outcomes, affecting everything from politics to personal lives.

When you’re knee-deep in AI, picking the right tools isn’t just a nice-to-have; it’s a must to ensure your AI journey doesn’t turn disastrous. It’s about simplifying the complex, securing your data, and getting the support you need to solve AI challenges without breaking the bank.

The key is to choose tailored AI software that enhances productivity while also safeguarding your privacy and the security of your data.

Enter ClickUp Brain, the Swiss Army knife for AI in your workplace.

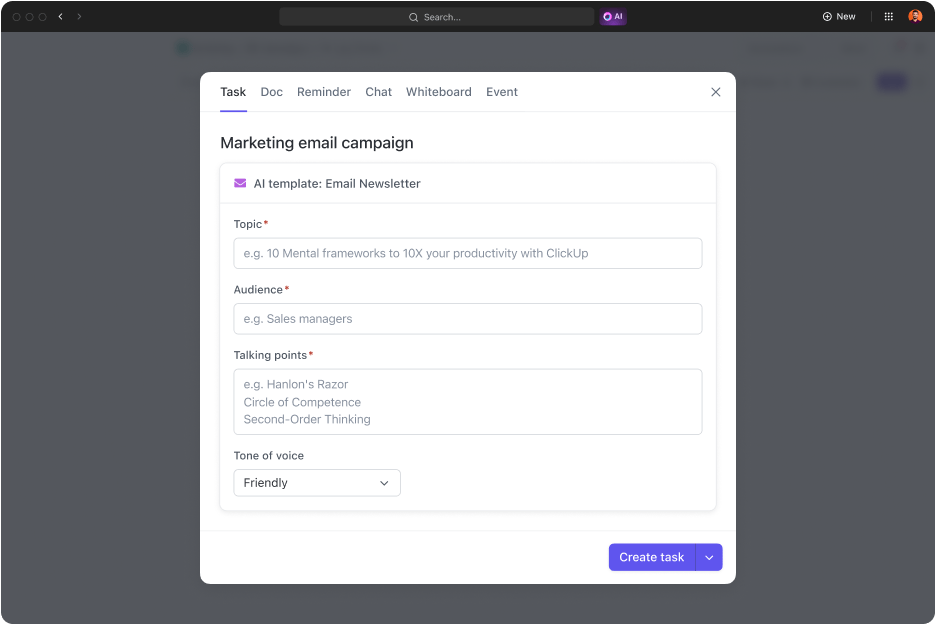

ClickUp Brain is designed to handle everything AI-related, from managing your projects and documents to enhancing team communication. With its AI capabilities, you can tackle data-related challenges, improve project management, and boost productivity, all while keeping things simple and secure.

ClickUp Brain integrates intelligently into your workflow to save you time and effort while also safeguarding your data. Like the rest of the ClickUp platform, it is GDPR compliant and doesn’t use your data for training.

Here’s how it works:

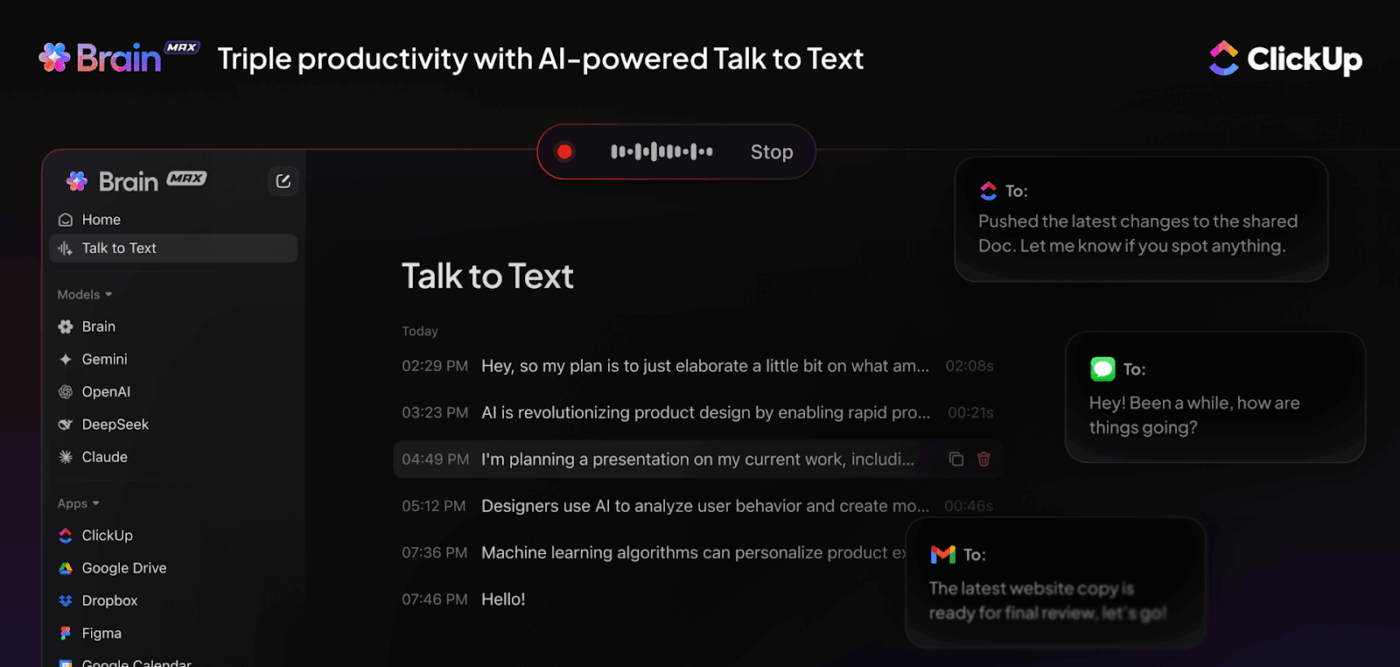

With ClickUp Brain MAX, you get more than built-in intelligence: you also get fast capture in the flow of work. Its Talk to Text feature lets you dictate ideas, tasks, or notes in real time, turning speech into structured work instantly. Whether you’re capturing feedback during proofing or logging action items on the go, you never lose a thought.

Despite the AI challenges we’ve discussed, we can agree that artificial intelligence has come a long way. It has evolved from basic automation to sophisticated systems that can learn, adapt, and predict outcomes. Many of us have now integrated AI into various aspects of our lives, from virtual assistants to advanced data collection and analysis tools.

As AI advances, we can expect even more innovations, AI hacks, and AI tools to enhance productivity, improve decision-making, and revolutionize industries. This progress opens up new possibilities, driving us toward a future where AI plays a crucial role in both personal and professional spheres.

With AI tools like ClickUp Brain, you can make the most of AI technologies while protecting yourself against the privacy and data security risks they pose. ClickUp is your go-to AI-powered task management tool for everything from software projects to marketing. Choose ClickUp to securely transform your organization into a data- and AI-powered business while you grow team productivity.

Ready to transform your workflows with AI? Sign up for ClickUp now!

© 2025 ClickUp