Mastering the AI Robots TXT Generator

Defining the AI Robots TXT Generator

An AI Robots TXT Generator automates the creation and maintenance of robots.txt files, which tell search engine crawlers which parts of a site they may crawl and thereby shape what ends up indexed.

Traditionally, creating robots.txt involved manual coding or static file uploads, often leading to errors and outdated directives.

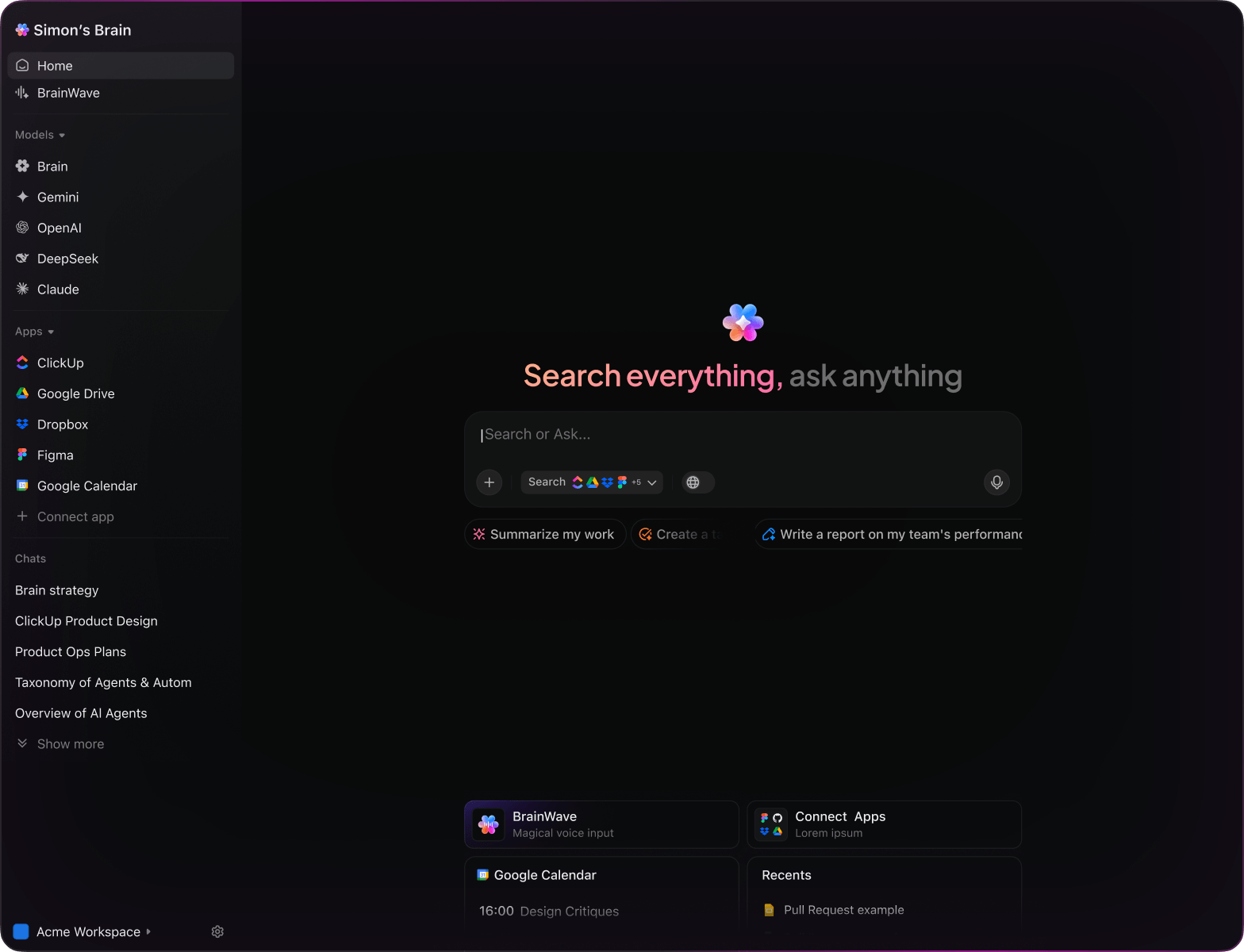

AI changes this by interpreting natural language commands and generating customized robots.txt files from them. Integrated with ClickUp Brain, these files become part of your SEO workflow: living documents that evolve alongside your content strategy and site architecture.

Why ClickUp Brain Stands Out in Robots TXT Generation

Conventional Robots TXT Tools

- Manual edits: Require coding knowledge or a text editor and are prone to syntax mistakes.

- Static files: Updates need manual uploads to servers, risking outdated rules.

- Limited integration: Separate from project management, causing workflow gaps.

- Minimal collaboration: Hard to track changes or review directives across teams.

- Basic logic: Lacks contextual understanding of site structure or SEO goals.

ClickUp Brain & AI Robots TXT Generator

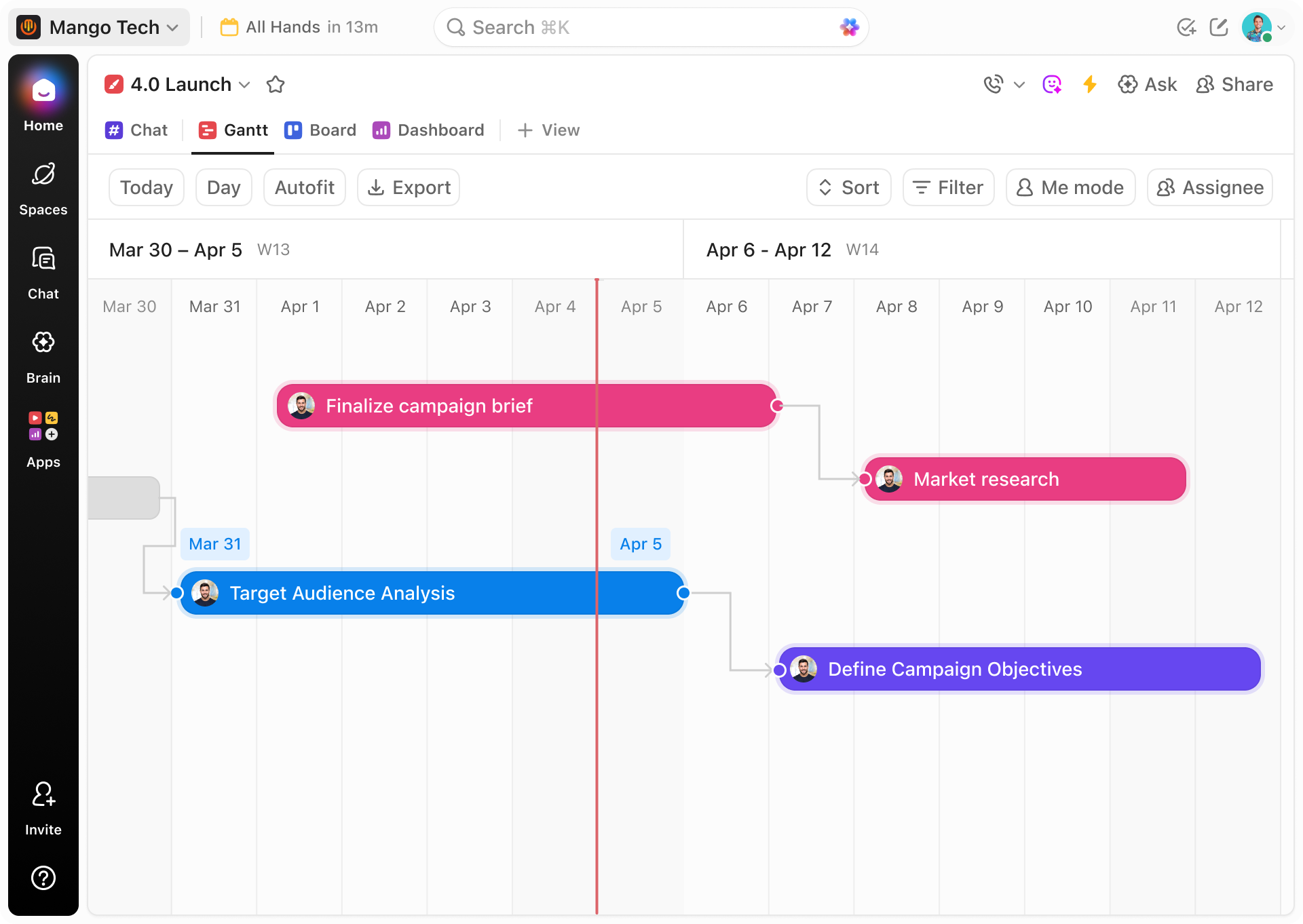

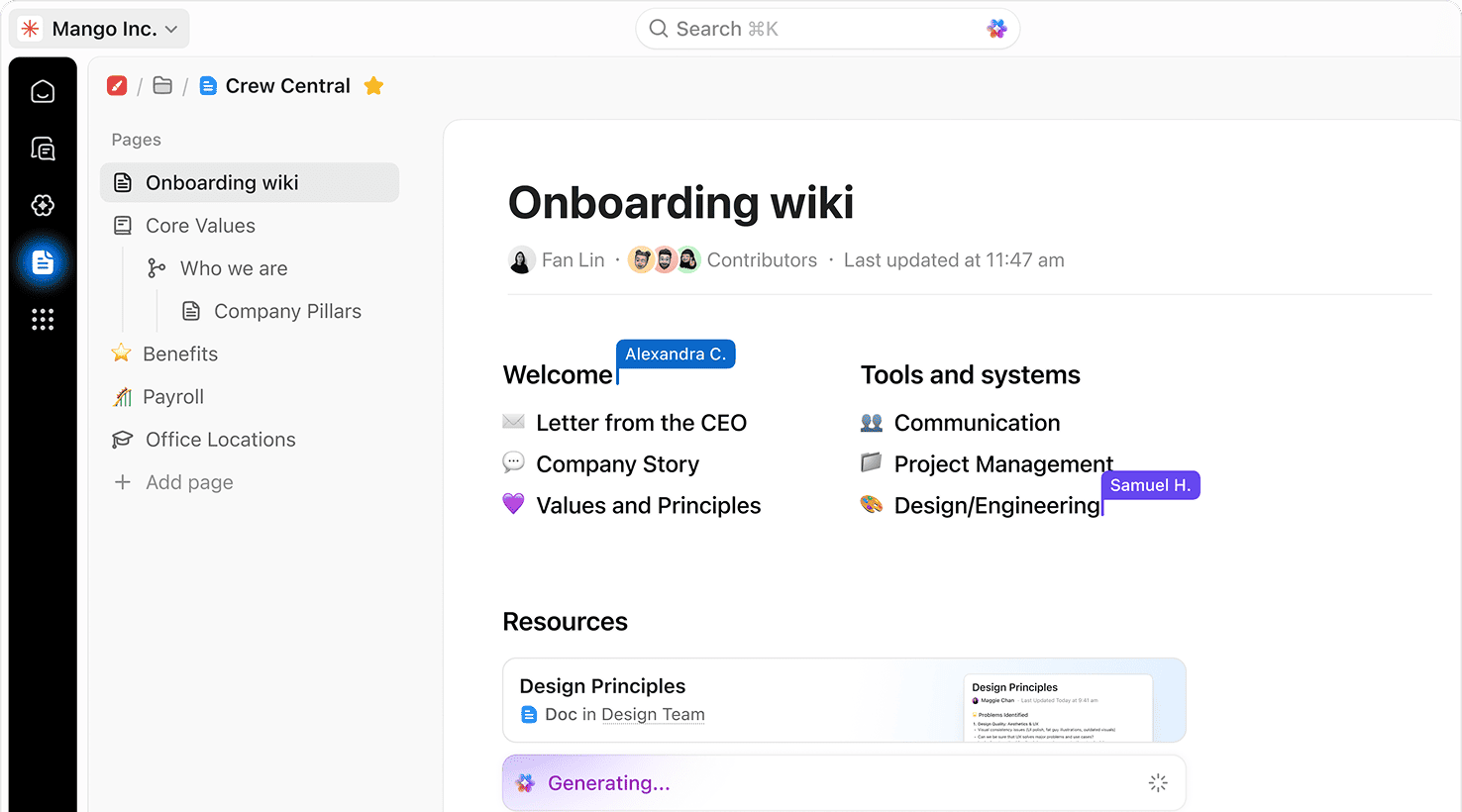

- Embedded in your workflow: Create and update robots.txt within ClickUp alongside SEO projects.

- Natural language input: Generate directives by simply describing crawler rules.

- Real-time collaboration: Teams co-create and review files with version control.

- Dynamic updates: Files adjust automatically as site structure or SEO priorities change.

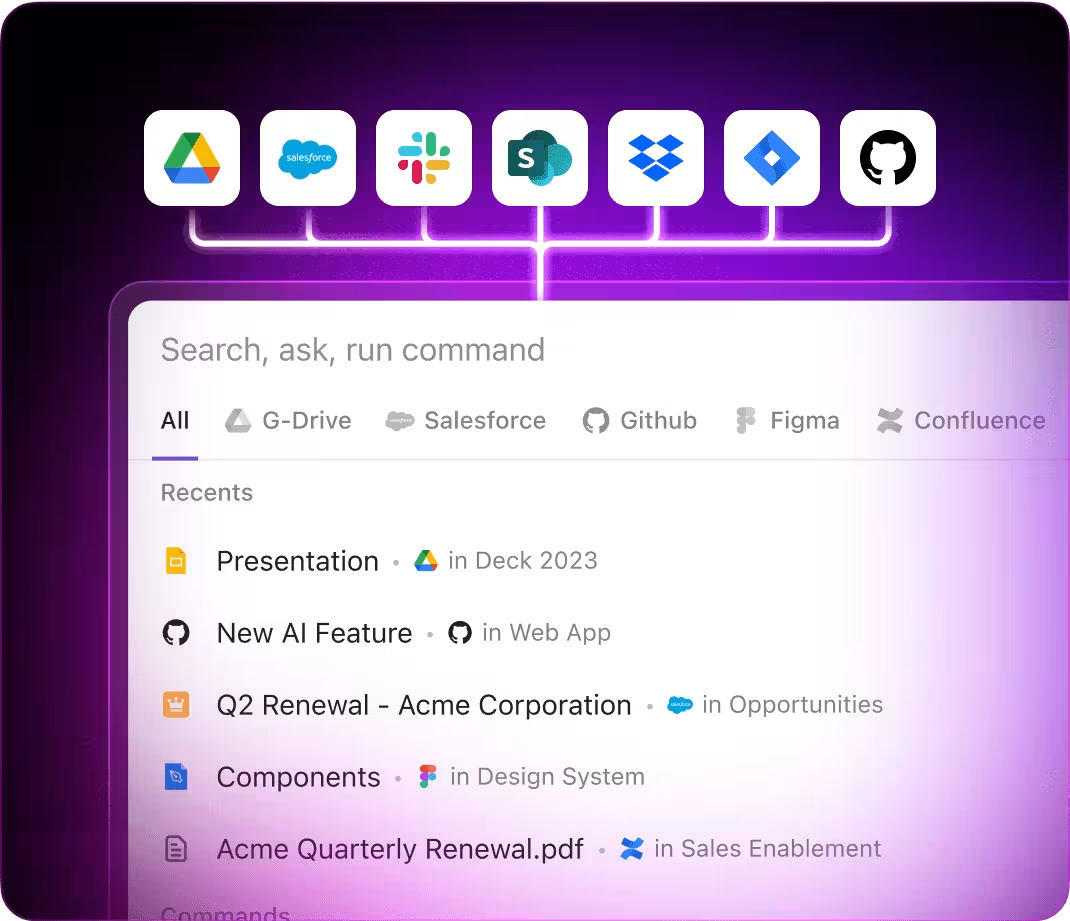

- Brain Max capabilities: Customize complex rules and integrate data sources for precise crawler management.

How to Generate an AI Robots TXT File

1. Gather Site Structure and SEO Goals

Traditional approach: Manually compile directory paths and crawling preferences.

With ClickUp Brain:

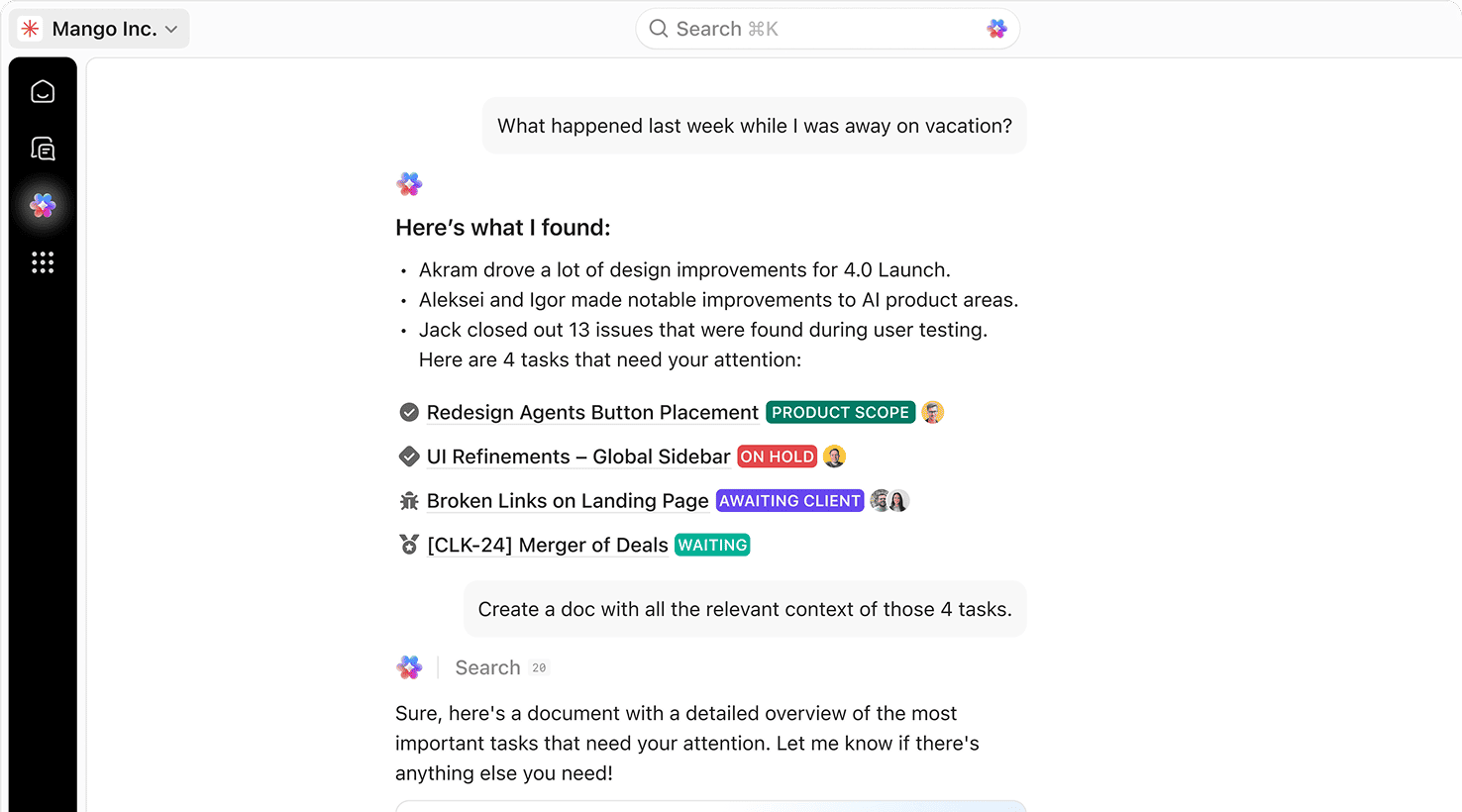

Automatically sync your website’s sitemap, SEO tasks, and indexing priorities to inform the AI’s directive generation.
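To make the idea of gathering site structure concrete, here is a minimal Python sketch that summarizes a sitemap into top-level sections. It is not how ClickUp Brain works internally; the function name `top_level_sections`, the `EXAMPLE_SITEMAP` data, and the example.com URLs are all assumptions made for illustration.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def top_level_sections(sitemap_xml: str) -> dict[str, int]:
    """Count sitemap URLs under each top-level path segment (hypothetical helper)."""
    root = ET.fromstring(sitemap_xml)
    counts: dict[str, int] = {}
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        path = urlparse((loc.text or "").strip()).path
        segments = [s for s in path.split("/") if s]
        # Pages that sit directly under the site root are grouped as "/".
        section = f"/{segments[0]}/" if len(segments) > 1 else "/"
        counts[section] = counts.get(section, 0) + 1
    return counts


# Illustrative sitemap; the URLs are made up.
EXAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/blog/post-1</loc></url>
  <url><loc>https://example.com/blog/post-2</loc></url>
  <url><loc>https://example.com/private/report.html</loc></url>
</urlset>"""

print(top_level_sections(EXAMPLE_SITEMAP))
```

A summary like this gives a quick list of the directories that crawl rules might need to cover before any directives are written.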

2. Define Crawler Access Using Natural Language

Traditional approach: Write disallow or allow rules in raw text format.

With ClickUp Brain:

Input commands like “Block all search engines from the /private folder” or “Allow Googlebot full access.” The AI interprets these and formats them as valid robots.txt rules.
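The two example commands above correspond to a small set of robots.txt groups. The Python sketch below shows one way such rules could be represented and rendered once a command has been interpreted; the `RuleGroup` class and `render_robots_txt` function are hypothetical names, and the natural language interpretation step itself is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class RuleGroup:
    """One robots.txt group: a user agent plus its path rules (hypothetical structure)."""
    user_agent: str
    disallow: list[str] = field(default_factory=list)
    allow: list[str] = field(default_factory=list)


def render_robots_txt(groups: list[RuleGroup]) -> str:
    """Render rule groups as robots.txt text, one blank line between groups."""
    blocks = []
    for group in groups:
        lines = [f"User-agent: {group.user_agent}"]
        lines += [f"Allow: {path}" for path in group.allow]
        lines += [f"Disallow: {path}" for path in group.disallow]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


# "Block all search engines from the /private folder" and
# "Allow Googlebot full access", expressed as structured rules.
groups = [
    RuleGroup(user_agent="*", disallow=["/private/"]),
    RuleGroup(user_agent="Googlebot", allow=["/"]),
]

ROBOTS_TXT = render_robots_txt(groups)
print(ROBOTS_TXT)
```

One point worth checking in any generated file: under the Robots Exclusion Protocol (RFC 9309), a crawler follows only the group that most specifically matches its name. With the rules above, Googlebot would also be allowed to crawl /private/. If that is not the intent, the Googlebot group needs its own Disallow line.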

3. Customize Rules with Advanced Logic

Traditional approach: Manually code exceptions and user-agent-specific instructions.

With ClickUp Brain:

Leverage Brain Max to blend data from analytics and SEO tools, creating tailored access rules that reflect real user behavior and crawler needs.

4. Deploy and Monitor Robots TXT File

Traditional approach: Upload static files via FTP or CMS, then manually track crawler activity.

With ClickUp Brain:

Publish directly from ClickUp and receive alerts on crawler errors or indexing issues, enabling proactive SEO adjustments.
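Whatever tool publishes the file, it can help to check the rules against a few expected outcomes before deployment. The sketch below uses Python's standard `urllib.robotparser` for this; the helper `find_mismatches`, the `ExampleBot` crawler name, and the example.com URLs are assumptions for illustration and are unrelated to ClickUp's own alerting.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, expectations):
    """Return (agent, url, expected, actual) for every expectation the rules violate."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for agent, url, expected in expectations:
        actual = parser.can_fetch(agent, url)
        if actual != expected:
            mismatches.append((agent, url, expected, actual))
    return mismatches


# Illustrative robots.txt content to verify.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Allow: /
"""

# Each entry: (crawler name, URL, whether crawling should be allowed).
EXPECTATIONS = [
    ("ExampleBot", "https://example.com/private/report.html", False),
    ("ExampleBot", "https://example.com/blog/post-1", True),
    ("Googlebot", "https://example.com/private/report.html", True),
]

print(find_mismatches(ROBOTS_TXT, EXPECTATIONS))
```

An empty list means every expectation holds. Note that `urllib.robotparser` applies the first matching rule in file order, while some crawlers use longest-match precedence, so results for overlapping Allow and Disallow paths may differ from a given search engine's behavior.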

3 Key Applications of AI Robots TXT Generator

Accelerate SEO Audits and Compliance

Manage Large-Scale Website Changes

Simplify Multi-User Collaboration on Crawl Policies